+

{

{messagesInMetricData.data && (

<>

-

+

{

@@ -354,14 +382,25 @@ const DetailChart = (props: { children: JSX.Element }): JSX.Element => {

-

- {metricDataList.length ? (

- metricDataList.map((data: any, i: number) => {

- const { metricName, metricUnit, metricLines } = data;

- return (

-

-

-

+ {metricDataList.length ? (

+

+

+ {metricDataList.map((data: any, i: number) => {

+ const { metricName, metricUnit, metricLines } = data;

+ return (

+

+

{

@@ -379,15 +418,6 @@ const DetailChart = (props: { children: JSX.Element }): JSX.Element => {

-

{

- setChartDetail(data);

- setShowChartDetailModal(true);

- }}

- >

-

-

{

})}

/>

+

+ ) : chartLoading ? (

+ <>

+ ) : (

+

+ )}

{props.children}

-

- {/* Chart details */}

- setShowChartDetailModal(false)}

- >

-

-

setShowChartDetailModal(false)}>

-

-

- {chartDetail && (

-

- )}

-

);

};
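The hunk above replaces the plain chart list with a three-way branch (`metricDataList.length ? … : chartLoading ? … : …`), but the JSX inside each branch was lost in this view. This minimal TypeScript sketch captures only the branch decision. `chartPanelState` and `ChartPanelState` are hypothetical names and are not part of the codebase:

```typescript
// Hypothetical helper (not in KnowStreaming) mirroring the nested ternary in
// DetailChart's render: any metric data wins; otherwise the loading flag decides.
type ChartPanelState = 'charts' | 'loading' | 'empty';

function chartPanelState(metricCount: number, chartLoading: boolean): ChartPanelState {
  // `metricDataList.length ? ...` is truthy for any non-zero length
  if (metricCount > 0) {
    return 'charts';
  }
  // `: chartLoading ? <loading placeholder> : <empty state>`
  return chartLoading ? 'loading' : 'empty';
}
```

The length check comes first, so charts that are already loaded stay visible during a refresh instead of being replaced by the loading placeholder.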

diff --git a/km-console/packages/layout-clusters-fe/src/pages/SingleClusterDetail/LeftSider.tsx b/km-console/packages/layout-clusters-fe/src/pages/SingleClusterDetail/LeftSider.tsx

index 5a428b5b..bd5989d9 100644

--- a/km-console/packages/layout-clusters-fe/src/pages/SingleClusterDetail/LeftSider.tsx

+++ b/km-console/packages/layout-clusters-fe/src/pages/SingleClusterDetail/LeftSider.tsx

@@ -1,4 +1,5 @@

-import { AppContainer, Divider, IconFont, Progress, Tooltip, Utils } from 'knowdesign';

+import { AppContainer, Divider, Progress, Tooltip, Utils } from 'knowdesign';

+import { IconFont } from '@knowdesign/icons';

import React, { useEffect, useState } from 'react';

import AccessClusters from '../MutliClusterPage/AccessCluster';

import './index.less';

diff --git a/km-console/packages/layout-clusters-fe/src/pages/SingleClusterDetail/config.tsx b/km-console/packages/layout-clusters-fe/src/pages/SingleClusterDetail/config.tsx

index b2d9eb0c..6ab6e317 100644

--- a/km-console/packages/layout-clusters-fe/src/pages/SingleClusterDetail/config.tsx

+++ b/km-console/packages/layout-clusters-fe/src/pages/SingleClusterDetail/config.tsx

@@ -2,7 +2,8 @@ import moment from 'moment';

import React from 'react';

import { timeFormat } from '../../constants/common';

import TagsWithHide from '../../components/TagsWithHide/index';

-import { Form, IconFont, InputNumber, Tooltip } from 'knowdesign';

+import { Form, InputNumber, Tooltip } from 'knowdesign';

+import { IconFont } from '@knowdesign/icons';

import { Link } from 'react-router-dom';

import { systemKey } from '../../constants/menu';

diff --git a/km-console/packages/layout-clusters-fe/src/pages/SingleClusterDetail/index.less b/km-console/packages/layout-clusters-fe/src/pages/SingleClusterDetail/index.less

index 7baae7b8..3fa013de 100644

--- a/km-console/packages/layout-clusters-fe/src/pages/SingleClusterDetail/index.less

+++ b/km-console/packages/layout-clusters-fe/src/pages/SingleClusterDetail/index.less

@@ -231,9 +231,10 @@

}

.chart-panel {

- flex: 1;

+ flex: auto;

margin-left: 12px;

margin-right: 10px;

+ overflow: hidden;

}

.change-log-panel {

diff --git a/km-console/packages/layout-clusters-fe/src/pages/SingleClusterDetail/index.tsx b/km-console/packages/layout-clusters-fe/src/pages/SingleClusterDetail/index.tsx

index e4b4b2c6..59e679b3 100644

--- a/km-console/packages/layout-clusters-fe/src/pages/SingleClusterDetail/index.tsx

+++ b/km-console/packages/layout-clusters-fe/src/pages/SingleClusterDetail/index.tsx

@@ -21,11 +21,9 @@ const SingleClusterDetail = (): JSX.Element => {

diff --git a/km-console/packages/layout-clusters-fe/src/pages/TestingConsumer/Consume.tsx b/km-console/packages/layout-clusters-fe/src/pages/TestingConsumer/Consume.tsx

index d74d723f..be43d454 100644

--- a/km-console/packages/layout-clusters-fe/src/pages/TestingConsumer/Consume.tsx

+++ b/km-console/packages/layout-clusters-fe/src/pages/TestingConsumer/Consume.tsx

@@ -1,6 +1,7 @@

/* eslint-disable no-case-declarations */

import { DownloadOutlined } from '@ant-design/icons';

-import { AppContainer, Divider, IconFont, message, Tooltip, Utils } from 'knowdesign';

+import { AppContainer, Divider, message, Tooltip, Utils } from 'knowdesign';

+import { IconFont } from '@knowdesign/icons';

import * as React from 'react';

import moment from 'moment';

import { timeFormat } from '../../constants/common';

diff --git a/km-console/packages/layout-clusters-fe/src/pages/TestingConsumer/config.tsx b/km-console/packages/layout-clusters-fe/src/pages/TestingConsumer/config.tsx

index d27f8d4e..fc529e9d 100644

--- a/km-console/packages/layout-clusters-fe/src/pages/TestingConsumer/config.tsx

+++ b/km-console/packages/layout-clusters-fe/src/pages/TestingConsumer/config.tsx

@@ -268,6 +268,7 @@ export const getFormConfig = (topicMetaData: any, info = {} as any, partitionLis

type: FormItemType.inputNumber,

attrs: {

min: 1,

+ max: 1000,

},

invisible: !info?.needMsgNum,

rules: [

diff --git a/km-console/packages/layout-clusters-fe/src/pages/TestingProduce/component/EditTable.tsx b/km-console/packages/layout-clusters-fe/src/pages/TestingProduce/component/EditTable.tsx

index 49e2568d..a5300af2 100644

--- a/km-console/packages/layout-clusters-fe/src/pages/TestingProduce/component/EditTable.tsx

+++ b/km-console/packages/layout-clusters-fe/src/pages/TestingProduce/component/EditTable.tsx

@@ -1,6 +1,7 @@

/* eslint-disable react/display-name */

import React, { useState } from 'react';

-import { Table, Input, InputNumber, Popconfirm, Form, Typography, Button, message, IconFont, Select } from 'knowdesign';

+import { Table, Input, InputNumber, Popconfirm, Form, Typography, Button, message, Select } from 'knowdesign';

+import { IconFont } from '@knowdesign/icons';

import './style/edit-table.less';

import { CheckOutlined, CloseOutlined, PlusSquareOutlined } from '@ant-design/icons';

diff --git a/km-console/packages/layout-clusters-fe/src/pages/TestingProduce/config.tsx b/km-console/packages/layout-clusters-fe/src/pages/TestingProduce/config.tsx

index 81503271..3e34ad67 100644

--- a/km-console/packages/layout-clusters-fe/src/pages/TestingProduce/config.tsx

+++ b/km-console/packages/layout-clusters-fe/src/pages/TestingProduce/config.tsx

@@ -1,5 +1,6 @@

import { QuestionCircleOutlined } from '@ant-design/icons';

-import { IconFont, Switch, Tooltip } from 'knowdesign';

+import { Switch, Tooltip } from 'knowdesign';

+import { IconFont } from '@knowdesign/icons';

import { FormItemType, IFormItem } from 'knowdesign/es/extend/x-form';

import moment from 'moment';

import React from 'react';

@@ -152,6 +153,7 @@ export const getFormConfig = (params: any) => {

rules: [{ required: true, message: '请输入' }],

attrs: {

min: 0,

+ max: 1000,

style: { width: 232 },

},

},

@@ -391,7 +393,7 @@ export const getTableColumns = () => {

{

title: 'time',

dataIndex: 'costTimeUnitMs',

- width: 60,

+ width: 100,

},

];

};

diff --git a/km-console/packages/layout-clusters-fe/src/pages/TopicDetail/BrokersDetail.tsx b/km-console/packages/layout-clusters-fe/src/pages/TopicDetail/BrokersDetail.tsx

index 1c40cd66..9b3a12a0 100644

--- a/km-console/packages/layout-clusters-fe/src/pages/TopicDetail/BrokersDetail.tsx

+++ b/km-console/packages/layout-clusters-fe/src/pages/TopicDetail/BrokersDetail.tsx

@@ -1,6 +1,7 @@

import React, { useCallback } from 'react';

import { useEffect, useState } from 'react';

-import { AppContainer, Button, Empty, IconFont, List, Popover, ProTable, Radio, Spin, Utils } from 'knowdesign';

+import { AppContainer, Button, Empty, List, Popover, ProTable, Radio, Spin, Utils } from 'knowdesign';

+import { IconFont } from '@knowdesign/icons';

import { CloseOutlined } from '@ant-design/icons';

import api, { MetricType } from '@src/api';

import { useParams } from 'react-router-dom';

diff --git a/km-console/packages/layout-clusters-fe/src/pages/TopicDetail/ConfigurationEdit.tsx b/km-console/packages/layout-clusters-fe/src/pages/TopicDetail/ConfigurationEdit.tsx

index a2638384..7fc4ab80 100644

--- a/km-console/packages/layout-clusters-fe/src/pages/TopicDetail/ConfigurationEdit.tsx

+++ b/km-console/packages/layout-clusters-fe/src/pages/TopicDetail/ConfigurationEdit.tsx

@@ -1,5 +1,6 @@

import React from 'react';

-import { Drawer, Form, Input, Space, Button, Utils, Row, Col, IconFont, Divider, message } from 'knowdesign';

+import { Drawer, Form, Input, Space, Button, Utils, Row, Col, Divider, message } from 'knowdesign';

+import { IconFont } from '@knowdesign/icons';

import { useParams } from 'react-router-dom';

import Api from '@src/api';

export const ConfigurationEdit = (props: any) => {

diff --git a/km-console/packages/layout-clusters-fe/src/pages/TopicDetail/Messages.tsx b/km-console/packages/layout-clusters-fe/src/pages/TopicDetail/Messages.tsx

index eecf792a..450cb59b 100644

--- a/km-console/packages/layout-clusters-fe/src/pages/TopicDetail/Messages.tsx

+++ b/km-console/packages/layout-clusters-fe/src/pages/TopicDetail/Messages.tsx

@@ -1,5 +1,6 @@

import React, { useState, useEffect } from 'react';

-import { Alert, Button, Checkbox, Form, IconFont, Input, ProTable, Select, Tooltip, Utils } from 'knowdesign';

+import { Alert, Button, Checkbox, Form, Input, ProTable, Select, Tooltip, Utils } from 'knowdesign';

+import { IconFont } from '@knowdesign/icons';

import Api from '@src/api';

import { useParams, useHistory } from 'react-router-dom';

import { getTopicMessagesColmns } from './config';

@@ -10,7 +11,7 @@ const defaultParams: any = {

maxRecords: 100,

pullTimeoutUnitMs: 5000,

// filterPartitionId: 1,

- filterOffsetReset: 0

+ filterOffsetReset: 0,

};

const defaultpaPagination = {

current: 1,

@@ -32,8 +33,8 @@ const TopicMessages = (props: any) => {

// Start positions for fetching messages

const offsetResetList = [

- { 'label': 'latest', value: 0 },

- { 'label': 'earliest', value: 1 }

+ { label: 'latest', value: 0 },

+ { label: 'earliest', value: 1 },

];

// Default sort order

@@ -99,10 +100,10 @@ const TopicMessages = (props: any) => {

const onTableChange = (pagination: any, filters: any, sorter: any, extra: any) => {

setPagination(pagination);

// Re-fetch data from the backend only on sort events

- if(extra.action === 'sort') {

+ if (extra.action === 'sort') {

setSorter({

sortField: sorter.field || '',

- sortType: sorter.order ? sorter.order.substring(0, sorter.order.indexOf('end')) : ''

+ sortType: sorter.order ? sorter.order.substring(0, sorter.order.indexOf('end')) : '',

});

}

// const asc = sorter?.order && sorter?.order === 'ascend' ? true : false;
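In `onTableChange`, the table reports its sort order as `'ascend'` or `'descend'` (the Ant Design convention). The code cuts that value at the `'end'` suffix before storing it as `sortType`. The sketch below isolates the conversion. `toSortType` and `toSorterParams` are hypothetical names, and the claim that the backend expects `'asc'`/`'desc'` is inferred from this code rather than stated in the diff:

```typescript
// Sort orders as reported by Ant Design-style tables; null/undefined means "no sort".
type SortOrder = 'ascend' | 'descend' | null | undefined;

// Hypothetical helper isolating the conversion used in onTableChange.
function toSortType(order: SortOrder): string {
  if (!order) {
    return ''; // sort cleared: send an empty sortType, as the diff does
  }
  // 'ascend'.indexOf('end') === 3 -> 'asc'; 'descend'.indexOf('end') === 4 -> 'desc'
  return order.substring(0, order.indexOf('end'));
}

// Hypothetical helper building the object passed to setSorter.
function toSorterParams(sorter: { field?: string; order?: SortOrder }): { sortField: string; sortType: string } {
  return {
    sortField: sorter.field || '',
    sortType: toSortType(sorter.order),
  };
}
```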

@@ -137,11 +138,11 @@ const TopicMessages = (props: any) => {

## Star History

diff --git a/Releases_Notes.md b/Releases_Notes.md

index 616d1eab..f453b582 100644

--- a/Releases_Notes.md

+++ b/Releases_Notes.md

@@ -1,5 +1,66 @@

+## v3.0.0

+

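+**Bug fixes**

+- Fixed the duplicate-collection guard for Group metrics not taking effect

+- Fixed failure to automatically create ES index templates

+- Fixed deleted Topics still appearing in the Group+Topic list

+- Fixed task creation failing on MySQL 8 when start_time is NULL, due to a compatibility issue

+- Fixed deadlocks when updating the Group info table

+- Fixed the chart gap-filling logic not matching the chart time range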

+

+

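+**Improvements**

+- Split health-check tasks by resource type

+- Group detail page metrics are now fetched in real time

+- Drag-and-drop chart ordering is now saved per user

+- ZK info in the multi-cluster list now handles clusters without ZK

+- Message preview in Topic details now supports copying

+- Large numbers are now displayed with thousands separators in some places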

+

+

+**New features**

+- Added a Zookeeper client configuration field to cluster info

+- Added a Kafka cluster run-mode field to cluster info

+- Added docker-compose as a deployment option

+

+

+

+## v3.0.0-beta.3

+

+**Documentation**

+- Added an FAQ entry on permission recognition failures

+- Synced the documentation with the official website

+

+

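+**Bug fixes**

+- Partitions without a Leader are now filtered out when fetching Offset info

+- Upgraded oshi-core to 5.6.1, fixing failure to collect system metrics on Windows

+- Fixed JMX connections not being re-established after being closed

+- Fixed a null pointer exception when fetching the TotalLogSize metric if Broker info is missing from the DB

+- Fixed incorrect SQL comments in dml-logi.sql

+- Fixed incorrect OS type detection in startup.sh

+- Fixed failure to delete configurations on the configuration management page

+- Fixed file reference paths in the system management app

+- Fixed the hint link in Topic Messages details leading to a 404 page

+- Fixed the current replica count not being displayed when increasing replicas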

+

+

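+**Improvements**

+- Topic-Messages page: added sorting of returned data and fetching by Earliest/Latest

+- Renamed GroupOffsetResetEnum to OffsetTypeEnum so the class name is more accurate

+- Moved the KafkaZKDAO class and the Kafka Znode entity classes so the Kafka Zookeeper DAO is more cohesive and easier to find

+- Added backend support for sorting metrics on the Overview page

+- Optimized the front-end Webpack configuration

+- Removed the enlarged view from Cluster Overview charts

+- Added manual refresh to list pages

+- Connect/edit cluster: improved pre-filling of JMX-PORT and Version, and improved the display of JMX info

+- Improved image sharpness on the login page

+- Minor style and copy improvements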

+

+---

+

## v3.0.0-beta.2

**Documentation**

diff --git a/docs/install_guide/单机部署手册.md b/docs/install_guide/单机部署手册.md

index f9f5ad1a..c42e6318 100644

--- a/docs/install_guide/单机部署手册.md

+++ b/docs/install_guide/单机部署手册.md

@@ -59,6 +59,8 @@ sh deploy_KnowStreaming-offline.sh

### 2.1.3 Container Deployment

+#### 2.1.3.1 Helm

+

**Prerequisites**

- Kubernetes >= 1.14, Helm >= 2.17.0

@@ -72,11 +74,11 @@ sh deploy_KnowStreaming-offline.sh

```bash

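# All related images can be downloaded from Docker Hub

# Quick install (replace NAMESPACE with an existing namespace; initialization takes a few minutes after startup, please wait)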

-helm install -n [NAMESPACE] [NAME] http://download.knowstreaming.com/charts/knowstreaming-manager-0.1.3.tgz

+helm install -n [NAMESPACE] [NAME] http://download.knowstreaming.com/charts/knowstreaming-manager-0.1.5.tgz

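# Get the service of the KnowStreaming front-end UI (NodePort by default).

# (http://nodeIP:nodeport, default username/password: admin/admin2022_)

-# Starting from `v3.0.0-beta.2`, the default username/password is `admin` / `admin`;

+# Starting from `v3.0.0-beta.2` (Helm chart package 0.1.4 and later), the default username/password is `admin` / `admin`;

# Add the repository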

helm repo add knowstreaming http://download.knowstreaming.com/charts

@@ -87,6 +89,156 @@ helm pull knowstreaming/knowstreaming-manager

+#### 2.1.3.2 Docker Compose

+**Prerequisites**

+

+- [Docker](https://docs.docker.com/engine/install/)

+- [Docker Compose](https://docs.docker.com/compose/install/)

+

+

+**Installation**

+```bash

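+# Starting from `v3.0.0-beta.2` (Docker image 0.2.0 and later), the default username/password is `admin` / `admin`

+# Find the latest image versions at https://hub.docker.com/u/knowstreaming

+# You can use your own MySQL and ES services instead; just adjust the corresponding configuration

+

+# Copy docker-compose.yml to the target directory, then run the command below to start the services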

+docker-compose up -d

+```

+

+**Verify the installation**

+```shell

+docker-compose ps

+# Check startup: a State of Up means the service started successfully

+        Name                     Command                  State                   Ports

+----------------------------------------------------------------------------------------------------

+elasticsearch-single    /usr/local/bin/docker-entr ...   Up                      9200/tcp, 9300/tcp

+knowstreaming-init      /bin/bash /es_template_cre ...   Up

+knowstreaming-manager   /bin/sh /ks-start.sh             Up                      80/tcp

+knowstreaming-mysql     /entrypoint.sh mysqld            Up (health: starting)   3306/tcp, 33060/tcp

+knowstreaming-ui        /docker-entrypoint.sh ngin ...   Up                      0.0.0.0:80->80/tcp

+

+# After about a minute, knowstreaming-init exits, meaning ES initialization is complete and the UI can be accessed

+        Name                     Command                  State            Ports

+-------------------------------------------------------------------------------------------

+knowstreaming-init      /bin/bash /es_template_cre ...   Exit 0

+knowstreaming-mysql     /entrypoint.sh mysqld            Up (healthy)   3306/tcp, 33060/tcp

+```
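The readiness check described above can be scripted: every long-running service should be `Up`, and `knowstreaming-init` should have exited with code 0. The following TypeScript sketch rests on several assumptions. `isStackReady` is a hypothetical helper. It expects the Compose v1 `docker-compose ps` table shown above; Compose v2's `docker compose ps` uses different columns and status strings such as `Exited (0)`. It also treats `health: starting` as not ready yet.

```typescript
// Hypothetical readiness check for the KnowStreaming docker-compose stack.
// Assumes the Compose v1 `docker-compose ps` table layout shown above.
function isStackReady(psOutput: string): boolean {
  let initFinished = false;
  const lines = psOutput.split('\n');
  for (let i = 0; i < lines.length; i++) {
    const line = lines[i].trim();
    // Skip blank lines, the "Name Command State Ports" header and the dashed separator.
    if (line === '' || /^Name\s/.test(line) || /^-+$/.test(line)) {
      continue;
    }
    const name = line.split(/\s+/)[0];
    if (name === 'knowstreaming-init') {
      // The init container is expected to finish (Exit 0), not to stay Up.
      const exit = line.match(/\bExit (\d+)\b/);
      initFinished = exit !== null && exit[1] === '0';
    } else if (!/\bUp\b/.test(line) || /health: starting|unhealthy/.test(line)) {
      // Every other listed service must be Up and not still starting or unhealthy.
      return false;
    }
  }
  return initFinished;
}
```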

+

+**Access**

+```http request

+http://127.0.0.1:80/

+```

+

+

+**docker-compose.yml**

+```yml

+version: "2"

+services:

+  # * Do not rename the knowstreaming-manager service; the UI refers to it by this name

+  knowstreaming-manager:

+    image: knowstreaming/knowstreaming-manager:latest

+    container_name: knowstreaming-manager

+    privileged: true

+    restart: always

+    depends_on:

+      - elasticsearch-single

+      - knowstreaming-mysql

+    expose:

+      - 80

+    command:

+      - /bin/sh

+      - /ks-start.sh

+    environment:

+      TZ: Asia/Shanghai

+      # MySQL service address

+      SERVER_MYSQL_ADDRESS: knowstreaming-mysql:3306

+      # MySQL database name

+      SERVER_MYSQL_DB: know_streaming

+      # MySQL username

+      SERVER_MYSQL_USER: root

+      # MySQL user password

+      SERVER_MYSQL_PASSWORD: admin2022_

+      # ES service address

+      SERVER_ES_ADDRESS: elasticsearch-single:9200

+      # JVM options for the service

+      JAVA_OPTS: -Xmx1g -Xms1g

+    # Hostnames used in Kafka's ADVERTISED_LISTENERS can be mapped to IPs here

+#    extra_hosts:

+#      - "hostname:x.x.x.x"

+    # Service log path

+#    volumes:

+#      - /ks/manage/log:/logs

+  knowstreaming-ui:

+    image: knowstreaming/knowstreaming-ui:latest

+    container_name: knowstreaming-ui

+    restart: always

+    ports:

+      - '80:80'

+    environment:

+      TZ: Asia/Shanghai

+    depends_on:

+      - knowstreaming-manager

+#    extra_hosts:

+#      - "hostname:x.x.x.x"

+  elasticsearch-single:

+    image: docker.io/library/elasticsearch:7.6.2

+    container_name: elasticsearch-single

+    restart: always

+    expose:

+      - 9200

+      - 9300

+#    ports:

+#      - '9200:9200'

+#      - '9300:9300'

+    environment:

+      TZ: Asia/Shanghai

+      # JVM options for ES

+      ES_JAVA_OPTS: -Xms512m -Xmx512m

+      # Single-node setup; for a multi-node cluster, see https://www.elastic.co/guide/en/elasticsearch/reference/7.6/docker.html#docker-compose-file

+      discovery.type: single-node

+    # Data persistence path

+#    volumes:

+#      - /ks/es/data:/usr/share/elasticsearch/data

+

+  # ES initialization service; uses the same image as the manager

+  # On first start, ES templates and indices must be initialized; afterwards they are created automatically

+  knowstreaming-init:

+    image: knowstreaming/knowstreaming-manager:latest

+    container_name: knowstreaming-init

+    depends_on:

+      - elasticsearch-single

+    command:

+      - /bin/bash

+      - /es_template_create.sh

+    environment:

+      TZ: Asia/Shanghai

+      # ES service address

+      SERVER_ES_ADDRESS: elasticsearch-single:9200

+

+  knowstreaming-mysql:

+    image: knowstreaming/knowstreaming-mysql:latest

+    container_name: knowstreaming-mysql

+    restart: always

+    environment:

+      TZ: Asia/Shanghai

+      # root user password

+      MYSQL_ROOT_PASSWORD: admin2022_

+      # Name of the database created at initialization

+      MYSQL_DATABASE: know_streaming

+      # Allow root to connect from any host (enables remote access)

+      MYSQL_ROOT_HOST: '%'

+    expose:

+      - 3306

+#    ports:

+#      - '3306:3306'

+    # Data persistence path

+#    volumes:

+#      - /ks/mysql/data:/data/mysql

+```

+

+

+

### 2.1.4 Manual Deployment

**Deployment Steps**

diff --git a/docs/install_guide/版本升级手册.md b/docs/install_guide/版本升级手册.md

index 2af3f69a..a75f71fd 100644

--- a/docs/install_guide/版本升级手册.md

+++ b/docs/install_guide/版本升级手册.md

@@ -1,12 +1,28 @@

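## 6.2 Version Upgrade Guide

-Note: To upgrade to a specific version, you must apply every change between your current version and the target version, in order; only then will the system work properly.

+Note:

+- To upgrade to a specific version, you must apply every change between your current version and the target version, in order; only then will the system work properly.

+- If an intermediate version has no upgrade notes, you can upgrade to it from the previous version simply by replacing the installation package.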

+
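Taken together, these two rules mean walking the version list from the release after your current one up to the target and applying each release's changes in order. Releases without notes contribute nothing beyond the package swap. Here is a small TypeScript sketch of that rule. The `VersionStep` shape and the `collectUpgradeSql` helper are hypothetical and are not part of KnowStreaming:

```typescript
// Hypothetical description of one release in the upgrade guide.
interface VersionStep {
  version: string;
  sql: string[]; // SQL changes for this version; empty if only the package is replaced
}

// Collects the SQL to run when upgrading from `current` to `target`, in release order.
// `steps` must be ordered from oldest to newest.
function collectUpgradeSql(steps: VersionStep[], current: string, target: string): string[] {
  const versions = steps.map((s) => s.version);
  const from = versions.indexOf(current);
  const to = versions.indexOf(target);
  if (from === -1 || to === -1 || to < from) {
    throw new Error('Cannot upgrade from ' + current + ' to ' + target);
  }
  const result: string[] = [];
  // Start after `current`: its own changes are already applied.
  for (const step of steps.slice(from + 1, to + 1)) {
    result.push(...step.sql);
  }
  return result;
}
```

With the versions in this guide, for example, upgrading from `v3.0.0-beta.2` to `v3.0.0` collects only the `ks_km_physical_cluster` `ALTER TABLE` listed for `v3.0.0`, because `v3.0.0-beta.3` has no upgrade section.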

### 6.2.0 Upgrading to `master`

None yet.

-### 6.2.1 Upgrading to `v3.0.0-beta.2`

+

+### 6.2.1 Upgrading to `v3.0.0`

+

+**SQL changes**

+

+```sql

+ALTER TABLE `ks_km_physical_cluster`

+ADD COLUMN `zk_properties` TEXT NULL COMMENT 'ZK配置' AFTER `jmx_properties`;

+```

+

+---

+

+

+### 6.2.2 Upgrading to `v3.0.0-beta.2`

**Configuration changes**

@@ -77,7 +93,7 @@ ALTER TABLE `logi_security_oplog`

---

-### 6.2.2 Upgrading to `v3.0.0-beta.1`

+### 6.2.3 Upgrading to `v3.0.0-beta.1`

**SQL changes**

@@ -96,7 +112,7 @@ ALTER COLUMN `operation_methods` set default '';

---

-### 6.2.3 Upgrading from `2.x` to `v3.0.0-beta.0`

+### 6.2.4 Upgrading from `2.x` to `v3.0.0-beta.0`

**Upgrade steps:**

diff --git a/docs/user_guide/faq.md b/docs/user_guide/faq.md

index 764c58b9..98dfbf83 100644

--- a/docs/user_guide/faq.md

+++ b/docs/user_guide/faq.md

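@@ -166,3 +166,19 @@ Node version: v12.22.12

Run `npm run start` inside the specific application; for example, `cd packages/layout-clusters-fe` and then run `npm run start`.

After the application starts, view it through the base application (the base application, i.e. layout-clusters-fe, must also be running).

+

+

+## 8.12 Permission Recognition Failure

+1. Log in to KnowStreaming with the admin account, go to System Management > User Management > Role Management > Add Role, and check whether the page displays correctly.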

+

+


+

+ +

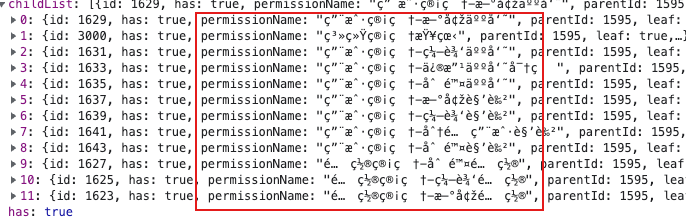

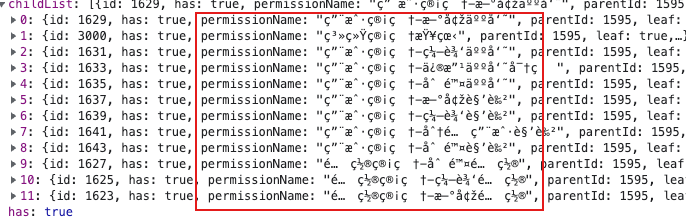

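+2. Check the response of the `/logi-security/api/v1/permission/tree` API; it contains garbled characters, as shown in the figure below.

+

+

+3. Check whether the Chinese text in the `logi_security_permission` table is garbled.

+

+Based on the points above, we can conclude that the permission recognition failure is caused by garbled data in the database.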

+

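++ Cause: the database character set did not match the encoding of the provided scripts, so the imported data became garbled and permissions could not be recognized.

++ Solution: clear the database data, change the database character set to utf8, and then re-run the [dml-logi.sql](https://github.com/didi/KnowStreaming/blob/master/km-dist/init/sql/dml-logi.sql) script to import the data.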

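The cause described above is data written in one character set and read back in another, and it can be reproduced in a few lines. This TypeScript sketch is only an illustration. It models the common case of UTF-8 bytes being interpreted as Latin-1, which is an assumption about how the mismatch arose; MySQL's `latin1` is really cp1252, so real-world garbling can differ slightly. All helper names are hypothetical. `looksLikeMojibake` can flag suspicious values, such as text copied from `logi_security_permission` rows, but the fix is still the one above: correct the character set and re-import `dml-logi.sql`.

```typescript
// Hypothetical helpers illustrating how an encoding mismatch garbles Chinese text.

// Encodes text as UTF-8, then reads each byte back as a single Latin-1 character,
// which is what happens when UTF-8 data is treated as Latin-1.
function utf8ReadAsLatin1(text: string): string {
  const bytes = new TextEncoder().encode(text);
  let out = '';
  for (let i = 0; i < bytes.length; i++) {
    out += String.fromCharCode(bytes[i]);
  }
  return out;
}

// Reverses utf8ReadAsLatin1: each character becomes one byte again, decoded as UTF-8.
function latin1BackToUtf8(garbled: string): string {
  const bytes = new Uint8Array(garbled.length);
  for (let i = 0; i < garbled.length; i++) {
    bytes[i] = garbled.charCodeAt(i) & 0xff;
  }
  return new TextDecoder('utf-8').decode(bytes);
}

// Heuristic: a 3-byte UTF-8 sequence (typical for Chinese characters) misread as
// Latin-1 shows up as one char in U+00E0..U+00EF followed by two in U+0080..U+00BF.
function looksLikeMojibake(value: string): boolean {
  return /[\u00e0-\u00ef][\u0080-\u00bf]{2}/.test(value);
}
```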
diff --git a/km-biz/src/main/java/com/xiaojukeji/know/streaming/km/biz/group/impl/GroupManagerImpl.java b/km-biz/src/main/java/com/xiaojukeji/know/streaming/km/biz/group/impl/GroupManagerImpl.java

index 1095d5ee..5ccc3e98 100644

--- a/km-biz/src/main/java/com/xiaojukeji/know/streaming/km/biz/group/impl/GroupManagerImpl.java

+++ b/km-biz/src/main/java/com/xiaojukeji/know/streaming/km/biz/group/impl/GroupManagerImpl.java

@@ -272,15 +272,11 @@ public class GroupManagerImpl implements GroupManager {

// Fetch Group metrics

- Result
