mirror of https://github.com/didi/KnowStreaming.git, synced 2026-01-06 05:22:16 +08:00

41

Dockerfile

Normal file


@@ -0,0 +1,41 @@
+ARG MAVEN_VERSION=3.8.4-openjdk-8-slim
+ARG JAVA_VERSION=8-jdk-alpine3.9
+FROM maven:${MAVEN_VERSION} AS builder
+ARG CONSOLE_ENABLE=true
+
+WORKDIR /opt
+COPY . .
+COPY distribution/conf/settings.xml /root/.m2/settings.xml
+
+# whether to build console
+RUN set -eux; \
+    if [ $CONSOLE_ENABLE = 'false' ]; then \
+        sed -i "/kafka-manager-console/d" pom.xml; \
+    fi \
+    && mvn -Dmaven.test.skip=true clean install -U
+
+FROM openjdk:${JAVA_VERSION}
+
+RUN sed -i 's/dl-cdn.alpinelinux.org/mirrors.aliyun.com/g' /etc/apk/repositories && apk add --no-cache tini
+
+ENV TZ=Asia/Shanghai
+ENV AGENT_HOME=/opt/agent/
+
+COPY --from=builder /opt/kafka-manager-web/target/kafka-manager.jar /opt
+COPY --from=builder /opt/container/dockerfiles/docker-depends/config.yaml $AGENT_HOME
+COPY --from=builder /opt/container/dockerfiles/docker-depends/jmx_prometheus_javaagent-0.15.0.jar $AGENT_HOME
+COPY --from=builder /opt/distribution/conf/application-docker.yml /opt
+
+WORKDIR /opt
+
+ENV JAVA_AGENT="-javaagent:$AGENT_HOME/jmx_prometheus_javaagent-0.15.0.jar=9999:$AGENT_HOME/config.yaml"
+ENV JAVA_HEAP_OPTS="-Xms1024M -Xmx1024M -Xmn100M "
+ENV JAVA_OPTS="-verbose:gc \
+    -XX:MaxMetaspaceSize=256M -XX:+DisableExplicitGC -XX:+UseStringDeduplication \
+    -XX:+UseG1GC -XX:+HeapDumpOnOutOfMemoryError -XX:-UseContainerSupport"
+
+EXPOSE 8080 9999
+
+ENTRYPOINT ["tini", "--"]
+
+CMD [ "sh", "-c", "java -jar $JAVA_AGENT $JAVA_HEAP_OPTS $JAVA_OPTS kafka-manager.jar --spring.config.location=application-docker.yml"]
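The console toggle in the builder stage can be tried outside Docker. The sketch below is an illustration only, not part of the commit: it applies the same `sed` delete expression to a made-up two-module `pom.xml` in a temporary directory. The file contents and the `strip_console_module` helper are assumptions, and the helper writes to a new file instead of using `sed -i` so that it behaves the same with GNU and BSD sed.

```shell
#!/bin/sh
set -eu

# Hypothetical helper: drop every pom.xml line that mentions the console module,
# mirroring the Dockerfile's sed expression when CONSOLE_ENABLE is 'false'.
strip_console_module() {
    pom="$1"
    console_enable="$2"
    if [ "$console_enable" = 'false' ]; then
        sed '/kafka-manager-console/d' "$pom" > "$pom.tmp"
        mv "$pom.tmp" "$pom"
    fi
}

workdir=$(mktemp -d)
# Made-up module list standing in for the real parent pom.xml.
cat > "$workdir/pom.xml" <<'EOF'
<modules>
    <module>kafka-manager-console</module>
    <module>kafka-manager-web</module>
</modules>
EOF

strip_console_module "$workdir/pom.xml" false
remaining=$(grep -c '<module>' "$workdir/pom.xml")
echo "modules left: $remaining"
```

Quoting `"$console_enable"` in the test keeps the `[ ... ]` expression well-formed even if the value is empty, which the unquoted form would not.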

16

README.md


@@ -61,15 +61,15 @@
 - [DiDi LogiKM FAQ](docs/user_guide/faq.md)
 
 ### 2.2 Community articles
-- [The most powerful and complete Kafka knowledge map](https://www.szzdzhp.com/kafka/)
-- [LogiKM getting-started article series for new users --石臻臻](https://www.szzdzhp.com/categories/LogIKM/)
 - [Product introduction on the DiDi Cloud website](https://www.didiyun.com/production/logi-KafkaManager.html)
 - [Seven years in the making--the DiDi Logi log service suite](https://mp.weixin.qq.com/s/-KQp-Qo3WKEOc9wIR2iFnw)
-- [DiDi Logi-KafkaManager: a one-stop Kafka monitoring and management platform](https://mp.weixin.qq.com/s/9qSZIkqCnU6u9nLMvOOjIQ)
-- [DiDi Logi-KafkaManager: the road to open source](https://xie.infoq.cn/article/0223091a99e697412073c0d64)
-- [DiDi Logi-KafkaManager video tutorial series](https://space.bilibili.com/442531657/channel/seriesdetail?sid=571649)
-- [Kafka in practice (15): a study of DiDi's open-source Kafka management platform Logi-KafkaManager --A叶子叶来](https://blog.csdn.net/yezonggang/article/details/113106244)
+- [DiDi LogiKM: a one-stop Kafka monitoring and management platform](https://mp.weixin.qq.com/s/9qSZIkqCnU6u9nLMvOOjIQ)
+- [DiDi LogiKM: the road to open source](https://xie.infoq.cn/article/0223091a99e697412073c0d64)
+- [DiDi LogiKM video tutorial series](https://space.bilibili.com/442531657/channel/seriesdetail?sid=571649)
+- [The most powerful and complete Kafka knowledge map](https://www.szzdzhp.com/kafka/)
+- [DiDi LogiKM getting-started article series for new users --石臻臻](https://www.szzdzhp.com/categories/LogIKM/)
+- [Kafka in practice (15): a study of DiDi's open-source Kafka management platform LogiKM --A叶子叶来](https://blog.csdn.net/yezonggang/article/details/113106244)
+- [Installing DiDi LogiKM on the cloud-native application management platform Rainbond](https://www.rainbond.com/docs/opensource-app/logikm/?channel=logikm)
 
 ## 3 DiDi Logi open-source user group
 

@@ -104,7 +104,7 @@ PS: when asking a question, please describe the problem fully in one message and include your environment details
 
 ### 5.1 Internal core members
 
-`iceyuhui`、`liuyaguang`、`limengmonty`、`zhangliangmike`、`xiepeng`、`nullhuangyiming`、`zengqiao`、`eilenexuzhe`、`huangjiaweihjw`、`zhaoyinrui`、`marzkonglingxu`、`joysunchao`、`石臻臻`
+`iceyuhui`、`liuyaguang`、`limengmonty`、`zhangliangmike`、`zhaoqingrong`、`xiepeng`、`nullhuangyiming`、`zengqiao`、`eilenexuzhe`、`huangjiaweihjw`、`zhaoyinrui`、`marzkonglingxu`、`joysunchao`、`石臻臻`
 
 
 ### 5.2 External contributors

@@ -1,29 +0,0 @@
-FROM openjdk:16-jdk-alpine3.13
-
-LABEL author="fengxsong"
-RUN sed -i 's/dl-cdn.alpinelinux.org/mirrors.aliyun.com/g' /etc/apk/repositories && apk add --no-cache tini
-
-ENV VERSION 2.4.2
-WORKDIR /opt/
-
-ENV AGENT_HOME /opt/agent/
-COPY docker-depends/config.yaml $AGENT_HOME
-COPY docker-depends/jmx_prometheus_javaagent-0.15.0.jar $AGENT_HOME
-
-ENV JAVA_AGENT="-javaagent:$AGENT_HOME/jmx_prometheus_javaagent-0.15.0.jar=9999:$AGENT_HOME/config.yaml"
-ENV JAVA_HEAP_OPTS="-Xms1024M -Xmx1024M -Xmn100M "
-ENV JAVA_OPTS="-verbose:gc \
-    -XX:MaxMetaspaceSize=256M -XX:+DisableExplicitGC -XX:+UseStringDeduplication \
-    -XX:+UseG1GC -XX:+HeapDumpOnOutOfMemoryError -XX:-UseContainerSupport"
-
-RUN wget https://github.com/didi/Logi-KafkaManager/releases/download/v${VERSION}/kafka-manager-${VERSION}.tar.gz && \
-    tar xvf kafka-manager-${VERSION}.tar.gz && \
-    mv kafka-manager-${VERSION}/kafka-manager.jar /opt/app.jar && \
-    mv kafka-manager-${VERSION}/application.yml /opt/application.yml && \
-    rm -rf kafka-manager-${VERSION}*
-
-EXPOSE 8080 9999
-
-ENTRYPOINT ["tini", "--"]
-
-CMD [ "sh", "-c", "java -jar $JAVA_AGENT $JAVA_HEAP_OPTS $JAVA_OPTS app.jar --spring.config.location=application.yml"]

13

container/dockerfiles/mysql/Dockerfile

Normal file


@@ -0,0 +1,13 @@
+FROM mysql:5.7.37
+
+COPY mysqld.cnf /etc/mysql/mysql.conf.d/
+ENV TZ=Asia/Shanghai
+ENV MYSQL_ROOT_PASSWORD=root
+
+RUN apt-get update \
+    && apt -y install wget \
+    && wget https://ghproxy.com/https://raw.githubusercontent.com/didi/LogiKM/master/distribution/conf/create_mysql_table.sql -O /docker-entrypoint-initdb.d/create_mysql_table.sql
+
+EXPOSE 3306
+
+VOLUME ["/var/lib/mysql"]

24

container/dockerfiles/mysql/mysqld.cnf

Normal file


@@ -0,0 +1,24 @@
+[client]
+default-character-set = utf8
+
+[mysqld]
+character_set_server = utf8
+pid-file = /var/run/mysqld/mysqld.pid
+socket = /var/run/mysqld/mysqld.sock
+datadir = /var/lib/mysql
+symbolic-links=0
+
+max_allowed_packet = 10M
+sort_buffer_size = 1M
+read_rnd_buffer_size = 2M
+max_connections=2000
+
+lower_case_table_names=1
+character-set-server=utf8
+
+max_allowed_packet = 1G
+sql_mode=STRICT_TRANS_TABLES,NO_ZERO_IN_DATE,NO_ZERO_DATE,ERROR_FOR_DIVISION_BY_ZERO,NO_AUTO_CREATE_USER,NO_ENGINE_SUBSTITUTION
+group_concat_max_len = 102400
+default-time-zone = '+08:00'
+[mysql]
+default-character-set = utf8

28

distribution/conf/application-docker.yml

Normal file


@@ -0,0 +1,28 @@
+
+## kafka-manager configuration file; settings in this file override the defaults
+## The settings below are essentially the default application.yml bundled in the jar;
+## you can keep only the settings you change and delete the rest, for example configuring only mysql
+
+
+server:
+  port: 8080
+  tomcat:
+    accept-count: 1000
+    max-connections: 10000
+    max-threads: 800
+    min-spare-threads: 100
+
+spring:
+  application:
+    name: kafkamanager
+    version: 2.6.0
+  profiles:
+    active: dev
+  datasource:
+    kafka-manager:
+      jdbc-url: jdbc:mysql://${LOGI_MYSQL_HOST:mysql}:${LOGI_MYSQL_PORT:3306}/${LOGI_MYSQL_DATABASE:logi_kafka_manager}?characterEncoding=UTF-8&useSSL=false&serverTimezone=GMT%2B8
+      username: ${LOGI_MYSQL_USER:root}
+      password: ${LOGI_MYSQL_PASSWORD:root}
+      driver-class-name: com.mysql.cj.jdbc.Driver
+  main:
+    allow-bean-definition-overriding: true
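The `${NAME:default}` entries in the datasource section are Spring property placeholders: the value is taken from the environment when the variable is set, and the text after the colon is used otherwise. As a rough shell analogue only (Spring performs the real resolution, and the `jdbc_url` function name is made up for this sketch), the same fallback can be written with `${NAME:-default}`:

```shell
#!/bin/sh
set -eu

# Hypothetical helper: build the JDBC URL the way the placeholders would resolve,
# falling back to the defaults listed in application-docker.yml.
jdbc_url() {
    host="${LOGI_MYSQL_HOST:-mysql}"
    port="${LOGI_MYSQL_PORT:-3306}"
    db="${LOGI_MYSQL_DATABASE:-logi_kafka_manager}"
    printf 'jdbc:mysql://%s:%s/%s?characterEncoding=UTF-8&useSSL=false&serverTimezone=GMT%%2B8\n' \
        "$host" "$port" "$db"
}

# Start from a clean environment so the defaults apply.
unset LOGI_MYSQL_HOST LOGI_MYSQL_PORT LOGI_MYSQL_DATABASE
default_url=$(jdbc_url)

# Override only the host, as `docker run -e LOGI_MYSQL_HOST=...` would.
# db.internal is a placeholder host name.
custom_url=$(LOGI_MYSQL_HOST=db.internal jdbc_url)

echo "$default_url"
echo "$custom_url"
```

With nothing set, the URL points at the host `mysql`, which is the container name used by `--link mysql` in the install guide.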

@@ -15,7 +15,7 @@ server:
 spring:
   application:
     name: kafkamanager
-    version: 2.6.0
+    version: 2.6.1
   profiles:
     active: dev
   datasource:

@@ -15,7 +15,7 @@ server:
 spring:
   application:
     name: kafkamanager
-    version: 2.6.0
+    version: 2.6.1
   profiles:
     active: dev
   datasource:

10

distribution/conf/settings.xml

Normal file


@@ -0,0 +1,10 @@
+<settings>
+    <mirrors>
+        <mirror>
+            <id>aliyunmaven</id>
+            <mirrorOf>*</mirrorOf>
+            <name>Aliyun public repository</name>
+            <url>https://maven.aliyun.com/repository/public</url>
+        </mirror>
+    </mirrors>
+</settings>

@@ -29,6 +29,7 @@
 - `JMX` is misconfigured: see `2. Solution`.
 - A firewall or network restriction is in the way: from another machine that has network access, try `telnet` to check whether the port is reachable.
 - Username and password authentication is required: see `3. Solution: JMX with authentication`.
+- When LogiKM and Kafka run on different machines, Kafka's JMX port does not accept connections from other machines: see `4. Solution`.
 
 
 Example error log:

@@ -99,3 +100,8 @@ SQL的例子:

|

|||||||

```sql

|

```sql

|

||||||

UPDATE cluster SET jmx_properties='{ "maxConn": 10, "username": "xxxxx", "password": "xxxx", "openSSL": false }' where id={xxx};

|

UPDATE cluster SET jmx_properties='{ "maxConn": 10, "username": "xxxxx", "password": "xxxx", "openSSL": false }' where id={xxx};

|

||||||

```

|

```

|

||||||

|

### 4、解决方法 —— 不允许其他机器访问

|

||||||

|

|

||||||

|

|

||||||

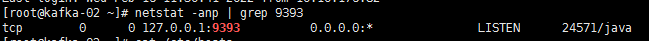

|

该图中的127.0.0.1表明该端口只允许本机访问.

|

||||||

|

在cdh中可以点击配置->搜索jmx->寻找broker_java_opts 修改com.sun.management.jmxremote.host和java.rmi.server.hostname为本机ip

|

||||||

|

|||||||
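For brokers that are not managed through CDH, the same two JVM system properties can be passed on the broker's command line. The snippet below is a sketch under assumptions: `BROKER_IP` and port 9999 are placeholders, the `jmx_opts` helper is made up, and authentication and SSL are switched off only to keep the example short. It merely assembles the option string; how the string reaches the broker JVM (for example through `KAFKA_JMX_OPTS`) depends on the deployment.

```shell
#!/bin/sh
set -eu

# Hypothetical helper: print JMX options that bind the connector to a routable
# address instead of 127.0.0.1. Arguments: broker IP, JMX port.
jmx_opts() {
    ip="$1"
    port="$2"
    printf '%s ' \
        "-Dcom.sun.management.jmxremote" \
        "-Dcom.sun.management.jmxremote.port=$port" \
        "-Dcom.sun.management.jmxremote.host=$ip" \
        "-Djava.rmi.server.hostname=$ip" \
        "-Dcom.sun.management.jmxremote.authenticate=false" \
        "-Dcom.sun.management.jmxremote.ssl=false"
}

# Placeholder address from the documentation range; replace with the broker's IP.
BROKER_IP="${BROKER_IP:-192.0.2.10}"
opts=$(jmx_opts "$BROKER_IP" 9999)
echo "$opts"
```

After the broker restarts with these options, the JMX port should be listed against that address rather than 127.0.0.1, which is the condition the figure above illustrates.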

132

docs/install_guide/install_guide_docker_cn.md

Normal file


@@ -0,0 +1,132 @@
+---
+
+
+
+**A one-stop platform for `Apache Kafka` cluster metrics monitoring and operations management**
+
+---
+
+
+## Deploying Logikm with Docker
+
+To help users set up Logikm quickly in their own environment, it can be deployed with Docker.
+
+### Deploying MySQL
+
+```shell
+docker run --name mysql -p 3306:3306 -d registry.cn-hangzhou.aliyuncs.com/zqqq/logikm-mysql:5.7.37
+```
+
+For optional variables, see the [documentation](https://hub.docker.com/_/mysql).
+
+Default parameters
+
+* MYSQL_ROOT_PASSWORD:root
+
+
+
+### Deploying Logikm all-in-one
+
+> Frontend and backend deployed together
+
+```shell
+docker run --name logikm -p 8080:8080 --link mysql -d registry.cn-hangzhou.aliyuncs.com/zqqq/logikm:2.6.0
+```
+
+Parameters:
+
+* -p maps container port 8080 to port 8080 on the host
+* --link links to the mysql container
+
+
+
+### Deploying frontend and backend separately
+
+#### Deploying the backend, Logikm-backend
+
+```shell
+docker run --name logikm-backend --link mysql -d registry.cn-hangzhou.aliyuncs.com/zqqq/logikm-backend:2.6.0
+```
+
+Optional parameters:
+
+* -e LOGI_MYSQL_HOST MySQL host, default mysql
+* -e LOGI_MYSQL_PORT MySQL port, default 3306
+* -e LOGI_MYSQL_DATABASE database name, default logi_kafka_manager
+* -e LOGI_MYSQL_USER MySQL user name, default root
+* -e LOGI_MYSQL_PASSWORD MySQL password, default root
+
+#### Deploying the frontend, Logikm-front
+
+```shell
+docker run --name logikm-front -p 8088:80 --link logikm-backend -d registry.cn-hangzhou.aliyuncs.com/zqqq/logikm-front:2.6.0
+```
+
+
+
+### Configurable parameters for the Logi backend
+
+The following environment variables can be set with the -e option of docker run:
+
+| Environment variable | Description   | Default            |
+| -------------------- | ------------- | ------------------ |
+| LOGI_MYSQL_HOST      | MySQL host    | mysql              |
+| LOGI_MYSQL_PORT      | MySQL port    | 3306               |
+| LOGI_MYSQL_DATABASE  | Database name | logi_kafka_manager |
+| LOGI_MYSQL_USER      | MySQL user    | root               |
+| LOGI_MYSQL_PASSWORD  | MySQL password | root              |
+
+
+
+
+## Building from source with Docker
+
+Following this document, users can build Logikm from source with Docker.
+
+### Building MySQL
+
+```shell
+docker build -t mysql:{TAG} -f container/dockerfiles/mysql/Dockerfile container/dockerfiles/mysql
+```
+
+### Building all-in-one
+
+Packages the frontend and backend together.
+
+```shell
+docker build -t logikm:{TAG} .
+```
+
+Optional --build-arg parameters:
+
+* MAVEN_VERSION tag of the maven image
+* JAVA_VERSION tag of the java image
+
+
+
+### Building frontend and backend separately
+
+Packages the frontend and backend separately.
+
+#### Building the backend
+
+```shell
+docker build --build-arg CONSOLE_ENABLE=false -t logikm-backend:{TAG} .
+```
+
+Parameters:
+
+* MAVEN_VERSION tag of the maven image
+* JAVA_VERSION tag of the java image
+
+* CONSOLE_ENABLE=false skips building the console module
+
+#### Building the frontend
+
+```shell
+docker build -t logikm-front:{TAG} -f kafka-manager-console/Dockerfile kafka-manager-console
+```
+
+Optional parameters:
+
+* --build-arg:OUTPUT_PATH changes the build output path; the default is dist under the current directory
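As an illustration of the optional `-e` parameters described in the deployment section of the guide, the sketch below assembles a `docker run` command for the backend against an external MySQL instead of a linked container. The host `db.internal`, the user `logikm`, and the `DRY_RUN` switch are assumptions made for this example; the image name and the variable names are the ones from the guide. With `DRY_RUN=1` (the default here) the command is only printed.

```shell
#!/bin/sh
set -eu

DRY_RUN="${DRY_RUN:-1}"
IMAGE="registry.cn-hangzhou.aliyuncs.com/zqqq/logikm-backend:2.6.0"

# Collect the arguments in the positional parameters so each value stays one word.
set -- docker run --name logikm-backend -d \
    -e LOGI_MYSQL_HOST=db.internal \
    -e LOGI_MYSQL_PORT=3306 \
    -e LOGI_MYSQL_DATABASE=logi_kafka_manager \
    -e LOGI_MYSQL_USER=logikm \
    -e LOGI_MYSQL_PASSWORD="${LOGI_MYSQL_PASSWORD:-root}" \
    "$IMAGE"

cmd="$*"
if [ "$DRY_RUN" = "1" ]; then
    # Print only; nothing is started.
    echo "$cmd"
else
    "$@"
fi
```

Any variable left out falls back to the default shown in the table of configurable backend parameters, so in practice only the values that differ need to be passed.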

20

kafka-manager-console/Dockerfile

Normal file


@@ -0,0 +1,20 @@
+ARG NODE_VERSION=12.20.0
+ARG NGINX_VERSION=1.21.5-alpine
+FROM node:${NODE_VERSION} AS builder
+ARG OUTPUT_PATH=dist
+
+ENV TZ Asia/Shanghai
+WORKDIR /opt
+COPY . .
+RUN npm config set registry https://registry.npm.taobao.org \
+    && npm install \
+    # Change the output directory to dist
+    && sed -i "s#../kafka-manager-web/src/main/resources/templates#$OUTPUT_PATH#g" webpack.config.js \
+    && npm run prod-build
+
+FROM nginx:${NGINX_VERSION}
+
+ENV TZ=Asia/Shanghai
+
+COPY --from=builder /opt/dist /opt/dist
+COPY --from=builder /opt/web.conf /etc/nginx/conf.d/default.conf

@@ -1,6 +1,6 @@
 {
   "name": "logi-kafka",
-  "version": "2.6.0",
+  "version": "2.6.1",
   "description": "",
   "scripts": {
     "prestart": "npm install --save-dev webpack-dev-server",

@@ -145,7 +145,7 @@ export const Header = observer((props: IHeader) => {
         <div className="left-content">
           <img className="kafka-header-icon" src={logoUrl} alt="" />
           <span className="kafka-header-text">LogiKM</span>
-          <a className='kafka-header-version' href="https://github.com/didi/Logi-KafkaManager/releases" target='_blank'>v2.6.0</a>
+          <a className='kafka-header-version' href="https://github.com/didi/Logi-KafkaManager/releases" target='_blank'>v2.6.1</a>
           {/* Add version hyperlink */}
         </div>
         <div className="mid-content">

13

kafka-manager-console/web.conf

Normal file


@@ -0,0 +1,13 @@
+server {
+    listen 80;
+
+    location / {
+        root /opt/dist;
+        try_files $uri $uri/ /index.html;
+        index index.html index.htm;
+    }
+
+    location /api {
+        proxy_pass http://logikm-backend:8080;
+    }
+}

@@ -1,5 +1,6 @@
 package com.xiaojukeji.kafka.manager.service.service.gateway.impl;
 
+import com.xiaojukeji.kafka.manager.common.bizenum.KafkaClientEnum;
 import com.xiaojukeji.kafka.manager.common.entity.pojo.gateway.TopicConnectionDO;
 import com.xiaojukeji.kafka.manager.common.entity.ao.topic.TopicConnection;
 import com.xiaojukeji.kafka.manager.common.constant.KafkaConstant;

@@ -167,8 +168,10 @@ public class TopicConnectionServiceImpl implements TopicConnectionService {
             TopicConnection dto = convert2TopicConnectionDTO(connectionDO);
 
             // Filter out broker machines
-            if (brokerHostnameSet.contains(dto.getHostname()) || brokerHostnameSet.contains(dto.getIp())) {
-                // If the consuming machine is a broker, skip it. For some clusters brokerHostnameSet stores IPs
+            if (KafkaClientEnum.FETCH_CLIENT.getName().toLowerCase().equals(connectionDO.getType())
+                    && (brokerHostnameSet.contains(dto.getHostname()) || brokerHostnameSet.contains(dto.getIp()))) {
+                // If this is a fetch request and the machine is a broker, filter the record out.
+                // Bad case: a consumer client deployed on a broker host is filtered out as well.
                 continue;
             }
 
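The effect of the new condition can be sketched outside Java. The example below is a hedged illustration, not the service code: it assumes one connection per input line in the made-up form `<type> <hostname>`, assumes the lower-cased fetch client name is the literal string `fetch`, and uses a hypothetical `filter_connections` helper. Only fetch connections coming from a broker host are dropped; produce connections from the same host are kept, which the old condition would have removed.

```shell
#!/bin/sh
set -eu

# Hypothetical helper: read "<type> <hostname>" lines on stdin and print the ones
# that survive the filter. Argument: space-separated list of broker hostnames.
filter_connections() {
    brokers=" $1 "
    while read -r type host; do
        # Skip only fetch connections that originate from a broker host.
        if [ "$type" = "fetch" ]; then
            case "$brokers" in
                *" $host "*) continue ;;
            esac
        fi
        printf '%s %s\n' "$type" "$host"
    done
}

# Made-up sample: broker1 both replicates (fetch) and produces; client9 consumes.
result=$(printf 'fetch broker1\nproduce broker1\nfetch client9\n' \
    | filter_connections "broker1 broker2")
printf '%s\n' "$result"
```

The bad case named in the code comment shows up here too: a real consumer running on `broker1` would appear as `fetch broker1` and be dropped along with replication traffic.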

@@ -9,7 +9,7 @@ server:
 spring:
   application:
     name: kafkamanager
-    version: 2.6.0
+    version: 2.6.1
   profiles:
     active: dev
   datasource:

2

pom.xml


@@ -16,7 +16,7 @@
     </parent>
 
     <properties>
-        <kafka-manager.revision>2.6.0</kafka-manager.revision>
+        <kafka-manager.revision>2.6.1</kafka-manager.revision>
         <spring.boot.version>2.1.18.RELEASE</spring.boot.version>
         <swagger2.version>2.9.2</swagger2.version>
         <swagger.version>1.5.21</swagger.version>
