mirror of

https://github.com/didi/KnowStreaming.git

synced 2026-02-07 15:00:50 +08:00

Merge master branch

This commit is contained in:

43

.github/workflows/ci_build.yml

vendored

Normal file

@@ -0,0 +1,43 @@

name: KnowStreaming Build

on:
  push:
    branches: [ "master", "ve_3.x", "ve_demo_3.x" ]
  pull_request:
    branches: [ "master", "ve_3.x", "ve_demo_3.x" ]

jobs:
  build:

    runs-on: ubuntu-latest

    steps:
      - uses: actions/checkout@v3

      - name: Set up JDK 11
        uses: actions/setup-java@v3
        with:
          java-version: '11'
          distribution: 'temurin'
          cache: maven

      - name: Setup Node
        uses: actions/setup-node@v1
        with:
          node-version: '12.22.12'

      - name: Build With Maven
        run: mvn -Prelease-package -Dmaven.test.skip=true clean install -U

      - name: Get KnowStreaming Version
        if: ${{ success() }}
        run: |
          version=`mvn -Dexec.executable='echo' -Dexec.args='${project.version}' --non-recursive exec:exec -q`
          echo "VERSION=${version}" >> $GITHUB_ENV

      - name: Upload Binary Package
        if: ${{ success() }}
        uses: actions/upload-artifact@v3
        with:
          name: KnowStreaming-${{ env.VERSION }}.tar.gz
          path: km-dist/target/KnowStreaming-${{ env.VERSION }}.tar.gz
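The `Get KnowStreaming Version` step in this workflow hands the Maven project version to the later upload step through the file named by `$GITHUB_ENV`. The following is a minimal shell sketch of that hand-off that can be run outside GitHub Actions. The helper names `export_version` and `read_version`, the temporary file standing in for `$GITHUB_ENV`, and the sample version `3.0.0` are assumptions for illustration; no Maven call is made.

```bash
#!/usr/bin/env bash
# Illustrative sketch only: emulates how one step passes VERSION to later steps.
# On a real runner GITHUB_ENV is provided; here a temp file stands in for it.
GITHUB_ENV="${GITHUB_ENV:-$(mktemp)}"

# Append VERSION=<value> to the env file, as the workflow's echo does.
export_version() {
  echo "VERSION=$1" >> "$GITHUB_ENV"
}

# Read the most recent VERSION entry back (roughly what env.VERSION resolves to).
read_version() {
  grep '^VERSION=' "$GITHUB_ENV" | tail -n 1 | cut -d= -f2-
}

export_version "3.0.0"   # sample value; the workflow obtains it from Maven
echo "km-dist/target/KnowStreaming-$(read_version).tar.gz"
```

Writing to the env file rather than using a plain shell variable matters because each workflow step runs in its own shell, so ordinary variables do not survive from one step to the next.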

@@ -90,6 +90,7 @@

|

|||||||

- [Single-node deployment guide](docs/install_guide/单机部署手册.md)

|

- [Single-node deployment guide](docs/install_guide/单机部署手册.md)

|

||||||

- [Version upgrade guide](docs/install_guide/版本升级手册.md)

|

- [Version upgrade guide](docs/install_guide/版本升级手册.md)

|

||||||

- [Guide to running from local source](docs/dev_guide/本地源码启动手册.md)

|

- [Guide to running from local source](docs/dev_guide/本地源码启动手册.md)

|

||||||

|

- [Troubleshooting guide for pages showing no data](docs/dev_guide/页面无数据排查手册.md)

|

||||||

|

|

||||||

**`Product manuals`**

|

**`Product manuals`**

|

||||||

|

|

||||||

@@ -155,3 +156,4 @@ PS: 提问请尽量把问题一次性描述清楚,并告知环境信息情况

|

|||||||

## Star History

|

## Star History

|

||||||

|

|

||||||

[](https://star-history.com/#didi/KnowStreaming&Date)

|

[](https://star-history.com/#didi/KnowStreaming&Date)

|

||||||

|

|

||||||

|

|||||||

@@ -47,14 +47,13 @@

|

|||||||

|

|

||||||

**1. `Header` conventions**

|

**1. `Header` conventions**

|

||||||

|

|

||||||

The `Header` format is `[Type]Message(#IssueID)`. It consists of three parts: `Type`, `Message`, and `IssueID`:

|

The `Header` format is `[Type]Message`. It consists of two parts: `Type` and `Message`:

|

||||||

|

|

||||||

- `Type`: the kind of change this commit makes, for example Bugfix, Feature, or Optimize;

|

- `Type`: the kind of change this commit makes, for example Bugfix, Feature, or Optimize;

|

||||||

- `Message`: a description of the change, for example "fix xx issue";

|

- `Message`: a description of the change, for example "fix xx issue";

|

||||||

- `IssueID`: the number of the Issue this commit relates to;

|

|

||||||

|

|

||||||

|

|

||||||

Real example: [`[Bugfix]修复新接入的集群,Controller-Host不显示的问题(#927)`](https://github.com/didi/KnowStreaming/pull/933/commits)

|

Real example: [`[Bugfix]修复新接入的集群,Controller-Host不显示的问题`](https://github.com/didi/KnowStreaming/pull/933/commits)
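The `[Type]Message` form can be checked mechanically before committing. The sketch below is not an official project hook. The function name `is_valid_header` is hypothetical, and because the guide lists Bugfix, Feature, and Optimize only as examples, the pattern accepts any alphabetic `Type`.

```bash
#!/usr/bin/env bash
# Illustrative check, not an official project hook.
# Accepts "[Type]Message": a bracketed alphabetic Type followed by a non-empty Message.
is_valid_header() {
  local header="$1"
  local pattern='^\[[A-Za-z]+\].+$'
  [[ "$header" =~ $pattern ]]
}

for h in "[Bugfix]fix xx issue" "fix xx issue" "[Optimize]"; do
  if is_valid_header "$h"; then
    echo "ok:  $h"
  else
    echo "bad: $h"
  fi
done
```

A header with a trailing `(#IssueID)` still passes, so the same check works for commits written under the earlier convention.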

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

@@ -67,7 +66,7 @@

|

|||||||

**3、实际例子**

|

**3、实际例子**

|

||||||

|

|

||||||

```

|

```

|

||||||

[Optimize]优化 MySQL & ES 测试容器的初始化(#906)

|

[Optimize]优化 MySQL & ES 测试容器的初始化

|

||||||

|

|

||||||

主要的变更

|

主要的变更

|

||||||

1、knowstreaming/knowstreaming-manager 容器;

|

1、knowstreaming/knowstreaming-manager 容器;

|

||||||

@@ -138,7 +137,7 @@

|

|||||||

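1. Switch to the main branch: `git checkout github_master`;

|

1. Switch to the main branch: `git checkout github_master`;

|

||||||

2. Pull the latest code on the main branch: `git pull`;

|

2. Pull the latest code on the main branch: `git pull`;

|

||||||

3. Create a new branch from the main branch: `git checkout -b fix_928`;

|

3. Create a new branch from the main branch: `git checkout -b fix_928`;

|

||||||

4. Commit your code following the commit conventions, for example: `git commit -m "[Optimize]优化xxx问题(#928)"`;

|

4. Commit your code following the commit conventions, for example: `git commit -m "[Optimize]优化xxx问题"`;

|

||||||

5. Push to your own remote repository: `git push --set-upstream origin fix_928`;

|

5. Push to your own remote repository: `git push --set-upstream origin fix_928`;

|

||||||

6. Open a `Pull Request` on the `GitHub` page; an administrator will merge it into the main repository. The next section covers this in detail;

|

6. Open a `Pull Request` on the `GitHub` page; an administrator will merge it into the main repository. The next section covers this in detail;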

|

||||||

|

|

||||||

@@ -162,6 +161,8 @@

|

|||||||

|

|

||||||

### 4.1、如何将多个 Commit-Log 合并为一个?

|

### 4.1、如何将多个 Commit-Log 合并为一个?

|

||||||

|

|

||||||

This can be done with the `git rebase -i` command.

|

Squashing multiple commits into one is not required; if you do want to squash them, use the `git rebase -i` command.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|||||||

Binary file not shown.

|

Before Width: | Height: | Size: 382 KiB |

180

docs/dev_guide/接入ZK带认证Kafka集群.md

Normal file

@@ -0,0 +1,180 @@

|

|||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

---

|

||||||

|

|

||||||

|

# 接入 ZK 带认证的 Kafka 集群

|

||||||

|

|

||||||

|

- [Connecting a Kafka cluster whose ZK requires authentication](#接入-zk-带认证的-kafka-集群)
  - [1. Overview](#1简要说明)
  - [2. Digest-MD5 authentication](#2支持-digest-md5-认证)
  - [3. Kerberos authentication](#3支持-kerberos-认证)

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

## 1、简要说明

|

||||||

|

|

||||||

|

- 1. KnowStreaming currently has no page for configuring ZK authentication directly. The backend does, however, reserve a MySQL field for storing ZK authentication settings, so writing the settings into that field lets KnowStreaming connect to a Kafka cluster whose ZK requires authentication.

- 2. The field is the `zk_properties` column of the `ks_km_physical_cluster` table in the MySQL database (the `#` comments below are explanatory only). Its format is:
    ```json
    {
        "openSecure": false,                # whether authentication is enabled; set to true to enable it
        "sessionTimeoutUnitMs": 15000,      # session timeout
        "requestTimeoutUnitMs": 5000,       # request timeout
        "otherProps": {                     # other settings; authentication settings mainly go here
            "zookeeper.sasl.clientconfig": "kafkaClusterZK1"    # example
        }
    }
    ```

- 3. Where this takes effect in the code:
    ```java
    // Code location: https://github.com/didi/KnowStreaming/blob/master/km-persistence/src/main/java/com/xiaojukeji/know/streaming/km/persistence/kafka/KafkaAdminZKClient.java

    kafkaZkClient = KafkaZkClient.apply(
            clusterPhy.getZookeeper(),
            zkConfig.getOpenSecure(),               // whether authentication is enabled; set to true to enable it
            zkConfig.getSessionTimeoutUnitMs(),     // session timeout
            zkConfig.getRequestTimeoutUnitMs(),     // request timeout
            5,
            Time.SYSTEM,
            "KS-ZK-ClusterPhyId-" + clusterPhyId,
            "KS-ZK-SessionExpireListener-clusterPhyId-" + clusterPhyId,
            Option.apply("KS-ZK-ClusterPhyId-" + clusterPhyId),
            Option.apply(this.getZKConfig(clusterPhyId, zkConfig.getOtherProps())) // other settings; authentication settings mainly go here
    );
    ```

- 4. SQL example:
    ```sql
    update ks_km_physical_cluster set zk_properties='{ "openSecure": true, "otherProps": { "zookeeper.sasl.clientconfig": "kafkaClusterZK1" } }' where id=<ID of cluster 1>;
    ```

- 5. The `zk_properties` field cannot cover every scenario, so in practice further adjustments may be needed on top of it. For example, both `Digest-MD5` and `Kerberos` authentication also require changes to the startup script. In the future we will look at whether modifying the ZK client source could make ZK authentication as easy to configure as Kafka authentication.
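Because the `zk_properties` value is JSON embedded in a SQL string, it is easy to mistype by hand. The sketch below prints the UPDATE statement from the SQL example for a given cluster id and JAAS section name. The function name `zk_properties_sql` and its input checks are assumptions for illustration and are not part of KnowStreaming; the table, column, and JSON keys are the ones described above.

```bash
#!/usr/bin/env bash
# Illustrative helper, not part of KnowStreaming: prints the UPDATE statement
# that enables ZK authentication for one cluster.
#   $1: numeric id of the row in ks_km_physical_cluster
#   $2: JAAS section name stored under zookeeper.sasl.clientconfig
zk_properties_sql() {
  local cluster_id="$1" jaas_section="$2"
  # Accept only a plain number and a simple identifier so the printed SQL stays well-formed.
  [[ "$cluster_id" =~ ^[0-9]+$ ]] || { echo "cluster id must be numeric" >&2; return 1; }
  [[ "$jaas_section" =~ ^[A-Za-z0-9_]+$ ]] || { echo "invalid JAAS section name" >&2; return 1; }
  printf "update ks_km_physical_cluster set zk_properties='{ \"openSecure\": true, \"otherProps\": { \"zookeeper.sasl.clientconfig\": \"%s\" } }' where id=%s;\n" \
    "$jaas_section" "$cluster_id"
}

zk_properties_sql 1 kafkaClusterZK1
```

The statement is only printed, not executed, so it can be reviewed before being run against the database.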

|

||||||

|

|

||||||

|

|

||||||

|

---

|

||||||

|

|

||||||

|

|

||||||

|

## 2、支持 Digest-MD5 认证

|

||||||

|

|

||||||

|

1. Suppose you have two Kafka clusters, each backed by its own ZK cluster;
2. The authentication settings of the two ZK clusters are as follows:

    ```bash
    # Authentication settings for the ZK1 cluster. The name kafkaClusterZK1 is arbitrary; it only has to match the database configuration below.
    kafkaClusterZK1 {
        org.apache.zookeeper.server.auth.DigestLoginModule required
        username="zk1"
        password="zk1-passwd";
    };

    # Authentication settings for the ZK2 cluster. The name kafkaClusterZK2 is arbitrary; it only has to match the database configuration below.
    kafkaClusterZK2 {
        org.apache.zookeeper.server.auth.DigestLoginModule required
        username="zk2"
        password="zk2-passwd";
    };
    ```

3. Save the authentication settings of both ZK clusters to the file `/xxx/zk_client_jaas.conf`, with the following content:

    ```bash
    kafkaClusterZK1 {
        org.apache.zookeeper.server.auth.DigestLoginModule required
        username="zk1"
        password="zk1-passwd";
    };

    kafkaClusterZK2 {
        org.apache.zookeeper.server.auth.DigestLoginModule required
        username="zk2"
        password="zk2-passwd";
    };
    ```

4. Modify the KnowStreaming startup script:

    ```bash
    # Append the following setting to JAVA_OPT on line 47 of `KnowStreaming/bin/startup.sh`

    -Djava.security.auth.login.config=/xxx/zk_client_jaas.conf
    ```

5. Update the KnowStreaming table data:

    ```sql
    # kafkaClusterZK1 here must match the section name in /xxx/zk_client_jaas.conf
    update ks_km_physical_cluster set zk_properties='{ "openSecure": true, "otherProps": { "zookeeper.sasl.clientconfig": "kafkaClusterZK1" } }' where id=<ID of cluster 1>;

    update ks_km_physical_cluster set zk_properties='{ "openSecure": true, "otherProps": { "zookeeper.sasl.clientconfig": "kafkaClusterZK2" } }' where id=<ID of cluster 2>;
    ```

6. Restart KnowStreaming.
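The steps above only work if every section name stored in `zk_properties` also exists in the JAAS file. The following sketch checks that before restarting. The function name `jaas_has_section` is hypothetical, and the demo writes a throwaway file instead of touching a real `/xxx/zk_client_jaas.conf`.

```bash
#!/usr/bin/env bash
# Illustrative check, not part of KnowStreaming.
# Succeeds when the JAAS file ($1) declares a section named $2, i.e. a line "<name> {".
jaas_has_section() {
  local jaas_file="$1" section="$2"
  grep -Eq "^[[:space:]]*${section}[[:space:]]*\{" "$jaas_file"
}

# Demo with a throwaway file; point the check at your real JAAS file instead.
demo_file="$(mktemp)"
printf '%s\n' \
  'kafkaClusterZK1 {' \
  '    org.apache.zookeeper.server.auth.DigestLoginModule required' \
  '    username="zk1"' \
  '    password="zk1-passwd";' \
  '};' > "$demo_file"

for name in kafkaClusterZK1 kafkaClusterZK2; do
  if jaas_has_section "$demo_file" "$name"; then
    echo "$name: found"
  else
    echo "$name: missing"
  fi
done
```

In this demo only `kafkaClusterZK1` is declared, so the second name is reported as missing, which is the mismatch the comment in step 5 warns about.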

|

||||||

|

|

||||||

|

|

||||||

|

---

|

||||||

|

|

||||||

|

|

||||||

|

## 3、支持 Kerberos 认证

|

||||||

|

|

||||||

|

**Step 1: Check the user's ACL in ZK**

Assume the user we are working with is `kafka`.

- 1. Look up the `zookeeper.connect` address configured in server.properties;
- 2. Log in to the ZK shell with `zkCli.sh -server <zookeeper.connect address>`;
- 3. In the ZK shell, run `getAcl /kafka` to view the permissions of the `kafka` user;

The output shows the ACL currently set on the node.

The `kafka` user needs the `cdrwa` permissions. If the user does not have them, create the user and grant the permissions; the command for granting them is `setAcl`.
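The `cdrwa` string stands for the five ZooKeeper permissions create, delete, read, write, and admin. Here is a small sketch, using a hypothetical function name `has_full_zk_perms`, for checking a permission string copied from the `getAcl` output; the order of the letters does not matter.

```bash
#!/usr/bin/env bash
# Illustrative helper: succeeds when a ZK permission string such as "cdrwa"
# contains create (c), delete (d), read (r), write (w) and admin (a).
has_full_zk_perms() {
  local perms="$1" p
  for p in c d r w a; do
    [[ "$perms" == *"$p"* ]] || return 1
  done
}

has_full_zk_perms "cdrwa" && echo "cdrwa: sufficient"
has_full_zk_perms "crdwa" && echo "crdwa: sufficient (letter order does not matter)"
has_full_zk_perms "r" || echo "r: insufficient"
```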

|

||||||

|

|

||||||

|

|

||||||

|

**Step 2: Create the Kerberos keytab and configure the KnowStreaming host**

- 1. In the Kerberos realm, create a `keytab` for `kafka/_HOST` and export it, for example `kafka/dbs-kafka-test-8-53`;
- 2. Upload the exported keytab to `/etc/keytab` on the machine where KS is installed;
- 3. On the KS machine, run `kinit -kt zookeeper.keytab kafka/dbs-kafka-test-8-53` to check that the `Kerberos` login works;
- 4. Once login works, configure the `/opt/zookeeper.jaas` file, for example:
    ```bash
    Client {
        com.sun.security.auth.module.Krb5LoginModule required
        useKeyTab=true
        storeKey=false
        serviceName="zookeeper"
        keyTab="/etc/keytab/zookeeper.keytab"
        principal="kafka/dbs-kafka-test-8-53@XXX.XXX.XXX";
    };
    ```
- 5. Have the firewall on `KDC-Server` opened for the `KnowStreaming` machine, add the `hostname` of `kdc-server` to `/etc/hosts` on the KS machine, and copy `krb5.conf` into `/etc`;

**Step 3: Modify the KnowStreaming configuration**

- 1. Update the database to enable ZK authentication:
    ```sql
    update ks_km_physical_cluster set zk_properties='{ "openSecure": true }' where id=<ID of cluster 1>;
    ```

- 2. Append the following settings to JAVA_OPT on line 47 of `KnowStreaming/bin/startup.sh`:
    ```bash
    -Dsun.security.krb5.debug=true -Djava.security.krb5.conf=/etc/krb5.conf -Djava.security.auth.login.config=/opt/zookeeper.jaas
    ```

- 3. After restarting KS, seeing the Kerberos login information in start.out confirms that the configuration succeeded;

**Step 4: Additional notes**

- 1. If multiple Kafka clusters share the same Kerberos realm, granting the `kafka` user the `cdrwa` permissions in each `ZK` is enough; every `zkclient` can then authenticate when the clusters are initialized;
- 2. Multiple Kerberos realms are not supported yet;
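For Step 3, the Kerberos options are appended to the existing `JAVA_OPT` value rather than replacing it. The sketch below shows one way to do that. The initial `JAVA_OPT` value is a made-up placeholder, since the actual content of line 47 of `startup.sh` is not shown in this document, and the variable names `KRB5_CONF` and `JAAS_CONF` are introduced only for illustration.

```bash
#!/usr/bin/env bash
# Illustrative sketch: appending the Kerberos options from Step 3 to JAVA_OPT.
# The default below is a placeholder, not the real content of startup.sh.
JAVA_OPT="${JAVA_OPT:--Xms1g -Xmx1g}"

KRB5_CONF="/etc/krb5.conf"
JAAS_CONF="/opt/zookeeper.jaas"

JAVA_OPT="${JAVA_OPT} -Djava.security.krb5.conf=${KRB5_CONF}"
JAVA_OPT="${JAVA_OPT} -Djava.security.auth.login.config=${JAAS_CONF}"
# Verbose Kerberos logging; useful while verifying the setup.
JAVA_OPT="${JAVA_OPT} -Dsun.security.krb5.debug=true"

echo "$JAVA_OPT"
```

Keeping the two file paths in variables makes it harder for the JAAS path in the startup script to drift from the file configured in Step 2.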

|

||||||

@@ -1,69 +0,0 @@

|

|||||||

|

|

||||||

## ZK with Kerberos authentication

### 1. Modify the KnowStreaming code

Code location: `src/main/java/com/xiaojukeji/know/streaming/km/persistence/kafka/KafkaAdminZKClient.java`

In `createZKClient`, change the `false` on line 135 to `true`.

After the change, rebuild and repackage; see [Build and packaging](https://github.com/didi/KnowStreaming/blob/master/docs/install_guide/%E6%BA%90%E7%A0%81%E7%BC%96%E8%AF%91%E6%89%93%E5%8C%85%E6%89%8B%E5%86%8C.md).

### 2. Check the user's ACL in ZK

Assume the user we are working with is `kafka`.

- 1. Look up the `zookeeper.connect` address configured in server.properties;
- 2. Log in to the ZK shell with `zkCli.sh -server <zookeeper.connect address>`;
- 3. In the ZK shell, run `getAcl /kafka` to view the permissions of the `kafka` user;

The output shows the ACL currently set on the node.

The `kafka` user needs the `cdrwa` permissions. If the user does not have them, create the user and grant the permissions; the command for granting them is `setAcl`.

### 3. Create the Kerberos keytab and configure the KnowStreaming host

- 1. In the Kerberos realm, create a `keytab` for `kafka/_HOST` and export it, for example `kafka/dbs-kafka-test-8-53`;
- 2. Upload the exported keytab to `/etc/keytab` on the machine where KS is installed;
- 3. On the KS machine, run `kinit -kt zookeeper.keytab kafka/dbs-kafka-test-8-53` to check that the `Kerberos` login works;
- 4. Once login works, configure the `/opt/zookeeper.jaas` file, for example:
```sql
Client {
    com.sun.security.auth.module.Krb5LoginModule required
    useKeyTab=true
    storeKey=false
    serviceName="zookeeper"
    keyTab="/etc/keytab/zookeeper.keytab"
    principal="kafka/dbs-kafka-test-8-53@XXX.XXX.XXX";
};
```
- 5. Have the firewall on `KDC-Server` opened for the `KnowStreaming` machine, add the `hostname` of `kdc-server` to `/etc/hosts` on the KS machine, and copy `krb5.conf` into `/etc`;

### 4. Modify the KnowStreaming configuration

- 1. Append the following settings to JAVA_OPT on line 47 of `/usr/local/KnowStreaming/KnowStreaming/bin/startup.sh`:
```bash
-Dsun.security.krb5.debug=true -Djava.security.krb5.conf=/etc/krb5.conf -Djava.security.auth.login.config=/opt/zookeeper.jaas
```

- 2. After restarting KS, seeing the Kerberos login information in start.out confirms that the configuration succeeded;

### 5. Additional notes

- 1. If multiple Kafka clusters share the same Kerberos realm, granting the `kafka` user the `cdrwa` permissions in each `ZK` is enough; every `zkclient` can then authenticate when the clusters are initialized;
- 2. For now this requires changing the code and repackaging; support for connecting Kerberos-authenticated ZK through the UI is being considered;
- 3. Multiple Kerberos realms are not supported yet;

|

|

||||||

@@ -1,285 +0,0 @@

|

|||||||

## 1、集群接入错误

|

|

||||||

|

|

||||||

### 1.1、异常现象

|

|

||||||

|

|

||||||

如下图所示,集群非空时,大概率为地址配置错误导致。

|

|

||||||

|

|

||||||

<img src=http://img-ys011.didistatic.com/static/dc2img/do1_BRiXBvqYFK2dxSF1aqgZ width="80%">

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

### 1.2、解决方案

|

|

||||||

|

|

||||||

接入集群时,依据提示的错误,进行相应的解决。例如:

|

|

||||||

|

|

||||||

<img src=http://img-ys011.didistatic.com/static/dc2img/do1_Yn4LhV8aeSEKX1zrrkUi width="50%">

|

|

||||||

|

|

||||||

### 1.3、正常情况

|

|

||||||

|

|

||||||

接入集群时,页面信息都自动正常出现,没有提示错误。

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

## 2、JMX连接失败(需使用3.0.1及以上版本)

|

|

||||||

|

|

||||||

### 2.1异常现象

|

|

||||||

|

|

||||||

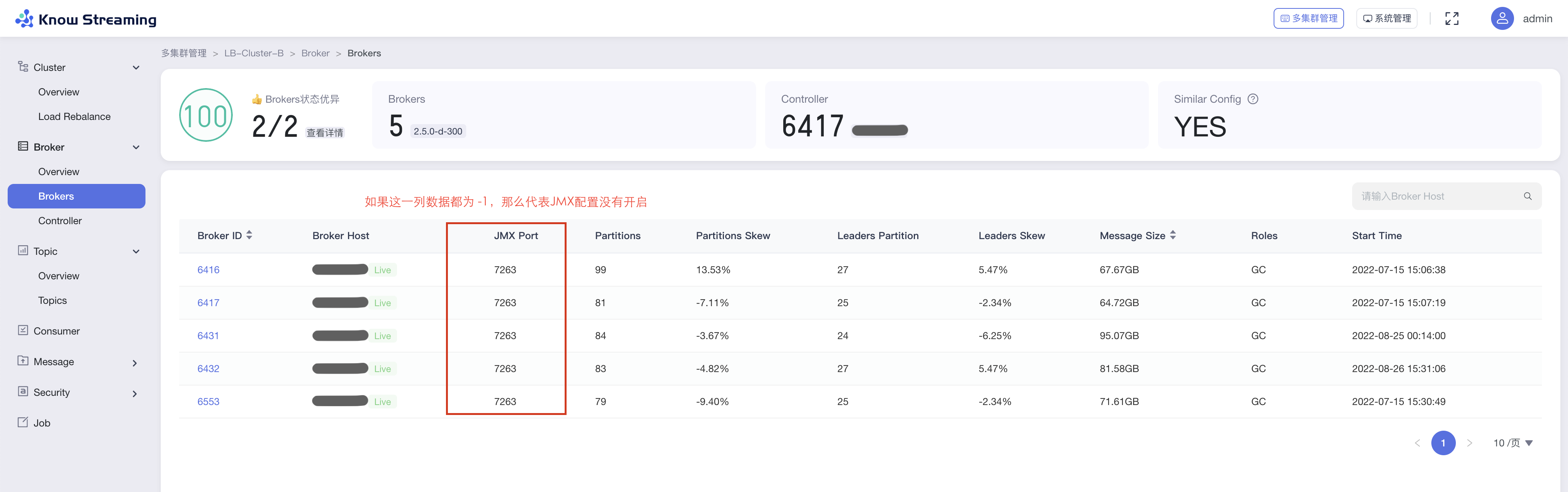

Broker列表的JMX Port列出现红色感叹号,则该Broker的JMX连接异常。

|

|

||||||

|

|

||||||

<img src=http://img-ys011.didistatic.com/static/dc2img/do1_MLlLCfAktne4X6MBtBUd width="90%">

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

#### 2.1.1、原因一:JMX未开启

|

|

||||||

|

|

||||||

##### 2.1.1.1、异常现象

|

|

||||||

|

|

||||||

broker列表的JMX Port值为-1,对应Broker的JMX未开启。

|

|

||||||

|

|

||||||

<img src=http://img-ys011.didistatic.com/static/dc2img/do1_E1PD8tPsMeR2zYLFBFAu width="90%">

|

|

||||||

|

|

||||||

##### 2.1.1.2、解决方案

|

|

||||||

|

|

||||||

开启JMX,开启流程如下:

|

|

||||||

|

|

||||||

1、修改kafka的bin目录下面的:`kafka-server-start.sh`文件

|

|

||||||

|

|

||||||

```

|

|

||||||

# 在这个下面增加JMX端口的配置

|

|

||||||

if [ "x$KAFKA_HEAP_OPTS" = "x" ]; then

|

|

||||||

export KAFKA_HEAP_OPTS="-Xmx1G -Xms1G"

|

|

||||||

export JMX_PORT=9999 # 增加这个配置, 这里的数值并不一定是要9999

|

|

||||||

fi

|

|

||||||

```

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

2、修改kafka的bin目录下面对的:`kafka-run-class.sh`文件

|

|

||||||

|

|

||||||

```

|

|

||||||

# JMX settings
if [ -z "$KAFKA_JMX_OPTS" ]; then
    KAFKA_JMX_OPTS="-Dcom.sun.management.jmxremote -Dcom.sun.management.jmxremote.authenticate=false -Dcom.sun.management.jmxremote.ssl=false -Djava.rmi.server.hostname=<IP of this machine>"
fi

# JMX port to use
if [ $JMX_PORT ]; then
    KAFKA_JMX_OPTS="$KAFKA_JMX_OPTS -Dcom.sun.management.jmxremote.port=$JMX_PORT -Dcom.sun.management.jmxremote.rmi.port=$JMX_PORT"
fi

|

|

||||||

```

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

3、重启Kafka-Broker。

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

#### 2.1.2、原因二:JMX配置错误

|

|

||||||

|

|

||||||

##### 2.1.2.1、异常现象

|

|

||||||

|

|

||||||

错误日志:

|

|

||||||

|

|

||||||

```

|

|

||||||

# 错误一: 错误提示的是真实的IP,这样的话基本就是JMX配置的有问题了。

|

|

||||||

2021-01-27 10:06:20.730 ERROR 50901 --- [ics-Thread-1-62] c.x.k.m.c.utils.jmx.JmxConnectorWrap : JMX connect exception, host:192.168.0.1 port:9999. java.rmi.ConnectException: Connection refused to host: 192.168.0.1; nested exception is:

|

|

||||||

|

|

||||||

# 错误二:错误提示的是127.0.0.1这个IP,这个是机器的hostname配置的可能有问题。

|

|

||||||

2021-01-27 10:06:20.730 ERROR 50901 --- [ics-Thread-1-62] c.x.k.m.c.utils.jmx.JmxConnectorWrap : JMX connect exception, host:127.0.0.1 port:9999. java.rmi.ConnectException: Connection refused to host: 127.0.0.1;; nested exception is:

|

|

||||||

```

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

##### 2.1.2.2、解决方案

|

|

||||||

|

|

||||||

开启JMX,开启流程如下:

|

|

||||||

|

|

||||||

1、修改kafka的bin目录下面的:`kafka-server-start.sh`文件

|

|

||||||

|

|

||||||

```

|

|

||||||

# 在这个下面增加JMX端口的配置

|

|

||||||

if [ "x$KAFKA_HEAP_OPTS" = "x" ]; then

|

|

||||||

export KAFKA_HEAP_OPTS="-Xmx1G -Xms1G"

|

|

||||||

export JMX_PORT=9999 # 增加这个配置, 这里的数值并不一定是要9999

|

|

||||||

fi

|

|

||||||

```

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

2、修改kafka的bin目录下面对的:`kafka-run-class.sh`文件

|

|

||||||

|

|

||||||

```

|

|

||||||

# JMX settings
if [ -z "$KAFKA_JMX_OPTS" ]; then
    KAFKA_JMX_OPTS="-Dcom.sun.management.jmxremote -Dcom.sun.management.jmxremote.authenticate=false -Dcom.sun.management.jmxremote.ssl=false -Djava.rmi.server.hostname=<IP of this machine>"
fi

# JMX port to use
if [ $JMX_PORT ]; then
    KAFKA_JMX_OPTS="$KAFKA_JMX_OPTS -Dcom.sun.management.jmxremote.port=$JMX_PORT -Dcom.sun.management.jmxremote.rmi.port=$JMX_PORT"
fi

|

|

||||||

```

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

3、重启Kafka-Broker。

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

#### 2.1.3、原因三:JMX开启SSL

|

|

||||||

|

|

||||||

##### 2.1.3.1、解决方案

|

|

||||||

|

|

||||||

<img src=http://img-ys011.didistatic.com/static/dc2img/do1_kNyCi8H9wtHSRkWurB6S width="50%">

|

|

||||||

|

|

||||||

#### 2.1.4、原因四:连接了错误IP

|

|

||||||

|

|

||||||

##### 2.1.4.1、异常现象

|

|

||||||

|

|

||||||

Broker 配置了内外网,而JMX在配置时,可能配置了内网IP或者外网IP,此时`KnowStreaming` 需要连接到特定网络的IP才可以进行访问。

|

|

||||||

|

|

||||||

比如:Broker在ZK的存储结构如下所示,我们期望连接到 `endpoints` 中标记为 `INTERNAL` 的地址,但是 `KnowStreaming` 却连接了 `EXTERNAL` 的地址。

|

|

||||||

|

|

||||||

```json

|

|

||||||

{

|

|

||||||

"listener_security_protocol_map": {

|

|

||||||

"EXTERNAL": "SASL_PLAINTEXT",

|

|

||||||

"INTERNAL": "SASL_PLAINTEXT"

|

|

||||||

},

|

|

||||||

"endpoints": [

|

|

||||||

"EXTERNAL://192.168.0.1:7092",

|

|

||||||

"INTERNAL://192.168.0.2:7093"

|

|

||||||

],

|

|

||||||

"jmx_port": 8099,

|

|

||||||

"host": "192.168.0.1",

|

|

||||||

"timestamp": "1627289710439",

|

|

||||||

"port": -1,

|

|

||||||

"version": 4

|

|

||||||

}

|

|

||||||

```

|

|

||||||

|

|

||||||

##### 2.1.4.2、解决方案

|

|

||||||

|

|

||||||

可以手动往`ks_km_physical_cluster`表的`jmx_properties`字段增加一个`useWhichEndpoint`字段,从而控制 `KnowStreaming` 连接到特定的JMX IP及PORT。

|

|

||||||

|

|

||||||

`jmx_properties`格式:

|

|

||||||

|

|

||||||

```json

|

|

||||||

{

|

|

||||||

"maxConn": 100, // KM对单台Broker的最大JMX连接数

|

|

||||||

"username": "xxxxx", //用户名,可以不填写

|

|

||||||

"password": "xxxx", // 密码,可以不填写

|

|

||||||

"openSSL": true, //开启SSL, true表示开启ssl, false表示关闭

|

|

||||||

"useWhichEndpoint": "EXTERNAL" //指定要连接的网络名称,填写EXTERNAL就是连接endpoints里面的EXTERNAL地址

|

|

||||||

}

|

|

||||||

```

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

SQL例子:

|

|

||||||

|

|

||||||

```sql

|

|

||||||

UPDATE ks_km_physical_cluster SET jmx_properties='{ "maxConn": 10, "username": "xxxxx", "password": "xxxx", "openSSL": false , "useWhichEndpoint": "xxx"}' where id={xxx};

|

|

||||||

```
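The effect of `useWhichEndpoint` can be pictured as picking, from the Broker's `endpoints` list, the entry whose listener name matches and using its host for the JMX connection. The sketch below illustrates that idea only; KnowStreaming's actual selection code is not shown in this document, and the function name `endpoint_host` is hypothetical.

```bash
#!/usr/bin/env bash
# Illustrative only; not KnowStreaming's actual selection logic.
# Prints the host of the endpoint whose listener name equals $1.
#   $1: listener name, e.g. INTERNAL
#   remaining arguments: endpoint strings in the form NAME://host:port
endpoint_host() {
  local wanted="$1" ep rest
  shift
  for ep in "$@"; do
    if [[ "$ep" == "$wanted://"* ]]; then
      rest="${ep#*://}"   # strip "NAME://"
      echo "${rest%:*}"   # strip ":port"
      return 0
    fi
  done
  return 1
}

endpoint_host INTERNAL "EXTERNAL://192.168.0.1:7092" "INTERNAL://192.168.0.2:7093"
```

With the endpoints from the example above, asking for `INTERNAL` yields `192.168.0.2`, while `EXTERNAL` would yield `192.168.0.1`.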

|

|

||||||

|

|

||||||

### 2.2、正常情况

|

|

||||||

|

|

||||||

修改完成后,如果看到 JMX PORT这一列全部为绿色,则表示JMX已正常。

|

|

||||||

|

|

||||||

<img src=http://img-ys011.didistatic.com/static/dc2img/do1_ymtDTCiDlzfrmSCez2lx width="90%">

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

## 3、Elasticsearch问题

|

|

||||||

|

|

||||||

Note: on macOS, running the curl commands may produce a zsh error. The following steps can help.

|

|

||||||

|

|

||||||

```

|

|

||||||

1. Open the .zshrc file: vim ~/.zshrc
2. Add to .zshrc: setopt no_nomatch
3. Reload the configuration: source ~/.zshrc

|

|

||||||

```

|

|

||||||

|

|

||||||

### 3.1、原因一:缺少索引

|

|

||||||

|

|

||||||

#### 3.1.1、异常现象

|

|

||||||

|

|

||||||

报错信息

|

|

||||||

|

|

||||||

```

|

|

||||||

com.didiglobal.logi.elasticsearch.client.model.exception.ESIndexNotFoundException: method [GET], host[http://127.0.0.1:9200], URI [/ks_kafka_broker_metric_2022-10-21,ks_kafka_broker_metric_2022-10-22/_search], status line [HTTP/1.1 404 Not Found]

|

|

||||||

```

|

|

||||||

|

|

||||||

curl http://{ES的IP地址}:{ES的端口号}/_cat/indices/ks_kafka* 查看KS索引列表,发现没有索引。

|

|

||||||

|

|

||||||

#### 3.1.2、解决方案

|

|

||||||

|

|

||||||

执行 [ES索引及模版初始化](https://github.com/didi/KnowStreaming/blob/master/bin/init_es_template.sh) 脚本,来创建索引及模版。

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

### 3.2、原因二:索引模板错误

|

|

||||||

|

|

||||||

#### 3.2.1、异常现象

|

|

||||||

|

|

||||||

多集群列表有数据,集群详情页图标无数据。查询KS索引模板列表,发现不存在。

|

|

||||||

|

|

||||||

```

|

|

||||||

curl {ES的IP地址}:{ES的端口号}/_cat/templates/ks_kafka*?v&h=name

|

|

||||||

```

|

|

||||||

|

|

||||||

正常KS模板如下图所示。

|

|

||||||

|

|

||||||

<img src=http://img-ys011.didistatic.com/static/dc2img/do1_l79bPYSci9wr6KFwZDA6 width="90%">

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

#### 3.2.2、解决方案

|

|

||||||

|

|

||||||

删除KS索引模板和索引

|

|

||||||

|

|

||||||

```

|

|

||||||

curl -XDELETE {ES的IP地址}:{ES的端口号}/ks_kafka*

|

|

||||||

curl -XDELETE {ES的IP地址}:{ES的端口号}/_template/ks_kafka*

|

|

||||||

```

|

|

||||||

|

|

||||||

执行 [ES索引及模版初始化](https://github.com/didi/KnowStreaming/blob/master/bin/init_es_template.sh) 脚本,来创建索引及模版。

|

|

||||||

|

|

||||||

|

|

||||||

### 3.3、原因三:集群Shard满

|

|

||||||

|

|

||||||

#### 3.3.1、异常现象

|

|

||||||

|

|

||||||

报错信息

|

|

||||||

|

|

||||||

```

|

|

||||||

com.didiglobal.logi.elasticsearch.client.model.exception.ESIndexNotFoundException: method [GET], host[http://127.0.0.1:9200], URI [/ks_kafka_broker_metric_2022-10-21,ks_kafka_broker_metric_2022-10-22/_search], status line [HTTP/1.1 404 Not Found]

|

|

||||||

```

|

|

||||||

|

|

||||||

尝试手动创建索引失败。

|

|

||||||

|

|

||||||

```

|

|

||||||

#创建ks_kafka_cluster_metric_test索引的指令

|

|

||||||

curl -s -XPUT http://{ES的IP地址}:{ES的端口号}/ks_kafka_cluster_metric_test

|

|

||||||

```

|

|

||||||

|

|

||||||

#### 3.3.2、解决方案

|

|

||||||

|

|

||||||

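By default ES allows at most 1000 shards per node (`cluster.max_shards_per_node`); once that limit is reached, creating an index fails.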

|

|

||||||

|

|

||||||

+ Raise the ES shard limit by running:

|

|

||||||

|

|

||||||

```

|

|

||||||

curl -XPUT -H"content-type:application/json" http://{ES的IP地址}:{ES的端口号}/_cluster/settings -d '

|

|

||||||

{

|

|

||||||

"persistent": {

|

|

||||||

"cluster": {

|

|

||||||

"max_shards_per_node":{索引上限,默认为1000}

|

|

||||||

}

|

|

||||||

}

|

|

||||||

}'

|

|

||||||

```

|

|

||||||

|

|

||||||

执行 [ES索引及模版初始化](https://github.com/didi/KnowStreaming/blob/master/bin/init_es_template.sh) 脚本,来补全索引。
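The settings request above nests the limit under `persistent.cluster.max_shards_per_node`. The following sketch builds that request body for a chosen limit so it can be passed to `curl -d`. The helper name `max_shards_body` and the sample limit of 2000 are assumptions for illustration.

```bash
#!/usr/bin/env bash
# Illustrative helper (hypothetical name): prints the JSON body for the
# _cluster/settings request, with the shard limit given as $1.
max_shards_body() {
  local limit="$1"
  [[ "$limit" =~ ^[0-9]+$ ]] || { echo "limit must be a non-negative integer" >&2; return 1; }
  printf '{"persistent":{"cluster":{"max_shards_per_node":%s}}}\n' "$limit"
}

max_shards_body 2000
```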

|

|

||||||

@@ -2,125 +2,275 @@

|

|||||||

|

|

||||||

|

|

||||||

|

|

||||||

## JMX-连接失败问题解决

|

|

||||||

|

|

||||||

集群正常接入`KnowStreaming`之后,即可以看到集群的Broker列表,此时如果查看不了Topic的实时流量,或者是Broker的实时流量信息时,那么大概率就是`JMX`连接的问题了。

|

## 2、解决连接 JMX 失败

|

||||||

|

|

||||||

下面我们按照步骤来一步一步的检查。

|

- [2、解决连接 JMX 失败](#2解决连接-jmx-失败)

|

||||||

|

- [2.1、正异常现象](#21正异常现象)

|

||||||

### 1、问题说明

|

- [2.2、异因一:JMX未开启](#22异因一jmx未开启)

|

||||||

|

- [2.2.1、异常现象](#221异常现象)

|

||||||

**类型一:JMX配置未开启**

|

- [2.2.2、解决方案](#222解决方案)

|

||||||

|

- [2.3、异原二:JMX配置错误](#23异原二jmx配置错误)

|

||||||

未开启时,直接到`2、解决方法`查看如何开启即可。

|

- [2.3.1、异常现象](#231异常现象)

|

||||||

|

- [2.3.2、解决方案](#232解决方案)

|

||||||

|

- [2.4、异因三:JMX开启SSL](#24异因三jmx开启ssl)

|

||||||

|

- [2.4.1、异常现象](#241异常现象)

|

||||||

|

- [2.4.2、解决方案](#242解决方案)

|

||||||

|

- [2.5、异因四:连接了错误IP](#25异因四连接了错误ip)

|

||||||

|

- [2.5.1、异常现象](#251异常现象)

|

||||||

|

- [2.5.2、解决方案](#252解决方案)

|

||||||

|

- [2.6、异因五:连接了错误端口](#26异因五连接了错误端口)

|

||||||

|

- [2.6.1、异常现象](#261异常现象)

|

||||||

|

- [2.6.2、解决方案](#262解决方案)

|

||||||

|

|

||||||

|

|

||||||

**类型二:配置错误**

|

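Background: Kafka exposes its runtime metrics through JMX, so `KnowStreaming` actively connects to Kafka's JMX service to collect them. If metrics are missing from a page, one possible cause is a misconfigured Kafka JMX port, which makes metric collection fail and leaves the page without data.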

|

||||||

|

|

||||||

`JMX`端口已经开启的情况下,有的时候开启的配置不正确,此时也会导致出现连接失败的问题。这里大概列举几种原因:

|

|

||||||

|

|

||||||

- `JMX`配置错误:见`2、解决方法`。

|

|

||||||

- 存在防火墙或者网络限制:网络通的另外一台机器`telnet`试一下看是否可以连接上。

|

|

||||||

- 需要进行用户名及密码的认证:见`3、解决方法 —— 认证的JMX`。

|

|

||||||

|

|

||||||

|

|

||||||

错误日志例子:

|

### 2.1、正异常现象

|

||||||

```

|

|

||||||

# 错误一: 错误提示的是真实的IP,这样的话基本就是JMX配置的有问题了。

|

**1. Abnormal state**

|

||||||

2021-01-27 10:06:20.730 ERROR 50901 --- [ics-Thread-1-62] c.x.k.m.c.utils.jmx.JmxConnectorWrap : JMX connect exception, host:192.168.0.1 port:9999.

|

|

||||||

java.rmi.ConnectException: Connection refused to host: 192.168.0.1; nested exception is:

|

A red exclamation mark in the JMX PORT column of the Broker list indicates a problem with the JMX connection.

|

||||||

|

|

||||||

|

<img src=http://img-ys011.didistatic.com/static/dc2img/do1_MLlLCfAktne4X6MBtBUd width="90%">

|

||||||

|

|

||||||

|

|

||||||

# 错误二:错误提示的是127.0.0.1这个IP,这个是机器的hostname配置的可能有问题。

|

**2. Normal state**

|

||||||

2021-01-27 10:06:20.730 ERROR 50901 --- [ics-Thread-1-62] c.x.k.m.c.utils.jmx.JmxConnectorWrap : JMX connect exception, host:127.0.0.1 port:9999.

|

|

||||||

java.rmi.ConnectException: Connection refused to host: 127.0.0.1;; nested exception is:

|

|

||||||

```

|

|

||||||

|

|

||||||

**类型三:连接特定IP**

|

A green JMX PORT column in the Broker list indicates that the JMX connection is healthy.

|

||||||

|

|

||||||

Broker 配置了内外网,而JMX在配置时,可能配置了内网IP或者外网IP,此时 `KnowStreaming` 需要连接到特定网络的IP才可以进行访问。

|

<img src=http://img-ys011.didistatic.com/static/dc2img/do1_ymtDTCiDlzfrmSCez2lx width="90%">

|

||||||

|

|

||||||

比如:

|

|

||||||

|

|

||||||

Broker在ZK的存储结构如下所示,我们期望连接到 `endpoints` 中标记为 `INTERNAL` 的地址,但是 `KnowStreaming` 却连接了 `EXTERNAL` 的地址,此时可以看 `4、解决方法 —— JMX连接特定网络` 进行解决。

|

---

|

||||||

|

|

||||||

```json

|

|

||||||

{

|

|

||||||

"listener_security_protocol_map": {"EXTERNAL":"SASL_PLAINTEXT","INTERNAL":"SASL_PLAINTEXT"},

|

|

||||||

"endpoints": ["EXTERNAL://192.168.0.1:7092","INTERNAL://192.168.0.2:7093"],

|

|

||||||

"jmx_port": 8099,

|

|

||||||

"host": "192.168.0.1",

|

|

||||||

"timestamp": "1627289710439",

|

|

||||||

"port": -1,

|

|

||||||

"version": 4

|

|

||||||

}

|

|

||||||

```

|

|

||||||

|

|

||||||

### 2、解决方法

|

|

||||||

|

|

||||||

这里仅介绍一下比较通用的解决方式,如若有更好的方式,欢迎大家指导告知一下。

|

|

||||||

|

|

||||||

修改`kafka-server-start.sh`文件:

|

|

||||||

```

|

|

||||||

|

### 2.2、异因一:JMX未开启

|

||||||

|

|

||||||

|

#### 2.2.1、异常现象

|

||||||

|

|

||||||

|

A JMX Port value of -1 in the Broker list means JMX is not enabled on that Broker.

|

||||||

|

|

||||||

|

<img src=http://img-ys011.didistatic.com/static/dc2img/do1_E1PD8tPsMeR2zYLFBFAu width="90%">

|

||||||

|

|

||||||

|

#### 2.2.2、解决方案

|

||||||

|

|

||||||

|

开启JMX,开启流程如下:

|

||||||

|

|

||||||

|

1、修改kafka的bin目录下面的:`kafka-server-start.sh`文件

|

||||||

|

|

||||||

|

```bash

|

||||||

# 在这个下面增加JMX端口的配置

|

# 在这个下面增加JMX端口的配置

|

||||||

if [ "x$KAFKA_HEAP_OPTS" = "x" ]; then

|

if [ "x$KAFKA_HEAP_OPTS" = "x" ]; then

|

||||||

export KAFKA_HEAP_OPTS="-Xmx1G -Xms1G"

|

export KAFKA_HEAP_OPTS="-Xmx1G -Xms1G"

|

||||||

export JMX_PORT=9999 # 增加这个配置, 这里的数值并不一定是要9999

|

export JMX_PORT=9999 # 增加这个配置, 这里的数值并不一定是要9999

|

||||||

fi

|

fi

|

||||||

```

|

```

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

修改`kafka-run-class.sh`文件

|

2、修改kafka的bin目录下面对的:`kafka-run-class.sh`文件

|

||||||

```

|

|

||||||

# JMX settings

|

```bash

|

||||||

|

# JMX settings

|

||||||

if [ -z "$KAFKA_JMX_OPTS" ]; then

|

if [ -z "$KAFKA_JMX_OPTS" ]; then

|

||||||

KAFKA_JMX_OPTS="-Dcom.sun.management.jmxremote -Dcom.sun.management.jmxremote.authenticate=false -Dcom.sun.management.jmxremote.ssl=false -Djava.rmi.server.hostname=${当前机器的IP}"

|

KAFKA_JMX_OPTS="-Dcom.sun.management.jmxremote -Dcom.sun.management.jmxremote.authenticate=false -Dcom.sun.management.jmxremote.ssl=false -Djava.rmi.server.hostname=当前机器的IP"

|

||||||

fi

|

fi

|

||||||

|

|

||||||

# JMX port to use

|

# JMX port to use

|

||||||

if [ $JMX_PORT ]; then

|

if [ $JMX_PORT ]; then

|

||||||

KAFKA_JMX_OPTS="$KAFKA_JMX_OPTS -Dcom.sun.management.jmxremote.port=$JMX_PORT -Dcom.sun.management.jmxremote.rmi.port=$JMX_PORT"

|

KAFKA_JMX_OPTS="$KAFKA_JMX_OPTS -Dcom.sun.management.jmxremote.port=$JMX_PORT -Dcom.sun.management.jmxremote.rmi.port=$JMX_PORT"

|

||||||

fi

|

fi

|

||||||

```

|

```

|

||||||

|

|

||||||

|

|

||||||

### 3、解决方法 —— 认证的JMX

|

|

||||||

|

|

||||||

如果您是直接看的这个部分,建议先看一下上一节:`2、解决方法`以确保`JMX`的配置没有问题了。

|

3、重启Kafka-Broker。

|

||||||

|

|

||||||

在`JMX`的配置等都没有问题的情况下,如果是因为认证的原因导致连接不了的,可以在集群接入界面配置你的`JMX`认证信息。

|

|

||||||

|

|

||||||

<img src='http://img-ys011.didistatic.com/static/dc2img/do1_EUU352qMEX1Jdp7pxizp' width=350>

|

---

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

### 4、解决方法 —— JMX连接特定网络

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

### 2.3、异原二:JMX配置错误

|

||||||

|

|

||||||

|

#### 2.3.1、异常现象

|

||||||

|

|

||||||

|

错误日志:

|

||||||

|

|

||||||

|

```log

|

||||||

|

# Error 1: the message shows the real IP, which almost always means the JMX configuration is wrong.

|

||||||

|

2021-01-27 10:06:20.730 ERROR 50901 --- [ics-Thread-1-62] c.x.k.m.c.utils.jmx.JmxConnectorWrap : JMX connect exception, host:192.168.0.1 port:9999. java.rmi.ConnectException: Connection refused to host: 192.168.0.1; nested exception is:

|

||||||

|

|

||||||

|

# Error 2: the message shows 127.0.0.1, which suggests the machine's hostname may be misconfigured.

|

||||||

|

2021-01-27 10:06:20.730 ERROR 50901 --- [ics-Thread-1-62] c.x.k.m.c.utils.jmx.JmxConnectorWrap : JMX connect exception, host:127.0.0.1 port:9999. java.rmi.ConnectException: Connection refused to host: 127.0.0.1;; nested exception is:

|

||||||

|

```
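The two log patterns differ only in the host that follows `Connection refused to host:`. The sketch below reads one log line and prints the matching hint; it is a triage aid and not part of KnowStreaming. The function name `classify_jmx_error` and the hint wording are assumptions.

```bash
#!/usr/bin/env bash
# Illustrative triage helper for the two log patterns; not part of KnowStreaming.
# Prints a hint based on the host in "Connection refused to host: <ip>".
classify_jmx_error() {
  local line="$1" host
  local pattern='Connection refused to host: ([0-9.]+)'
  if [[ "$line" =~ $pattern ]]; then
    host="${BASH_REMATCH[1]}"
    if [[ "$host" == "127.0.0.1" ]]; then
      echo "hostname: check the hostname configuration of the Broker machine"
    else
      echo "jmx-config: check the JMX settings of the Broker"
    fi
  else
    echo "unknown"
  fi
}

classify_jmx_error "java.rmi.ConnectException: Connection refused to host: 192.168.0.1; nested exception is:"
classify_jmx_error "java.rmi.ConnectException: Connection refused to host: 127.0.0.1;; nested exception is:"
```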

|

||||||

|

|

||||||

|

#### 2.3.2、解决方案

|

||||||

|

|

||||||

|

开启JMX,开启流程如下:

|

||||||

|

|

||||||

|

1. Edit the `kafka-server-start.sh` file in Kafka's `bin` directory:

|

||||||

|

|

||||||

|

```bash

|

||||||

|

# Add the JMX port setting inside this block

|

||||||

|

if [ "x$KAFKA_HEAP_OPTS" = "x" ]; then

|

||||||

|

export KAFKA_HEAP_OPTS="-Xmx1G -Xms1G"

|

||||||

|

export JMX_PORT=9999 # Add this line; the port does not have to be 9999

|

||||||

|

fi

|

||||||

|

```

|

||||||

|

|

||||||

|

2. Edit the `kafka-run-class.sh` file in Kafka's `bin` directory:

|

||||||

|

|

||||||

|

```bash

|

||||||

|

# JMX settings

|

||||||

|

if [ -z "$KAFKA_JMX_OPTS" ]; then

|

||||||

|

KAFKA_JMX_OPTS="-Dcom.sun.management.jmxremote -Dcom.sun.management.jmxremote.authenticate=false -Dcom.sun.management.jmxremote.ssl=false -Djava.rmi.server.hostname=<this-machine-IP>"

|

||||||

|

fi

|

||||||

|

|

||||||

|

# JMX port to use

|

||||||

|

if [ $JMX_PORT ]; then

|

||||||

|

KAFKA_JMX_OPTS="$KAFKA_JMX_OPTS -Dcom.sun.management.jmxremote.port=$JMX_PORT -Dcom.sun.management.jmxremote.rmi.port=$JMX_PORT"

|

||||||

|

fi

|

||||||

|

```

|

||||||

|

|

||||||

|

3. Restart the Kafka broker.

|

||||||

|

|

||||||

|

|

||||||

|

---

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

### 2.4 Cause 3: SSL enabled on JMX

|

||||||

|

|

||||||

|

#### 2.4.1 Symptoms

|

||||||

|

|

||||||

|

```log

|

||||||

|

# The JMX connection log contains SSL authentication failures. TODO: concrete log samples are welcome.

|

||||||

|

```

|

||||||

|

|

||||||

|

#### 2.4.2 Solution

|

||||||

|

|

||||||

|

<img src=http://img-ys011.didistatic.com/static/dc2img/do1_kNyCi8H9wtHSRkWurB6S width="50%">

|

||||||

|

|

||||||

|

|

||||||

|

---

|

||||||

|

|

||||||

|

|

||||||

|

### 2.5 Cause 4: Connected to the wrong IP

|

||||||

|

|

||||||

|

#### 2.5.1 Symptoms

|

||||||

|

|

||||||

|

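The broker is configured with both an internal and an external network, and JMX may be bound to either the internal or the external IP. `KnowStreaming` must then connect to the IP on that specific network to reach JMX.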

|

||||||

|

|

||||||

|

For example, given the broker registration stored in ZK shown below, we want to connect to the address marked `INTERNAL` in `endpoints`, but `KnowStreaming` connects to the `EXTERNAL` address instead.

|

||||||

|

|

||||||

|

```json

|

||||||

|

{

|

||||||

|

"listener_security_protocol_map": {

|

||||||

|

"EXTERNAL": "SASL_PLAINTEXT",

|

||||||

|

"INTERNAL": "SASL_PLAINTEXT"

|

||||||

|

},

|

||||||

|

"endpoints": [

|

||||||

|

"EXTERNAL://192.168.0.1:7092",

|

||||||

|

"INTERNAL://192.168.0.2:7093"

|

||||||

|

],

|

||||||

|

"jmx_port": 8099,

|

||||||

|

"host": "192.168.0.1",

|

||||||

|

"timestamp": "1627289710439",

|

||||||

|

"port": -1,

|

||||||

|

"version": 4

|

||||||

|

}

|

||||||

|

```

|

||||||

|

|

||||||

|

#### 2.5.2 Solution

|

||||||

|

|

||||||

Manually add a `useWhichEndpoint` key to the `jmx_properties` column of the `ks_km_physical_cluster` table to control which JMX IP and port `KnowStreaming` connects to.

|

Manually add a `useWhichEndpoint` key to the `jmx_properties` column of the `ks_km_physical_cluster` table to control which JMX IP and port `KnowStreaming` connects to.

|

||||||

|

|

||||||

`jmx_properties` format:

|

`jmx_properties` format:

|

||||||

|

|

||||||

```json

|

```json

|

||||||

{

|

{

|

||||||

"maxConn": 100, # Maximum number of JMX connections KM opens to a single broker

|

"maxConn": 100, // Maximum number of JMX connections KM opens to a single broker

|

||||||

"username": "xxxxx", # Username; optional

|

"username": "xxxxx", // Username; optional

|

||||||

"password": "xxxx", # Password; optional

|

"password": "xxxx", // Password; optional

|

||||||

"openSSL": true, # Whether SSL is enabled: true enables it, false disables it

|

"openSSL": true, // Whether SSL is enabled: true enables it, false disables it

|

||||||

"useWhichEndpoint": "EXTERNAL" # Name of the network to connect to; EXTERNAL selects the EXTERNAL address in endpoints

|

"useWhichEndpoint": "EXTERNAL" // Name of the network to connect to; EXTERNAL selects the EXTERNAL address in endpoints

|

||||||

}

|

}

|

||||||

```

|

```

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

SQL example:

|

SQL example:

|

||||||

|

|

||||||

```sql

|

```sql

|

||||||

UPDATE ks_km_physical_cluster SET jmx_properties='{ "maxConn": 10, "username": "xxxxx", "password": "xxxx", "openSSL": false , "useWhichEndpoint": "xxx"}' where id={xxx};

|

UPDATE ks_km_physical_cluster SET jmx_properties='{ "maxConn": 10, "username": "xxxxx", "password": "xxxx", "openSSL": false , "useWhichEndpoint": "xxx"}' where id={xxx};

|

||||||

```

|

```

|

||||||
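To make the effect of `useWhichEndpoint` concrete, here is a minimal sketch of the selection it describes, run against the ZK broker registration shown earlier. `pick_jmx_host` is a hypothetical helper, and the fallback to the top-level `host` field is an assumption; this is not KnowStreaming's actual implementation.

```python
import json

# Broker registration as stored in ZK (copied from the example in 2.5.1).
BROKER_INFO = json.loads("""
{
  "listener_security_protocol_map": {"EXTERNAL": "SASL_PLAINTEXT", "INTERNAL": "SASL_PLAINTEXT"},
  "endpoints": ["EXTERNAL://192.168.0.1:7092", "INTERNAL://192.168.0.2:7093"],
  "jmx_port": 8099,
  "host": "192.168.0.1",
  "timestamp": "1627289710439",
  "port": -1,
  "version": 4
}
""")


def pick_jmx_host(broker_info, use_which_endpoint=None):
    """Return the host of the endpoint whose listener name matches."""
    if use_which_endpoint:
        for endpoint in broker_info["endpoints"]:
            listener, _, address = endpoint.partition("://")
            if listener == use_which_endpoint:
                host, _, _port = address.rpartition(":")
                return host
    # Assumed fallback: the top-level "host" field when nothing matches.
    return broker_info["host"]
```

With `"useWhichEndpoint": "INTERNAL"`, this sketch yields `192.168.0.2`, the address the example in 2.5.1 expects.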

|

|

||||||

Note:

|

|

||||||

|

|

||||||

+ This feature currently supports only Kafka clusters that use `ZK` for distributed coordination.

|

---

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

### 2.6 Cause 5: Connected to the wrong port

|

||||||

|

|

||||||

|

Only versions later than 3.3.0, or the latest code on the master branch, have this capability.

|

||||||

|

|

||||||

|

#### 2.6.1 Symptoms

|

||||||

|

|

||||||

|

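Kafka brokers running on AWS or in containers may share a single IP, so an external service can reach each broker's JMX port only through a port mapping. If KnowStreaming connects directly to the JMX port it reads from ZK, the connection fails, so the port it connects to must be configurable.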

|

||||||

|

|

||||||

|

TODO: add concrete log samples.

|

||||||

|

|

||||||

|

|

||||||

|

#### 2.6.2 Solution

|

||||||

|

|

||||||

|

Manually add a `specifiedJmxPortList` key to the `jmx_properties` column of the `ks_km_physical_cluster` table to control which JMX port `KnowStreaming` connects to.

|

||||||

|

|

||||||

|

`jmx_properties` format:

|

||||||

|

```json

|

||||||

|

{

|

||||||

|

"jmxPort": 2445, // JMX port used with the lowest priority

|

||||||

|

"maxConn": 100, // Maximum number of JMX connections KM opens to a single broker

|

||||||

|

"username": "xxxxx", // Username; optional

|

||||||

|

"password": "xxxx", // Password; optional

|

||||||

|

"openSSL": true, // Whether SSL is enabled: true enables it, false disables it

|

||||||

|

"useWhichEndpoint": "EXTERNAL", // Name of the network to connect to; EXTERNAL selects the EXTERNAL address in endpoints

|

||||||

|

"specifiedJmxPortList": [ // JMX ports used with the highest priority

|

||||||

|

{

|

||||||

|

"serverId": "1", // brokerId of the Kafka broker; note that this is a string, for compatibility with JMX port connections to Connect

|

||||||

|

"jmxPort": 1234 // JMX port to connect to for this broker

|

||||||

|

},

|

||||||

|

{

|

||||||

|

"serverId": "2",

|

||||||

|

"jmxPort": 1234

|

||||||

|

}

|

||||||

|

]

|

||||||

|

}

|

||||||

|

```

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

SQL example:

|

||||||

|

|

||||||

|

```sql

|

||||||

|

UPDATE ks_km_physical_cluster SET jmx_properties='{ "maxConn": 10, "username": "xxxxx", "password": "xxxx", "openSSL": false , "specifiedJmxPortList": [{"serverId": "1", "jmxPort": 1234}] }' where id={xxx};

|

||||||

|

```

|

||||||
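The priority order implied by the comments above (`specifiedJmxPortList` first, top-level `jmxPort` last) can be sketched as follows. `resolve_jmx_port` is a hypothetical helper, and treating the port discovered from ZK as the middle priority is an assumption, not confirmed behavior of KnowStreaming.

```python
import json

JMX_PROPERTIES = json.loads(
    '{"jmxPort": 2445, "maxConn": 100, '
    '"specifiedJmxPortList": [{"serverId": "1", "jmxPort": 1234}]}'
)


def resolve_jmx_port(jmx_properties, server_id, discovered_port=None):
    """Pick the JMX port for one broker according to the assumed priority."""
    # Highest priority: an explicit per-broker entry (serverId is a string).
    for entry in jmx_properties.get("specifiedJmxPortList", []):
        if entry["serverId"] == str(server_id):
            return entry["jmxPort"]
    # Assumed middle priority: the port discovered from ZK, if it is valid.
    if discovered_port is not None and discovered_port > 0:
        return discovered_port
    # Lowest priority: the cluster-wide default, or None if it is absent.
    return jmx_properties.get("jmxPort")
```

Because `serverId` is stored as a string, the sketch normalizes the broker id with `str()` before comparing, so both `1` and `"1"` match the same entry.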

|

|

||||||

|

|

||||||

|

---

|

||||||

|

|||||||

183

docs/dev_guide/页面无数据排查手册.md

Normal file

183

docs/dev_guide/页面无数据排查手册.md

Normal file

@@ -0,0 +1,183 @@

|

|||||||

|

|

||||||

|

|

||||||

|

# Troubleshooting Guide: Pages Showing No Data

|

||||||

|

|

||||||

|

- [Troubleshooting Guide: Pages Showing No Data](#页面无数据排查手册)

|

||||||

|

- [1. Cluster onboarding errors](#1集群接入错误)

|

||||||

|

- [1.1 Symptoms](#11异常现象)

|

||||||

|

- [1.2 Solution](#12解决方案)

|

||||||

|

- [1.3 Normal case](#13正常情况)

|

||||||

|

- [2. JMX connection failures](#2jmx连接失败)

|

||||||

|

- [3. ElasticSearch problems](#3elasticsearch问题)

|

||||||

|

- [3.1 Cause 1: Missing indices](#31异因一缺少索引)

|

||||||

|

- [3.1.1 Symptoms](#311异常现象)

|

||||||

|

- [3.1.2 Solution](#312解决方案)

|

||||||

|

- [3.2 Cause 2: Broken index templates](#32异因二索引模板错误)

|

||||||

|

- [3.2.1 Symptoms](#321异常现象)

|

||||||

|

- [3.2.2 Solution](#322解决方案)

|

||||||

|

- [3.3 Cause 3: Cluster shard limit reached](#33异因三集群shard满)

|

||||||

|

- [3.3.1 Symptoms](#331异常现象)

|

||||||

|

- [3.3.2 Solution](#332解决方案)

|

||||||

|

|

||||||

|

|

||||||

|

---

|

||||||

|

|

||||||

|

## 1. Cluster onboarding errors

|

||||||

|

|

||||||

|

### 1.1 Symptoms

|

||||||

|

|

||||||

|

As shown below, if the cluster is not actually empty, the most likely cause is a misconfigured address.

|

||||||

|

|

||||||

|

<img src=http://img-ys011.didistatic.com/static/dc2img/do1_BRiXBvqYFK2dxSF1aqgZ width="80%">

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

### 1.2 Solution

|

||||||

|

|

||||||

|

When onboarding the cluster, resolve the problem according to the error shown. For example:

|

||||||

|

|

||||||

|

<img src=http://img-ys011.didistatic.com/static/dc2img/do1_Yn4LhV8aeSEKX1zrrkUi width="50%">

|

||||||

|

|

||||||

|

### 1.3 Normal case

|

||||||

|

|

||||||

|

When the cluster is onboarded correctly, the page fields populate automatically and no error is shown.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

---

|

||||||

|

|

||||||

|

## 2. JMX connection failures

|

||||||

|

|

||||||

|

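Background: Kafka exposes its runtime metrics through JMX, so `KnowStreaming` actively connects to Kafka's JMX service to collect them. If metrics are missing from a page, one possible cause is a misconfigured Kafka JMX port: metric collection fails, and the page has no data.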

|

||||||

|

|

||||||

|

|

||||||

|

For details, see the document in the same directory: [Resolving JMX connection failures](./%E8%A7%A3%E5%86%B3%E8%BF%9E%E6%8E%A5JMX%E5%A4%B1%E8%B4%A5.md)

|

||||||

|

|

||||||

|

|

||||||

|

---

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

## 3. ElasticSearch problems

|

||||||

|

|

||||||

|

**Background:**

|

||||||

|

`KnowStreaming` stores the metrics it collects from Kafka in ES, so problems with ES can also leave pages without data.

|

||||||

|

|

||||||

|

**Logs:**

|

||||||

|

`KnowStreaming` writes its ES read/write logs to `logs/es/es.log`.

|

||||||

|

|

||||||

|

|

||||||

|

**Note:**

|

||||||

|

On macOS, running the curl commands may fail with a zsh error. The following steps work around it.

|

||||||

|

|

||||||

|

```bash

|

||||||

|

vim ~/.zshrc # 1. Open the .zshrc file

|

||||||

|

# 2. Add this line to .zshrc: setopt no_nomatch

|

||||||

|

source ~/.zshrc # 3. Reload the configuration

|

||||||

|

```

|

||||||

|

|

||||||

|

---

|

||||||

|

|

||||||

|

### 3.1 Cause 1: Missing indices

|

||||||

|

|

||||||

|

#### 3.1.1 Symptoms

|

||||||

|

|

||||||

|

Error message:

|

||||||

|

|

||||||

|

```log

|

||||||

|

# Log location: logs/es/es.log

|

||||||

|

com.didiglobal.logi.elasticsearch.client.model.exception.ESIndexNotFoundException: method [GET], host[http://127.0.0.1:9200], URI [/ks_kafka_broker_metric_2022-10-21,ks_kafka_broker_metric_2022-10-22/_search], status line [HTTP/1.1 404 Not Found]

|

||||||

|

```

|

||||||

|

|

||||||

|

|

||||||

|

Listing the KS indices with `curl http://{ES_IP}:{ES_PORT}/_cat/indices/ks_kafka*` returns no indices.

|

||||||
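The index names in the error above appear to follow a `<prefix>_<yyyy-MM-dd>` pattern, one index per day. As a sketch under that assumption (inferred only from the log line), the names that should exist for a date range can be generated and compared with the `_cat/indices` output. `daily_index_names` is a hypothetical helper.

```python
from datetime import date, timedelta


def daily_index_names(prefix, start, end):
    """List one index name per day from start to end, inclusive."""
    day_count = (end - start).days + 1
    return [
        f"{prefix}_{(start + timedelta(days=offset)).isoformat()}"
        for offset in range(day_count)
    ]


expected = daily_index_names(
    "ks_kafka_broker_metric", date(2022, 10, 21), date(2022, 10, 22)
)
```

Any name in `expected` that is missing from the index listing is a candidate for the `ESIndexNotFoundException` shown above.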

|

|

||||||

|

#### 3.1.2 Solution

|

||||||

|

|

||||||

|

Run the [ES index and template initialization](https://github.com/didi/KnowStreaming/blob/master/bin/init_es_template.sh) script to create the indices and templates.

|

||||||

|

|

||||||

|

|

||||||

|

---

|

||||||

|

|

||||||

|

|

||||||

|

### 3.2 Cause 2: Broken index templates

|

||||||

|

|

||||||

|

#### 3.2.1 Symptoms

|

||||||

|

|

||||||

|

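The multi-cluster list shows data, but the charts on the cluster detail page are empty. Querying the list of KS index templates shows that none exist.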

|

||||||

|

|

||||||

|

```bash

|

||||||

|

curl '{ES_IP}:{ES_PORT}/_cat/templates/ks_kafka*?v&h=name'

|

||||||

|

```

|

||||||

|

|

||||||

|

A healthy set of KS templates looks like the figure below.

|

||||||

|

|

||||||

|

<img src=http://img-ys011.didistatic.com/static/dc2img/do1_l79bPYSci9wr6KFwZDA6 width="90%">

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

#### 3.2.2 Solution

|

||||||

|

|

||||||

|

Delete the KS index templates and indices:

|

||||||

|

|

||||||

|

```bash

|

||||||

|

curl -XDELETE '{ES_IP}:{ES_PORT}/ks_kafka*'

|

||||||

|

curl -XDELETE '{ES_IP}:{ES_PORT}/_template/ks_kafka*'

|

||||||

|

```

|

||||||

|

|

||||||

|

Run the [ES index and template initialization](https://github.com/didi/KnowStreaming/blob/master/bin/init_es_template.sh) script to create the indices and templates.

|

||||||

|

|

||||||

|

|

||||||

|

---

|

||||||

|

|

||||||

|

|

||||||

|

### 3.3 Cause 3: Cluster shard limit reached

|

||||||

|

|

||||||

|

#### 3.3.1 Symptoms

|

||||||

|

|

||||||

|

Error message:

|

||||||

|

|

||||||

|

```log

|

||||||

|

# Log location: logs/es/es.log

|

||||||

|

|

||||||

|

{"error":{"root_cause":[{"type":"validation_exception","reason":"Validation Failed: 1: this action would add [4] total shards, but this cluster currently has [1000]/[1000] maximum shards open;"}],"type":"validation_exception","reason":"Validation Failed: 1: this action would add [4] total shards, but this cluster currently has [1000]/[1000] maximum shards open;"},"status":400}

|

||||||

|

```

|

||||||

|

|

||||||

|

Manually creating an index also fails:

|

||||||

|

|

||||||

|

```bash

|

||||||

|

# Command to create the ks_kafka_cluster_metric_test index

|

||||||

|

curl -s -XPUT http://{ES_IP}:{ES_PORT}/ks_kafka_cluster_metric_test

|

||||||

|

```

|

||||||

|

|

||||||

|

|

||||||

|

#### 3.3.2 Solution

|

||||||

|

|

||||||

|

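By default, ES caps the number of open shards at 1000 per data node (`cluster.max_shards_per_node`). Once the cluster reaches that limit, index creation fails.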

|

||||||

|

|

||||||

|

+ Raise the ES shard limit by running:

|

||||||

|

|

||||||

|

```

|

||||||

|

curl -XPUT -H"content-type:application/json" http://{ES_IP}:{ES_PORT}/_cluster/settings -d '

|

||||||

|

{

|

||||||

|

"persistent": {

|

||||||

|

"cluster": {

|

||||||

|

"max_shards_per_node": {shard limit; the default is 1000, and it can be raised to 10000 for testing}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}'

|

||||||

|

```

|

||||||
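The same settings update can be prepared from a script. A minimal sketch using only the Python standard library, assuming an ES instance reachable over plain HTTP without authentication; `build_shard_limit_request` is a hypothetical helper, and the host, port, and limit are placeholders.

```python
import json
import urllib.request


def build_shard_limit_request(es_host, es_port, max_shards_per_node):
    """Build (but do not send) the PUT request that raises the shard limit."""
    body = {"persistent": {"cluster": {"max_shards_per_node": max_shards_per_node}}}
    return urllib.request.Request(
        url=f"http://{es_host}:{es_port}/_cluster/settings",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


request = build_shard_limit_request("127.0.0.1", 9200, 10000)
```

Passing `request` to `urllib.request.urlopen` sends it. Because the setting is written under `persistent`, it survives a cluster restart.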

|

|

||||||

|

Run the [ES index and template initialization](https://github.com/didi/KnowStreaming/blob/master/bin/init_es_template.sh) script to create the missing indices.

|

||||||

|

|

||||||

|

|

||||||

@@ -6,6 +6,54 @@

|

|||||||

|

|

||||||

### Upgrading to `master`

|

### Upgrading to `master`

|

||||||

|

|

||||||

|

**Configuration changes**

|

||||||

|

|

||||||

|

```yaml

|

||||||

|

# Newly added configuration

|

||||||

|

request: # Request-related settings

|

||||||

|

  api-call: # API calls

|

||||||

|

    timeout-unit-ms: 8000 # Timeout in milliseconds; default 8000

|

||||||

|

```

|

||||||

|

|

||||||

|

**SQL changes**

|

||||||

|

```sql

|

||||||

|

-- Multi-cluster management permissions, added 2023-06-27

|

||||||

|

INSERT INTO `logi_security_permission` (`id`, `permission_name`, `parent_id`, `leaf`, `level`, `description`, `is_delete`, `app_name`) VALUES ('2026', 'Connector-新增', '1593', '1', '2', 'Connector-新增', '0', 'know-streaming');

|

||||||

|

INSERT INTO `logi_security_permission` (`id`, `permission_name`, `parent_id`, `leaf`, `level`, `description`, `is_delete`, `app_name`) VALUES ('2028', 'Connector-编辑', '1593', '1', '2', 'Connector-编辑', '0', 'know-streaming');

|

||||||

|

INSERT INTO `logi_security_permission` (`id`, `permission_name`, `parent_id`, `leaf`, `level`, `description`, `is_delete`, `app_name`) VALUES ('2030', 'Connector-删除', '1593', '1', '2', 'Connector-删除', '0', 'know-streaming');

|

||||||

|

INSERT INTO `logi_security_permission` (`id`, `permission_name`, `parent_id`, `leaf`, `level`, `description`, `is_delete`, `app_name`) VALUES ('2032', 'Connector-重启', '1593', '1', '2', 'Connector-重启', '0', 'know-streaming');

|

||||||

|

INSERT INTO `logi_security_permission` (`id`, `permission_name`, `parent_id`, `leaf`, `level`, `description`, `is_delete`, `app_name`) VALUES ('2034', 'Connector-暂停&恢复', '1593', '1', '2', 'Connector-暂停&恢复', '0', 'know-streaming');

|

||||||

|

|

||||||

|

INSERT INTO `logi_security_role_permission` (`role_id`, `permission_id`, `is_delete`, `app_name`) VALUES ('1677', '2026', '0', 'know-streaming');

|

||||||

|

INSERT INTO `logi_security_role_permission` (`role_id`, `permission_id`, `is_delete`, `app_name`) VALUES ('1677', '2028', '0', 'know-streaming');

|

||||||

|

INSERT INTO `logi_security_role_permission` (`role_id`, `permission_id`, `is_delete`, `app_name`) VALUES ('1677', '2030', '0', 'know-streaming');

|

||||||

|

INSERT INTO `logi_security_role_permission` (`role_id`, `permission_id`, `is_delete`, `app_name`) VALUES ('1677', '2032', '0', 'know-streaming');

|

||||||

|

INSERT INTO `logi_security_role_permission` (`role_id`, `permission_id`, `is_delete`, `app_name`) VALUES ('1677', '2034', '0', 'know-streaming');

|

||||||

|

|

||||||

|

|

||||||

|

-- Multi-cluster management permissions, added 2023-06-29

|

||||||

|

INSERT INTO `logi_security_permission` (`id`, `permission_name`, `parent_id`, `leaf`, `level`, `description`, `is_delete`, `app_name`) VALUES ('2036', 'Security-ACL新增', '1593', '1', '2', 'Security-ACL新增', '0', 'know-streaming');

|

||||||

|

INSERT INTO `logi_security_permission` (`id`, `permission_name`, `parent_id`, `leaf`, `level`, `description`, `is_delete`, `app_name`) VALUES ('2038', 'Security-ACL删除', '1593', '1', '2', 'Security-ACL删除', '0', 'know-streaming');

|

||||||

|

INSERT INTO `logi_security_permission` (`id`, `permission_name`, `parent_id`, `leaf`, `level`, `description`, `is_delete`, `app_name`) VALUES ('2040', 'Security-User新增', '1593', '1', '2', 'Security-User新增', '0', 'know-streaming');

|

||||||

|

INSERT INTO `logi_security_permission` (`id`, `permission_name`, `parent_id`, `leaf`, `level`, `description`, `is_delete`, `app_name`) VALUES ('2042', 'Security-User删除', '1593', '1', '2', 'Security-User删除', '0', 'know-streaming');

|

||||||

|

INSERT INTO `logi_security_permission` (`id`, `permission_name`, `parent_id`, `leaf`, `level`, `description`, `is_delete`, `app_name`) VALUES ('2044', 'Security-User修改密码', '1593', '1', '2', 'Security-User修改密码', '0', 'know-streaming');

|

||||||

|

|

||||||

|

INSERT INTO `logi_security_role_permission` (`role_id`, `permission_id`, `is_delete`, `app_name`) VALUES ('1677', '2036', '0', 'know-streaming');

|

||||||

|

INSERT INTO `logi_security_role_permission` (`role_id`, `permission_id`, `is_delete`, `app_name`) VALUES ('1677', '2038', '0', 'know-streaming');

|

||||||

|

INSERT INTO `logi_security_role_permission` (`role_id`, `permission_id`, `is_delete`, `app_name`) VALUES ('1677', '2040', '0', 'know-streaming');

|

||||||

|

INSERT INTO `logi_security_role_permission` (`role_id`, `permission_id`, `is_delete`, `app_name`) VALUES ('1677', '2042', '0', 'know-streaming');

|

||||||

|

INSERT INTO `logi_security_role_permission` (`role_id`, `permission_id`, `is_delete`, `app_name`) VALUES ('1677', '2044', '0', 'know-streaming');

|

||||||

|

|

||||||

|

|

||||||

|

-- Multi-cluster management permissions, added 2023-07-06

|

||||||

|

INSERT INTO `logi_security_permission` (`id`, `permission_name`, `parent_id`, `leaf`, `level`, `description`, `is_delete`, `app_name`) VALUES ('2046', 'Group-删除', '1593', '1', '2', 'Group-删除', '0', 'know-streaming');

|

||||||

|

INSERT INTO `logi_security_permission` (`id`, `permission_name`, `parent_id`, `leaf`, `level`, `description`, `is_delete`, `app_name`) VALUES ('2048', 'GroupOffset-Topic纬度删除', '1593', '1', '2', 'GroupOffset-Topic纬度删除', '0', 'know-streaming');

|

||||||

|

INSERT INTO `logi_security_permission` (`id`, `permission_name`, `parent_id`, `leaf`, `level`, `description`, `is_delete`, `app_name`) VALUES ('2050', 'GroupOffset-Partition纬度删除', '1593', '1', '2', 'GroupOffset-Partition纬度删除', '0', 'know-streaming');

|

||||||

|

|

||||||

|

INSERT INTO `logi_security_role_permission` (`role_id`, `permission_id`, `is_delete`, `app_name`) VALUES ('1677', '2046', '0', 'know-streaming');

|

||||||

|

INSERT INTO `logi_security_role_permission` (`role_id`, `permission_id`, `is_delete`, `app_name`) VALUES ('1677', '2048', '0', 'know-streaming');

|

||||||

|

INSERT INTO `logi_security_role_permission` (`role_id`, `permission_id`, `is_delete`, `app_name`) VALUES ('1677', '2050', '0', 'know-streaming');

|

||||||

|

```

|

||||||

|

|

||||||

### Upgrading to `3.3.0`

|

### Upgrading to `3.3.0`

|

||||||

|

|

||||||

|

|||||||

@@ -1,13 +1,35 @@

|

|||||||

|

|

||||||

|

|

||||||

|

|

||||||

# FAQ

|

# FAQ

|

||||||

|

|

||||||

## 8.1 Which Kafka versions are supported?

|

- [FAQ](#faq)

|

||||||

|

- [1. Which Kafka versions are supported?](#1支持哪些-kafka-版本)

|

||||||

|

- [2. How do the 2.x and 3.0 versions differ?](#12x-版本和-30-版本有什么差异)

|

||||||

|

- [3. Why do pages show no data, such as traffic information?](#3页面流量信息等无数据)

|

||||||

|

- [8.4 How do I fix `Jmx` connection failures?](#84jmx连接失败如何解决)

|

||||||

|

- [5. Is there API documentation?](#5有没有-api-文档)

|

||||||

|

- [6. Why does a Topic reappear some time after it was deleted successfully?](#6删除-topic-成功后为何过段时间又出现了)

|

||||||

|

- [7. How can the API be called without logging in?](#7如何在不登录的情况下调用接口)

|

||||||

|

- [8. Specified key was too long; max key length is 767 bytes](#8specified-key-was-too-long-max-key-length-is-767-bytes)

|

||||||

|

- [9. ESIndexNotFoundException errors](#9出现-esindexnotfoundexception-报错)

|

||||||

|

- [10. km-console build fails](#10km-console-打包构建失败)

|

||||||

|

- [11. Why are the application build and hot-reload output not visible when running `npm run start` in the `km-console` directory? How do I start a single application?](#11在-km-console-目录下执行-npm-run-start-时看不到应用构建和热加载过程如何启动单个应用)

|

||||||

|

- [12. Permission recognition failures](#12权限识别失败问题)

|

||||||

|

- [13. Connecting a Kafka cluster with Kerberos authentication enabled](#13接入开启kerberos认证的kafka集群)

|

||||||

|

- [14. Configuring LDAP integration](#14对接ldap的配置)

|

||||||

|

- [15. Notes on using Testcontainers in tests](#15测试时使用testcontainers的说明)

|

||||||

|

- [16. What to do when the JMX connection fails](#16jmx连接失败怎么办)

|

||||||

|

- [17. ZK monitoring shows no data](#17zk监控无数据问题)

|

||||||

|

|

||||||

|

## 1. Which Kafka versions are supported?

|

||||||

|

|

||||||

- Kafka 0.10 and later are supported;

|

- Kafka 0.10 and later are supported;

|

||||||

- Kafka running in either ZK or Raft mode is supported;

|

- Kafka running in either ZK or Raft mode is supported;

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

## 8.1 How do the 2.x and 3.0 versions differ?

|

## 2. How do the 2.x and 3.0 versions differ?

|

||||||

|

|

||||||

**A completely new design philosophy**

|

**A completely new design philosophy**

|

||||||

|

|

||||||

@@ -23,7 +45,7 @@

|

|||||||

|

|

||||||

|

|

||||||

|

|

||||||

## 8.3 Why do pages show no data, such as traffic information?

|

## 3. Why do pages show no data, such as traffic information?

|

||||||

|

|

||||||

- 1. `Broker JMX` is not enabled correctly

|

- 1. `Broker JMX` is not enabled correctly

|

||||||

|

|

||||||

@@ -41,7 +63,7 @@

|

|||||||

|

|

||||||

|

|

||||||

|

|

||||||

## 8.5 Is there API documentation?

|

## 5. Is there API documentation?

|

||||||

|

|

||||||

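`KnowStreaming` documents its API with Swagger. Once the KnowStreaming service is running, the documentation is available at the address below.

|

`KnowStreaming` documents its API with Swagger. Once the KnowStreaming service is running, the documentation is available at the address below.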

|

||||||

|

|

||||||

@@ -49,7 +71,7 @@ Swagger-API 地址: [http://IP:PORT/swagger-ui.html#/](http://IP:PORT/swagger-

|

|||||||

|

|

||||||

|

|

||||||

|

|

||||||

## 8.6 Why does a Topic reappear some time after it was deleted successfully?

|

## 6. Why does a Topic reappear some time after it was deleted successfully?

|

||||||

|

|

||||||

**Cause:**

|

**Cause:**

|

||||||

|

|

||||||

@@ -74,7 +96,7 @@ for (int i= 0; i < 100000; ++i) {

|

|||||||

|

|

||||||

|

|

||||||

|

|

||||||

## 8.7 How can the API be called without logging in?

|

## 7. How can the API be called without logging in?

|

||||||

|

|

||||||

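Step 1: When calling the API, add the following information to the request header:

|

Step 1: When calling the API, add the following information to the request header: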

|

||||||

|

|

||||||

@@ -109,7 +131,7 @@ SECURITY.TRICK_USERS

|

|||||||

|

|

||||||

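Note that a user who bypasses login can still call only the APIs they are authorized for. An ordinary user, for example, can call only ordinary APIs, not those reserved for operations staff.

|

Note that a user who bypasses login can still call only the APIs they are authorized for. An ordinary user, for example, can call only ordinary APIs, not those reserved for operations staff.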

|

||||||

|

|

||||||

## 8.8、Specified key was too long; max key length is 767 bytes

|

## 8、Specified key was too long; max key length is 767 bytes

|

||||||

|

|

||||||