mirror of

https://github.com/didi/KnowStreaming.git

synced 2026-02-07 06:30:49 +08:00

1

.github/ISSUE_TEMPLATE.md

vendored


@@ -1 +0,0 @@

## Issue template

46

.github/ISSUE_TEMPLATE/bug_report.md

vendored

Normal file


@@ -0,0 +1,46 @@

---
name: Report a bug
about: Report a bug related to KnowStreaming
title: ''
labels: bug
assignees: ''

---

- [ ] I have searched the [issues](https://github.com/didi/KnowStreaming/issues) and found no duplicate of this problem.

### Environment

* KnowStreaming version : <font size=4 color=red> xxx </font>
* Operating System version : <font size=4 color=red> xxx </font>
* Java version : <font size=4 color=red> xxx </font>

### Steps to reproduce

1. xxx

2. xxx

3. xxx

### Expected result

<!-- What did you expect to happen? -->

### Actual result

<!-- What actually happened? -->

---

If an exception was thrown, please attach the stack trace:

```
Just put your stack trace here!
```

5

.github/ISSUE_TEMPLATE/config.yml

vendored

Normal file


@@ -0,0 +1,5 @@

blank_issues_enabled: true
contact_links:
  - name: KnowStreaming official website
    url: https://knowstreaming.com/
    about: KnowStreaming website

22

.github/ISSUE_TEMPLATE/detail_optimizing.md

vendored

Normal file


@@ -0,0 +1,22 @@

---
name: Optimization suggestion
about: Suggest an optimization for an existing feature
title: ''
labels: Optimization Suggestions
assignees: ''

---

- [ ] I have searched the [issues](https://github.com/didi/KnowStreaming/issues) and found no duplicate of this problem.

### Environment

* KnowStreaming version : <font size=4 color=red> xxx </font>
* Operating System version : <font size=4 color=red> xxx </font>
* Java version : <font size=4 color=red> xxx </font>

### Feature to optimize

### Suggested optimization

12

.github/ISSUE_TEMPLATE/discussion.md

vendored

Normal file


@@ -0,0 +1,12 @@

---
name: Discussion
about: Start a discussion about KnowStreaming
title: ''
labels: discussion
assignees: ''

---

## Discussion topic

...

15

.github/ISSUE_TEMPLATE/feature_request.md

vendored

Normal file


@@ -0,0 +1,15 @@

---
name: Propose a new feature/requirement
about: Submit a feature request for KnowStreaming
title: ''
labels: feature
assignees: ''

---

- [ ] I searched the [issues](https://github.com/didi/KnowStreaming/issues) and found no feature request related to this one.
- [ ] I searched the [release notes](https://github.com/didi/KnowStreaming/releases) and found no such feature in any released version.

## Describe the requirement here
<!-- Please describe your requirement as clearly as possible -->

12

.github/ISSUE_TEMPLATE/question.md

vendored

Normal file


@@ -0,0 +1,12 @@

---
name: Ask a question
about: Ask a question about KnowStreaming
title: ''
labels: question
assignees: ''

---

- [ ] I have searched the [issues](https://github.com/didi/KnowStreaming/issues) and found no duplicate of this problem.

## Ask your question here

22

.github/PULL_REQUEST_TEMPLATE.md

vendored

Normal file


@@ -0,0 +1,22 @@

|

||||

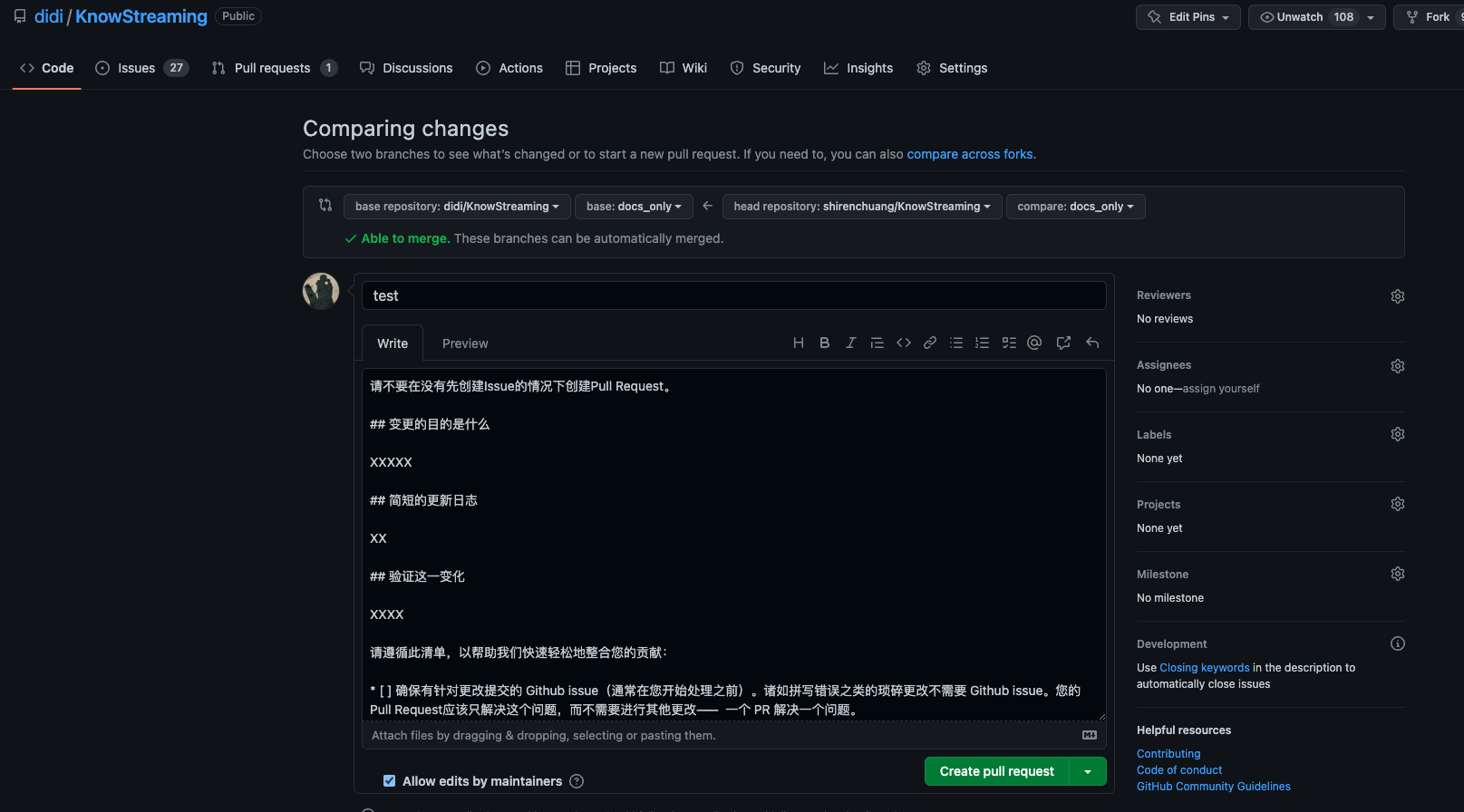

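Please do not create a Pull Request without first creating an Issue.

## What is the purpose of the change

XXXXX

## Brief changelog

XX

## Verifying this change

XXXX

Follow this checklist to help us incorporate your contribution quickly and easily:

* [ ] Make sure there is a GitHub issue filed for the change (usually before you start working on it). Trivial changes such as typos do not require a GitHub issue. Your Pull Request should address only that issue, without other changes: one PR resolves one issue.
* [ ] Format the Pull Request title like `[ISSUE #123] support Confluent Schema Registry`. Each commit in the Pull Request should have a meaningful subject line and body.
* [ ] Write a Pull Request description detailed enough to understand what the Pull Request does, how, and why.
* [ ] Write the necessary unit tests to verify your logic correction. If you submit a new feature or a significant change, remember to add an integration test in the test module.
* [ ] Make sure the build compiles and the integration tests pass.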
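The title format named in the checklist can be checked mechanically. The following is only a sketch: the helper name `is_valid_pr_title` and the exact pattern are assumptions made for illustration and are not part of the KnowStreaming tooling.

```sh
#!/bin/sh
# Hypothetical helper (not part of the repository): succeeds when a PR title
# looks like "[ISSUE #123] short summary", the form shown in the checklist.
is_valid_pr_title() {
    # Assumed pattern: literal "[ISSUE #", digits, "] ", then a non-empty summary.
    printf '%s\n' "$1" | grep -Eq '^\[ISSUE #[0-9]+\] [^ ].*$'
}

if is_valid_pr_title "[ISSUE #123] support Confluent Schema Registry"; then
    echo "title ok"
fi
```

A check like this could run locally before opening the PR; whether the project enforces the format automatically is not stated in the template.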

74

CODE_OF_CONDUCT.md

Normal file


@@ -0,0 +1,74 @@

# Contributor Covenant Code of Conduct

## Our Pledge

In the interest of fostering an open and welcoming environment, we as
contributors and maintainers pledge to making participation in our project and
our community a harassment-free experience for everyone, regardless of age, body
size, disability, ethnicity, gender identity and expression, level of experience,
education, socio-economic status, nationality, personal appearance, race,
religion, or sexual identity and orientation.

## Our Standards

Examples of behavior that contributes to creating a positive environment
include:

* Using welcoming and inclusive language
* Being respectful of differing viewpoints and experiences
* Gracefully accepting constructive criticism
* Focusing on what is best for the community
* Showing empathy towards other community members

Examples of unacceptable behavior by participants include:

* The use of sexualized language or imagery and unwelcome sexual attention or
  advances
* Trolling, insulting/derogatory comments, and personal or political attacks
* Public or private harassment
* Publishing others' private information, such as a physical or electronic
  address, without explicit permission
* Other conduct which could reasonably be considered inappropriate in a
  professional setting

## Our Responsibilities

Project maintainers are responsible for clarifying the standards of acceptable
behavior and are expected to take appropriate and fair corrective action in
response to any instances of unacceptable behavior.

Project maintainers have the right and responsibility to remove, edit, or
reject comments, commits, code, wiki edits, issues, and other contributions
that are not aligned to this Code of Conduct, or to ban temporarily or
permanently any contributor for other behaviors that they deem inappropriate,
threatening, offensive, or harmful.

## Scope

This Code of Conduct applies both within project spaces and in public spaces
when an individual is representing the project or its community. Examples of
representing a project or community include using an official project e-mail
address, posting via an official social media account, or acting as an appointed
representative at an online or offline event. Representation of a project may be
further defined and clarified by project maintainers.

## Enforcement

Instances of abusive, harassing, or otherwise unacceptable behavior may be
reported by contacting the project team at shirenchuang@didiglobal.com. All
complaints will be reviewed and investigated and will result in a response that
is deemed necessary and appropriate to the circumstances. The project team is
obligated to maintain confidentiality with regard to the reporter of an incident.
Further details of specific enforcement policies may be posted separately.

Project maintainers who do not follow or enforce the Code of Conduct in good
faith may face temporary or permanent repercussions as determined by other
members of the project's leadership.

## Attribution

This Code of Conduct is adapted from the [Contributor Covenant][homepage], version 1.4,
available at https://www.contributor-covenant.org/version/1/4/code-of-conduct.html

[homepage]: https://www.contributor-covenant.org

158

CONTRIBUTING.md


@@ -1,28 +1,150 @@

# Contribution Guideline

Thanks for considering to contribute this project. All issues and pull requests are highly appreciated.

## Pull Requests

Before sending pull request to this project, please read and follow guidelines below.
# Contributing to KnowStreaming

1. Branch: We only accept pull request on `dev` branch.
2. Coding style: Follow the coding style used in LogiKM.
3. Commit message: Use English and be aware of your spell.
4. Test: Make sure to test your code.

Add device mode, API version, related log, screenshots and other related information in your pull request if possible.
Welcome 👏🏻 to KnowStreaming! This document is a guide on how to contribute to KnowStreaming.

NOTE: We assume all your contribution can be licensed under the [AGPL-3.0](LICENSE).
If you find anything incorrect or missing, please leave a comment or suggestion.

## Issues
## Code of Conduct
Please be sure to read and follow our [Code of Conduct](./CODE_OF_CONDUCT.md).

We love clearly described issues. :)

Following information can help us to resolve the issue faster.

* Device mode and hardware information.
* API version.
* Logs.
* Screenshots.
* Steps to reproduce the issue.
## Contributing

**KnowStreaming** welcomes new participants in any role, including **User**, **Contributor**, **Committer**, and **PMC**.

We encourage newcomers to actively join the **KnowStreaming** project and to grow from User to Contributor, Committer, and even PMC.

To do so, newcomers need to actively contribute to the **KnowStreaming** project. The following describes how to contribute to **KnowStreaming**.

### Creating/opening an Issue

If you find a typo in the documentation, **find a bug** in the code, want a **new feature**, or want to **make a suggestion**, you can [create an Issue](https://github.com/didi/KnowStreaming/issues/new/choose) on GitHub to report it.

If you want to contribute directly, you can pick an issue with one of the labels below.

- [contribution welcome](https://github.com/didi/KnowStreaming/labels/contribution%20welcome): issues that badly need to be resolved or added
- [good first issue](https://github.com/didi/KnowStreaming/labels/good%20first%20issue): friendly to newcomers, who can use these issues to practice and warm up.

<font color=red><b>Please note that every PR must be associated with a valid issue. Otherwise, the PR will be rejected.</b></font>

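### Starting your contribution

**Branches**

We use the `dev` branch as the development branch, which means it is an unstable branch.

In addition, our branching model follows [https://nvie.com/posts/a-successful-git-branching-model/](https://nvie.com/posts/a-successful-git-branching-model/). We strongly recommend that newcomers read that article before creating a PR.

**Contribution workflow**

For convenience, we define two terms here:

The repository you fork is your personal repository, which we call the **forked repository**.
The project you forked from is called the **source repository**.

Now, if you are ready to create a PR, here is the contributor workflow:

1. Fork the [KnowStreaming](https://github.com/didi/KnowStreaming) project to your own account
2. Pull from the `dev` branch of the source repository and create your own local branch, for example `dev`
3. Modify the code on your local branch
4. Rebase onto the development branch and resolve any conflicts
5. Commit and push your changes to your own **forked repository**
6. Create a Pull Request against the `dev` branch of the **source repository**.
7. Wait for a reply. If the reply is slow, feel free to nudge us relentlessly.

For a more detailed description of the workflow, see [Contribution workflow](./docs/contributer_guide/贡献流程.md)

When creating a Pull Request:

1. Please follow the PR [template](./.github/PULL_REQUEST_TEMPLATE.md)
2. Please make sure the PR has a corresponding issue.
3. If your PR contains large changes, such as a component refactoring or a new component, please write detailed documentation about its design and usage (in the corresponding issue).
4. A single PR must not be too large. If many changes are needed, it is better to split them into several separate PRs.
5. Before the PR is merged, keep the final commit messages clear and concise, and squash repeated fix-up commits into a single commit wherever possible.
6. After the PR is created, one or more reviewers will be assigned to it.

<font color=red><b>If your PR contains large changes, such as a component refactoring or a new component, please write detailed documentation about its design and usage.</b></font>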

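# Code review guidelines

Committers take turns reviewing code to ensure that at least one Committer has reviewed a change before it is merged.

Some principles:

- Readability: important code should be well documented. APIs should have Javadoc. Code style should be consistent with the existing style.
- Elegance: new functions, classes, or components should be well designed.
- Testability: unit test cases should cover 80% of new code.
- Maintainability: comply with our coding conventions.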
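The 80% testability target above can be expressed as a simple threshold check. This is a minimal sketch under stated assumptions: coverage is taken to mean covered new lines divided by total new lines, and the function name `meets_coverage` is hypothetical; the guideline itself does not say how coverage is measured.

```sh
#!/bin/sh
# Hypothetical helper: meets_coverage COVERED_LINES TOTAL_NEW_LINES
# Succeeds when covered/total is at least 80% (integer arithmetic only).
meets_coverage() {
    covered=$1
    total=$2
    # Assumption: a change that adds no new lines has nothing to cover.
    if [ "$total" -eq 0 ]; then
        return 0
    fi
    [ $((covered * 100)) -ge $((total * 80)) ]
}

if meets_coverage 80 100; then
    echo "coverage target met"
fi
```

The line counts would come from whatever coverage report the reviewer uses; this sketch only encodes the comparison.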

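# Developers

## Becoming a Contributor

Anyone who has successfully submitted a PR that was merged is a Contributor.

For the contributor list, see: [Contributor list](./docs/contributer_guide/开发者名单.md)

## Trying to become a Committer

Generally speaking, contribute 8 significant patches and have at least three different people review them (you need the support of 3 Committers).
Then ask someone to nominate you. You need to demonstrate your

1. At least 8 significant PRs and related issues in the project
2. Ability to work with the team
3. Knowledge of the project's codebase and coding style
4. Ability to write good code

A current Committer can nominate you through an Issue in KnowStreaming labeled `nomination`, providing:

1. Your first and last name
2. A link to your Git profile
3. An explanation of why you should become a Committer
4. Details of 3 PRs and related issues on which the nominator worked with you and which demonstrate your abilities.

Two other Committers need to support your **nomination**. If nobody objects within 5 working days, you become a Committer. If anyone objects or wants more information, the Committers will discuss it and usually reach a consensus (within 5 working days).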

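# Open-source rewards program

We warmly welcome developers to contribute to the KnowStreaming open-source project, and we reward contributors in recognition and thanks.

## Ways to contribute

1. Actively take part in Issue discussions, for example by answering questions, offering ideas, or reporting bugs you cannot resolve (Issue)
2. Write and improve the project's documentation (Wiki)
3. Submit patches that improve the code (Coding)

## What you will receive

1. A place in the displayed KnowStreaming open-source contributor list
2. A KnowStreaming open-source contributor certificate (paper and electronic)
3. A KnowStreaming contributor gift pack (KnowStreaming/DiDi merchandise)

## Rules

- Contributors and Committers both receive the corresponding certificate and gift pack
- Each quarter the KnowStreaming project team selects outstanding contributors and issues the corresponding certificates.
- An annual selection takes place at the end of the year

For the contributor list, see: [Contributor list](./docs/contributer_guide/开发者名单.md)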

@@ -101,7 +101,7 @@

Click [here](CONTRIBUTING.md) to learn how to become a Know Streaming contributor

Get a KnowStreaming open-source community certificate.

## Join the technical discussion group

@@ -134,6 +134,11 @@ PS: When asking a question, please describe the problem fully in one go and include your environment information

To join the WeChat group: add the WeChat ID `mike_zhangliang` or `PenceXie` with the note "KnowStreaming" to be added to the group.
<br/>

Before joining the group, please give the project a star; a small star motivates the KnowStreaming authors in their effort to build the community.

Thank you!!!

<img width="116" alt="wx" src="https://user-images.githubusercontent.com/71620349/192257217-c4ebc16c-3ad9-485d-a914-5911d3a4f46b.png">

## Star History

1

docs/contributer_guide/代码规范.md

Normal file


@@ -0,0 +1 @@

TODO.

43

docs/contributer_guide/开发者名单.md

Normal file


@@ -0,0 +1,43 @@

List of open-source contributor certificate recipients (updated periodically)

For the contributor list, see: [Contributor list]()

|Name|GitHub|Role|Date issued|
|--|--|--|--|
|张亮|[@zhangliangboy](https://github.com/zhangliangboy)|||
|谢鹏|[@PenceXie](https://github.com/PenceXie)|||
|石臻臻|[@shirenchuang](https://github.com/shirenchuang)|||
|周宇航|[@GraceWalk](https://github.com/GraceWalk)|||
|曾巧|[@ZQKC](https://github.com/ZQKC)|||
|赵寅锐|[@ZHAOYINRUI](https://github.com/ZHAOYINRUI)|||
|王东方|[@wangdongfang-aden](https://github.com/wangdongfang-aden)|||
|haoqi123|[@haoqi123](https://github.com/haoqi123)|||
|17hao|[@17hao](https://github.com/17hao)|||
|Huyueeer|[@Huyueeer](https://github.com/Huyueeer)|||
|杨光|[@yangvipguang](https://github.com/yangvipguang)|||
|王亚聪|[@wangyacongi](https://github.com/wangyacongi)|||
|WYAOBO|[@WYAOBO](https://github.com/WYAOBO)|||
|Super .Wein(星痕)|[@superspeedone](https://github.com/superspeedone)|||
|Yang Jing|[@yangbajing](https://github.com/yangbajing)|||
|刘新元 Liu XinYuan|[@Liu-XinYuan](https://github.com/Liu-XinYuan)|||
|Joker|[@JokerQueue](https://github.com/JokerQueue)|||
|Eason Lau|[@Liubey](https://github.com/Liubey)|||
|hailanxin|[@hailanxin](https://github.com/hailanxin)|||
|Qi Zhang|[@zzzhangqi](https://github.com/zzzhangqi)|||
|Hongten|[@Hongten](https://github.com/Hongten)|||
|fengxsong|[@fengxsong](https://github.com/fengxsong)|||
|f1558|[@f1558](https://github.com/f1558)|||
|谢晓东|[@Strangevy](https://github.com/Strangevy)|||
|ZhaoXinlong|[@ZhaoXinlong](https://github.com/ZhaoXinlong)|||
|xuehaipeng|[@xuehaipeng](https://github.com/xuehaipeng)|||
|mrazkong|[@mrazkong](https://github.com/mrazkong)|||
|xuzhengxi|[@hyper-xx](https://github.com/hyper-xx)|||
|pierre xiong|[@pierre94](https://github.com/pierre94)|||

121

docs/contributer_guide/贡献流程.md

Normal file


@@ -0,0 +1,121 @@

### Contribution workflow

[Source contribution rules](../CONTRIBUTING.md)

#### 1. Fork the didi/KnowStreaming project to your GitHub account

Find the project you want to fork, for example [KnowStreaming](https://github.com/didi/KnowStreaming), and click the Fork button.

#### 2. Clone or download your fork of the KnowStreaming repository to your local machine

```sh

git clone { your fork knowstreaming repo address }

cd KnowStreaming

```

#### 3. Add the didi/KnowStreaming repository as the upstream repository

```sh

# Add the source repository
git remote add upstream https://github.com/didi/KnowStreaming

# Check that it was added successfully
git remote -v

#   origin   ${your fork KnowStreaming repo address} (fetch)
#   origin   ${your fork KnowStreaming repo address} (push)
#   upstream https://github.com/didi/KnowStreaming (fetch)
#   upstream https://github.com/didi/KnowStreaming (push)

# Fetch basic information from the remote repositories
git fetch origin
git fetch upstream

```
The commands above add didi/KnowStreaming as a remote repository, so there are now 2 remote repositories:

1. origin: the forked repository you created
2. upstream: the source repository

`git fetch` retrieves basic information about a remote repository; for example, it fetches all branches of the **source repository**.
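Before syncing branches, it can help to confirm that the `upstream` remote is really configured. The sketch below is only an illustration: the function name `has_upstream` is hypothetical, and it assumes the usual `git remote -v` layout of a remote name, whitespace, the URL, and a `(fetch)` or `(push)` suffix.

```sh
#!/bin/sh
# Hypothetical helper: reads `git remote -v` output on stdin and succeeds when
# a remote named "upstream" points at the didi/KnowStreaming repository.
has_upstream() {
    grep -Eq '^upstream[[:space:]]+https://github\.com/didi/KnowStreaming(\.git)?[[:space:]]'
}

# Typical use inside a clone (shown as a comment so the sketch has no side effects):
#   git remote -v | has_upstream || git remote add upstream https://github.com/didi/KnowStreaming
```

Reading from stdin keeps the check separate from the repository, so it can be tried on sample output without touching a real clone.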

#### 4. Sync the source repository's development branch into your local forked repository

Open-source projects usually have a branch to which contributors submit code; for KnowStreaming that branch is `dev`.

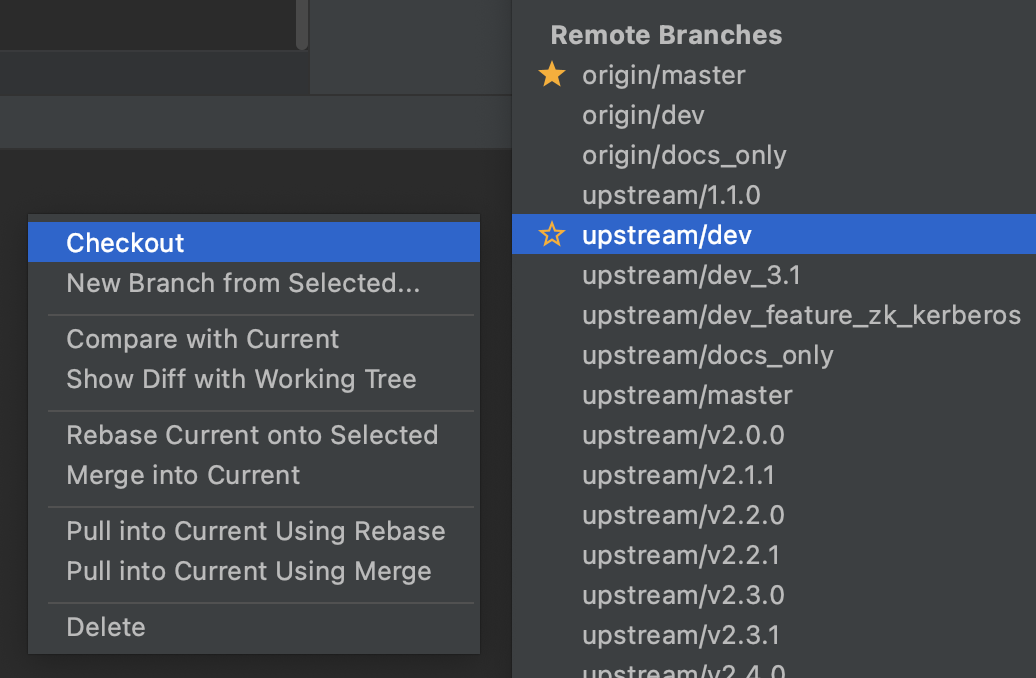

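First, pull the development branch (`dev`) of the **source repository** into your local repository

```sh

git checkout -b dev upstream/dev
```

**Or create it through IDEA**

#### 5. Make changes on the newly created local development branch

First, make sure you have read and correctly set up the KnowStreaming code style; for details, see [KnowStreaming code conventions]().

Keep the changes on this branch related only to the issue and as fine-grained as possible, so that

<font color=red><b>one branch changes only one thing, and one PR changes only one thing</b></font>.

Also, describe your commits as clearly as possible, mainly in verb + object form, for example: Fix xxx problem/bug. A few simple commits can be described with For xxx, for example: For codestyle. If the commit is related to an ISSUE, you can add the ISSUE number as a prefix, for example: For #10000, Fix xxx problem/bug.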
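The commit message convention described in step 5 can be sketched as a small formatter. The helper name `commit_subject` is hypothetical and is not something the project provides; it only assembles the `For #<issue>, <summary>` form from the examples in that step.

```sh
#!/bin/sh
# Hypothetical helper: commit_subject ISSUE_NUMBER SUMMARY
# Prefixes the summary with "For #<issue>, " when an issue number is given,
# and prints the summary unchanged when the issue number is empty.
commit_subject() {
    issue=$1
    summary=$2
    if [ -n "$issue" ]; then
        printf 'For #%s, %s\n' "$issue" "$summary"
    else
        printf '%s\n' "$summary"
    fi
}

commit_subject 10000 "Fix xxx problem/bug"
commit_subject "" "For codestyle"
```

The printed line could then be passed to `git commit -m`; the verb + object wording of the summary itself still has to come from the author.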

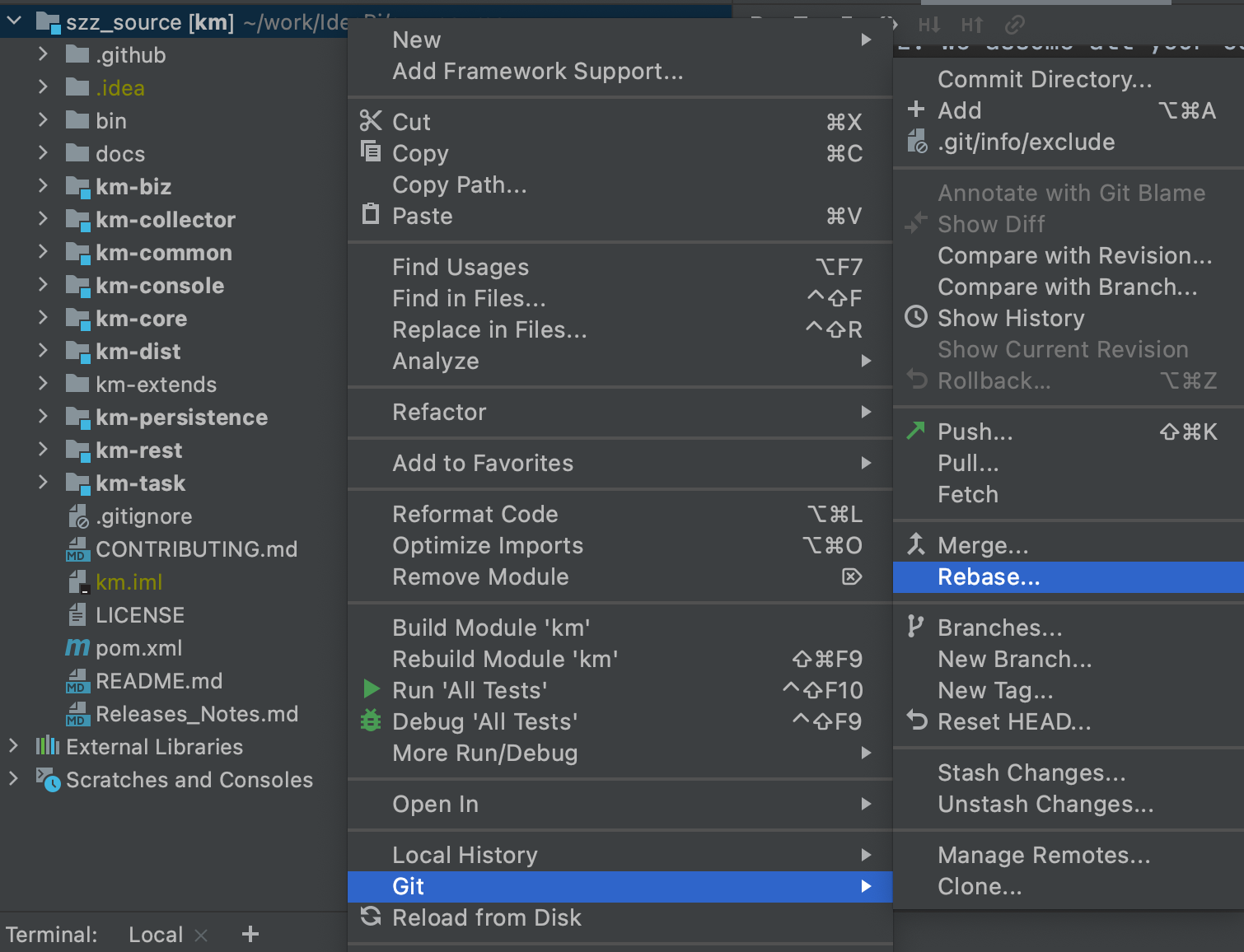

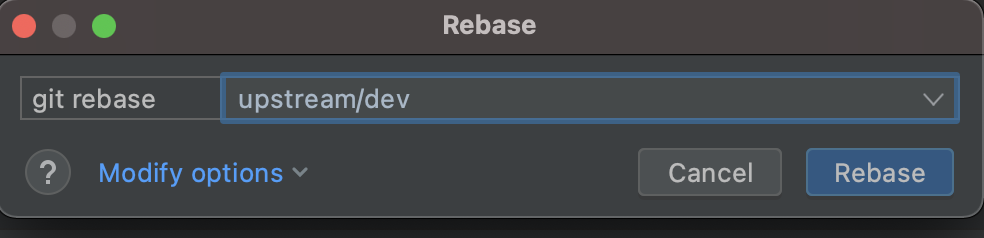

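#### 6. Rebase the base branch and the development branch

While you are making changes, other people's changes may have been submitted and merged, which can cause conflicts. Please use the rebase command to resolve them. This has 2 main benefits:

1. Your commit history stays clean, without entries such as "Merge xxxx branch"
2. After a rebase, your branch's commit log is a single chain with essentially no interleaved branches, which makes it easier to look back through

```sh
git fetch upstream

git rebase -i upstream/dev

```

**Or do the following in IDEA**

Select the development branch of the source repository

We recommend the IDEA approach, since conflicts are easier to resolve when they occur.

#### 7. Push your finished, rebased branch to your forked repository

```sh
git push origin dev
```

#### 8. Create a Pull Request following the checklist in the PR template

Choose your own branch to merge into the target branch.

#### 9. Wait for the code to be merged

After you submit the PR, wait for a PMC member or Committer to review the code. If there are problems, you need to cooperate by making changes and resubmitting.

If there are no problems, it is merged directly into the development branch `dev`.

Note: if there has been no review for a long time, feel free to keep nudging the community to review the code!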

@@ -7,7 +7,22 @@

### 6.2.0. Upgrading to the `master` version

None yet
```sql
DROP TABLE IF EXISTS `ks_km_zookeeper`;
CREATE TABLE `ks_km_zookeeper` (
  `id` bigint(20) unsigned NOT NULL AUTO_INCREMENT COMMENT 'id',
  `cluster_phy_id` bigint(20) NOT NULL DEFAULT '-1' COMMENT 'physical cluster ID',
  `host` varchar(128) NOT NULL DEFAULT '' COMMENT 'zookeeper hostname',
  `port` int(16) NOT NULL DEFAULT '-1' COMMENT 'zookeeper port',
  `role` int(16) NOT NULL DEFAULT '-1' COMMENT 'role: leader, follower, observer',
  `version` varchar(128) NOT NULL DEFAULT '' COMMENT 'zookeeper version',
  `status` int(16) NOT NULL DEFAULT '0' COMMENT 'status: 1 alive, 0 not alive, 11 alive but four-letter commands unavailable',
  `create_time` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP COMMENT 'creation time',
  `update_time` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP COMMENT 'modification time',
  PRIMARY KEY (`id`),
  UNIQUE KEY `uniq_cluster_phy_id_host_port` (`cluster_phy_id`,`host`, `port`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8 COMMENT='Zookeeper information table';
```


### 6.2.1. Upgrading to the `v3.0.0` version

@@ -0,0 +1,19 @@

package com.xiaojukeji.know.streaming.km.biz.cluster;

import com.xiaojukeji.know.streaming.km.common.bean.dto.cluster.ClusterZookeepersOverviewDTO;
import com.xiaojukeji.know.streaming.km.common.bean.entity.result.PaginationResult;
import com.xiaojukeji.know.streaming.km.common.bean.entity.result.Result;
import com.xiaojukeji.know.streaming.km.common.bean.vo.zookeeper.ClusterZookeepersOverviewVO;
import com.xiaojukeji.know.streaming.km.common.bean.vo.zookeeper.ClusterZookeepersStateVO;
import com.xiaojukeji.know.streaming.km.common.bean.vo.zookeeper.ZnodeVO;

/**
 * Overall state of multiple clusters
 */
public interface ClusterZookeepersManager {
    Result<ClusterZookeepersStateVO> getClusterPhyZookeepersState(Long clusterPhyId);

    PaginationResult<ClusterZookeepersOverviewVO> getClusterPhyZookeepersOverview(Long clusterPhyId, ClusterZookeepersOverviewDTO dto);

    Result<ZnodeVO> getZnodeVO(Long clusterPhyId, String path);
}

@@ -0,0 +1,137 @@

package com.xiaojukeji.know.streaming.km.biz.cluster.impl;

import com.didiglobal.logi.log.ILog;
import com.didiglobal.logi.log.LogFactory;
import com.xiaojukeji.know.streaming.km.biz.cluster.ClusterZookeepersManager;
import com.xiaojukeji.know.streaming.km.common.bean.dto.cluster.ClusterZookeepersOverviewDTO;
import com.xiaojukeji.know.streaming.km.common.bean.entity.cluster.ClusterPhy;
import com.xiaojukeji.know.streaming.km.common.bean.entity.config.ZKConfig;
import com.xiaojukeji.know.streaming.km.common.bean.entity.metrics.ZookeeperMetrics;
import com.xiaojukeji.know.streaming.km.common.bean.entity.param.metric.ZookeeperMetricParam;
import com.xiaojukeji.know.streaming.km.common.bean.entity.result.PaginationResult;
import com.xiaojukeji.know.streaming.km.common.bean.entity.result.Result;
import com.xiaojukeji.know.streaming.km.common.bean.entity.result.ResultStatus;
import com.xiaojukeji.know.streaming.km.common.bean.entity.zookeeper.Znode;
import com.xiaojukeji.know.streaming.km.common.bean.entity.zookeeper.ZookeeperInfo;
import com.xiaojukeji.know.streaming.km.common.bean.vo.zookeeper.ClusterZookeepersOverviewVO;
import com.xiaojukeji.know.streaming.km.common.bean.vo.zookeeper.ClusterZookeepersStateVO;
import com.xiaojukeji.know.streaming.km.common.bean.vo.zookeeper.ZnodeVO;
import com.xiaojukeji.know.streaming.km.common.constant.MsgConstant;
import com.xiaojukeji.know.streaming.km.common.enums.zookeeper.ZKRoleEnum;
import com.xiaojukeji.know.streaming.km.common.utils.ConvertUtil;
import com.xiaojukeji.know.streaming.km.common.utils.PaginationUtil;
import com.xiaojukeji.know.streaming.km.common.utils.Tuple;
import com.xiaojukeji.know.streaming.km.core.service.cluster.ClusterPhyService;
import com.xiaojukeji.know.streaming.km.core.service.version.metrics.ZookeeperMetricVersionItems;
import com.xiaojukeji.know.streaming.km.core.service.zookeeper.ZnodeService;
import com.xiaojukeji.know.streaming.km.core.service.zookeeper.ZookeeperMetricService;
import com.xiaojukeji.know.streaming.km.core.service.zookeeper.ZookeeperService;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.stereotype.Service;
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;


@Service
public class ClusterZookeepersManagerImpl implements ClusterZookeepersManager {
    private static final ILog LOGGER = LogFactory.getLog(ClusterZookeepersManagerImpl.class);

    @Autowired
    private ClusterPhyService clusterPhyService;

    @Autowired
    private ZookeeperService zookeeperService;

    @Autowired
    private ZookeeperMetricService zookeeperMetricService;

    @Autowired
    private ZnodeService znodeService;

    @Override
    public Result<ClusterZookeepersStateVO> getClusterPhyZookeepersState(Long clusterPhyId) {
        ClusterPhy clusterPhy = clusterPhyService.getClusterByCluster(clusterPhyId);
        if (clusterPhy == null) {
            return Result.buildFromRSAndMsg(ResultStatus.CLUSTER_NOT_EXIST, MsgConstant.getClusterPhyNotExist(clusterPhyId));
        }

//        // TODO
//        private Integer healthState;
//        private Integer healthCheckPassed;
//        private Integer healthCheckTotal;

        List<ZookeeperInfo> infoList = zookeeperService.listFromDBByCluster(clusterPhyId);

        ClusterZookeepersStateVO vo = new ClusterZookeepersStateVO();
        vo.setTotalServerCount(infoList.size());
        vo.setAliveFollowerCount(0);
        vo.setTotalFollowerCount(0);
        vo.setAliveObserverCount(0);
        vo.setTotalObserverCount(0);
        vo.setAliveServerCount(0);
        for (ZookeeperInfo info: infoList) {
            if (info.getRole().equals(ZKRoleEnum.LEADER.getRole())) {
                vo.setLeaderNode(info.getHost());
            }

            if (info.getRole().equals(ZKRoleEnum.FOLLOWER.getRole())) {
                vo.setTotalFollowerCount(vo.getTotalFollowerCount() + 1);
                vo.setAliveFollowerCount(info.alive()? vo.getAliveFollowerCount() + 1: vo.getAliveFollowerCount());
            }

            if (info.getRole().equals(ZKRoleEnum.OBSERVER.getRole())) {
                vo.setTotalObserverCount(vo.getTotalObserverCount() + 1);
                vo.setAliveObserverCount(info.alive()? vo.getAliveObserverCount() + 1: vo.getAliveObserverCount());
            }

            if (info.alive()) {
                vo.setAliveServerCount(vo.getAliveServerCount() + 1);
            }
        }

        Result<ZookeeperMetrics> metricsResult = zookeeperMetricService.collectMetricsFromZookeeper(new ZookeeperMetricParam(
                clusterPhyId,
                infoList.stream().filter(elem -> elem.alive()).map(item -> new Tuple<String, Integer>(item.getHost(), item.getPort())).collect(Collectors.toList()),
                ConvertUtil.str2ObjByJson(clusterPhy.getZkProperties(), ZKConfig.class),
                ZookeeperMetricVersionItems.ZOOKEEPER_METRIC_WATCH_COUNT
        ));
        if (metricsResult.failed()) {
            LOGGER.error(
                    "class=ClusterZookeepersManagerImpl||method=getClusterPhyZookeepersState||clusterPhyId={}||errMsg={}",
                    clusterPhyId, metricsResult.getMessage()
            );
            return Result.buildSuc(vo);
        }
        Float watchCount = metricsResult.getData().getMetric(ZookeeperMetricVersionItems.ZOOKEEPER_METRIC_WATCH_COUNT);
        vo.setWatchCount(watchCount != null? watchCount.intValue(): null);

        return Result.buildSuc(vo);
    }

    @Override
    public PaginationResult<ClusterZookeepersOverviewVO> getClusterPhyZookeepersOverview(Long clusterPhyId, ClusterZookeepersOverviewDTO dto) {
        // Fetch the cluster's ZooKeeper list
        List<ClusterZookeepersOverviewVO> clusterZookeepersOverviewVOList = ConvertUtil.list2List(zookeeperService.listFromDBByCluster(clusterPhyId), ClusterZookeepersOverviewVO.class);

        // Search
        clusterZookeepersOverviewVOList = PaginationUtil.pageByFuzzyFilter(clusterZookeepersOverviewVOList, dto.getSearchKeywords(), Arrays.asList("host"));

        // Paginate
        PaginationResult<ClusterZookeepersOverviewVO> paginationResult = PaginationUtil.pageBySubData(clusterZookeepersOverviewVOList, dto);

        return paginationResult;
    }

    @Override
    public Result<ZnodeVO> getZnodeVO(Long clusterPhyId, String path) {
        Result<Znode> result = znodeService.getZnode(clusterPhyId, path);
        if (result.failed()) {
            return Result.buildFromIgnoreData(result);
        }
        return Result.buildSuc(ConvertUtil.obj2ObjByJSON(result.getData(), ZnodeVO.class));
    }

    /**************************************************** private method ****************************************************/

}

@@ -0,0 +1,122 @@
package com.xiaojukeji.know.streaming.km.collector.metric;

import com.didiglobal.logi.log.ILog;
import com.didiglobal.logi.log.LogFactory;
import com.xiaojukeji.know.streaming.km.common.bean.entity.cluster.ClusterPhy;
import com.xiaojukeji.know.streaming.km.common.bean.entity.config.ZKConfig;
import com.xiaojukeji.know.streaming.km.common.bean.entity.kafkacontroller.KafkaController;
import com.xiaojukeji.know.streaming.km.common.bean.entity.param.metric.ZookeeperMetricParam;
import com.xiaojukeji.know.streaming.km.common.bean.entity.result.Result;
import com.xiaojukeji.know.streaming.km.common.bean.entity.version.VersionControlItem;
import com.xiaojukeji.know.streaming.km.common.utils.Tuple;
import com.xiaojukeji.know.streaming.km.common.utils.ValidateUtils;
import com.xiaojukeji.know.streaming.km.common.bean.event.metric.ZookeeperMetricEvent;
import com.xiaojukeji.know.streaming.km.common.constant.Constant;
import com.xiaojukeji.know.streaming.km.common.enums.version.VersionItemTypeEnum;
import com.xiaojukeji.know.streaming.km.common.utils.ConvertUtil;
import com.xiaojukeji.know.streaming.km.common.utils.EnvUtil;
import com.xiaojukeji.know.streaming.km.common.bean.entity.zookeeper.ZookeeperInfo;
import com.xiaojukeji.know.streaming.km.common.bean.entity.metrics.ZookeeperMetrics;
import com.xiaojukeji.know.streaming.km.common.bean.po.metrice.ZookeeperMetricPO;
import com.xiaojukeji.know.streaming.km.core.service.kafkacontroller.KafkaControllerService;
import com.xiaojukeji.know.streaming.km.core.service.version.VersionControlService;
import com.xiaojukeji.know.streaming.km.core.service.zookeeper.ZookeeperMetricService;
import com.xiaojukeji.know.streaming.km.core.service.zookeeper.ZookeeperService;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.stereotype.Component;

import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

import static com.xiaojukeji.know.streaming.km.common.enums.version.VersionItemTypeEnum.METRIC_ZOOKEEPER;

/**
 * @author didi
 */
@Component
public class ZookeeperMetricCollector extends AbstractMetricCollector<ZookeeperMetricPO> {
    protected static final ILog LOGGER = LogFactory.getLog("METRIC_LOGGER");

    @Autowired
    private VersionControlService versionControlService;

    @Autowired
    private ZookeeperMetricService zookeeperMetricService;

    @Autowired
    private ZookeeperService zookeeperService;

    @Autowired
    private KafkaControllerService kafkaControllerService;

    @Override
    public void collectMetrics(ClusterPhy clusterPhy) {
        Long startTime = System.currentTimeMillis();
        Long clusterPhyId = clusterPhy.getId();
        List<VersionControlItem> items = versionControlService.listVersionControlItem(clusterPhyId, collectorType().getCode());
        List<ZookeeperInfo> aliveZKList = zookeeperService.listFromDBByCluster(clusterPhyId)
                .stream()
                .filter(elem -> Constant.ALIVE.equals(elem.getStatus()))
                .collect(Collectors.toList());
        KafkaController kafkaController = kafkaControllerService.getKafkaControllerFromDB(clusterPhyId);

        ZookeeperMetrics metrics = ZookeeperMetrics.initWithMetric(clusterPhyId, Constant.COLLECT_METRICS_COST_TIME_METRICS_NAME, (float)Constant.INVALID_CODE);
        if (ValidateUtils.isEmptyList(aliveZKList)) {
            // No ZK node is alive: publish the event and return immediately
            publishMetric(new ZookeeperMetricEvent(this, Arrays.asList(metrics)));
            return;
        }

        // Build the request parameters
        ZookeeperMetricParam param = new ZookeeperMetricParam(
                clusterPhyId,
                aliveZKList.stream().map(elem -> new Tuple<String, Integer>(elem.getHost(), elem.getPort())).collect(Collectors.toList()),
                ConvertUtil.str2ObjByJson(clusterPhy.getZkProperties(), ZKConfig.class),
                kafkaController == null? Constant.INVALID_CODE: kafkaController.getBrokerId(),
                null
        );

        for(VersionControlItem v : items) {
            try {
                if(null != metrics.getMetrics().get(v.getName())) {
                    continue;
                }
                param.setMetricName(v.getName());

                Result<ZookeeperMetrics> ret = zookeeperMetricService.collectMetricsFromZookeeper(param);
                if(null == ret || ret.failed() || null == ret.getData()){
                    continue;
                }

                metrics.putMetric(ret.getData().getMetrics());

                if(!EnvUtil.isOnline()){
                    LOGGER.info(
                            "class=ZookeeperMetricCollector||method=collectMetrics||clusterPhyId={}||metricName={}||metricValue={}",
                            clusterPhyId, v.getName(), ConvertUtil.obj2Json(ret.getData().getMetrics())
                    );
                }
            } catch (Exception e){
                LOGGER.error(
                        "class=ZookeeperMetricCollector||method=collectMetrics||clusterPhyId={}||metricName={}||errMsg=exception!",
                        clusterPhyId, v.getName(), e
                );
            }
        }

        metrics.putMetric(Constant.COLLECT_METRICS_COST_TIME_METRICS_NAME, (System.currentTimeMillis() - startTime) / 1000.0f);

        publishMetric(new ZookeeperMetricEvent(this, Arrays.asList(metrics)));

        LOGGER.info(
                "class=ZookeeperMetricCollector||method=collectMetrics||clusterPhyId={}||startTime={}||costTime={}||msg=collect finished.",
                clusterPhyId, startTime, System.currentTimeMillis() - startTime
        );
    }

    @Override
    public VersionItemTypeEnum collectorType() {
        return METRIC_ZOOKEEPER;
    }
}

@@ -0,0 +1,28 @@
package com.xiaojukeji.know.streaming.km.collector.sink;

import com.didiglobal.logi.log.ILog;
import com.didiglobal.logi.log.LogFactory;
import com.xiaojukeji.know.streaming.km.common.utils.ConvertUtil;
import com.xiaojukeji.know.streaming.km.common.bean.event.metric.ZookeeperMetricEvent;
import com.xiaojukeji.know.streaming.km.common.bean.po.metrice.ZookeeperMetricPO;
import org.springframework.context.ApplicationListener;
import org.springframework.stereotype.Component;

import javax.annotation.PostConstruct;

import static com.xiaojukeji.know.streaming.km.common.constant.ESIndexConstant.ZOOKEEPER_INDEX;

@Component
public class ZookeeperMetricESSender extends AbstractMetricESSender implements ApplicationListener<ZookeeperMetricEvent> {
    protected static final ILog LOGGER = LogFactory.getLog("METRIC_LOGGER");

    @PostConstruct
    public void init(){
        LOGGER.info("class=ZookeeperMetricESSender||method=init||msg=init finished");
    }

    @Override
    public void onApplicationEvent(ZookeeperMetricEvent event) {
        send2es(ZOOKEEPER_INDEX, ConvertUtil.list2List(event.getZookeeperMetrics(), ZookeeperMetricPO.class));
    }
}

@@ -0,0 +1,13 @@
package com.xiaojukeji.know.streaming.km.common.bean.dto.cluster;

import com.xiaojukeji.know.streaming.km.common.bean.dto.pagination.PaginationBaseDTO;
import lombok.Data;

/**
 * @author wyc
 * @date 2022/9/23
 */
@Data
public class ClusterZookeepersOverviewDTO extends PaginationBaseDTO {

}

@@ -1,8 +1,8 @@
package com.xiaojukeji.know.streaming.km.common.bean.entity.config;

import com.xiaojukeji.know.streaming.km.common.constant.Constant;
import io.swagger.annotations.ApiModel;
import io.swagger.annotations.ApiModelProperty;
import lombok.Data;

import java.io.Serializable;
import java.util.Properties;
@@ -11,7 +11,6 @@ import java.util.Properties;
 * @author zengqiao
 * @date 22/02/24
 */
@Data
@ApiModel(description = "ZK configuration")
public class ZKConfig implements Serializable {
    @ApiModelProperty(value="JMX configuration for ZK")
@@ -21,11 +20,51 @@ public class ZKConfig implements Serializable {
    private Boolean openSecure = false;

    @ApiModelProperty(value="ZK session timeout", example = "15000")
    private Long sessionTimeoutUnitMs = 15000L;
    private Integer sessionTimeoutUnitMs = 15000;

    @ApiModelProperty(value="ZK request timeout", example = "5000")
    private Long requestTimeoutUnitMs = 5000L;
    private Integer requestTimeoutUnitMs = 5000;

    @ApiModelProperty(value="Other ZK properties")
    private Properties otherProps = new Properties();

    public JmxConfig getJmxConfig() {
        return jmxConfig == null? new JmxConfig(): jmxConfig;
    }

    public void setJmxConfig(JmxConfig jmxConfig) {
        this.jmxConfig = jmxConfig;
    }

    public Boolean getOpenSecure() {
        return openSecure != null && openSecure;
    }

    public void setOpenSecure(Boolean openSecure) {
        this.openSecure = openSecure;
    }

    public Integer getSessionTimeoutUnitMs() {
        return sessionTimeoutUnitMs == null? Constant.DEFAULT_SESSION_TIMEOUT_UNIT_MS: sessionTimeoutUnitMs;
    }

    public void setSessionTimeoutUnitMs(Integer sessionTimeoutUnitMs) {
        this.sessionTimeoutUnitMs = sessionTimeoutUnitMs;
    }

    public Integer getRequestTimeoutUnitMs() {
        return requestTimeoutUnitMs == null? Constant.DEFAULT_REQUEST_TIMEOUT_UNIT_MS: requestTimeoutUnitMs;
    }

    public void setRequestTimeoutUnitMs(Integer requestTimeoutUnitMs) {
        this.requestTimeoutUnitMs = requestTimeoutUnitMs;
    }

    public Properties getOtherProps() {
        return otherProps == null? new Properties() : otherProps;
    }

    public void setOtherProps(Properties otherProps) {
        this.otherProps = otherProps;
    }
}

@@ -0,0 +1,28 @@
package com.xiaojukeji.know.streaming.km.common.bean.entity.metrics;

import lombok.Data;
import lombok.ToString;

/**
 * @author zengqiao
 * @date 20/6/17
 */
@Data
@ToString
public class ZookeeperMetrics extends BaseMetrics {
    public ZookeeperMetrics(Long clusterPhyId) {
        super(clusterPhyId);
    }

    public static ZookeeperMetrics initWithMetric(Long clusterPhyId, String metric, Float value) {
        ZookeeperMetrics metrics = new ZookeeperMetrics(clusterPhyId);
        metrics.setClusterPhyId( clusterPhyId );
        metrics.putMetric(metric, value);
        return metrics;
    }

    @Override
    public String unique() {
        return "ZK@" + clusterPhyId;
    }
}

@@ -0,0 +1,47 @@
package com.xiaojukeji.know.streaming.km.common.bean.entity.param.metric;

import com.xiaojukeji.know.streaming.km.common.bean.entity.config.ZKConfig;
import com.xiaojukeji.know.streaming.km.common.utils.Tuple;
import lombok.Data;
import lombok.NoArgsConstructor;

import java.util.List;

/**
 * @author didi
 */
@Data
@NoArgsConstructor
public class ZookeeperMetricParam extends MetricParam {
    private Long clusterPhyId;

    private List<Tuple<String, Integer>> zkAddressList;

    private ZKConfig zkConfig;

    private String metricName;

    private Integer kafkaControllerId;

    public ZookeeperMetricParam(Long clusterPhyId,
                                List<Tuple<String, Integer>> zkAddressList,
                                ZKConfig zkConfig,
                                String metricName) {
        this.clusterPhyId = clusterPhyId;
        this.zkAddressList = zkAddressList;
        this.zkConfig = zkConfig;
        this.metricName = metricName;
    }

    public ZookeeperMetricParam(Long clusterPhyId,
                                List<Tuple<String, Integer>> zkAddressList,
                                ZKConfig zkConfig,
                                Integer kafkaControllerId,
                                String metricName) {
        this.clusterPhyId = clusterPhyId;
        this.zkAddressList = zkAddressList;
        this.zkConfig = zkConfig;
        this.kafkaControllerId = kafkaControllerId;
        this.metricName = metricName;
    }
}

@@ -56,6 +56,7 @@ public enum ResultStatus {
    KAFKA_OPERATE_FAILED(8010, "Kafka operation failed"),
    MYSQL_OPERATE_FAILED(8020, "MySQL operation failed"),
    ZK_OPERATE_FAILED(8030, "ZK operation failed"),
    ZK_FOUR_LETTER_CMD_FORBIDDEN(8031, "ZK four-letter-word command is forbidden"),
    ES_OPERATE_ERROR(8040, "ES operation failed"),
    HTTP_REQ_ERROR(8050, "Third-party HTTP request error"),

@@ -23,6 +23,8 @@ public class VersionMetricControlItem extends VersionControlItem{
    public static final String CATEGORY_PERFORMANCE = "Performance";
    public static final String CATEGORY_FLOW = "Flow";

    public static final String CATEGORY_CLIENT = "Client";

    /**
     * Name of the metric unit; absent for non-metric items
     */

@@ -0,0 +1,19 @@
package com.xiaojukeji.know.streaming.km.common.bean.entity.zookeeper;


import com.xiaojukeji.know.streaming.km.common.utils.Tuple;
import io.swagger.annotations.ApiModelProperty;
import lombok.Data;
import org.apache.zookeeper.data.Stat;

@Data
public class Znode {
    @ApiModelProperty(value = "Node name", example = "broker")
    private String name;

    @ApiModelProperty(value = "Node data", example = "saassad")
    private String data;

    @ApiModelProperty(value = "Node attributes", example = "")
    private Stat stat;
}

@@ -0,0 +1,42 @@
package com.xiaojukeji.know.streaming.km.common.bean.entity.zookeeper;

import com.xiaojukeji.know.streaming.km.common.bean.entity.BaseEntity;
import com.xiaojukeji.know.streaming.km.common.constant.Constant;
import lombok.Data;

@Data
public class ZookeeperInfo extends BaseEntity {
    /**
     * Cluster ID
     */
    private Long clusterPhyId;

    /**
     * Host
     */
    private String host;

    /**
     * Port
     */
    private Integer port;

    /**
     * Role
     */
    private String role;

    /**
     * Version
     */
    private String version;

    /**
     * ZK status
     */
    private Integer status;

    public boolean alive() {
        return !(Constant.DOWN.equals(status));
    }
}

@@ -0,0 +1,9 @@
package com.xiaojukeji.know.streaming.km.common.bean.entity.zookeeper.fourletterword;

import java.io.Serializable;

/**
 * Base class for four-letter-word command result data
 */
public class BaseFourLetterWordCmdData implements Serializable {
}

@@ -0,0 +1,38 @@
package com.xiaojukeji.know.streaming.km.common.bean.entity.zookeeper.fourletterword;

import lombok.Data;


/**
 * Sample output of the ZooKeeper "conf" four-letter-word command:
 *
 * clientPort=2183
 * dataDir=/data1/data/zkData2/version-2
 * dataLogDir=/data1/data/zkLog2/version-2
 * tickTime=2000
 * maxClientCnxns=60
 * minSessionTimeout=4000
 * maxSessionTimeout=40000
 * serverId=2
 * initLimit=15
 * syncLimit=10
 * electionAlg=3
 * electionPort=4445
 * quorumPort=4444
 * peerType=0
 */
@Data
public class ConfigCmdData extends BaseFourLetterWordCmdData {
    private Long clientPort;
    private String dataDir;
    private String dataLogDir;
    private Long tickTime;
    private Long maxClientCnxns;
    private Long minSessionTimeout;
    private Long maxSessionTimeout;
    private Integer serverId;
    private String initLimit;
    private Long syncLimit;
    private Long electionAlg;
    private Long electionPort;
    private Long quorumPort;
    private Long peerType;
}

@@ -0,0 +1,39 @@
package com.xiaojukeji.know.streaming.km.common.bean.entity.zookeeper.fourletterword;

import lombok.Data;

/**
 * Sample output of the ZooKeeper "mntr" four-letter-word command:
 *
 * zk_version 3.4.6-1569965, built on 02/20/2014 09:09 GMT
 * zk_avg_latency 0
 * zk_max_latency 399
 * zk_min_latency 0
 * zk_packets_received 234857
 * zk_packets_sent 234860
 * zk_num_alive_connections 4
 * zk_outstanding_requests 0
 * zk_server_state follower
 * zk_znode_count 35566
 * zk_watch_count 39
 * zk_ephemerals_count 10
 * zk_approximate_data_size 3356708
 * zk_open_file_descriptor_count 35
 * zk_max_file_descriptor_count 819200
 */
@Data
public class MonitorCmdData extends BaseFourLetterWordCmdData {
    private String zkVersion;
    private Long zkAvgLatency;
    private Long zkMaxLatency;
    private Long zkMinLatency;
    private Long zkPacketsReceived;
    private Long zkPacketsSent;
    private Long zkNumAliveConnections;
    private Long zkOutstandingRequests;
    private String zkServerState;
    private Long zkZnodeCount;
    private Long zkWatchCount;
    private Long zkEphemeralsCount;
    private Long zkApproximateDataSize;
    private Long zkOpenFileDescriptorCount;
    private Long zkMaxFileDescriptorCount;
}

@@ -0,0 +1,30 @@
package com.xiaojukeji.know.streaming.km.common.bean.entity.zookeeper.fourletterword;

import lombok.Data;

/**
 * Sample output of the ZooKeeper "srvr" four-letter-word command:
 *
 * Zookeeper version: 3.5.9-83df9301aa5c2a5d284a9940177808c01bc35cef, built on 01/06/2021 19:49 GMT
 * Latency min/avg/max: 0/0/2209
 * Received: 278202469
 * Sent: 279449055
 * Connections: 31
 * Outstanding: 0
 * Zxid: 0x20033fc12
 * Mode: leader
 * Node count: 10084
 * Proposal sizes last/min/max: 36/32/31260 (leader only)
 */
@Data
public class ServerCmdData extends BaseFourLetterWordCmdData {
    private String zkVersion;
    private Long zkAvgLatency;
    private Long zkMaxLatency;
    private Long zkMinLatency;
    private Long zkPacketsReceived;
    private Long zkPacketsSent;
    private Long zkNumAliveConnections;
    private Long zkOutstandingRequests;
    private String zkServerState;
    private Long zkZnodeCount;
    private Long zkZxid;
}

@@ -0,0 +1,116 @@
package com.xiaojukeji.know.streaming.km.common.bean.entity.zookeeper.fourletterword.parser;

import com.didiglobal.logi.log.ILog;
import com.didiglobal.logi.log.LogFactory;
import com.xiaojukeji.know.streaming.km.common.bean.entity.result.Result;
import com.xiaojukeji.know.streaming.km.common.bean.entity.zookeeper.fourletterword.ConfigCmdData;
import com.xiaojukeji.know.streaming.km.common.utils.zookeeper.FourLetterWordUtil;
import lombok.Data;

import java.util.HashMap;
import java.util.Map;

/**
 * Parses the key=value output of the ZooKeeper "conf" four-letter-word command, for example:
 *
 * clientPort=2183
 * dataDir=/data1/data/zkData2/version-2
 * dataLogDir=/data1/data/zkLog2/version-2
 * tickTime=2000
 * maxClientCnxns=60
 * minSessionTimeout=4000
 * maxSessionTimeout=40000
 * serverId=2
 * initLimit=15
 * syncLimit=10
 * electionAlg=3
 * electionPort=4445
 * quorumPort=4444
 * peerType=0
 */
@Data
public class ConfigCmdDataParser implements FourLetterWordDataParser<ConfigCmdData> {
    private static final ILog LOGGER = LogFactory.getLog(ConfigCmdDataParser.class);

    private Result<ConfigCmdData> dataResult = null;

    @Override
    public String getCmd() {
        return FourLetterWordUtil.ConfigCmd;
    }

    @Override
    public ConfigCmdData parseAndInitData(Long clusterPhyId, String host, int port, String cmdData) {
        Map<String, String> dataMap = new HashMap<>();
        for (String elem : cmdData.split("\n")) {
            if (elem.isEmpty()) {
                continue;
            }

            int idx = elem.indexOf('=');
            if (idx >= 0) {
                dataMap.put(elem.substring(0, idx), elem.substring(idx + 1).trim());
            }
        }

        ConfigCmdData configCmdData = new ConfigCmdData();
        dataMap.entrySet().stream().forEach(elem -> {
            try {
                switch (elem.getKey()) {
                    case "clientPort":
                        configCmdData.setClientPort(Long.valueOf(elem.getValue()));
                        break;
                    case "dataDir":
                        configCmdData.setDataDir(elem.getValue());
                        break;
                    case "dataLogDir":
                        configCmdData.setDataLogDir(elem.getValue());
                        break;
                    case "tickTime":
                        configCmdData.setTickTime(Long.valueOf(elem.getValue()));
                        break;
                    case "maxClientCnxns":
                        configCmdData.setMaxClientCnxns(Long.valueOf(elem.getValue()));
                        break;
                    case "minSessionTimeout":
                        configCmdData.setMinSessionTimeout(Long.valueOf(elem.getValue()));
                        break;
                    case "maxSessionTimeout":
                        configCmdData.setMaxSessionTimeout(Long.valueOf(elem.getValue()));
                        break;
                    case "serverId":
                        configCmdData.setServerId(Integer.valueOf(elem.getValue()));
                        break;
                    case "initLimit":
                        configCmdData.setInitLimit(elem.getValue());
                        break;
                    case "syncLimit":
                        configCmdData.setSyncLimit(Long.valueOf(elem.getValue()));
                        break;
                    case "electionAlg":
                        configCmdData.setElectionAlg(Long.valueOf(elem.getValue()));
                        break;
                    case "electionPort":
                        configCmdData.setElectionPort(Long.valueOf(elem.getValue()));
                        break;
                    case "quorumPort":
                        configCmdData.setQuorumPort(Long.valueOf(elem.getValue()));
                        break;
                    case "peerType":
                        configCmdData.setPeerType(Long.valueOf(elem.getValue()));
                        break;
                    default:
                        LOGGER.warn(
                                "class=ConfigCmdDataParser||method=parseAndInitData||name={}||value={}||msg=data not parsed!",
                                elem.getKey(), elem.getValue()
                        );
                }
            } catch (Exception e) {
                LOGGER.error(
                        "class=ConfigCmdDataParser||method=parseAndInitData||clusterPhyId={}||host={}||port={}||name={}||value={}||errMsg=exception!",
                        clusterPhyId, host, port, elem.getKey(), elem.getValue(), e
                );
            }
        });

        return configCmdData;
    }
}

@@ -0,0 +1,10 @@
package com.xiaojukeji.know.streaming.km.common.bean.entity.zookeeper.fourletterword.parser;

/**
 * Parser for four-letter-word command results
 */
public interface FourLetterWordDataParser<T> {
    String getCmd();

    T parseAndInitData(Long clusterPhyId, String host, int port, String cmdData);
}

@@ -0,0 +1,117 @@
package com.xiaojukeji.know.streaming.km.common.bean.entity.zookeeper.fourletterword.parser;

import com.didiglobal.logi.log.ILog;
import com.didiglobal.logi.log.LogFactory;
import com.xiaojukeji.know.streaming.km.common.bean.entity.zookeeper.fourletterword.MonitorCmdData;
import com.xiaojukeji.know.streaming.km.common.utils.zookeeper.FourLetterWordUtil;
import lombok.Data;

import java.util.HashMap;
import java.util.Map;

/**
 * Parses the output of the ZooKeeper "mntr" four-letter-word command, for example:
 *
 * zk_version 3.4.6-1569965, built on 02/20/2014 09:09 GMT
 * zk_avg_latency 0
 * zk_max_latency 399
 * zk_min_latency 0
 * zk_packets_received 234857
 * zk_packets_sent 234860
 * zk_num_alive_connections 4
 * zk_outstanding_requests 0
 * zk_server_state follower
 * zk_znode_count 35566
 * zk_watch_count 39
 * zk_ephemerals_count 10
 * zk_approximate_data_size 3356708
 * zk_open_file_descriptor_count 35
 * zk_max_file_descriptor_count 819200
 */
@Data
public class MonitorCmdDataParser implements FourLetterWordDataParser<MonitorCmdData> {
    private static final ILog LOGGER = LogFactory.getLog(MonitorCmdDataParser.class);

    @Override
    public String getCmd() {
        return FourLetterWordUtil.MonitorCmd;
    }

    @Override
    public MonitorCmdData parseAndInitData(Long clusterPhyId, String host, int port, String cmdData) {
        Map<String, String> dataMap = new HashMap<>();
        for (String elem : cmdData.split("\n")) {