Mirror of https://github.com/didi/KnowStreaming.git

Synced 2025-12-24 11:52:08 +08:00

Compare commits

81 Commits

| SHA1 |
|---|
| 0f15c773ef |
| 02b05fc7c8 |
| b16a7b9bff |
| e81c0f3040 |
| 462303fca0 |
| 4405703e42 |
| 23e398e121 |
| b17bb89d04 |
| 5590cebf8f |
| 1fa043f09d |
| 3bd0af1451 |
| 1545962745 |
| d032571681 |
| 33fb0acc7e |
| 1ec68a91e2 |
| a23c113a46 |
| 371ae2c0a5 |
| 8f8f6ffa27 |
| 475fe0d91f |
| 3d74e60d03 |
| 83ac83bb28 |
| 8478fb857c |
| 7074bdaa9f |
| 58164294cc |
| 7c0e9df156 |
| bd62212ecb |
| 2292039b42 |
| 73f8da8d5a |
| e51dbe0ca7 |
| 482a375e31 |
| 689c5ce455 |
| 734a020ecc |
| 44d537f78c |
| b4c60eb910 |
| e120b32375 |
| de54966d30 |
| 39a6302c18 |
| 05ceeea4b0 |
| 9f8e3373a8 |
| 42521cbae4 |
| b23c35197e |
| 70f28d9ac4 |
| 912d73d98a |
| 2a720fce6f |
| e4534c359f |
| b91bec15f2 |
| 4d5e4d0f00 |
| 82c9b6481e |
| f195847c68 |
| 5beb13b17e |
| fc604a9eaf |
| 1afb633b4f |
| 34d9f9174b |
| 89405fe003 |
| b9ea3865a5 |
| b5bd643814 |
| 1f353e10ce |
| 055ba9bda6 |
| ec19c3b4dd |
| 37aa526404 |
| 86c1faa40f |
| 8dcf15d0f9 |
| 4f317b76fa |
| 61672637dc |
| ecf6e8f664 |
| 4115975320 |
| 21904a8609 |
| b2091e9aed |
| f2cb5bd77c |
| 19c61c52e6 |
| b327359183 |
| 9e9bb72e17 |
| ad131f5a2c |
| 39cccd568e |
| 19b7f6ad8c |
| 41c000cf47 |
| 1b8ea61e87 |
| 4538593236 |
| 8086ef355b |
| 60d038fe46 |
| ff0f4463be |

1

.gitignore

vendored

@@ -111,3 +111,4 @@ dist/

dist/*
kafka-manager-web/src/main/resources/templates/
.DS_Store
kafka-manager-console/package-lock.json

41

Dockerfile

Normal file

@@ -0,0 +1,41 @@

ARG MAVEN_VERSION=3.8.4-openjdk-8-slim
ARG JAVA_VERSION=8-jdk-alpine3.9
FROM maven:${MAVEN_VERSION} AS builder
ARG CONSOLE_ENABLE=true

WORKDIR /opt
COPY . .
COPY distribution/conf/settings.xml /root/.m2/settings.xml

# whether to build console
RUN set -eux; \
    if [ $CONSOLE_ENABLE = 'false' ]; then \
        sed -i "/kafka-manager-console/d" pom.xml; \
    fi \
    && mvn -Dmaven.test.skip=true clean install -U

FROM openjdk:${JAVA_VERSION}

RUN sed -i 's/dl-cdn.alpinelinux.org/mirrors.aliyun.com/g' /etc/apk/repositories && apk add --no-cache tini

ENV TZ=Asia/Shanghai
ENV AGENT_HOME=/opt/agent/

COPY --from=builder /opt/kafka-manager-web/target/kafka-manager.jar /opt
COPY --from=builder /opt/container/dockerfiles/docker-depends/config.yaml $AGENT_HOME
COPY --from=builder /opt/container/dockerfiles/docker-depends/jmx_prometheus_javaagent-0.15.0.jar $AGENT_HOME
COPY --from=builder /opt/distribution/conf/application-docker.yml /opt

WORKDIR /opt

ENV JAVA_AGENT="-javaagent:$AGENT_HOME/jmx_prometheus_javaagent-0.15.0.jar=9999:$AGENT_HOME/config.yaml"
ENV JAVA_HEAP_OPTS="-Xms1024M -Xmx1024M -Xmn100M "
ENV JAVA_OPTS="-verbose:gc \
    -XX:MaxMetaspaceSize=256M -XX:+DisableExplicitGC -XX:+UseStringDeduplication \
    -XX:+UseG1GC -XX:+HeapDumpOnOutOfMemoryError -XX:-UseContainerSupport"

EXPOSE 8080 9999

ENTRYPOINT ["tini", "--"]

CMD [ "sh", "-c", "java -jar $JAVA_AGENT $JAVA_HEAP_OPTS $JAVA_OPTS kafka-manager.jar --spring.config.location=application-docker.yml"]
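The `CONSOLE_ENABLE` build argument decides whether the front-end module is built. When it is `'false'`, the `RUN` step deletes every `pom.xml` line that mentions `kafka-manager-console` before Maven runs. The sketch below reproduces only that filtering step on a local file, so you can preview its effect without building an image. It uses GNU `sed -i`. The helper name `strip_console_module` and the sample POM fragment are illustrative assumptions and are not part of the repository.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper that mirrors the Dockerfile's RUN step: when the second
# argument is 'false', delete every line of the given POM that mentions
# kafka-manager-console (GNU sed in-place edit).
strip_console_module() {
  local pom="$1"
  local console_enable="${2:-true}"  # same default as ARG CONSOLE_ENABLE=true
  if [ "$console_enable" = 'false' ]; then
    sed -i "/kafka-manager-console/d" "$pom"
  fi
}

# Demo on a throwaway POM fragment (sample content, not the project's pom.xml).
demo_pom="$(mktemp)"
cat > "$demo_pom" <<'EOF'
<modules>
    <module>kafka-manager-common</module>
    <module>kafka-manager-console</module>
    <module>kafka-manager-web</module>
</modules>
EOF

strip_console_module "$demo_pom" false
cat "$demo_pom"
```

For a real image build, the argument is passed as `docker build --build-arg CONSOLE_ENABLE=false .`. Note that the `sed` pattern removes any line containing the module name, not just the `<module>` entry.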

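10

README.md

@@ -5,7 +5,7 @@

**One-stop `Apache Kafka` cluster metrics monitoring and operations management platform**

-`Since LogiKM was open-sourced it has attracted a lot of attention. To align the open-source project more closely with the future direction of Apache Kafka, and after careful consideration by the project team, we plan to rebrand it as Know Streaming around May 2022, when the project name and logo will be updated together. Thank you for your continued support, and stay tuned!`
+`Since LogiKM was open-sourced it has attracted a lot of attention. To align the open-source project more closely with the future direction of Apache Kafka, and after careful consideration by the project team, we plan to rebrand it as Know Streaming in the second half of 2022, when the project name and logo will be updated together. Thank you for your continued support, and stay tuned!`

This README describes the user base and product positioning of Didi Logi-KafkaManager. It also gives a demo address where you can quickly try the full workflow of Kafka cluster metrics monitoring and operations management.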

@@ -69,7 +69,7 @@

- [The most complete Kafka knowledge map](https://www.szzdzhp.com/kafka/)
- [Didi LogiKM getting-started article series for new users --石臻臻](https://www.szzdzhp.com/categories/LogIKM/)
- [Kafka in practice (15): a study of LogiKM, Didi's open-source Kafka management platform --A叶子叶来](https://blog.csdn.net/yezonggang/article/details/113106244)

- [Installing Didi LogiKM on Rainbond, a cloud-native application management platform](https://www.rainbond.com/docs/opensource-app/logikm/?channel=logikm)

## 3 Didi Logi open-source user community groups

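@@ -77,7 +77,7 @@

If you would like to discuss Kafka, ES, and other middleware/big-data technologies with experienced engineers, please join our WeChat group.

-Join via WeChat: add <font color=red>mike_zhangliang</font> or <font color=red>danke-xie</font> on WeChat with the note "Logi加群", or follow the official account 云原生可观测性 (Cloud-Native Observability) and reply "Logi加群"
+Join via WeChat: add <font color=red>mike_zhangliang</font> or <font color=red>danke-x</font> on WeChat with the note "Logi加群", or follow the official account 云原生可观测性 (Cloud-Native Observability) and reply "Logi加群"

## 4 Knowledge Planet (知识星球)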

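@@ -92,7 +92,7 @@

<font color=red size=5><b>【Kafka Chinese Community】</b></font>
</center>

-Here you can meet Kafka experts from major internet companies and nearly 2,000 Kafka enthusiasts, share knowledge, and keep up with the latest industry news in real time. We look forward to having you join: https://z.didi.cn/5gSF9
+Here you can meet Kafka experts from major internet companies and 3,000+ Kafka enthusiasts, share knowledge, and keep up with the latest industry news in real time. We look forward to having you join: https://z.didi.cn/5gSF9

<font color=red size=5>Every question gets an answer! </font>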

@@ -104,7 +104,7 @@ PS: When asking a question, please describe it fully in one go and include details about your environment

### 5.1 Core internal members

-`iceyuhui`, `liuyaguang`, `limengmonty`, `zhangliangmike`, `xiepeng`, `nullhuangyiming`, `zengqiao`, `eilenexuzhe`, `huangjiaweihjw`, `zhaoyinrui`, `marzkonglingxu`, `joysunchao`, `石臻臻`
+`iceyuhui`, `liuyaguang`, `limengmonty`, `zhangliangmike`, `zhaoqingrong`, `xiepeng`, `nullhuangyiming`, `zengqiao`, `eilenexuzhe`, `huangjiaweihjw`, `zhaoyinrui`, `marzkonglingxu`, `joysunchao`, `石臻臻`

### 5.2 External contributors

@@ -1,29 +0,0 @@

FROM openjdk:16-jdk-alpine3.13

LABEL author="fengxsong"
RUN sed -i 's/dl-cdn.alpinelinux.org/mirrors.aliyun.com/g' /etc/apk/repositories && apk add --no-cache tini

ENV VERSION 2.4.2
WORKDIR /opt/

ENV AGENT_HOME /opt/agent/
COPY docker-depends/config.yaml $AGENT_HOME
COPY docker-depends/jmx_prometheus_javaagent-0.15.0.jar $AGENT_HOME

ENV JAVA_AGENT="-javaagent:$AGENT_HOME/jmx_prometheus_javaagent-0.15.0.jar=9999:$AGENT_HOME/config.yaml"
ENV JAVA_HEAP_OPTS="-Xms1024M -Xmx1024M -Xmn100M "
ENV JAVA_OPTS="-verbose:gc \
    -XX:MaxMetaspaceSize=256M -XX:+DisableExplicitGC -XX:+UseStringDeduplication \
    -XX:+UseG1GC -XX:+HeapDumpOnOutOfMemoryError -XX:-UseContainerSupport"

RUN wget https://github.com/didi/Logi-KafkaManager/releases/download/v${VERSION}/kafka-manager-${VERSION}.tar.gz && \
    tar xvf kafka-manager-${VERSION}.tar.gz && \
    mv kafka-manager-${VERSION}/kafka-manager.jar /opt/app.jar && \
    mv kafka-manager-${VERSION}/application.yml /opt/application.yml && \
    rm -rf kafka-manager-${VERSION}*

EXPOSE 8080 9999

ENTRYPOINT ["tini", "--"]

CMD [ "sh", "-c", "java -jar $JAVA_AGENT $JAVA_HEAP_OPTS $JAVA_OPTS app.jar --spring.config.location=application.yml"]

13

container/dockerfiles/mysql/Dockerfile

Normal file

@@ -0,0 +1,13 @@

FROM mysql:5.7.37

COPY mysqld.cnf /etc/mysql/mysql.conf.d/
ENV TZ=Asia/Shanghai
ENV MYSQL_ROOT_PASSWORD=root

RUN apt-get update \
    && apt -y install wget \
    && wget https://ghproxy.com/https://raw.githubusercontent.com/didi/LogiKM/master/distribution/conf/create_mysql_table.sql -O /docker-entrypoint-initdb.d/create_mysql_table.sql

EXPOSE 3306

VOLUME ["/var/lib/mysql"]

24

container/dockerfiles/mysql/mysqld.cnf

Normal file

@@ -0,0 +1,24 @@

[client]
default-character-set = utf8

[mysqld]
character_set_server = utf8
pid-file = /var/run/mysqld/mysqld.pid
socket = /var/run/mysqld/mysqld.sock
datadir = /var/lib/mysql
symbolic-links=0

max_allowed_packet = 10M
sort_buffer_size = 1M
read_rnd_buffer_size = 2M
max_connections=2000

lower_case_table_names=1
character-set-server=utf8

max_allowed_packet = 1G
sql_mode=STRICT_TRANS_TABLES,NO_ZERO_IN_DATE,NO_ZERO_DATE,ERROR_FOR_DIVISION_BY_ZERO,NO_AUTO_CREATE_USER,NO_ENGINE_SUBSTITUTION
group_concat_max_len = 102400
default-time-zone = '+08:00'
[mysql]
default-character-set = utf8

28

distribution/conf/application-docker.yml

Normal file

@@ -0,0 +1,28 @@

## kafka-manager configuration file; settings in this file override the defaults.
## The settings below are essentially the defaults from the application.yml inside the jar;
## you only need to keep the settings you change and can delete the rest, e.g. configure only MySQL.


server:
  port: 8080
  tomcat:
    accept-count: 1000
    max-connections: 10000
    max-threads: 800
    min-spare-threads: 100

spring:
  application:
    name: kafkamanager
    version: 2.6.0
  profiles:
    active: dev
  datasource:
    kafka-manager:
      jdbc-url: jdbc:mysql://${LOGI_MYSQL_HOST:mysql}:${LOGI_MYSQL_PORT:3306}/${LOGI_MYSQL_DATABASE:logi_kafka_manager}?characterEncoding=UTF-8&useSSL=false&serverTimezone=GMT%2B8
      username: ${LOGI_MYSQL_USER:root}
      password: ${LOGI_MYSQL_PASSWORD:root}
      driver-class-name: com.mysql.cj.jdbc.Driver
  main:
    allow-bean-definition-overriding: true
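The `jdbc-url`, `username` and `password` values use Spring placeholders of the form `${NAME:default}`. This means the MySQL connection can be changed through the `LOGI_MYSQL_*` environment variables without editing the file. The shell function below is a rough sketch for previewing the URL a given environment would produce. The function name is hypothetical. Using `${NAME-default}` (fall back only when the variable is unset) is our approximation of Spring's placeholder resolution, not its actual implementation.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: print the JDBC URL that application-docker.yml would
# resolve to, reusing the file's variable names and default values.
# ${VAR-default} falls back only when VAR is unset, approximating Spring's
# ${VAR:default} placeholder (a variable set to an empty string stays empty).
effective_jdbc_url() {
  local host="${LOGI_MYSQL_HOST-mysql}"
  local port="${LOGI_MYSQL_PORT-3306}"
  local db="${LOGI_MYSQL_DATABASE-logi_kafka_manager}"
  printf 'jdbc:mysql://%s:%s/%s?characterEncoding=UTF-8&useSSL=false&serverTimezone=GMT%%2B8\n' \
    "$host" "$port" "$db"
}

# Defaults (assuming no LOGI_MYSQL_* variables are set in this shell)
effective_jdbc_url
# Override only the host and port, as `docker run -e ...` would
LOGI_MYSQL_HOST=10.0.0.12 LOGI_MYSQL_PORT=3307 effective_jdbc_url
```

For example, starting the container with `docker run -e LOGI_MYSQL_HOST=10.0.0.12 ...` would be expected to point the application at `10.0.0.12:3306` instead of the default `mysql` host.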

@@ -15,7 +15,6 @@ server:

spring:
  application:
    name: kafkamanager
    version: 2.6.0
  profiles:
    active: dev
  datasource:

@@ -15,7 +15,6 @@ server:

spring:
  application:
    name: kafkamanager
    version: 2.6.0
  profiles:
    active: dev
  datasource:

@@ -592,3 +592,66 @@ CREATE TABLE `work_order` (

  `gmt_modify` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP COMMENT '修改时间',
  PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8 COMMENT='工单表';


ALTER TABLE `topic_connections` ADD COLUMN `client_id` VARCHAR(1024) NOT NULL DEFAULT '' COMMENT '客户端ID' AFTER `client_version`;


create table ha_active_standby_relation
(
    id bigint unsigned auto_increment comment 'id'
        primary key,
    active_cluster_phy_id bigint default -1 not null comment '主集群ID',
    active_res_name varchar(192) collate utf8_bin default '' not null comment '主资源名称',
    standby_cluster_phy_id bigint default -1 not null comment '备集群ID',
    standby_res_name varchar(192) collate utf8_bin default '' not null comment '备资源名称',
    res_type int default -1 not null comment '资源类型',
    status int default -1 not null comment '关系状态',
    unique_field varchar(1024) default '' not null comment '唯一字段',
    create_time timestamp default CURRENT_TIMESTAMP not null comment '创建时间',
    modify_time timestamp default CURRENT_TIMESTAMP not null on update CURRENT_TIMESTAMP comment '修改时间',
    kafka_status int default 0 null comment '高可用配置是否完全建立 1:Kafka上该主备关系正常,0:Kafka上该主备关系异常',
    constraint uniq_unique_field
        unique (unique_field)
)
    comment 'HA主备关系表' charset = utf8;

create index idx_type_active
    on ha_active_standby_relation (res_type, active_cluster_phy_id);

create index idx_type_standby
    on ha_active_standby_relation (res_type, standby_cluster_phy_id);

create table ha_active_standby_switch_job
(
    id bigint unsigned auto_increment comment 'id'
        primary key,
    active_cluster_phy_id bigint default -1 not null comment '主集群ID',
    standby_cluster_phy_id bigint default -1 not null comment '备集群ID',
    job_status int default -1 not null comment '任务状态',
    operator varchar(256) default '' not null comment '操作人',
    create_time timestamp default CURRENT_TIMESTAMP not null comment '创建时间',
    modify_time timestamp default CURRENT_TIMESTAMP not null on update CURRENT_TIMESTAMP comment '修改时间',
    type int default 5 not null comment '1:topic 2:实例 3:逻辑集群 4:物理集群',
    active_business_id varchar(100) default '-1' not null comment '主业务id(topicName,实例id,逻辑集群id,物理集群id)',
    standby_business_id varchar(100) default '-1' not null comment '备业务id(topicName,实例id,逻辑集群id,物理集群id)'
)
    comment 'HA主备关系切换-子任务表' charset = utf8;


create table ha_active_standby_switch_sub_job
(
    id bigint unsigned auto_increment comment 'id'
        primary key,
    job_id bigint default -1 not null comment '任务ID',
    active_cluster_phy_id bigint default -1 not null comment '主集群ID',
    active_res_name varchar(192) collate utf8_bin default '' not null comment '主资源名称',
    standby_cluster_phy_id bigint default -1 not null comment '备集群ID',
    standby_res_name varchar(192) collate utf8_bin default '' not null comment '备资源名称',
    res_type int default -1 not null comment '资源类型',
    job_status int default -1 not null comment '任务状态',
    extend_data text null comment '扩展数据',
    create_time timestamp default CURRENT_TIMESTAMP not null comment '创建时间',
    modify_time timestamp default CURRENT_TIMESTAMP not null on update CURRENT_TIMESTAMP comment '修改时间'
)
    comment 'HA主备关系-切换任务表' charset = utf8;

10

distribution/conf/settings.xml

Normal file

@@ -0,0 +1,10 @@

<settings>
    <mirrors>
        <mirror>
            <id>aliyunmaven</id>
            <mirrorOf>*</mirrorOf>
            <name>阿里云公共仓库</name>
            <url>https://maven.aliyun.com/repository/public</url>
        </mirror>
    </mirrors>
</settings>

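47

docs/dev_guide/LogiKM单元测试和集成测试.md

Normal file

@@ -0,0 +1,47 @@

---

**One-stop `Apache Kafka` cluster metrics monitoring and operations management platform**

---

# LogiKM Unit Testing and Integration Testing

## 1. Unit testing
### 1.1 Introduction to unit testing
Unit testing, also called module testing, checks the correctness of the smallest unit of software design: the program module.
Its purpose is to verify that each program unit correctly implements the functionality, performance, interfaces, and design constraints specified for the module in the detailed design,
and to uncover errors inside each module. Unit test cases are designed from the program's internal structure.
Multiple modules can be unit-tested independently and in parallel.

### 1.2 LogiKM unit testing approach
LogiKM unit tests mainly exercise Service-layer methods. They enumerate various combinations of method arguments
and check whether the returned results match expectations. The unit-test base class is annotated with @SpringBootTest, so the Spring container is started every time a unit test case runs.

### 1.3 Notes on LogiKM unit tests
1. Unit test cases live in the test packages under kafka-manager-core and kafka-manager-extends.
2. The configuration, including the database used when running unit tests, is in resources/application.yml.
3. To skip the test cases when compiling and packaging the project, add the -DskipTests flag, e.g. `mvn -DskipTests package`.

## 2. Integration testing
### 2.1 Introduction to integration testing
Integration testing, also called assembly testing, is a form of black-box testing. Building on unit testing, it usually tests all program modules in an orderly, incremental way.
It verifies the interfaces between program units or components as they are progressively integrated into components, or a whole system, that meet the high-level design requirements.

### 2.2 LogiKM integration testing approach
LogiKM integration tests mainly work by sending HTTP requests to Controller-layer endpoints.
Each test case simulates a user operation by sending HTTP requests to the endpoints and checks whether the result meets expectations.
When running integration tests locally, the @SpringBootTest annotation is not needed, so the container does not have to be started for every test run.

### 2.3 Notes on LogiKM integration tests
1. Integration test cases live in the test package of kafka-manager-web.
2. Some endpoints require logging in before they accept HTTP requests, which is inconvenient. Login can be bypassed; see the tutorial at docs -> user_guide -> call_api_bypass_login.
3. Integration test configuration, such as the cluster address and ZooKeeper address, is in resources/integrationTest-settings.properties.
4. To run the integration tests, first start the LogiKM project locally.
5. To skip the test cases when compiling and packaging the project, add the -DskipTests flag, e.g. `mvn -DskipTests package`.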

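@@ -29,6 +29,7 @@

- `JMX` misconfiguration: see `2. Solution`.
- A firewall or network restriction: from another machine with network access, try `telnet` to check whether it can connect.
- Username/password authentication is required: see `3. Solution: authenticated JMX`.
- LogiKM and Kafka run on different machines, and Kafka's JMX port does not accept connections from other machines: see `4. Solution`.

Example error log: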

@@ -99,3 +100,8 @@ SQL的例子:

|

||||

```sql

|

||||

UPDATE cluster SET jmx_properties='{ "maxConn": 10, "username": "xxxxx", "password": "xxxx", "openSSL": false }' where id={xxx};

|

||||

```

|

||||

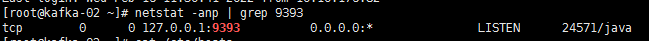

### 4、解决方法 —— 不允许其他机器访问

|

||||

|

||||

|

||||

该图中的127.0.0.1表明该端口只允许本机访问.

|

||||

在cdh中可以点击配置->搜索jmx->寻找broker_java_opts 修改com.sun.management.jmxremote.host和java.rmi.server.hostname为本机ip

|

||||

|

||||
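Outside CDH, a broker started with `bin/kafka-server-start.sh` takes its JMX flags from the `KAFKA_JMX_OPTS` environment variable, which `kafka-run-class.sh` reads. `JMX_PORT` adds the port. The sketch below builds a value that exposes JMX on the broker's routable IP instead of 127.0.0.1. The helper name and the IP are placeholders. Authentication and SSL are turned off only to keep the example short; see section 3 for authenticated JMX. Also check that your JDK honours `com.sun.management.jmxremote.host`, since older JDKs may ignore it.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: JMX flags that expose a broker's JMX endpoint on its
# routable IP instead of 127.0.0.1. Setting KAFKA_JMX_OPTS replaces Kafka's
# default JMX flags, so the base -Dcom.sun.management.jmxremote flags are repeated.
# authenticate=false / ssl=false only keep the sketch short.
build_kafka_jmx_opts() {
  local broker_ip="$1"
  printf '%s' "-Dcom.sun.management.jmxremote \
-Dcom.sun.management.jmxremote.authenticate=false \
-Dcom.sun.management.jmxremote.ssl=false \
-Dcom.sun.management.jmxremote.host=${broker_ip} \
-Djava.rmi.server.hostname=${broker_ip}"
}

# Example: broker whose LAN address is 10.0.0.5 (placeholder), JMX on port 9999.
export KAFKA_JMX_OPTS="$(build_kafka_jmx_opts 10.0.0.5)"
export JMX_PORT=9999
echo "KAFKA_JMX_OPTS=$KAFKA_JMX_OPTS"
# then start the broker as usual: bin/kafka-server-start.sh config/server.properties
```

The broker must be restarted for the new flags to take effect. After that, LogiKM on another machine should be able to reach `10.0.0.5:9999`, provided no firewall blocks the port.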

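97

docs/didi/Kafka主备切换流程简介.md

Normal file

@@ -0,0 +1,97 @@

---

**One-stop `Apache Kafka` cluster metrics monitoring and operations management platform**

---

# Introduction to the Kafka Active/Standby Switchover Process

## 1. Client read/write flow

Before describing the Kafka active/standby switchover process, let's first look at the general flow by which clients read and write through our in-house gateway.

As shown in the figure above, the client read/write flow is roughly as follows:
1. Client: requests Topic metadata from the gateway;
2. Gateway: finds that the KafkaUser used by the client belongs to cluster A, so it forwards the Topic metadata request to cluster A;
3. Cluster A: receives the Topic metadata request from the gateway, processes it, and returns the result to the gateway;
4. Gateway: returns cluster A's result to the client;
5. Client: learns from the Topic metadata that the Topic actually resides in cluster A, then connects to cluster A to produce and consume.

**Note: the client is the native Kafka client, without any customization.**

---

## 2. Active/standby switchover process

Having covered the gateway-based client read/write flow, let's look at how to perform an active/standby switchover in the high-availability (active/standby) edition of Kafka.

### 2.1 Overall flow

There are quite a few figures; in summary:
1. First, block client reads and writes;
2. Wait for data synchronization between the active and standby clusters to complete;
3. Reverse the direction of data synchronization between the active and standby clusters;
4. Update the configuration to steer clients to the standby cluster for reads and writes.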
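Step 2 comes down to checking that every partition has the same end offset on both clusters. That comparison is only meaningful once step 1 has stopped client writes. The sketch below assumes you have captured one `topic:partition:offset` line per partition from each cluster, for example with `kafka-run-class.sh kafka.tools.GetOffsetShell --broker-list <brokers> --topic <topic> --time -1`. That tool's flags vary across Kafka versions. The function name and sample offsets are hypothetical.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper for step 2: compare end offsets captured from the primary
# and standby clusters. Each input file holds one "topic:partition:offset" line
# per partition. Sorting first makes the comparison independent of line order.
offsets_in_sync() {
  local primary="$1" standby="$2"
  if diff <(sort "$primary") <(sort "$standby") >/dev/null; then
    echo "in sync"
    return 0
  fi
  echo "not in sync:"
  # '<' lines exist only on the primary side, '>' lines only on the standby side
  diff <(sort "$primary") <(sort "$standby") | grep '^[<>]' || true
  return 1
}

# Demo with made-up offsets for a two-partition Topic named "orders".
primary_offsets="$(mktemp)"
standby_offsets="$(mktemp)"
printf 'orders:0:1200\norders:1:980\n' > "$primary_offsets"
printf 'orders:1:980\norders:0:1200\n' > "$standby_offsets"
offsets_in_sync "$primary_offsets" "$standby_offsets"
```

If the function reports differences, wait and capture the offsets again. This check does not look at FetcherLag, which the detailed steps also mention.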

### 2.2 Detailed operations

With the overall flow in mind, let's look at the actual commands.

```bash
# Placeholders: PRIMARY_A_ZK, STANDBY_B_ZK and GATEWAY_ZK are the ZooKeeper addresses of
# primary cluster A, standby cluster B and the gateway; KAFKA_USER is the KafkaUser used by
# the client; TOPIC_NAME is the Topic name; STANDBY_B_CLUSTER_ID is the cluster ID of standby cluster B.

# 1. Block user production and consumption
bin/kafka-configs.sh --zookeeper ${PRIMARY_A_ZK} --entity-type users --entity-name ${KAFKA_USER} --add-config didi.ha.active.cluster=None --alter

# 2. Wait for FetcherLag and offsets to catch up
# No action needed; just check that the offsets of the active and standby Topics match.

# 3. Remove the configuration under which standby cluster B replicates data from primary cluster A
bin/kafka-configs.sh --zookeeper ${STANDBY_B_ZK} --entity-type ha-topics --entity-name ${TOPIC_NAME} --delete-config didi.ha.remote.cluster --alter

# 4. Add a configuration under which primary cluster A replicates data from standby cluster B
bin/kafka-configs.sh --zookeeper ${PRIMARY_A_ZK} --entity-type ha-topics --entity-name ${TOPIC_NAME} --add-config didi.ha.remote.cluster=${STANDBY_B_CLUSTER_ID} --alter

# 5. On primary cluster A, standby cluster B and the gateway, change the cluster mapped to the kafkaUser so that requests are routed to the standby cluster
bin/kafka-configs.sh --zookeeper ${PRIMARY_A_ZK} --entity-type users --entity-name ${KAFKA_USER} --add-config didi.ha.active.cluster=${STANDBY_B_CLUSTER_ID} --alter

bin/kafka-configs.sh --zookeeper ${STANDBY_B_ZK} --entity-type users --entity-name ${KAFKA_USER} --add-config didi.ha.active.cluster=${STANDBY_B_CLUSTER_ID} --alter

bin/kafka-configs.sh --zookeeper ${GATEWAY_ZK} --entity-type users --entity-name ${KAFKA_USER} --add-config didi.ha.active.cluster=${STANDBY_B_CLUSTER_ID} --alter
```
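Steps 3 and 4 are issued once per Topic, so a KafkaUser that owns several Topics needs them repeated. The function below is an illustrative sketch that only prints the commands from the block above, in order, for one KafkaUser and a list of Topics, so they can be reviewed before anything is run. It neither executes them nor performs the step 2 check. The helper name, argument order and sample values are assumptions.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical dry-run helper: prints (never executes) the switchover commands
# for one KafkaUser. Steps 3 and 4 are per Topic, so they are emitted once for
# every Topic name passed after the first five arguments.
print_switch_commands() {
  local primary_zk="$1" standby_zk="$2" gateway_zk="$3"
  local kafka_user="$4" standby_cluster_id="$5"
  shift 5
  local cfg="bin/kafka-configs.sh"
  local topic zk

  # 1. block production and consumption for the user
  echo "$cfg --zookeeper $primary_zk --entity-type users --entity-name $kafka_user --add-config didi.ha.active.cluster=None --alter"
  # 2. placeholder line: step 2 is a manual offset check
  echo "# wait until active/standby offsets match before continuing"
  for topic in "$@"; do
    # 3. stop standby B replicating from primary A
    echo "$cfg --zookeeper $standby_zk --entity-type ha-topics --entity-name $topic --delete-config didi.ha.remote.cluster --alter"
    # 4. make primary A replicate from standby B
    echo "$cfg --zookeeper $primary_zk --entity-type ha-topics --entity-name $topic --add-config didi.ha.remote.cluster=$standby_cluster_id --alter"
  done
  # 5. point the user at cluster B on A, B and the gateway
  for zk in "$primary_zk" "$standby_zk" "$gateway_zk"; do
    echo "$cfg --zookeeper $zk --entity-type users --entity-name $kafka_user --add-config didi.ha.active.cluster=$standby_cluster_id --alter"
  done
}

# Example with placeholder addresses, user "app_user" and standby cluster ID 2
print_switch_commands zk-a:2181/kafka zk-b:2181/kafka zk-gw:2181/gateway app_user 2 orders payments
```

The printed `#` line marks where the manual offset check from step 2 belongs.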

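---

## 3. FAQ

**Question 1: Is there anything to watch out for in practice?**

1. Switchover is performed per KafkaUser, so we recommend that **different services use different KafkaUsers**. This helps not only with switchover but also with access control.
2. When establishing active/standby relationships, if the primary Topics hold a large amount of data, establish the relationships gradually. Setting up too many Topics at once forces a large amount of data to be replicated from the primary cluster and puts it under pressure.

**Question 2: After a consumer client restarts, will it start consuming from the earliest or the latest data?**

No. The active and standby clusters replicate the `__consumer_offsets` Topic to each other, so the client's consumption progress on the primary cluster is also available on the standby cluster. When the client consumes from the standby cluster, it resumes from the position it last committed on the primary cluster.

**Question 3: For programs that maintain their own consumption progress, such as Flink jobs, can a switchover cause data loss or duplicate consumption?**

Not if Flink manages its consumption progress properly. Synchronization between the active and standby clusters works like replica synchronization within a single cluster: the standby cluster replicates the primary cluster's Topic data together with its offset information, so the offsets Flink has stored remain valid on the standby cluster.

**Question 4: Can switchover be done without restarting the clients?**

Phase 2 of high-availability Kafka, which is nearly finished, will support this. Stay tuned.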

BIN

docs/didi/assets/Kafka主备切换流程.png

Normal file

Binary file not shown.

Size after: 254 KiB

BIN

docs/didi/assets/Kafka基于网关的生产消费流程.png

Normal file

Binary file not shown.

Size after: 53 KiB

367

docs/didi/drawio/Kafka主备切换流程.drawio

Normal file

367

docs/didi/drawio/Kafka主备切换流程.drawio

Normal file

@@ -0,0 +1,367 @@

|

||||

<mxfile host="65bd71144e">

|

||||

<diagram id="bhaMuW99Q1BzDTtcfRXp" name="Page-1">

|

||||

<mxGraphModel dx="1384" dy="785" grid="1" gridSize="10" guides="1" tooltips="1" connect="1" arrows="1" fold="1" page="1" pageScale="1" pageWidth="1169" pageHeight="827" math="0" shadow="0">

|

||||

<root>

|

||||

<mxCell id="0"/>

|

||||

<mxCell id="1" parent="0"/>

|

||||

<mxCell id="81" value="1、主集群拒绝客户端的写入" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#FFFFFF;strokeColor=#d79b00;labelPosition=center;verticalLabelPosition=top;align=center;verticalAlign=bottom;fontSize=16;" vertex="1" parent="1">

|

||||

<mxGeometry x="630" y="70" width="490" height="380" as="geometry"/>

|

||||

</mxCell>

|

||||

<mxCell id="79" value="主备高可用集群稳定时的状态" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#FFFFFF;strokeColor=#d79b00;labelPosition=center;verticalLabelPosition=top;align=center;verticalAlign=bottom;fontSize=16;" vertex="1" parent="1">

|

||||

<mxGeometry x="30" y="70" width="490" height="380" as="geometry"/>

|

||||

</mxCell>

|

||||

<mxCell id="27" value="Kafka——主集群A" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#cdeb8b;strokeColor=#36393d;labelPosition=center;verticalLabelPosition=top;align=center;verticalAlign=bottom;" parent="1" vertex="1">

|

||||

<mxGeometry x="200" y="100" width="160" height="80" as="geometry"/>

|

||||

</mxCell>

|

||||

<mxCell id="32" value="Zookeeper" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#dae8fc;strokeColor=#6c8ebf;" parent="1" vertex="1">

|

||||

<mxGeometry x="210" y="110" width="140" height="20" as="geometry"/>

|

||||

</mxCell>

|

||||

<mxCell id="33" value="Kafka-Brokers" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#ffe6cc;strokeColor=#d79b00;" parent="1" vertex="1">

|

||||

<mxGeometry x="210" y="150" width="140" height="20" as="geometry"/>

|

||||

</mxCell>

|

||||

<mxCell id="36" value="Kafka网关" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#cdeb8b;strokeColor=#36393d;labelPosition=center;verticalLabelPosition=bottom;align=center;verticalAlign=top;" parent="1" vertex="1">

|

||||

<mxGeometry x="200" y="220" width="160" height="80" as="geometry"/>

|

||||

</mxCell>

|

||||

<mxCell id="37" value="Zookeeper" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#dae8fc;strokeColor=#6c8ebf;" parent="1" vertex="1">

|

||||

<mxGeometry x="210" y="230" width="140" height="20" as="geometry"/>

|

||||

</mxCell>

|

||||

<mxCell id="38" value="Kafka-Gateways" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#ffe6cc;strokeColor=#d79b00;" parent="1" vertex="1">

|

||||

<mxGeometry x="210" y="270" width="140" height="20" as="geometry"/>

|

||||

</mxCell>

|

||||

<mxCell id="63" style="edgeStyle=orthogonalEdgeStyle;html=1;exitX=1;exitY=0.5;exitDx=0;exitDy=0;entryX=1;entryY=0.5;entryDx=0;entryDy=0;" edge="1" parent="1" source="39" target="27">

|

||||

<mxGeometry relative="1" as="geometry">

|

||||

<Array as="points">

|

||||

<mxPoint x="440" y="380"/>

|

||||

<mxPoint x="440" y="140"/>

|

||||

</Array>

|

||||

</mxGeometry>

|

||||

</mxCell>

|

||||

<mxCell id="64" value="备集群B 不断向 主集群A <br>发送Fetch请求,<br>从而同步主集群A的数据" style="edgeLabel;html=1;align=center;verticalAlign=middle;resizable=0;points=[];" vertex="1" connectable="0" parent="63">

|

||||

<mxGeometry x="-0.05" y="-4" relative="1" as="geometry">

|

||||

<mxPoint x="6" y="-10" as="offset"/>

|

||||

</mxGeometry>

|

||||

</mxCell>

|

||||

<mxCell id="39" value="Kafka——备集群B" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#cdeb8b;strokeColor=#36393d;labelPosition=center;verticalLabelPosition=bottom;align=center;verticalAlign=top;" parent="1" vertex="1">

|

||||

<mxGeometry x="200" y="340" width="160" height="80" as="geometry"/>

|

||||

</mxCell>

|

||||

<mxCell id="40" value="Zookeeper" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#dae8fc;strokeColor=#6c8ebf;" parent="1" vertex="1">

|

||||

<mxGeometry x="210" y="350" width="140" height="20" as="geometry"/>

|

||||

</mxCell>

|

||||

<mxCell id="41" value="Kafka-Brokers" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#ffe6cc;strokeColor=#d79b00;" parent="1" vertex="1">

|

||||

<mxGeometry x="210" y="390" width="140" height="20" as="geometry"/>

|

||||

</mxCell>

|

||||

<mxCell id="57" style="html=1;entryX=0;entryY=0.5;entryDx=0;entryDy=0;strokeColor=default;startArrow=classic;startFill=1;" parent="1" source="42" target="27" edge="1">

|

||||

<mxGeometry relative="1" as="geometry"/>

|

||||

</mxCell>

|

||||

<mxCell id="58" value="对主集群进行读写" style="edgeLabel;html=1;align=center;verticalAlign=middle;resizable=0;points=[];" parent="57" vertex="1" connectable="0">

|

||||

<mxGeometry x="-0.0724" y="1" relative="1" as="geometry">

|

||||

<mxPoint x="-6" as="offset"/>

|

||||

</mxGeometry>

|

||||

</mxCell>

|

||||

<mxCell id="42" value="Kafka-Client" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#ffe6cc;strokeColor=#d79b00;" parent="1" vertex="1">

|

||||

<mxGeometry x="40" y="240" width="120" height="40" as="geometry"/>

|

||||

</mxCell>

|

||||

<mxCell id="65" value="Kafka——主集群A" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#cdeb8b;strokeColor=#36393d;labelPosition=center;verticalLabelPosition=top;align=center;verticalAlign=bottom;" vertex="1" parent="1">

|

||||

<mxGeometry x="800" y="100" width="160" height="80" as="geometry"/>

|

||||

</mxCell>

|

||||

<mxCell id="66" value="Zookeeper(修改ZK)" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#FF3333;strokeColor=#6c8ebf;" vertex="1" parent="1">

|

||||

<mxGeometry x="810" y="110" width="140" height="20" as="geometry"/>

|

||||

</mxCell>

|

||||

<mxCell id="67" value="Kafka-Brokers" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#ffe6cc;strokeColor=#d79b00;" vertex="1" parent="1">

|

||||

<mxGeometry x="810" y="150" width="140" height="20" as="geometry"/>

|

||||

</mxCell>

|

||||

<mxCell id="68" value="Kafka网关" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#cdeb8b;strokeColor=#36393d;labelPosition=center;verticalLabelPosition=bottom;align=center;verticalAlign=top;" vertex="1" parent="1">

|

||||

<mxGeometry x="800" y="220" width="160" height="80" as="geometry"/>

|

||||

</mxCell>

|

||||

<mxCell id="69" value="Zookeeper" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#dae8fc;strokeColor=#6c8ebf;" vertex="1" parent="1">

|

||||

<mxGeometry x="810" y="230" width="140" height="20" as="geometry"/>

|

||||

</mxCell>

|

||||

<mxCell id="70" value="Kafka-Gateways" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#ffe6cc;strokeColor=#d79b00;" vertex="1" parent="1">

|

||||

<mxGeometry x="810" y="270" width="140" height="20" as="geometry"/>

|

||||

</mxCell>

|

||||

<mxCell id="71" style="edgeStyle=orthogonalEdgeStyle;html=1;exitX=1;exitY=0.5;exitDx=0;exitDy=0;entryX=1;entryY=0.5;entryDx=0;entryDy=0;" edge="1" parent="1" source="73" target="65">

|

||||

<mxGeometry relative="1" as="geometry">

|

||||

<Array as="points">

|

||||

<mxPoint x="1040" y="380"/>

|

||||

<mxPoint x="1040" y="140"/>

|

||||

</Array>

|

||||

</mxGeometry>

|

||||

</mxCell>

|

||||

<mxCell id="72" value="备集群B 不断向 主集群A<br>发送Fetch请求,<br>从而同步主集群A的数据" style="edgeLabel;html=1;align=center;verticalAlign=middle;resizable=0;points=[];" vertex="1" connectable="0" parent="71">

|

||||

<mxGeometry x="-0.05" y="-4" relative="1" as="geometry">

|

||||

<mxPoint x="6" y="-10" as="offset"/>

|

||||

</mxGeometry>

|

||||

</mxCell>

|

||||

<mxCell id="73" value="Kafka——备集群B" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#cdeb8b;strokeColor=#36393d;labelPosition=center;verticalLabelPosition=bottom;align=center;verticalAlign=top;" vertex="1" parent="1">

|

||||

<mxGeometry x="800" y="340" width="160" height="80" as="geometry"/>

|

||||

</mxCell>

|

||||

<mxCell id="74" value="Zookeeper" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#dae8fc;strokeColor=#6c8ebf;" vertex="1" parent="1">

|

||||

<mxGeometry x="810" y="350" width="140" height="20" as="geometry"/>

|

||||

</mxCell>

|

||||

<mxCell id="75" value="Kafka-Brokers" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#ffe6cc;strokeColor=#d79b00;" vertex="1" parent="1">

|

||||

<mxGeometry x="810" y="390" width="140" height="20" as="geometry"/>

|

||||

</mxCell>

|

||||

<mxCell id="76" style="html=1;entryX=0;entryY=0.5;entryDx=0;entryDy=0;strokeColor=#FF3333;startArrow=none;startFill=0;strokeWidth=3;endArrow=none;endFill=0;dashed=1;" edge="1" parent="1" source="78" target="65">

|

||||

<mxGeometry relative="1" as="geometry"/>

|

||||

</mxCell>

|

||||

<mxCell id="77" value="对主集群进行读写会出现失败" style="edgeLabel;html=1;align=center;verticalAlign=middle;resizable=0;points=[];fontColor=#FF3333;fontSize=13;" vertex="1" connectable="0" parent="76">

|

||||

<mxGeometry x="-0.0724" y="1" relative="1" as="geometry">

|

||||

<mxPoint x="-6" as="offset"/>

|

||||

</mxGeometry>

|

||||

</mxCell>

|

||||

<mxCell id="78" value="Kafka-Client" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#ffe6cc;strokeColor=#d79b00;" vertex="1" parent="1">

|

||||

<mxGeometry x="640" y="240" width="120" height="40" as="geometry"/>

|

||||

</mxCell>

|

||||

<mxCell id="82" value="2. Wait for the primary/standby sync to catch up, to avoid data loss" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#FFFFFF;strokeColor=#d79b00;labelPosition=center;verticalLabelPosition=top;align=center;verticalAlign=bottom;fontSize=16;" vertex="1" parent="1">
<mxGeometry x="630" y="590" width="490" height="380" as="geometry"/>
</mxCell>
<mxCell id="83" value="Kafka Primary Cluster A" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#cdeb8b;strokeColor=#36393d;labelPosition=center;verticalLabelPosition=top;align=center;verticalAlign=bottom;" vertex="1" parent="1">
<mxGeometry x="800" y="620" width="160" height="80" as="geometry"/>
</mxCell>
<mxCell id="84" value="Zookeeper" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#dae8fc;strokeColor=#6c8ebf;" vertex="1" parent="1">
<mxGeometry x="810" y="630" width="140" height="20" as="geometry"/>
</mxCell>
<mxCell id="85" value="Kafka-Brokers" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#ffe6cc;strokeColor=#d79b00;" vertex="1" parent="1">
<mxGeometry x="810" y="670" width="140" height="20" as="geometry"/>
</mxCell>
<mxCell id="86" value="Kafka Gateway" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#cdeb8b;strokeColor=#36393d;labelPosition=center;verticalLabelPosition=bottom;align=center;verticalAlign=top;" vertex="1" parent="1">
<mxGeometry x="800" y="740" width="160" height="80" as="geometry"/>
</mxCell>
<mxCell id="87" value="Zookeeper" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#dae8fc;strokeColor=#6c8ebf;" vertex="1" parent="1">
<mxGeometry x="810" y="750" width="140" height="20" as="geometry"/>
</mxCell>
<mxCell id="88" value="Kafka-Gateways" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#ffe6cc;strokeColor=#d79b00;" vertex="1" parent="1">
<mxGeometry x="810" y="790" width="140" height="20" as="geometry"/>
</mxCell>
<mxCell id="89" style="edgeStyle=orthogonalEdgeStyle;html=1;exitX=1;exitY=0.5;exitDx=0;exitDy=0;entryX=1;entryY=0.5;entryDx=0;entryDy=0;" edge="1" parent="1" source="91" target="83">
<mxGeometry relative="1" as="geometry">
<Array as="points">
<mxPoint x="1040" y="900"/>
<mxPoint x="1040" y="660"/>
</Array>
</mxGeometry>
</mxCell>
<mxCell id="90" value="Standby cluster B continuously sends&lt;br&gt;Fetch requests to primary cluster A,&lt;br&gt;replicating the data of the&lt;br&gt;specified Topics from cluster A" style="edgeLabel;html=1;align=center;verticalAlign=middle;resizable=0;points=[];" vertex="1" connectable="0" parent="89">
<mxGeometry x="-0.05" y="-4" relative="1" as="geometry">
<mxPoint x="6" y="-10" as="offset"/>
</mxGeometry>
</mxCell>
<mxCell id="91" value="Kafka Standby Cluster B" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#cdeb8b;strokeColor=#36393d;labelPosition=center;verticalLabelPosition=bottom;align=center;verticalAlign=top;" vertex="1" parent="1">
<mxGeometry x="800" y="860" width="160" height="80" as="geometry"/>
</mxCell>
<mxCell id="92" value="Zookeeper" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#dae8fc;strokeColor=#6c8ebf;" vertex="1" parent="1">
<mxGeometry x="810" y="870" width="140" height="20" as="geometry"/>
</mxCell>
<mxCell id="93" value="Kafka-Brokers" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#ffe6cc;strokeColor=#d79b00;" vertex="1" parent="1">
<mxGeometry x="810" y="910" width="140" height="20" as="geometry"/>
</mxCell>
<mxCell id="94" style="html=1;entryX=0;entryY=0.5;entryDx=0;entryDy=0;strokeColor=#FF3333;startArrow=none;startFill=0;strokeWidth=3;endArrow=none;endFill=0;dashed=1;" edge="1" parent="1" source="96" target="83">
<mxGeometry relative="1" as="geometry"/>
</mxCell>
<mxCell id="95" value="Reads and writes to the primary cluster fail" style="edgeLabel;html=1;align=center;verticalAlign=middle;resizable=0;points=[];fontColor=#FF3333;fontSize=13;" vertex="1" connectable="0" parent="94">
<mxGeometry x="-0.0724" y="1" relative="1" as="geometry">
<mxPoint x="-6" as="offset"/>
</mxGeometry>
</mxCell>
<mxCell id="96" value="Kafka-Client" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#ffe6cc;strokeColor=#d79b00;" vertex="1" parent="1">
<mxGeometry x="640" y="760" width="120" height="40" as="geometry"/>
</mxCell>
<mxCell id="97" value="3. Reverse the Topic-level sync direction: primary cluster A now syncs data from standby cluster B" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#FFFFFF;strokeColor=#d79b00;labelPosition=center;verticalLabelPosition=top;align=center;verticalAlign=bottom;fontSize=16;" vertex="1" parent="1">
<mxGeometry x="30" y="590" width="490" height="380" as="geometry"/>
</mxCell>
<mxCell id="98" value="Kafka Primary Cluster A" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#cdeb8b;strokeColor=#36393d;labelPosition=center;verticalLabelPosition=top;align=center;verticalAlign=bottom;" vertex="1" parent="1">
<mxGeometry x="200" y="620" width="160" height="80" as="geometry"/>
</mxCell>
<mxCell id="99" value="Zookeeper" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#dae8fc;strokeColor=#6c8ebf;" vertex="1" parent="1">
<mxGeometry x="210" y="630" width="140" height="20" as="geometry"/>
</mxCell>
<mxCell id="100" value="Kafka-Brokers" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#ffe6cc;strokeColor=#d79b00;" vertex="1" parent="1">
<mxGeometry x="210" y="670" width="140" height="20" as="geometry"/>
</mxCell>
<mxCell id="101" value="Kafka Gateway" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#cdeb8b;strokeColor=#36393d;labelPosition=center;verticalLabelPosition=bottom;align=center;verticalAlign=top;" vertex="1" parent="1">
<mxGeometry x="200" y="740" width="160" height="80" as="geometry"/>
</mxCell>
<mxCell id="102" value="Zookeeper" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#dae8fc;strokeColor=#6c8ebf;" vertex="1" parent="1">
<mxGeometry x="210" y="750" width="140" height="20" as="geometry"/>
</mxCell>
<mxCell id="103" value="Kafka-Gateways" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#ffe6cc;strokeColor=#d79b00;" vertex="1" parent="1">
<mxGeometry x="210" y="790" width="140" height="20" as="geometry"/>
</mxCell>
<mxCell id="104" style="edgeStyle=orthogonalEdgeStyle;html=1;exitX=1;exitY=0.5;exitDx=0;exitDy=0;entryX=1;entryY=0.5;entryDx=0;entryDy=0;endArrow=none;endFill=0;strokeColor=#FF3333;strokeWidth=1;startArrow=classic;startFill=1;" edge="1" parent="1" source="106" target="98">
<mxGeometry relative="1" as="geometry">
<Array as="points">
<mxPoint x="440" y="900"/>
<mxPoint x="440" y="660"/>
</Array>
</mxGeometry>
</mxCell>
<mxCell id="105" value="&lt;span style=&quot;font-size: 11px;&quot;&gt;Primary cluster A continuously sends&lt;/span&gt;&lt;br style=&quot;font-size: 11px;&quot;&gt;&lt;span style=&quot;font-size: 11px;&quot;&gt;Fetch requests to standby cluster B,&lt;/span&gt;&lt;br style=&quot;font-size: 11px;&quot;&gt;&lt;span style=&quot;font-size: 11px;&quot;&gt;replicating the data of the&lt;br&gt;specified Topics from cluster B&lt;/span&gt;" style="edgeLabel;html=1;align=center;verticalAlign=middle;resizable=0;points=[];fontColor=#FF3333;fontSize=13;" vertex="1" connectable="0" parent="104">
<mxGeometry x="-0.05" y="-4" relative="1" as="geometry">
<mxPoint x="-4" y="-10" as="offset"/>
</mxGeometry>
</mxCell>
<mxCell id="106" value="Kafka Standby Cluster B" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#cdeb8b;strokeColor=#36393d;labelPosition=center;verticalLabelPosition=bottom;align=center;verticalAlign=top;" vertex="1" parent="1">
<mxGeometry x="200" y="860" width="160" height="80" as="geometry"/>
</mxCell>
<mxCell id="107" value="Zookeeper" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#dae8fc;strokeColor=#6c8ebf;" vertex="1" parent="1">
<mxGeometry x="210" y="870" width="140" height="20" as="geometry"/>
</mxCell>
<mxCell id="108" value="Kafka-Brokers" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#ffe6cc;strokeColor=#d79b00;" vertex="1" parent="1">
<mxGeometry x="210" y="910" width="140" height="20" as="geometry"/>
</mxCell>
<mxCell id="109" style="html=1;entryX=0;entryY=0.5;entryDx=0;entryDy=0;strokeColor=#FF3333;startArrow=none;startFill=0;strokeWidth=3;endArrow=none;endFill=0;dashed=1;" edge="1" parent="1" source="111" target="98">
<mxGeometry relative="1" as="geometry"/>
</mxCell>
<mxCell id="110" value="Reads and writes to the primary cluster fail" style="edgeLabel;html=1;align=center;verticalAlign=middle;resizable=0;points=[];fontColor=#FF3333;fontSize=13;" vertex="1" connectable="0" parent="109">
<mxGeometry x="-0.0724" y="1" relative="1" as="geometry">
<mxPoint x="-6" as="offset"/>
</mxGeometry>
</mxCell>
<mxCell id="111" value="Kafka-Client" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#ffe6cc;strokeColor=#d79b00;" vertex="1" parent="1">
<mxGeometry x="40" y="760" width="120" height="40" as="geometry"/>
</mxCell>
<mxCell id="127" value="4. Update ZK so that the KafkaUser used by the client maps to standby cluster B" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#FFFFFF;strokeColor=#d79b00;labelPosition=center;verticalLabelPosition=top;align=center;verticalAlign=bottom;fontSize=16;" vertex="1" parent="1">
<mxGeometry x="30" y="1110" width="490" height="380" as="geometry"/>
</mxCell>
<mxCell id="128" value="Kafka Primary Cluster A" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#cdeb8b;strokeColor=#36393d;labelPosition=center;verticalLabelPosition=top;align=center;verticalAlign=bottom;" vertex="1" parent="1">
<mxGeometry x="200" y="1140" width="160" height="80" as="geometry"/>
</mxCell>
<mxCell id="130" value="Kafka-Brokers" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#ffe6cc;strokeColor=#d79b00;" vertex="1" parent="1">
<mxGeometry x="210" y="1190" width="140" height="20" as="geometry"/>
</mxCell>
<mxCell id="131" value="Kafka Gateway" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#cdeb8b;strokeColor=#36393d;labelPosition=center;verticalLabelPosition=bottom;align=center;verticalAlign=top;" vertex="1" parent="1">
<mxGeometry x="200" y="1260" width="160" height="80" as="geometry"/>
</mxCell>
<mxCell id="132" value="Zookeeper (ZK updated)" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#FF3333;strokeColor=#6c8ebf;" vertex="1" parent="1">
<mxGeometry x="210" y="1270" width="140" height="20" as="geometry"/>
</mxCell>
<mxCell id="133" value="Kafka-Gateways" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#ffe6cc;strokeColor=#d79b00;" vertex="1" parent="1">
<mxGeometry x="210" y="1310" width="140" height="20" as="geometry"/>
</mxCell>
<mxCell id="134" style="edgeStyle=orthogonalEdgeStyle;html=1;exitX=1;exitY=0.5;exitDx=0;exitDy=0;entryX=1;entryY=0.5;entryDx=0;entryDy=0;endArrow=none;endFill=0;strokeColor=#000000;strokeWidth=1;startArrow=classic;startFill=1;" edge="1" parent="1" source="136" target="128">
<mxGeometry relative="1" as="geometry">
<Array as="points">
<mxPoint x="440" y="1420"/>
<mxPoint x="440" y="1180"/>
</Array>
</mxGeometry>
</mxCell>
<mxCell id="135" value="&lt;span style=&quot;color: rgb(0 , 0 , 0) ; font-size: 11px&quot;&gt;Primary cluster A continuously sends&lt;/span&gt;&lt;br style=&quot;color: rgb(0 , 0 , 0) ; font-size: 11px&quot;&gt;&lt;span style=&quot;color: rgb(0 , 0 , 0) ; font-size: 11px&quot;&gt;Fetch requests to standby cluster B,&lt;/span&gt;&lt;br style=&quot;color: rgb(0 , 0 , 0) ; font-size: 11px&quot;&gt;&lt;span style=&quot;color: rgb(0 , 0 , 0) ; font-size: 11px&quot;&gt;replicating the data of the&lt;br&gt;specified Topics from cluster B&lt;/span&gt;" style="edgeLabel;html=1;align=center;verticalAlign=middle;resizable=0;points=[];fontColor=#FF3333;fontSize=13;" vertex="1" connectable="0" parent="134">
<mxGeometry x="-0.05" y="-4" relative="1" as="geometry">
<mxPoint x="-4" y="-10" as="offset"/>
</mxGeometry>
</mxCell>
<mxCell id="136" value="Kafka Standby Cluster B" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#cdeb8b;strokeColor=#36393d;labelPosition=center;verticalLabelPosition=bottom;align=center;verticalAlign=top;" vertex="1" parent="1">
<mxGeometry x="200" y="1380" width="160" height="80" as="geometry"/>
</mxCell>
<mxCell id="138" value="Kafka-Brokers" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#ffe6cc;strokeColor=#d79b00;" vertex="1" parent="1">
<mxGeometry x="210" y="1430" width="140" height="20" as="geometry"/>
</mxCell>
<mxCell id="139" style="html=1;entryX=0;entryY=0.5;entryDx=0;entryDy=0;strokeColor=#FF3333;startArrow=none;startFill=0;strokeWidth=3;endArrow=none;endFill=0;dashed=1;" edge="1" parent="1" source="141" target="128">
<mxGeometry relative="1" as="geometry"/>
</mxCell>
<mxCell id="140" value="Reads and writes to the primary cluster fail" style="edgeLabel;html=1;align=center;verticalAlign=middle;resizable=0;points=[];fontColor=#FF3333;fontSize=13;" vertex="1" connectable="0" parent="139">
<mxGeometry x="-0.0724" y="1" relative="1" as="geometry">
<mxPoint x="-6" as="offset"/>
</mxGeometry>
</mxCell>
<mxCell id="141" value="Kafka-Client" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#ffe6cc;strokeColor=#d79b00;" vertex="1" parent="1">
<mxGeometry x="40" y="1280" width="120" height="40" as="geometry"/>
</mxCell>
<mxCell id="142" value="5. Restart the client; the gateway routes its requests to cluster B" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#FFFFFF;strokeColor=#d79b00;labelPosition=center;verticalLabelPosition=top;align=center;verticalAlign=bottom;fontSize=16;" vertex="1" parent="1">
<mxGeometry x="630" y="1110" width="490" height="380" as="geometry"/>
</mxCell>
<mxCell id="143" value="Kafka Primary Cluster A" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#cdeb8b;strokeColor=#36393d;labelPosition=center;verticalLabelPosition=top;align=center;verticalAlign=bottom;" vertex="1" parent="1">
<mxGeometry x="800" y="1140" width="160" height="80" as="geometry"/>
</mxCell>
<mxCell id="144" value="Zookeeper" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#dae8fc;strokeColor=#6c8ebf;" vertex="1" parent="1">
<mxGeometry x="810" y="1150" width="140" height="20" as="geometry"/>
</mxCell>
<mxCell id="145" value="Kafka-Brokers" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#ffe6cc;strokeColor=#d79b00;" vertex="1" parent="1">
<mxGeometry x="810" y="1190" width="140" height="20" as="geometry"/>
</mxCell>
<mxCell id="146" value="Kafka Gateway" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#cdeb8b;strokeColor=#36393d;labelPosition=center;verticalLabelPosition=bottom;align=center;verticalAlign=top;" vertex="1" parent="1">
<mxGeometry x="800" y="1260" width="160" height="80" as="geometry"/>
</mxCell>
<mxCell id="148" value="Kafka-Gateways" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#ffe6cc;strokeColor=#d79b00;" vertex="1" parent="1">
<mxGeometry x="810" y="1310" width="140" height="20" as="geometry"/>
</mxCell>
<mxCell id="149" style="edgeStyle=orthogonalEdgeStyle;html=1;exitX=1;exitY=0.5;exitDx=0;exitDy=0;entryX=1;entryY=0.5;entryDx=0;entryDy=0;endArrow=none;endFill=0;strokeColor=#000000;strokeWidth=1;startArrow=classic;startFill=1;" edge="1" parent="1" source="151" target="143">
<mxGeometry relative="1" as="geometry">
<Array as="points">
<mxPoint x="1040" y="1420"/>
<mxPoint x="1040" y="1180"/>
</Array>
</mxGeometry>
</mxCell>
<mxCell id="150" value="&lt;span style=&quot;color: rgb(0 , 0 , 0) ; font-size: 11px&quot;&gt;Primary cluster A continuously sends&lt;/span&gt;&lt;br style=&quot;color: rgb(0 , 0 , 0) ; font-size: 11px&quot;&gt;&lt;span style=&quot;color: rgb(0 , 0 , 0) ; font-size: 11px&quot;&gt;Fetch requests to standby cluster B,&lt;/span&gt;&lt;br style=&quot;color: rgb(0 , 0 , 0) ; font-size: 11px&quot;&gt;&lt;span style=&quot;color: rgb(0 , 0 , 0) ; font-size: 11px&quot;&gt;replicating the data of the&lt;br&gt;specified Topics from cluster B&lt;/span&gt;" style="edgeLabel;html=1;align=center;verticalAlign=middle;resizable=0;points=[];fontColor=#FF3333;fontSize=13;" vertex="1" connectable="0" parent="149">
<mxGeometry x="-0.05" y="-4" relative="1" as="geometry">
<mxPoint x="-4" y="-10" as="offset"/>
</mxGeometry>
</mxCell>
<mxCell id="151" value="Kafka Standby Cluster B" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#cdeb8b;strokeColor=#36393d;labelPosition=center;verticalLabelPosition=bottom;align=center;verticalAlign=top;" vertex="1" parent="1">
<mxGeometry x="800" y="1380" width="160" height="80" as="geometry"/>
</mxCell>
<mxCell id="152" value="Zookeeper" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#dae8fc;strokeColor=#6c8ebf;" vertex="1" parent="1">
<mxGeometry x="810" y="1390" width="140" height="20" as="geometry"/>
</mxCell>
<mxCell id="153" value="Kafka-Brokers" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#ffe6cc;strokeColor=#d79b00;" vertex="1" parent="1">
<mxGeometry x="810" y="1430" width="140" height="20" as="geometry"/>
</mxCell>
<mxCell id="156" value="Kafka-Client" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#ffe6cc;strokeColor=#d79b00;" vertex="1" parent="1">
<mxGeometry x="640" y="1280" width="120" height="40" as="geometry"/>
</mxCell>
<mxCell id="157" style="html=1;entryX=0;entryY=0.5;entryDx=0;entryDy=0;strokeColor=default;startArrow=classic;startFill=1;exitX=0.5;exitY=1;exitDx=0;exitDy=0;" edge="1" parent="1" source="156" target="151">
<mxGeometry relative="1" as="geometry">
<mxPoint x="529.9966666666667" y="1400" as="sourcePoint"/>
<mxPoint x="613.3299999999999" y="1300" as="targetPoint"/>
</mxGeometry>
</mxCell>
<mxCell id="158" value="Reads and writes go to cluster B" style="edgeLabel;html=1;align=center;verticalAlign=middle;resizable=0;points=[];" vertex="1" connectable="0" parent="157">
<mxGeometry x="-0.0724" y="1" relative="1" as="geometry">
<mxPoint x="-6" as="offset"/>
</mxGeometry>
</mxCell>
<mxCell id="159" value="Zookeeper (ZK updated)" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#FF3333;strokeColor=#6c8ebf;" vertex="1" parent="1">
<mxGeometry x="210" y="1150" width="140" height="20" as="geometry"/>
</mxCell>
<mxCell id="160" value="Zookeeper (ZK updated)" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#FF3333;strokeColor=#6c8ebf;" vertex="1" parent="1">
<mxGeometry x="210" y="1390" width="140" height="20" as="geometry"/>
</mxCell>
<mxCell id="161" value="Zookeeper" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#dae8fc;strokeColor=#6c8ebf;" vertex="1" parent="1">
<mxGeometry x="810" y="1270" width="140" height="20" as="geometry"/>
</mxCell>
<mxCell id="162" value="" style="shape=flexArrow;endArrow=classic;html=1;fontSize=13;fontColor=#FF3333;strokeColor=#000000;strokeWidth=1;fillColor=#9999FF;" edge="1" parent="1">
<mxGeometry width="50" height="50" relative="1" as="geometry">
<mxPoint x="550" y="259.5" as="sourcePoint"/>
<mxPoint x="600" y="259.5" as="targetPoint"/>
</mxGeometry>
</mxCell>
<mxCell id="163" value="" style="shape=flexArrow;endArrow=classic;html=1;fontSize=13;fontColor=#FF3333;strokeColor=#000000;strokeWidth=1;fillColor=#9999FF;" edge="1" parent="1">
<mxGeometry width="50" height="50" relative="1" as="geometry">
<mxPoint x="879.5" y="490" as="sourcePoint"/>
<mxPoint x="879.5" y="540" as="targetPoint"/>
</mxGeometry>
</mxCell>
<mxCell id="164" value="" style="shape=flexArrow;endArrow=classic;html=1;fontSize=13;fontColor=#FF3333;strokeColor=#000000;strokeWidth=1;fillColor=#9999FF;" edge="1" parent="1">
<mxGeometry width="50" height="50" relative="1" as="geometry">
<mxPoint x="274.5" y="1010" as="sourcePoint"/>
<mxPoint x="274.5" y="1060" as="targetPoint"/>
</mxGeometry>
</mxCell>
<mxCell id="165" value="" style="shape=flexArrow;endArrow=classic;html=1;fontSize=13;fontColor=#FF3333;strokeColor=#000000;strokeWidth=1;fillColor=#9999FF;" edge="1" parent="1">
<mxGeometry width="50" height="50" relative="1" as="geometry">
<mxPoint x="550" y="1309" as="sourcePoint"/>
<mxPoint x="600" y="1309" as="targetPoint"/>
</mxGeometry>
</mxCell>
<mxCell id="167" value="" style="shape=flexArrow;endArrow=classic;html=1;fontSize=13;fontColor=#FF3333;strokeColor=#000000;strokeWidth=1;fillColor=#9999FF;" edge="1" parent="1">
<mxGeometry width="50" height="50" relative="1" as="geometry">
<mxPoint x="606" y="779.5" as="sourcePoint"/>
<mxPoint x="550" y="779.5" as="targetPoint"/>
</mxGeometry>
</mxCell>
</root>
</mxGraphModel>
</diagram>
</mxfile>

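The switchover in the diagram above (step 2: wait until standby cluster B has caught up; step 4: repoint the client's KafkaUser to cluster B in ZK; step 5: restart the client) can be sketched as a small POSIX shell script. This is a hypothetical illustration, not KnowStreaming's implementation: the KafkaUser-to-cluster mapping that the gateway keeps in Zookeeper is modeled as a plain text file, the lag probe is assumed to be any command that prints how many messages B is behind A, and the function names `cluster_of`, `wait_for_sync` and `switch_cluster` are invented for this sketch. Step 3 (reversing the replication direction) is not modeled.

```shell
#!/bin/sh
# Hypothetical failover helpers. The gateway keeps the KafkaUser -> cluster
# mapping in Zookeeper; here it is modeled as a text file with one
# "kafka_user cluster" pair per line (assumption).
ROUTE_TABLE="${ROUTE_TABLE:-./gateway-routes.txt}"

# Print the cluster currently assigned to KafkaUser $1.
cluster_of() {
    awk -v u="$1" '$1 == u { print $2; exit }' "$ROUTE_TABLE"
}

# Step 2: poll a lag probe until it prints 0 (standby B has caught up).
# $1 = probe command, assumed to print an integer lag
# $2 = seconds between polls, $3 = maximum number of polls
wait_for_sync() {
    probe="$1"; interval="${2:-5}"; max_polls="${3:-60}"
    polls=0
    while [ "$polls" -lt "$max_polls" ]; do
        lag=$($probe)
        if [ "$lag" -eq 0 ] 2>/dev/null; then
            return 0
        fi
        polls=$((polls + 1))
        sleep "$interval"
    done
    return 1  # still lagging: switching now could lose data
}

# Step 4: repoint KafkaUser $1 at cluster $2 by rewriting its line,
# writing a temp file first so the table is replaced by a single mv.
switch_cluster() {
    tmp="$ROUTE_TABLE.tmp"
    awk -v u="$1" -v c="$2" '$1 == u { $2 = c } { print }' "$ROUTE_TABLE" > "$tmp" &&
        mv "$tmp" "$ROUTE_TABLE"
}
```

The ordering is the point of the sketch: `switch_cluster` should only run after `wait_for_sync` returns 0, otherwise messages not yet replicated from A to B could be lost, which is the risk step 2 of the diagram calls out.
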
95

docs/didi/drawio/Kafka基于网关的生产消费流程.drawio

Normal file

@@ -0,0 +1,95 @@

<mxfile host="65bd71144e">
<diagram id="bhaMuW99Q1BzDTtcfRXp" name="Page-1">
<mxGraphModel dx="1344" dy="785" grid="1" gridSize="10" guides="1" tooltips="1" connect="1" arrows="1" fold="1" page="1" pageScale="1" pageWidth="1169" pageHeight="827" math="0" shadow="0">
<root>
<mxCell id="0"/>
<mxCell id="1" parent="0"/>
<mxCell id="27" value="Kafka Cluster A" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#cdeb8b;strokeColor=#36393d;labelPosition=center;verticalLabelPosition=top;align=center;verticalAlign=bottom;" vertex="1" parent="1">
<mxGeometry x="320" y="40" width="160" height="80" as="geometry"/>
</mxCell>
<mxCell id="32" value="Zookeeper" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#dae8fc;strokeColor=#6c8ebf;" vertex="1" parent="1">
<mxGeometry x="330" y="50" width="140" height="20" as="geometry"/>
</mxCell>
<mxCell id="33" value="Kafka-Brokers" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#ffe6cc;strokeColor=#d79b00;" vertex="1" parent="1">
<mxGeometry x="330" y="90" width="140" height="20" as="geometry"/>
</mxCell>
<mxCell id="47" style="edgeStyle=orthogonalEdgeStyle;html=1;entryX=1;entryY=0.25;entryDx=0;entryDy=0;exitX=1;exitY=0.75;exitDx=0;exitDy=0;" edge="1" parent="1" source="36" target="27">
<mxGeometry relative="1" as="geometry">
<Array as="points">
<mxPoint x="560" y="260"/>
<mxPoint x="560" y="60"/>
</Array>
</mxGeometry>
</mxCell>
<mxCell id="51" value="2. The gateway sees that the KafkaUser belongs to cluster A&lt;br&gt;and forwards the request to cluster A" style="edgeLabel;html=1;align=center;verticalAlign=middle;resizable=0;points=[];" vertex="1" connectable="0" parent="47">
<mxGeometry x="-0.0444" y="-1" relative="1" as="geometry">
<mxPoint x="49" y="72" as="offset"/>
</mxGeometry>
</mxCell>
<mxCell id="55" style="edgeStyle=orthogonalEdgeStyle;html=1;exitX=0;exitY=0.5;exitDx=0;exitDy=0;entryX=1;entryY=0.5;entryDx=0;entryDy=0;" edge="1" parent="1" source="36" target="42">
<mxGeometry relative="1" as="geometry"/>
</mxCell>
<mxCell id="56" value="4. The gateway returns the Topic metadata" style="edgeLabel;html=1;align=center;verticalAlign=middle;resizable=0;points=[];" vertex="1" connectable="0" parent="55">
<mxGeometry x="0.2125" relative="1" as="geometry">
<mxPoint x="17" y="-10" as="offset"/>
</mxGeometry>
</mxCell>
<mxCell id="36" value="Kafka Gateway" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#cdeb8b;strokeColor=#36393d;labelPosition=center;verticalLabelPosition=bottom;align=center;verticalAlign=top;" vertex="1" parent="1">
<mxGeometry x="320" y="200" width="160" height="80" as="geometry"/>
</mxCell>
<mxCell id="37" value="Zookeeper" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#dae8fc;strokeColor=#6c8ebf;" vertex="1" parent="1">
<mxGeometry x="330" y="210" width="140" height="20" as="geometry"/>
</mxCell>
<mxCell id="38" value="Kafka-Gateways" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#ffe6cc;strokeColor=#d79b00;" vertex="1" parent="1">
<mxGeometry x="330" y="250" width="140" height="20" as="geometry"/>
</mxCell>
<mxCell id="39" value="Kafka Cluster B" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#cdeb8b;strokeColor=#36393d;labelPosition=center;verticalLabelPosition=bottom;align=center;verticalAlign=top;" vertex="1" parent="1">
<mxGeometry x="320" y="360" width="160" height="80" as="geometry"/>
</mxCell>
<mxCell id="40" value="Zookeeper" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#dae8fc;strokeColor=#6c8ebf;" vertex="1" parent="1">
<mxGeometry x="330" y="370" width="140" height="20" as="geometry"/>
</mxCell>
<mxCell id="41" value="Kafka-Brokers" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#ffe6cc;strokeColor=#d79b00;" vertex="1" parent="1">
<mxGeometry x="330" y="410" width="140" height="20" as="geometry"/>
</mxCell>
<mxCell id="57" style="html=1;entryX=0;entryY=0.5;entryDx=0;entryDy=0;strokeColor=default;startArrow=classic;startFill=1;" edge="1" parent="1" source="42" target="27">
<mxGeometry relative="1" as="geometry"/>
</mxCell>
<mxCell id="58" value="5. Using the Topic metadata,&lt;br&gt;the client produces to and consumes from cluster A directly" style="edgeLabel;html=1;align=center;verticalAlign=middle;resizable=0;points=[];" vertex="1" connectable="0" parent="57">
<mxGeometry x="-0.0724" y="1" relative="1" as="geometry">
<mxPoint x="-6" as="offset"/>
</mxGeometry>
</mxCell>
<mxCell id="42" value="Kafka-Client" style="rounded=0;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=1;fillColor=#ffe6cc;strokeColor=#d79b00;" vertex="1" parent="1">
<mxGeometry x="40" y="220" width="120" height="40" as="geometry"/>
</mxCell>
<mxCell id="48" style="html=1;entryX=0;entryY=0.75;entryDx=0;entryDy=0;exitX=0.5;exitY=1;exitDx=0;exitDy=0;edgeStyle=orthogonalEdgeStyle;" edge="1" parent="1" source="42" target="36">
<mxGeometry relative="1" as="geometry">
<mxPoint x="490" y="250" as="sourcePoint"/>
<mxPoint x="490" y="90" as="targetPoint"/>
</mxGeometry>
</mxCell>
<mxCell id="50" value="1. Request Topic metadata" style="edgeLabel;html=1;align=center;verticalAlign=middle;resizable=0;points=[];" vertex="1" connectable="0" parent="48">
<mxGeometry x="-0.3373" y="-1" relative="1" as="geometry">
<mxPoint x="17" y="7" as="offset"/>
</mxGeometry>
</mxCell>
<mxCell id="49" style="edgeStyle=orthogonalEdgeStyle;html=1;entryX=1;entryY=0.25;entryDx=0;entryDy=0;exitX=1;exitY=0.75;exitDx=0;exitDy=0;" edge="1" parent="1" source="27" target="36">
<mxGeometry relative="1" as="geometry">
<mxPoint x="640" y="60" as="sourcePoint"/>
<mxPoint x="490" y="70" as="targetPoint"/>
<Array as="points">
<mxPoint x="520" y="100"/>
<mxPoint x="520" y="220"/>
</Array>
</mxGeometry>
</mxCell>
<mxCell id="52" value="3. Cluster A returns the&lt;br&gt;Topic metadata to the gateway" style="edgeLabel;html=1;align=center;verticalAlign=middle;resizable=0;points=[];" vertex="1" connectable="0" parent="49">
<mxGeometry x="-0.03" y="-1" relative="1" as="geometry">
<mxPoint x="-19" y="3" as="offset"/>
</mxGeometry>
</mxCell>
</root>
</mxGraphModel>
</diagram>
</mxfile>

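In this diagram the gateway sits only on the metadata path: it maps the request's KafkaUser to a cluster (step 2), gets the Topic metadata from that cluster (step 3) and relays it (step 4), after which the client talks to cluster A's brokers directly (step 5). A minimal POSIX shell sketch of that routing decision follows. The `routes.txt` layout and the functions `route_for_user`, `fetch_metadata` and `handle_metadata_request` are hypothetical, and `fetch_metadata` is a stand-in that does not speak the Kafka protocol.

```shell
#!/bin/sh
# Hypothetical sketch of the gateway's routing in steps 1-4 of the diagram.
# Assumption: each line of $ROUTES is "kafka_user cluster_id bootstrap_servers".
ROUTES="${ROUTES:-./routes.txt}"

# Step 2: print the bootstrap servers of the cluster that owns KafkaUser $1;
# exit status is 1 if the user is unknown.
route_for_user() {
    awk -v u="$1" '$1 == u { print $3; found = 1; exit } END { exit !found }' "$ROUTES"
}

# Step 3 stand-in (assumption): a real gateway would send a Kafka Metadata
# request to the servers in $1; this only reports where it would go.
fetch_metadata() {
    echo "metadata for topic $2 from $1"
}

# Steps 1-4: the client asks the gateway about Topic $2 as KafkaUser $1;
# the gateway forwards to the owning cluster and relays the answer.
handle_metadata_request() {
    if ! bootstrap=$(route_for_user "$1"); then
        echo "unknown KafkaUser: $1" >&2
        return 1
    fi
    fetch_metadata "$bootstrap" "$2"
}
```

Because the client connects to the brokers directly once it has the metadata, changing a route only affects clients that ask the gateway again, which is presumably why the failover procedure restarts the client after updating ZK.
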
132

docs/install_guide/install_guide_docker_cn.md

Normal file

@@ -0,0 +1,132 @@

---

**A one-stop platform for `Apache Kafka` cluster metrics monitoring and operations management**

---

## Deploying Logikm with Docker

To set up Logikm quickly in your own environment, you can deploy it with Docker.

### Deploying MySQL

```shell
docker run --name mysql -p 3306:3306 -d registry.cn-hangzhou.aliyuncs.com/zqqq/logikm-mysql:5.7.37
```

For the optional environment variables, see the [documentation](https://hub.docker.com/_/mysql).

Default parameters:

* MYSQL_ROOT_PASSWORD: root

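On its first start, a MySQL container typically initializes its data directory before it accepts connections, so a script that starts Logikm right after `docker run` may need to wait for it. A minimal sketch, assuming the container name `mysql` and the default root password listed above; `mysqladmin ping` is a standard MySQL client command, while the `retry` helper is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: run a command until it succeeds, at most $1 attempts,
# sleeping $2 seconds between attempts. Returns 1 if every attempt failed.
retry() {
    max_attempts="$1"; pause="$2"; shift 2
    attempt=1
    until "$@"; do
        if [ "$attempt" -ge "$max_attempts" ]; then
            return 1
        fi
        attempt=$((attempt + 1))
        sleep "$pause"
    done
}

# Example (assumes the container started above is named "mysql" and uses the
# default root password "root"); -h 127.0.0.1 makes the check go over TCP:
#   retry 30 2 docker exec mysql mysqladmin ping -h 127.0.0.1 -uroot -proot --silent
```
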
### Deploying Logikm Allinone

> The frontend and backend are deployed together.

```shell