mirror of

https://github.com/didi/KnowStreaming.git

synced 2025-12-26 21:52:13 +08:00

Compare commits

214 Commits

v3.0.0-bet

...

v3.0.0-bet

| Author | SHA1 | Date | |

|---|---|---|---|

|

|

41637dc1e5 | ||

|

|

feac0a058f | ||

|

|

27eeac9fd4 | ||

|

|

a14db4b194 | ||

|

|

54ee271a47 | ||

|

|

a3a9be4f7f | ||

|

|

d4f0a832f3 | ||

|

|

7dc533372c | ||

|

|

1737d87713 | ||

|

|

dbb98dea11 | ||

|

|

802b382b36 | ||

|

|

fc82999d45 | ||

|

|

08aa000c07 | ||

|

|

39015b5100 | ||

|

|

0d635ad419 | ||

|

|

9133205915 | ||

|

|

725ac10c3d | ||

|

|

2b76358c8f | ||

|

|

833c360698 | ||

|

|

7da1e67b01 | ||

|

|

7eb86a47dd | ||

|

|

d67e383c28 | ||

|

|

8749d3e1f5 | ||

|

|

30fba21c48 | ||

|

|

d83d35aee9 | ||

|

|

1d3caeea7d | ||

|

|

fbfa0d2d2a | ||

|

|

e626b99090 | ||

|

|

203859b71b | ||

|

|

9a25c22f3a | ||

|

|

0a03f41a7c | ||

|

|

56191939c8 | ||

|

|

beb754aaaa | ||

|

|

f234f740ca | ||

|

|

e14679694c | ||

|

|

e06712397e | ||

|

|

b6c6df7ffc | ||

|

|

375c6f56c9 | ||

|

|

0bf85c97b5 | ||

|

|

630e582321 | ||

|

|

a89fe23bdd | ||

|

|

a7a5fa9a31 | ||

|

|

c73a7eee2f | ||

|

|

121f8468d5 | ||

|

|

7b0b6936e0 | ||

|

|

597ea04a96 | ||

|

|

f7f90aeaaa | ||

|

|

227479f695 | ||

|

|

6477fb3fe0 | ||

|

|

4223f4f3c4 | ||

|

|

7288874d72 | ||

|

|

68f76f2daf | ||

|

|

fe6ddebc49 | ||

|

|

12b5acd073 | ||

|

|

a6f1fe07b3 | ||

|

|

85e3f2a946 | ||

|

|

d4f416de14 | ||

|

|

0d9a6702c1 | ||

|

|

d11285cdbf | ||

|

|

5f1f33d2b9 | ||

|

|

474daf752d | ||

|

|

27d1b92690 | ||

|

|

65499443c2 | ||

|

|

993afa4c19 | ||

|

|

6515dd28aa | ||

|

|

028d891c32 | ||

|

|

0df55ec22d | ||

|

|

579f64774d | ||

|

|

792f8d939d | ||

|

|

e4fb02fcda | ||

|

|

0c14c641d0 | ||

|

|

dba671fd1e | ||

|

|

80d1693722 | ||

|

|

26014a11b2 | ||

|

|

848fddd55a | ||

|

|

97f5f05f1a | ||

|

|

25b82810f2 | ||

|

|

9b1e506fa7 | ||

|

|

7a42996e97 | ||

|

|

dbfcebcf67 | ||

|

|

37c3f69a28 | ||

|

|

5d412890b4 | ||

|

|

1e318a4c40 | ||

|

|

d4549176ec | ||

|

|

61efdf492f | ||

|

|

67ea4d44c8 | ||

|

|

fdae05a4aa | ||

|

|

5efb837ee8 | ||

|

|

584b626d93 | ||

|

|

de25a4ed8e | ||

|

|

2e852e5ca6 | ||

|

|

b11000715a | ||

|

|

b3f8b46f0f | ||

|

|

8d22a0664a | ||

|

|

20756a3453 | ||

|

|

c9b4d45a64 | ||

|

|

83f7f5468b | ||

|

|

59c042ad67 | ||

|

|

d550fc5068 | ||

|

|

6effba69a0 | ||

|

|

9b46956259 | ||

|

|

b5a4a732da | ||

|

|

487862367e | ||

|

|

5b63b9ce67 | ||

|

|

afbcd3e1df | ||

|

|

12b82c1395 | ||

|

|

863b765e0d | ||

|

|

731429c51c | ||

|

|

66f3bc61fe | ||

|

|

4efe35dd51 | ||

|

|

c92461ef93 | ||

|

|

405e6e0c1d | ||

|

|

0d227aef49 | ||

|

|

0e49002f42 | ||

|

|

2e016800e0 | ||

|

|

09f317b991 | ||

|

|

5a48cb1547 | ||

|

|

f632febf33 | ||

|

|

3c53467943 | ||

|

|

d358c0f4f7 | ||

|

|

de977a5b32 | ||

|

|

703d685d59 | ||

|

|

31a5f17408 | ||

|

|

c40ae3c455 | ||

|

|

b71a34279e | ||

|

|

8f8c0c4eda | ||

|

|

3a384f0e34 | ||

|

|

cf7bc11cbd | ||

|

|

be60ae8399 | ||

|

|

8e50d145d5 | ||

|

|

7a3d15525c | ||

|

|

64f32d8b24 | ||

|

|

949d6ba605 | ||

|

|

ceb8db09f4 | ||

|

|

ed05a0ebb8 | ||

|

|

a7cbb76655 | ||

|

|

93cbfa0b1f | ||

|

|

6120613a98 | ||

|

|

dbd00db159 | ||

|

|

befde952f5 | ||

|

|

1aa759e5be | ||

|

|

13354145fc | ||

|

|

2de27719c1 | ||

|

|

21db57b537 | ||

|

|

dfe8d09477 | ||

|

|

90dfa22c64 | ||

|

|

0f35427645 | ||

|

|

7909f60ff8 | ||

|

|

9a1a8a4c30 | ||

|

|

fa7ad64140 | ||

|

|

0b376bd69c | ||

|

|

8a0c23339d | ||

|

|

e7ab3aff16 | ||

|

|

d0948797b9 | ||

|

|

04a5e17451 | ||

|

|

47065c8042 | ||

|

|

488c778736 | ||

|

|

d10a7bcc75 | ||

|

|

afe44a2537 | ||

|

|

9eadafe850 | ||

|

|

dab3eefcc0 | ||

|

|

2b9a6b28d8 | ||

|

|

465f98ca2b | ||

|

|

a0312be4fd | ||

|

|

4a5161372b | ||

|

|

4c9921f752 | ||

|

|

6dd72d40ee | ||

|

|

db49c234bb | ||

|

|

4a9df0c4d9 | ||

|

|

461573c2ba | ||

|

|

291992753f | ||

|

|

fcefe7ac38 | ||

|

|

7da712fcff | ||

|

|

2fd8687624 | ||

|

|

639b1f8336 | ||

|

|

ab3b83e42a | ||

|

|

4818629c40 | ||

|

|

61784c860a | ||

|

|

d5667254f2 | ||

|

|

af2b93983f | ||

|

|

8281301cbd | ||

|

|

0043ab8371 | ||

|

|

500eaace82 | ||

|

|

28e8540c78 | ||

|

|

69adf682e2 | ||

|

|

69cd1ff6e1 | ||

|

|

415d67cc32 | ||

|

|

46a2fec79b | ||

|

|

560b322fca | ||

|

|

effe17ac85 | ||

|

|

7699acfc1b | ||

|

|

6e058240b3 | ||

|

|

f005c6bc44 | ||

|

|

7be462599f | ||

|

|

271ab432d9 | ||

|

|

4114777a4e | ||

|

|

9189a54442 | ||

|

|

b95ee762e3 | ||

|

|

9e3c4dc06b | ||

|

|

1891a3ac86 | ||

|

|

9ecdcac06d | ||

|

|

790cb6a2e1 | ||

|

|

4a98e5f025 | ||

|

|

507abc1d84 | ||

|

|

9b732fbbad | ||

|

|

220f1c6fc3 | ||

|

|

7a950c67b6 | ||

|

|

78f625dc8c | ||

|

|

211d26a3ed | ||

|

|

dce2bc6326 | ||

|

|

90e5d7f6f0 | ||

|

|

fc835e09c6 | ||

|

|

c6e782a637 | ||

|

|

1ddfbfc833 |

10

README.md

10

README.md

@@ -51,16 +51,16 @@

|

||||

- 无需侵入改造 `Apache Kafka` ,一键便能纳管 `0.10.x` ~ `3.x.x` 众多版本的Kafka,包括 `ZK` 或 `Raft` 运行模式的版本,同时在兼容架构上具备良好的扩展性,帮助您提升集群管理水平;

|

||||

|

||||

- 🌪️ **零成本、界面化**

|

||||

- 提炼高频 CLI 能力,设计合理的产品路径,提供清新美观的 GUI 界面,支持 Cluster、Broker、Topic、Group、Message、ACL 等组件 GUI 管理,普通用户5分钟即可上手;

|

||||

- 提炼高频 CLI 能力,设计合理的产品路径,提供清新美观的 GUI 界面,支持 Cluster、Broker、Zookeeper、Topic、ConsumerGroup、Message、ACL、Connect 等组件 GUI 管理,普通用户5分钟即可上手;

|

||||

|

||||

- 👏 **云原生、插件化**

|

||||

- 基于云原生构建,具备水平扩展能力,只需要增加节点即可获取更强的采集及对外服务能力,提供众多可热插拔的企业级特性,覆盖可观测性生态整合、资源治理、多活容灾等核心场景;

|

||||

|

||||

- 🚀 **专业能力**

|

||||

- 集群管理:支持集群一键纳管,健康分析、核心组件观测 等功能;

|

||||

- 集群管理:支持一键纳管,健康分析、核心组件观测 等功能;

|

||||

- 观测提升:多维度指标观测大盘、观测指标最佳实践 等功能;

|

||||

- 异常巡检:集群多维度健康巡检、集群多维度健康分 等功能;

|

||||

- 能力增强:Topic扩缩副本、Topic副本迁移 等功能;

|

||||

- 能力增强:集群负载均衡、Topic扩缩副本、Topic副本迁移 等功能;

|

||||

|

||||

|

||||

|

||||

@@ -133,3 +133,7 @@ PS: 提问请尽量把问题一次性描述清楚,并告知环境信息情况

|

||||

**`2、微信群`**

|

||||

|

||||

微信加群:添加`mike_zhangliang`、`PenceXie`的微信号备注KnowStreaming加群。

|

||||

|

||||

## Star History

|

||||

|

||||

[](https://star-history.com/#didi/KnowStreaming&Date)

|

||||

|

||||

@@ -1,6 +1,132 @@

|

||||

|

||||

|

||||

## v3.0.0-beta

|

||||

## v3.0.0-beta.3

|

||||

|

||||

**文档**

|

||||

- FAQ 补充权限识别失败问题的说明

|

||||

- 同步更新文档,保持与官网一致

|

||||

|

||||

|

||||

**Bug修复**

|

||||

- Offset 信息获取时,过滤掉无 Leader 的分区

|

||||

- 升级 oshi-core 版本至 5.6.1 版本,修复 Windows 系统获取系统指标失败问题

|

||||

- 修复 JMX 连接被关闭后,未进行重建的问题

|

||||

- 修复因 DB 中 Broker 信息不存在导致 TotalLogSize 指标获取时抛空指针问题

|

||||

- 修复 dml-logi.sql 中,SQL 注释错误的问题

|

||||

- 修复 startup.sh 中,识别操作系统类型错误的问题

|

||||

- 修复配置管理页面删除配置失败的问题

|

||||

- 修复系统管理应用文件引用路径

|

||||

- 修复 Topic Messages 详情提示信息点击跳转 404 的问题

|

||||

- 修复扩副本时,当前副本数不显示问题

|

||||

|

||||

|

||||

**体验优化**

|

||||

- Topic-Messages 页面,增加返回数据的排序以及按照Earliest/Latest的获取方式

|

||||

- 优化 GroupOffsetResetEnum 类名为 OffsetTypeEnum,使得类名含义更准确

|

||||

- 移动 KafkaZKDAO 类,及 Kafka Znode 实体类的位置,使得 Kafka Zookeeper DAO 更加内聚及便于识别

|

||||

- 后端补充 Overview 页面指标排序的功能

|

||||

- 前端 Webpack 配置优化

|

||||

- Cluster Overview 图表取消放大展示功能

|

||||

- 列表页增加手动刷新功能

|

||||

- 接入/编辑集群,优化 JMX-PORT,Version 信息的回显,优化JMX信息的展示

|

||||

- 提高登录页面图片展示清晰度

|

||||

- 部分样式和文案优化

|

||||

|

||||

---

|

||||

|

||||

## v3.0.0-beta.2

|

||||

|

||||

**文档**

|

||||

- 新增登录系统对接文档

|

||||

- 优化前端工程打包构建部分文档说明

|

||||

- FAQ补充KnowStreaming连接特定JMX IP的说明

|

||||

|

||||

|

||||

**Bug修复**

|

||||

- 修复logi_security_oplog表字段过短,导致删除Topic等操作无法记录的问题

|

||||

- 修复ES查询时,抛java.lang.NumberFormatException: For input string: "{"value":0,"relation":"eq"}" 问题

|

||||

- 修复LogStartOffset和LogEndOffset指标单位错误问题

|

||||

- 修复进行副本变更时,旧副本数为NULL的问题

|

||||

- 修复集群Group列表,在第二页搜索时,搜索时返回的分页信息错误问题

|

||||

- 修复重置Offset时,返回的错误信息提示不一致的问题

|

||||

- 修复集群查看,系统查看,LoadRebalance等页面权限点缺失问题

|

||||

- 修复查询不存在的Topic时,错误信息提示不明显的问题

|

||||

- 修复Windows用户打包前端工程报错的问题

|

||||

- package-lock.json锁定前端依赖版本号,修复因依赖自动升级导致打包失败等问题

|

||||

- 系统管理子应用,补充后端返回的Code码拦截,解决后端接口返回报错不展示的问题

|

||||

- 修复用户登出后,依旧可以访问系统的问题

|

||||

- 修复巡检任务配置时,数值显示错误的问题

|

||||

- 修复Broker/Topic Overview 图表和图表详情问题

|

||||

- 修复Job扩缩副本任务明细数据错误的问题

|

||||

- 修复重置Offset时,分区ID,Offset数值无限制问题

|

||||

- 修复扩缩/迁移副本时,无法选中Kafka系统Topic的问题

|

||||

- 修复Topic的Config页面,编辑表单时不能正确回显当前值的问题

|

||||

- 修复Broker Card返回数据后依旧展示加载态的问题

|

||||

|

||||

|

||||

|

||||

**体验优化**

|

||||

- 优化默认用户密码为 admin/admin

|

||||

- 缩短新增集群后,集群信息加载的耗时

|

||||

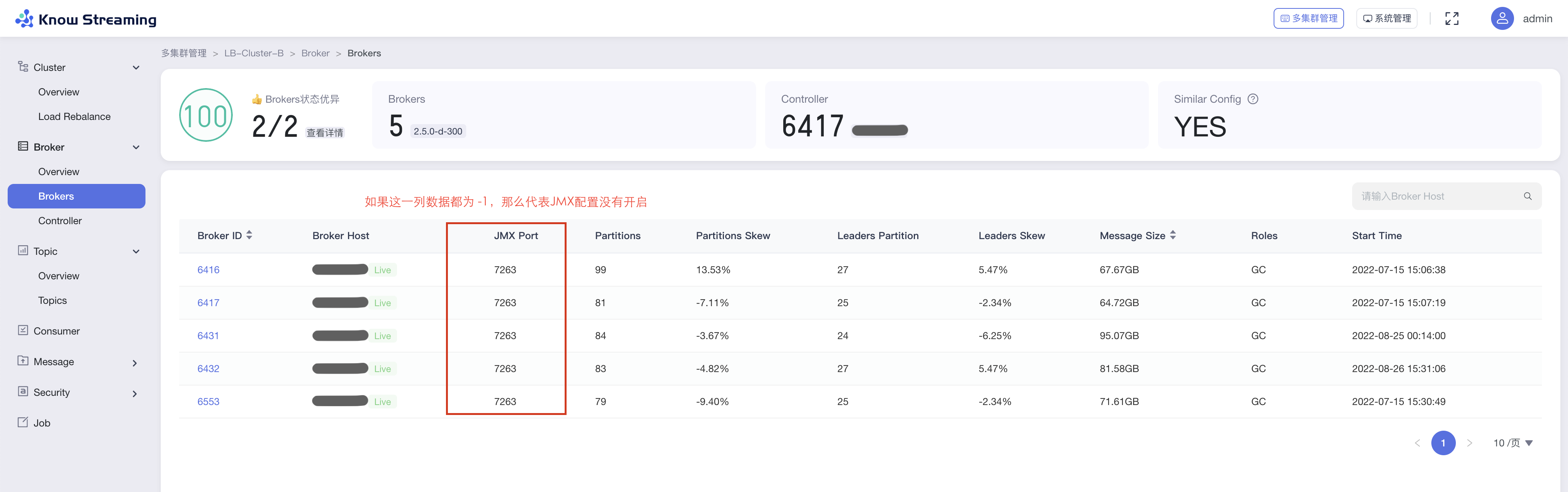

- 集群Broker列表,增加Controller角色信息

|

||||

- 副本变更任务结束后,增加进行优先副本选举的操作

|

||||

- Task模块任务分为Metrics、Common、Metadata三类任务,每类任务配备独立线程池,减少对Job模块的线程池,以及不同类任务之间的相互影响

|

||||

- 删除代码中存在的多余无用文件

|

||||

- 自动新增ES索引模版及近7天索引,减少用户搭建时需要做的事项

|

||||

- 优化前端工程打包流程

|

||||

- 优化登录页文案,页面左侧栏内容,单集群详情样式,Topic列表趋势图等

|

||||

- 首次进入Broker/Topic图表详情时,进行预缓存数据从而优化体验

|

||||

- 优化Topic详情Partition Tab的展示

|

||||

- 多集群列表页增加编辑功能

|

||||

- 优化副本变更时,迁移时间支持分钟级别粒度

|

||||

- logi-security版本升级至2.10.13

|

||||

- logi-elasticsearch-client版本升级至1.0.24

|

||||

|

||||

|

||||

**能力提升**

|

||||

- 支持Ldap登录认证

|

||||

|

||||

---

|

||||

|

||||

## v3.0.0-beta.1

|

||||

|

||||

**文档**

|

||||

- 新增Task模块说明文档

|

||||

- FAQ补充 `Specified key was too long; max key length is 767 bytes ` 错误说明

|

||||

- FAQ补充 `出现ESIndexNotFoundException报错` 错误说明

|

||||

|

||||

|

||||

**Bug修复**

|

||||

- 修复 Consumer 点击 Stop 未停止检索的问题

|

||||

- 修复创建/编辑角色权限报错问题

|

||||

- 修复多集群管理/单集群详情均衡卡片状态错误问题

|

||||

- 修复版本列表未排序问题

|

||||

- 修复Raft集群Controller信息不断记录问题

|

||||

- 修复部分版本消费组描述信息获取失败问题

|

||||

- 修复分区Offset获取失败的日志中,缺少Topic名称信息问题

|

||||

- 修复GitHub图地址错误,及图裂问题

|

||||

- 修复Broker默认使用的地址和注释不一致问题

|

||||

- 修复 Consumer 列表分页不生效问题

|

||||

- 修复操作记录表operation_methods字段缺少默认值问题

|

||||

- 修复集群均衡表中move_broker_list字段无效的问题

|

||||

- 修复KafkaUser、KafkaACL信息获取时,日志一直重复提示不支持问题

|

||||

- 修复指标缺失时,曲线出现掉底的问题

|

||||

|

||||

|

||||

**体验优化**

|

||||

- 优化前端构建时间和打包体积,增加依赖打包的分包策略

|

||||

- 优化产品样式和文案展示

|

||||

- 优化ES客户端数为可配置

|

||||

- 优化日志中大量出现的MySQL Key冲突日志

|

||||

|

||||

|

||||

**能力提升**

|

||||

- 增加周期任务,用于主动创建缺少的ES模版及索引的能力,减少额外的脚本操作

|

||||

- 增加JMX连接的Broker地址可选择的能力

|

||||

|

||||

---

|

||||

|

||||

## v3.0.0-beta.0

|

||||

|

||||

**1、多集群管理**

|

||||

|

||||

|

||||

@@ -9,7 +9,7 @@ error_exit ()

|

||||

[ ! -e "$JAVA_HOME/bin/java" ] && unset JAVA_HOME

|

||||

|

||||

if [ -z "$JAVA_HOME" ]; then

|

||||

if $darwin; then

|

||||

if [ "Darwin" = "$(uname -s)" ]; then

|

||||

|

||||

if [ -x '/usr/libexec/java_home' ] ; then

|

||||

export JAVA_HOME=`/usr/libexec/java_home`

|

||||

|

||||

Binary file not shown.

|

Before Width: | Height: | Size: 9.5 KiB |

Binary file not shown.

|

Before Width: | Height: | Size: 183 KiB |

Binary file not shown.

|

Before Width: | Height: | Size: 50 KiB |

Binary file not shown.

|

Before Width: | Height: | Size: 59 KiB |

264

docs/dev_guide/Task模块简介.md

Normal file

264

docs/dev_guide/Task模块简介.md

Normal file

@@ -0,0 +1,264 @@

|

||||

# Task模块简介

|

||||

|

||||

## 1、Task简介

|

||||

|

||||

在 KnowStreaming 中(下面简称KS),Task模块主要是用于执行一些周期任务,包括Cluster、Broker、Topic等指标的定时采集,集群元数据定时更新至DB,集群状态的健康巡检等。在KS中,与Task模块相关的代码,我们都统一存放在km-task模块中。

|

||||

|

||||

Task模块是基于 LogiCommon 中的Logi-Job组件实现的任务周期执行,Logi-Job 的功能类似 XXX-Job,它是 XXX-Job 在 KnowStreaming 的内嵌实现,主要用于简化 KnowStreaming 的部署。

|

||||

Logi-Job 的任务总共有两种执行模式,分别是:

|

||||

|

||||

+ 广播模式:同一KS集群下,同一任务周期中,所有KS主机都会执行该定时任务。

|

||||

+ 抢占模式:同一KS集群下,同一任务周期中,仅有某一台KS主机会执行该任务。

|

||||

|

||||

KS集群范围定义:连接同一个DB,且application.yml中的spring.logi-job.app-name的名称一样的KS主机为同一KS集群。

|

||||

|

||||

## 2、使用指南

|

||||

|

||||

Task模块基于Logi-Job的广播模式与抢占模式,分别实现了任务的抢占执行、重复执行以及均衡执行,他们之间的差别是:

|

||||

|

||||

+ 抢占执行:同一个KS集群,同一个任务执行周期中,仅有一台KS主机执行该任务;

|

||||

+ 重复执行:同一个KS集群,同一个任务执行周期中,所有KS主机都执行该任务。比如3台KS主机,3个Kafka集群,此时每台KS主机都会去采集这3个Kafka集群的指标;

|

||||

+ 均衡执行:同一个KS集群,同一个任务执行周期中,每台KS主机仅执行该任务的一部分,所有的KS主机共同协作完成了任务。比如3台KS主机,3个Kafka集群,稳定运行情况下,每台KS主机将仅采集1个Kafka集群的指标,3台KS主机共同完成3个Kafka集群指标的采集。

|

||||

|

||||

下面我们看一下具体例子。

|

||||

|

||||

### 2.1、抢占模式——抢占执行

|

||||

|

||||

功能说明:

|

||||

|

||||

+ 同一个KS集群,同一个任务执行周期中,仅有一台KS主机执行该任务。

|

||||

|

||||

代码例子:

|

||||

|

||||

```java

|

||||

// 1、实现Job接口,重写excute方法;

|

||||

// 2、在类上添加@Task注解,并且配置好信息,指定为随机抢占模式;

|

||||

// 效果:KS集群中,每5秒,会有一台KS主机输出 "测试定时任务运行中";

|

||||

@Task(name = "TestJob",

|

||||

description = "测试定时任务",

|

||||

cron = "*/5 * * * * ?",

|

||||

autoRegister = true,

|

||||

consensual = ConsensualEnum.RANDOM, // 这里一定要设置为RANDOM

|

||||

timeout = 6 * 60)

|

||||

public class TestJob implements Job {

|

||||

|

||||

@Override

|

||||

public TaskResult execute(JobContext jobContext) throws Exception {

|

||||

|

||||

System.out.println("测试定时任务运行中");

|

||||

return new TaskResult();

|

||||

|

||||

}

|

||||

|

||||

}

|

||||

```

|

||||

|

||||

|

||||

|

||||

### 2.2、广播模式——重复执行

|

||||

|

||||

功能说明:

|

||||

|

||||

+ 同一个KS集群,同一个任务执行周期中,所有KS主机都执行该任务。比如3台KS主机,3个Kafka集群,此时每台KS主机都会去重复采集这3个Kafka集群的指标。

|

||||

|

||||

代码例子:

|

||||

|

||||

```java

|

||||

// 1、实现Job接口,重写excute方法;

|

||||

// 2、在类上添加@Task注解,并且配置好信息,指定为广播抢占模式;

|

||||

// 效果:KS集群中,每5秒,每台KS主机都会输出 "测试定时任务运行中";

|

||||

@Task(name = "TestJob",

|

||||

description = "测试定时任务",

|

||||

cron = "*/5 * * * * ?",

|

||||

autoRegister = true,

|

||||

consensual = ConsensualEnum.BROADCAST, // 这里一定要设置为BROADCAST

|

||||

timeout = 6 * 60)

|

||||

public class TestJob implements Job {

|

||||

|

||||

@Override

|

||||

public TaskResult execute(JobContext jobContext) throws Exception {

|

||||

|

||||

System.out.println("测试定时任务运行中");

|

||||

return new TaskResult();

|

||||

|

||||

}

|

||||

|

||||

}

|

||||

```

|

||||

|

||||

|

||||

|

||||

### 2.3、广播模式——均衡执行

|

||||

|

||||

功能说明:

|

||||

|

||||

+ 同一个KS集群,同一个任务执行周期中,每台KS主机仅执行该任务的一部分,所有的KS主机共同协作完成了任务。比如3台KS主机,3个Kafka集群,稳定运行情况下,每台KS主机将仅采集1个Kafka集群的指标,3台KS主机共同完成3个Kafka集群指标的采集。

|

||||

|

||||

代码例子:

|

||||

|

||||

+ 该模式有点特殊,是KS基于Logi-Job的广播模式,做的一个扩展,以下为一个使用例子:

|

||||

|

||||

```java

|

||||

// 1、继承AbstractClusterPhyDispatchTask,实现processSubTask方法;

|

||||

// 2、在类上添加@Task注解,并且配置好信息,指定为广播模式;

|

||||

// 效果:在本样例中,每隔1分钟ks会将所有的kafka集群列表在ks集群主机内均衡拆分,每台主机会将分发到自身的Kafka集群依次执行processSubTask方法,实现KS集群的任务协同处理。

|

||||

@Task(name = "kmJobTask",

|

||||

description = "km job 模块调度执行任务",

|

||||

cron = "0 0/1 * * * ? *",

|

||||

autoRegister = true,

|

||||

consensual = ConsensualEnum.BROADCAST,

|

||||

timeout = 6 * 60)

|

||||

public class KMJobTask extends AbstractClusterPhyDispatchTask {

|

||||

|

||||

@Autowired

|

||||

private JobService jobService;

|

||||

|

||||

@Override

|

||||

protected TaskResult processSubTask(ClusterPhy clusterPhy, long triggerTimeUnitMs) throws Exception {

|

||||

jobService.scheduleJobByClusterId(clusterPhy.getId());

|

||||

return TaskResult.SUCCESS;

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

|

||||

|

||||

## 3、原理简介

|

||||

|

||||

### 3.1、Task注解说明

|

||||

|

||||

```java

|

||||

public @interface Task {

|

||||

String name() default ""; //任务名称

|

||||

String description() default ""; //任务描述

|

||||

String owner() default "system"; //拥有者

|

||||

String cron() default ""; //定时执行的时间策略

|

||||

int retryTimes() default 0; //失败以后所能重试的最大次数

|

||||

long timeout() default 0; //在超时时间里重试

|

||||

//是否自动注册任务到数据库中

|

||||

//如果设置为false,需要手动去数据库km_task表注册定时任务信息。数据库记录和@Task注解缺一不可

|

||||

boolean autoRegister() default false;

|

||||

//执行模式:广播、随机抢占

|

||||

//广播模式:同一集群下的所有服务器都会执行该定时任务

|

||||

//随机抢占模式:同一集群下随机一台服务器执行该任务

|

||||

ConsensualEnum consensual() default ConsensualEnum.RANDOM;

|

||||

}

|

||||

```

|

||||

|

||||

### 3.2、数据库表介绍

|

||||

|

||||

+ logi_task:记录项目中的定时任务信息,一个定时任务对应一条记录。

|

||||

+ logi_job:具体任务执行信息。

|

||||

+ logi_job_log:定时任务的执行日志。

|

||||

+ logi_worker:记录机器信息,实现集群控制。

|

||||

|

||||

### 3.3、均衡执行简介

|

||||

|

||||

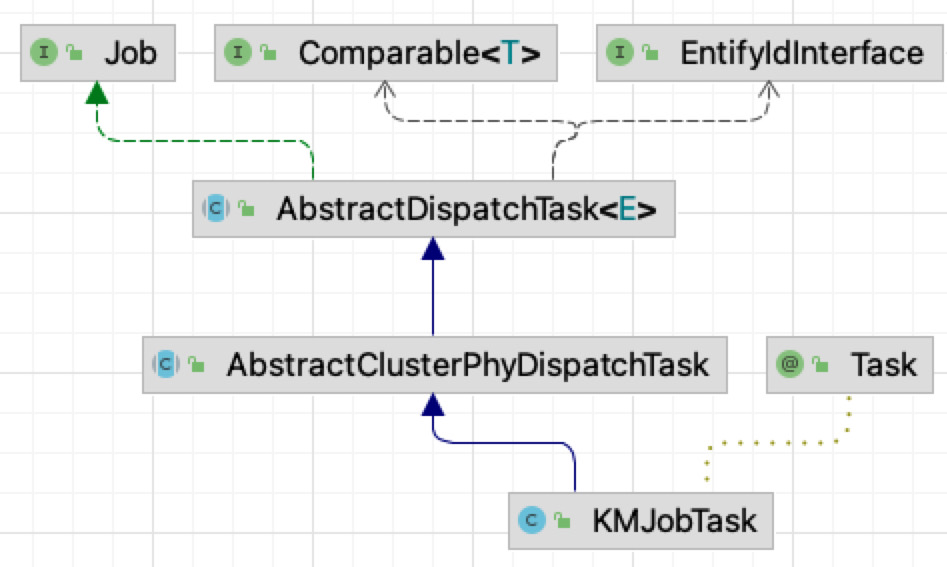

#### 3.3.1、类关系图

|

||||

|

||||

这里以KMJobTask为例,简单介绍KM中的定时任务实现逻辑。

|

||||

|

||||

|

||||

|

||||

+ Job:使用logi组件实现定时任务,必须实现该接口。

|

||||

+ Comparable & EntufyIdInterface:比较接口,实现任务的排序逻辑。

|

||||

+ AbstractDispatchTask:实现广播模式下,任务的均衡分发。

|

||||

+ AbstractClusterPhyDispatchTask:对分发到当前服务器的集群列表进行枚举。

|

||||

+ KMJobTask:实现对单个集群的定时任务处理。

|

||||

|

||||

#### 3.3.2、关键类代码

|

||||

|

||||

+ **AbstractDispatchTask类**

|

||||

|

||||

```java

|

||||

// 实现Job接口的抽象类,进行任务的负载均衡执行

|

||||

public abstract class AbstractDispatchTask<E extends Comparable & EntifyIdInterface> implements Job {

|

||||

|

||||

// 罗列所有的任务

|

||||

protected abstract List<E> listAllTasks();

|

||||

|

||||

// 执行被分配给该KS主机的任务

|

||||

protected abstract TaskResult processTask(List<E> subTaskList, long triggerTimeUnitMs);

|

||||

|

||||

// 被Logi-Job触发执行该方法

|

||||

// 该方法进行任务的分配

|

||||

@Override

|

||||

public TaskResult execute(JobContext jobContext) {

|

||||

try {

|

||||

|

||||

long triggerTimeUnitMs = System.currentTimeMillis();

|

||||

|

||||

// 获取所有的任务

|

||||

List<E> allTaskList = this.listAllTasks();

|

||||

|

||||

// 计算当前KS机器需要执行的任务

|

||||

List<E> subTaskList = this.selectTask(allTaskList, jobContext.getAllWorkerCodes(), jobContext.getCurrentWorkerCode());

|

||||

|

||||

// 进行任务处理

|

||||

return this.processTask(subTaskList, triggerTimeUnitMs);

|

||||

} catch (Exception e) {

|

||||

// ...

|

||||

}

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

+ **AbstractClusterPhyDispatchTask类**

|

||||

|

||||

```java

|

||||

// 继承AbstractDispatchTask的抽象类,对Kafka集群进行负载均衡执行

|

||||

public abstract class AbstractClusterPhyDispatchTask extends AbstractDispatchTask<ClusterPhy> {

|

||||

|

||||

// 执行被分配的任务,具体由子类实现

|

||||

protected abstract TaskResult processSubTask(ClusterPhy clusterPhy, long triggerTimeUnitMs) throws Exception;

|

||||

|

||||

// 返回所有的Kafka集群

|

||||

@Override

|

||||

public List<ClusterPhy> listAllTasks() {

|

||||

return clusterPhyService.listAllClusters();

|

||||

}

|

||||

|

||||

// 执行被分配给该KS主机的Kafka集群任务

|

||||

@Override

|

||||

public TaskResult processTask(List<ClusterPhy> subTaskList, long triggerTimeUnitMs) { // ... }

|

||||

|

||||

}

|

||||

```

|

||||

|

||||

+ **KMJobTask类**

|

||||

|

||||

```java

|

||||

// 加上@Task注解,并配置任务执行信息

|

||||

@Task(name = "kmJobTask",

|

||||

description = "km job 模块调度执行任务",

|

||||

cron = "0 0/1 * * * ? *",

|

||||

autoRegister = true,

|

||||

consensual = ConsensualEnum.BROADCAST,

|

||||

timeout = 6 * 60)

|

||||

// 继承AbstractClusterPhyDispatchTask类

|

||||

public class KMJobTask extends AbstractClusterPhyDispatchTask {

|

||||

|

||||

@Autowired

|

||||

private JobService jobService;

|

||||

|

||||

// 执行该Kafka集群的Job模块的任务

|

||||

@Override

|

||||

protected TaskResult processSubTask(ClusterPhy clusterPhy, long triggerTimeUnitMs) throws Exception {

|

||||

jobService.scheduleJobByClusterId(clusterPhy.getId());

|

||||

return TaskResult.SUCCESS;

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

#### 3.3.3、均衡执行总结

|

||||

|

||||

均衡执行的实现原理总结起来就是以下几点:

|

||||

|

||||

+ Logi-Job设置为广播模式,触发所有的KS主机执行任务;

|

||||

+ 每台KS主机,被触发执行后,按照统一的规则,对任务列表,KS集群主机列表进行排序。然后按照顺序将任务列表均衡的分配给排序后的KS集群主机。KS集群稳定运行情况下,这一步保证了每台KS主机之间分配到的任务列表不重复,不丢失。

|

||||

+ 最后每台KS主机,执行被分配到的任务。

|

||||

|

||||

## 4、注意事项

|

||||

|

||||

+ 不能100%保证任务在一个周期内,且仅且执行一次,可能出现重复执行或丢失的情况,所以必须严格是且仅且执行一次的任务,不建议基于Logi-Job进行任务控制。

|

||||

+ 尽量让Logi-Job仅负责任务的触发,后续的执行建议放到自己创建的线程池中进行。

|

||||

Binary file not shown.

|

Before Width: | Height: | Size: 600 KiB |

Binary file not shown.

|

Before Width: | Height: | Size: 228 KiB |

@@ -36,7 +36,7 @@ KS-KM 根据其需要纳管的 kafka 版本,按照上述三个维度构建了

|

||||

|

||||

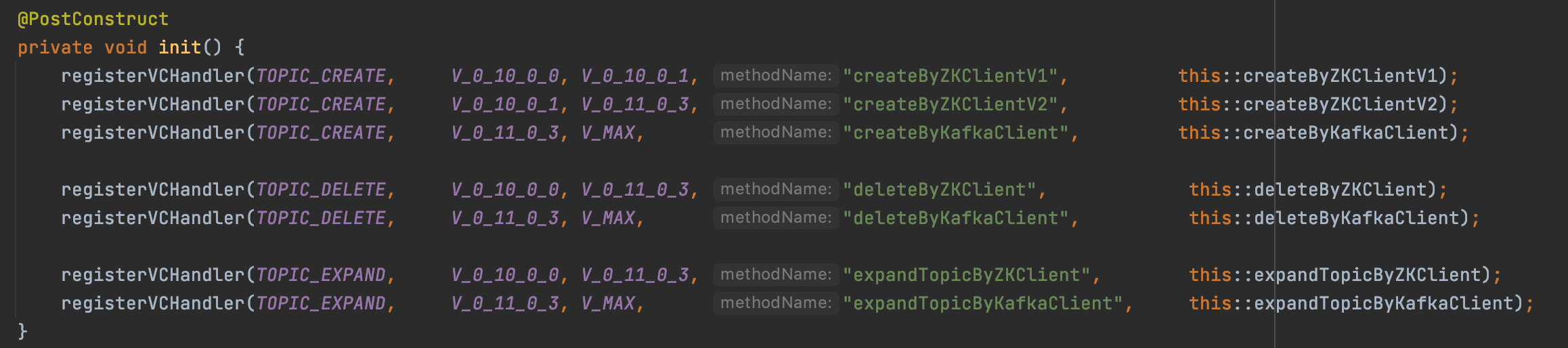

KS-KM 的每个版本针对需要纳管的 kafka 版本列表,事先分析各个版本的差异性和产品需求,同时 KS-KM 构建了一套专门处理兼容性的服务,来进行兼容性的注册、字典构建、处理器分发等操作,其中版本兼容性处理器是来具体处理不同 kafka 版本差异性的地方。

|

||||

|

||||

|

||||

|

||||

|

||||

如上图所示,KS-KM 的 topic 服务在面对不同 kafka 版本时,其 topic 的创建、删除、扩容由于 kafka 版本自身的差异,导致 KnowStreaming 的处理也不一样,所以需要根据不同的 kafka 版本来实现不同的兼容性处理器,同时向 KnowStreaming 的兼容服务进行兼容性的注册,构建兼容性字典,后续在 KnowStreaming 的运行过程中,针对不同的 kafka 版本即可分发到不同的处理器中执行。

|

||||

|

||||

|

||||

@@ -29,7 +29,7 @@

|

||||

- 初始化 MySQL 表及数据

|

||||

- 初始化 Elasticsearch 索引

|

||||

|

||||

具体见:[快速开始](./1-quick-start.md) 中的最后一步,部署 KnowStreaming 服务中的初始化相关工作。

|

||||

具体见:[单机部署手册](../install_guide/单机部署手册.md) 中的最后一步,部署 KnowStreaming 服务中的初始化相关工作。

|

||||

|

||||

### 6.1.4、本地启动

|

||||

|

||||

@@ -73,7 +73,7 @@ km-rest/src/main/java/com/xiaojukeji/know/streaming/km/rest/KnowStreaming.java

|

||||

IDEA 更多具体的配置如下图所示:

|

||||

|

||||

<p align="center">

|

||||

<img src="./assets/startup_using_source_code/IDEA配置.jpg" width = "512" height = "318" div align=center />

|

||||

<img src="http://img-ys011.didistatic.com/static/dc2img/do1_BW1RzgEMh4n6L4dL4ncl" width = "512" height = "318" div align=center />

|

||||

</p>

|

||||

|

||||

**第四步:启动项目**

|

||||

@@ -84,7 +84,7 @@ IDEA 更多具体的配置如下图所示:

|

||||

|

||||

`Know Streaming` 启动之后,可以访问一些信息,包括:

|

||||

|

||||

- 产品页面:http://localhost:8080 ,默认账号密码:`admin` / `admin2022_` 进行登录。

|

||||

- 产品页面:http://localhost:8080 ,默认账号密码:`admin` / `admin2022_` 进行登录。`v3.0.0-beta.2`版本开始,默认账号密码为`admin` / `admin`;

|

||||

- 接口地址:http://localhost:8080/swagger-ui.html 查看后端提供的相关接口。

|

||||

|

||||

更多信息,详见:[KnowStreaming 官网](https://knowstreaming.com/)

|

||||

199

docs/dev_guide/登录系统对接.md

Normal file

199

docs/dev_guide/登录系统对接.md

Normal file

@@ -0,0 +1,199 @@

|

||||

|

||||

|

||||

|

||||

|

||||

## 登录系统对接

|

||||

|

||||

[KnowStreaming](https://github.com/didi/KnowStreaming)(以下简称KS) 除了实现基于本地MySQL的用户登录认证方式外,还已经实现了基于Ldap的登录认证。

|

||||

|

||||

但是,登录认证系统并非仅此两种。因此,为了具有更好的拓展性,KS具有自定义登陆认证逻辑,快速对接已有系统的特性。

|

||||

|

||||

在KS中,我们将登陆认证相关的一些文件放在[km-extends](https://github.com/didi/KnowStreaming/tree/master/km-extends)模块下的[km-account](https://github.com/didi/KnowStreaming/tree/master/km-extends/km-account)模块里。

|

||||

|

||||

本文将介绍KS如何快速对接自有的用户登录认证系统。

|

||||

|

||||

### 对接步骤

|

||||

|

||||

- 创建一个登陆认证类,实现[LogiCommon](https://github.com/didi/LogiCommon)的LoginExtend接口;

|

||||

- 将[application.yml](https://github.com/didi/KnowStreaming/blob/master/km-rest/src/main/resources/application.yml)中的spring.logi-security.login-extend-bean-name字段改为登陆认证类的bean名称;

|

||||

|

||||

```Java

|

||||

//LoginExtend 接口

|

||||

public interface LoginExtend {

|

||||

|

||||

/**

|

||||

* 验证登录信息,同时记住登录状态

|

||||

*/

|

||||

UserBriefVO verifyLogin(AccountLoginDTO var1, HttpServletRequest var2, HttpServletResponse var3) throws LogiSecurityException;

|

||||

|

||||

/**

|

||||

* 登出接口,清楚登录状态

|

||||

*/

|

||||

Result<Boolean> logout(HttpServletRequest var1, HttpServletResponse var2);

|

||||

|

||||

/**

|

||||

* 检查是否已经登录

|

||||

*/

|

||||

boolean interceptorCheck(HttpServletRequest var1, HttpServletResponse var2, String var3, List<String> var4) throws IOException;

|

||||

|

||||

}

|

||||

|

||||

```

|

||||

|

||||

|

||||

|

||||

### 对接例子

|

||||

|

||||

我们以Ldap对接为例,说明KS如何对接登录认证系统。

|

||||

|

||||

+ 编写[LdapLoginServiceImpl](https://github.com/didi/KnowStreaming/blob/master/km-extends/km-account/src/main/java/com/xiaojukeji/know/streaming/km/account/login/ldap/LdapLoginServiceImpl.java)类,实现LoginExtend接口。

|

||||

+ 设置[application.yml](https://github.com/didi/KnowStreaming/blob/master/km-rest/src/main/resources/application.yml)中的spring.logi-security.login-extend-bean-name=ksLdapLoginService。

|

||||

|

||||

完成上述两步即可实现KS对接Ldap认证登陆。

|

||||

|

||||

```Java

|

||||

@Service("ksLdapLoginService")

|

||||

public class LdapLoginServiceImpl implements LoginExtend {

|

||||

|

||||

|

||||

@Override

|

||||

public UserBriefVO verifyLogin(AccountLoginDTO loginDTO,

|

||||

HttpServletRequest request,

|

||||

HttpServletResponse response) throws LogiSecurityException {

|

||||

String decodePasswd = AESUtils.decrypt(loginDTO.getPw());

|

||||

|

||||

// 去LDAP验证账密

|

||||

LdapPrincipal ldapAttrsInfo = ldapAuthentication.authenticate(loginDTO.getUserName(), decodePasswd);

|

||||

if (ldapAttrsInfo == null) {

|

||||

// 用户不存在,正常来说上如果有问题,上一步会直接抛出异常

|

||||

throw new LogiSecurityException(ResultCode.USER_NOT_EXISTS);

|

||||

}

|

||||

|

||||

// 进行业务相关操作

|

||||

|

||||

// 记录登录状态,Ldap因为无法记录登录状态,因此有KnowStreaming进行记录

|

||||

initLoginContext(request, response, loginDTO.getUserName(), user.getId());

|

||||

return CopyBeanUtil.copy(user, UserBriefVO.class);

|

||||

}

|

||||

|

||||

@Override

|

||||

public Result<Boolean> logout(HttpServletRequest request, HttpServletResponse response) {

|

||||

|

||||

//清理cookie和session

|

||||

|

||||

return Result.buildSucc(Boolean.TRUE);

|

||||

}

|

||||

|

||||

@Override

|

||||

public boolean interceptorCheck(HttpServletRequest request, HttpServletResponse response, String requestMappingValue, List<String> whiteMappingValues) throws IOException {

|

||||

|

||||

// 检查是否已经登录

|

||||

String userName = HttpRequestUtil.getOperator(request);

|

||||

if (StringUtils.isEmpty(userName)) {

|

||||

// 未登录,则进行登出

|

||||

logout(request, response);

|

||||

return Boolean.FALSE;

|

||||

}

|

||||

|

||||

return Boolean.TRUE;

|

||||

}

|

||||

}

|

||||

|

||||

```

|

||||

|

||||

|

||||

|

||||

### 实现原理

|

||||

|

||||

因为登陆和登出整体实现逻辑是一致的,所以我们以登陆逻辑为例进行介绍。

|

||||

|

||||

+ 登陆原理

|

||||

|

||||

登陆走的是[LogiCommon](https://github.com/didi/LogiCommon)自带的LoginController。

|

||||

|

||||

```java

|

||||

@RestController

|

||||

public class LoginController {

|

||||

|

||||

|

||||

//登陆接口

|

||||

@PostMapping({"/login"})

|

||||

public Result<UserBriefVO> login(HttpServletRequest request, HttpServletResponse response, @RequestBody AccountLoginDTO loginDTO) {

|

||||

try {

|

||||

//登陆认证

|

||||

UserBriefVO userBriefVO = this.loginService.verifyLogin(loginDTO, request, response);

|

||||

return Result.success(userBriefVO);

|

||||

|

||||

} catch (LogiSecurityException var5) {

|

||||

return Result.fail(var5);

|

||||

}

|

||||

}

|

||||

|

||||

}

|

||||

```

|

||||

|

||||

而登陆操作是调用LoginServiceImpl类来实现,但是具体由哪个登陆认证类来执行登陆操作却由loginExtendBeanTool来指定。

|

||||

|

||||

```java

|

||||

//LoginServiceImpl类

|

||||

@Service

|

||||

public class LoginServiceImpl implements LoginService {

|

||||

|

||||

//实现登陆操作,但是具体哪个登陆类由loginExtendBeanTool来管理

|

||||

public UserBriefVO verifyLogin(AccountLoginDTO loginDTO, HttpServletRequest request, HttpServletResponse response) throws LogiSecurityException {

|

||||

|

||||

return this.loginExtendBeanTool.getLoginExtendImpl().verifyLogin(loginDTO, request, response);

|

||||

}

|

||||

|

||||

|

||||

}

|

||||

```

|

||||

|

||||

而loginExtendBeanTool类会优先去查找用户指定的登陆认证类,如果失败则调用默认的登陆认证函数。

|

||||

|

||||

```java

|

||||

//LoginExtendBeanTool类

|

||||

@Component("logiSecurityLoginExtendBeanTool")

|

||||

public class LoginExtendBeanTool {

|

||||

|

||||

public LoginExtend getLoginExtendImpl() {

|

||||

LoginExtend loginExtend;

|

||||

//先调用用户指定登陆类,如果失败则调用系统默认登陆认证

|

||||

try {

|

||||

//调用的类由spring.logi-security.login-extend-bean-name指定

|

||||

loginExtend = this.getCustomLoginExtendImplBean();

|

||||

} catch (UnsupportedOperationException var3) {

|

||||

loginExtend = this.getDefaultLoginExtendImplBean();

|

||||

}

|

||||

|

||||

return loginExtend;

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

+ 认证原理

|

||||

|

||||

认证的实现则比较简单,向Spring中注册我们的拦截器PermissionInterceptor。

|

||||

|

||||

拦截器会调用LoginServiceImpl类的拦截方法,LoginServiceImpl后续处理逻辑就和前面登陆是一致的。

|

||||

|

||||

```java

|

||||

public class PermissionInterceptor implements HandlerInterceptor {

|

||||

|

||||

|

||||

/**

|

||||

* 拦截预处理

|

||||

* @return boolean false:拦截, 不向下执行, true:放行

|

||||

*/

|

||||

@Override

|

||||

public boolean preHandle(HttpServletRequest request, HttpServletResponse response, Object handler) throws Exception {

|

||||

|

||||

//免登录相关校验,如果验证通过,提前返回

|

||||

|

||||

//走拦截函数,进行普通用户验证

|

||||

return loginService.interceptorCheck(request, response, classRequestMappingValue, whiteMappingValues);

|

||||

}

|

||||

|

||||

}

|

||||

```

|

||||

|

||||

@@ -1,25 +1,20 @@

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

## JMX-连接失败问题解决

|

||||

|

||||

- [JMX-连接失败问题解决](#jmx-连接失败问题解决)

|

||||

- [1、问题&说明](#1问题说明)

|

||||

- [2、解决方法](#2解决方法)

|

||||

- [3、解决方法 —— 认证的JMX](#3解决方法--认证的jmx)

|

||||

|

||||

集群正常接入Logi-KafkaManager之后,即可以看到集群的Broker列表,此时如果查看不了Topic的实时流量,或者是Broker的实时流量信息时,那么大概率就是JMX连接的问题了。

|

||||

集群正常接入`KnowStreaming`之后,即可以看到集群的Broker列表,此时如果查看不了Topic的实时流量,或者是Broker的实时流量信息时,那么大概率就是`JMX`连接的问题了。

|

||||

|

||||

下面我们按照步骤来一步一步的检查。

|

||||

|

||||

### 1、问题&说明

|

||||

### 1、问题说明

|

||||

|

||||

**类型一:JMX配置未开启**

|

||||

|

||||

未开启时,直接到`2、解决方法`查看如何开启即可。

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

**类型二:配置错误**

|

||||

@@ -43,6 +38,26 @@ java.rmi.ConnectException: Connection refused to host: 192.168.0.1; nested excep

|

||||

java.rmi.ConnectException: Connection refused to host: 127.0.0.1;; nested exception is:

|

||||

```

|

||||

|

||||

**类型三:连接特定IP**

|

||||

|

||||

Broker 配置了内外网,而JMX在配置时,可能配置了内网IP或者外网IP,此时 `KnowStreaming` 需要连接到特定网络的IP才可以进行访问。

|

||||

|

||||

比如:

|

||||

|

||||

Broker在ZK的存储结构如下所示,我们期望连接到 `endpoints` 中标记为 `INTERNAL` 的地址,但是 `KnowStreaming` 却连接了 `EXTERNAL` 的地址,此时可以看 `4、解决方法 —— JMX连接特定网络` 进行解决。

|

||||

|

||||

```json

|

||||

{

|

||||

"listener_security_protocol_map": {"EXTERNAL":"SASL_PLAINTEXT","INTERNAL":"SASL_PLAINTEXT"},

|

||||

"endpoints": ["EXTERNAL://192.168.0.1:7092","INTERNAL://192.168.0.2:7093"],

|

||||

"jmx_port": 8099,

|

||||

"host": "192.168.0.1",

|

||||

"timestamp": "1627289710439",

|

||||

"port": -1,

|

||||

"version": 4

|

||||

}

|

||||

```

|

||||

|

||||

### 2、解决方法

|

||||

|

||||

这里仅介绍一下比较通用的解决方式,如若有更好的方式,欢迎大家指导告知一下。

|

||||

@@ -76,26 +91,36 @@ fi

|

||||

|

||||

如果您是直接看的这个部分,建议先看一下上一节:`2、解决方法`以确保`JMX`的配置没有问题了。

|

||||

|

||||

在JMX的配置等都没有问题的情况下,如果是因为认证的原因导致连接不了的,此时可以使用下面介绍的方法进行解决。

|

||||

在`JMX`的配置等都没有问题的情况下,如果是因为认证的原因导致连接不了的,可以在集群接入界面配置你的`JMX`认证信息。

|

||||

|

||||

**当前这块后端刚刚开发完成,可能还不够完善,有问题随时沟通。**

|

||||

<img src='http://img-ys011.didistatic.com/static/dc2img/do1_EUU352qMEX1Jdp7pxizp' width=350>

|

||||

|

||||

`Logi-KafkaManager 2.2.0+`之后的版本后端已经支持`JMX`认证方式的连接,但是还没有界面,此时我们可以往`cluster`表的`jmx_properties`字段写入`JMX`的认证信息。

|

||||

|

||||

这个数据是`json`格式的字符串,例子如下所示:

|

||||

|

||||

### 4、解决方法 —— JMX连接特定网络

|

||||

|

||||

可以手动往`ks_km_physical_cluster`表的`jmx_properties`字段增加一个`useWhichEndpoint`字段,从而控制 `KnowStreaming` 连接到特定的JMX IP及PORT。

|

||||

|

||||

`jmx_properties`格式:

|

||||

```json

|

||||

{

|

||||

"maxConn": 10, # KM对单台Broker的最大JMX连接数

|

||||

"username": "xxxxx", # 用户名

|

||||

"password": "xxxx", # 密码

|

||||

"maxConn": 100, # KM对单台Broker的最大JMX连接数

|

||||

"username": "xxxxx", # 用户名,可以不填写

|

||||

"password": "xxxx", # 密码,可以不填写

|

||||

"openSSL": true, # 开启SSL, true表示开启ssl, false表示关闭

|

||||

"useWhichEndpoint": "EXTERNAL" #指定要连接的网络名称,填写EXTERNAL就是连接endpoints里面的EXTERNAL地址

|

||||

}

|

||||

```

|

||||

|

||||

|

||||

|

||||

SQL的例子:

|

||||

SQL例子:

|

||||

```sql

|

||||

UPDATE cluster SET jmx_properties='{ "maxConn": 10, "username": "xxxxx", "password": "xxxx", "openSSL": false }' where id={xxx};

|

||||

```

|

||||

UPDATE ks_km_physical_cluster SET jmx_properties='{ "maxConn": 10, "username": "xxxxx", "password": "xxxx", "openSSL": false , "useWhichEndpoint": "xxx"}' where id={xxx};

|

||||

```

|

||||

|

||||

注意:

|

||||

|

||||

+ 目前此功能只支持采用 `ZK` 做分布式协调的kafka集群。

|

||||

|

||||

|

||||

@@ -6,9 +6,10 @@

|

||||

|

||||

### 2.1.1、安装说明

|

||||

|

||||

- 以 `v3.0.0-bete` 版本为例进行部署;

|

||||

- 以 `v3.0.0-beta.1` 版本为例进行部署;

|

||||

- 以 CentOS-7 为例,系统基础配置要求 4C-8G;

|

||||

- 部署完成后,可通过浏览器:`IP:PORT` 进行访问,默认端口是 `8080`,系统默认账号密码: `admin` / `admin2022_`;

|

||||

- 部署完成后,可通过浏览器:`IP:PORT` 进行访问,默认端口是 `8080`,系统默认账号密码: `admin` / `admin2022_`。

|

||||

- `v3.0.0-beta.2`版本开始,默认账号密码为`admin` / `admin`;

|

||||

- 本文为单机部署,如需分布式部署,[请联系我们](https://knowstreaming.com/support-center)

|

||||

|

||||

**软件依赖**

|

||||

@@ -19,7 +20,7 @@

|

||||

| ElasticSearch | v7.6+ | 8060 |

|

||||

| JDK | v8+ | - |

|

||||

| CentOS | v6+ | - |

|

||||

| Ubantu | v16+ | - |

|

||||

| Ubuntu | v16+ | - |

|

||||

|

||||

|

||||

|

||||

@@ -29,7 +30,7 @@

|

||||

|

||||

```bash

|

||||

# 在服务器中下载安装脚本, 该脚本中会在当前目录下,重新安装MySQL。重装后的mysql密码存放在当前目录的mysql.password文件中。

|

||||

wget https://s3-gzpu.didistatic.com/pub/knowstreaming/deploy_KnowStreaming.sh

|

||||

wget https://s3-gzpu.didistatic.com/pub/knowstreaming/deploy_KnowStreaming-3.0.0-beta.1.sh

|

||||

|

||||

# 执行脚本

|

||||

sh deploy_KnowStreaming.sh

|

||||

@@ -42,10 +43,10 @@ sh deploy_KnowStreaming.sh

|

||||

|

||||

```bash

|

||||

# 将安装包下载到本地且传输到目标服务器

|

||||

wget https://s3-gzpu.didistatic.com/pub/knowstreaming/KnowStreaming-3.0.0-beta—offline.tar.gz

|

||||

wget https://s3-gzpu.didistatic.com/pub/knowstreaming/KnowStreaming-3.0.0-beta.1-offline.tar.gz

|

||||

|

||||

# 解压安装包

|

||||

tar -zxf KnowStreaming-3.0.0-beta—offline.tar.gz

|

||||

tar -zxf KnowStreaming-3.0.0-beta.1-offline.tar.gz

|

||||

|

||||

# 执行安装脚本

|

||||

sh deploy_KnowStreaming-offline.sh

|

||||

@@ -58,28 +59,129 @@ sh deploy_KnowStreaming-offline.sh

|

||||

|

||||

### 2.1.3、容器部署

|

||||

|

||||

#### 2.1.3.1、Helm

|

||||

|

||||

**环境依赖**

|

||||

|

||||

- Kubernetes >= 1.14 ,Helm >= 2.17.0

|

||||

|

||||

- 默认配置为全部安装( ElasticSearch + MySQL + KnowStreaming)

|

||||

- 默认依赖全部安装,ElasticSearch(3 节点集群模式) + MySQL(单机) + KnowStreaming-manager + KnowStreaming-ui

|

||||

|

||||

- 如果使用已有的 ElasticSearch(7.6.x) 和 MySQL(5.7) 只需调整 values.yaml 部分参数即可

|

||||

- 使用已有的 ElasticSearch(7.6.x) 和 MySQL(5.7) 只需调整 values.yaml 部分参数即可

|

||||

|

||||

**安装命令**

|

||||

|

||||

```bash

|

||||

# 下载安装包

|

||||

wget https://s3-gzpu.didistatic.com/pub/knowstreaming/knowstreaming-3.0.0-hlem.tgz

|

||||

|

||||

# 解压安装包

|

||||

tar -zxf knowstreaming-3.0.0-hlem.tgz

|

||||

|

||||

# 执行命令(NAMESPACE需要更改为已存在的)

|

||||

helm install -n [NAMESPACE] knowstreaming knowstreaming-manager/

|

||||

# 相关镜像在Docker Hub都可以下载

|

||||

# 快速安装(NAMESPACE需要更改为已存在的,安装启动需要几分钟初始化请稍等~)

|

||||

helm install -n [NAMESPACE] [NAME] http://download.knowstreaming.com/charts/knowstreaming-manager-0.1.3.tgz

|

||||

|

||||

# 获取KnowStreaming前端ui的service. 默认nodeport方式.

|

||||

# (http://nodeIP:nodeport,默认用户名密码:admin/admin2022_)

|

||||

# `v3.0.0-beta.2`版本开始,默认账号密码为`admin` / `admin`;

|

||||

|

||||

# 添加仓库

|

||||

helm repo add knowstreaming http://download.knowstreaming.com/charts

|

||||

|

||||

# 拉取最新版本

|

||||

helm pull knowstreaming/knowstreaming-manager

|

||||

```

|

||||

|

||||

|

||||

|

||||

#### 2.1.3.2、Docker Compose

|

||||

```yml

|

||||

version: "3"

|

||||

|

||||

services:

|

||||

|

||||

knowstreaming-manager:

|

||||

image: knowstreaming/knowstreaming-manager:0.2.0-test

|

||||

container_name: knowstreaming-manager

|

||||

privileged: true

|

||||

restart: always

|

||||

depends_on:

|

||||

- elasticsearch-single

|

||||

- knowstreaming-mysql

|

||||

expose:

|

||||

- 80

|

||||

command:

|

||||

- /bin/sh

|

||||

- /ks-start.sh

|

||||

environment:

|

||||

TZ: Asia/Shanghai

|

||||

|

||||

SERVER_MYSQL_ADDRESS: knowstreaming-mysql:3306

|

||||

SERVER_MYSQL_DB: know_streaming

|

||||

SERVER_MYSQL_USER: root

|

||||

SERVER_MYSQL_PASSWORD: admin2022_

|

||||

|

||||

SERVER_ES_ADDRESS: elasticsearch-single:9200

|

||||

|

||||

JAVA_OPTS: -Xmx1g -Xms1g

|

||||

|

||||

# extra_hosts:

|

||||

# - "hostname:x.x.x.x"

|

||||

# volumes:

|

||||

# - /ks/manage/log:/logs

|

||||

knowstreaming-ui:

|

||||

image: knowstreaming/knowstreaming-ui:0.2.0-test1

|

||||

container_name: knowstreaming-ui

|

||||

restart: always

|

||||

ports:

|

||||

- '18092:80'

|

||||

environment:

|

||||

TZ: Asia/Shanghai

|

||||

depends_on:

|

||||

- knowstreaming-manager

|

||||

# extra_hosts:

|

||||

# - "hostname:x.x.x.x"

|

||||

|

||||

elasticsearch-single:

|

||||

image: docker.io/library/elasticsearch:7.6.2

|

||||

container_name: elasticsearch-single

|

||||

restart: always

|

||||

expose:

|

||||

- 9200

|

||||

- 9300

|

||||

# ports:

|

||||

# - '9200:9200'

|

||||

# - '9300:9300'

|

||||

environment:

|

||||

TZ: Asia/Shanghai

|

||||

ES_JAVA_OPTS: -Xms512m -Xmx512m

|

||||

discovery.type: single-node

|

||||

# volumes:

|

||||

# - /ks/es/data:/usr/share/elasticsearch/data

|

||||

|

||||

knowstreaming-init:

|

||||

image: knowstreaming/knowstreaming-manager:0.2.0-test

|

||||

container_name: knowstreaming_init

|

||||

depends_on:

|

||||

- elasticsearch-single

|

||||

command:

|

||||

- /bin/bash

|

||||

- /es_template_create.sh

|

||||

environment:

|

||||

TZ: Asia/Shanghai

|

||||

SERVER_ES_ADDRESS: elasticsearch-single:9200

|

||||

|

||||

|

||||

knowstreaming-mysql:

|

||||

image: knowstreaming/knowstreaming-mysql:0.2.0-test

|

||||

container_name: knowstreaming-mysql

|

||||

restart: always

|

||||

environment:

|

||||

TZ: Asia/Shanghai

|

||||

MYSQL_ROOT_PASSWORD: admin2022_

|

||||

MYSQL_DATABASE: know_streaming

|

||||

MYSQL_ROOT_HOST: '%'

|

||||

expose:

|

||||

- 3306

|

||||

# ports:

|

||||

# - '3306:3306'

|

||||

# volumes:

|

||||

# - /ks/mysql/data:/data/mysql

|

||||

```

|

||||

|

||||

|

||||

@@ -219,10 +321,10 @@ sh /data/elasticsearch/control.sh status

|

||||

|

||||

```bash

|

||||

# 下载安装包

|

||||

wget https://s3-gzpu.didistatic.com/pub/knowstreaming/KnowStreaming-3.0.0-beta.tar.gz

|

||||

wget https://s3-gzpu.didistatic.com/pub/knowstreaming/KnowStreaming-3.0.0-beta.1.tar.gz

|

||||

|

||||

# 解压安装包到指定目录

|

||||

tar -zxf KnowStreaming-3.0.0-beta.tar.gz -C /data/

|

||||

tar -zxf KnowStreaming-3.0.0-beta.1.tar.gz -C /data/

|

||||

|

||||

# 修改启动脚本并加入systemd管理

|

||||

cd /data/KnowStreaming/

|

||||

@@ -236,7 +338,7 @@ mysql -uroot -pDidi_km_678 know_streaming < ./init/sql/dml-ks-km.sql

|

||||

mysql -uroot -pDidi_km_678 know_streaming < ./init/sql/dml-logi.sql

|

||||

|

||||

# 创建elasticsearch初始化数据

|

||||

sh ./init/template/template.sh

|

||||

sh ./bin/init_es_template.sh

|

||||

|

||||

# 修改配置文件

|

||||

vim ./conf/application.yml

|

||||

|

||||

@@ -1,6 +1,4 @@

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

# `Know Streaming` 源码编译打包手册

|

||||

|

||||

@@ -11,7 +9,7 @@

|

||||

`windows7+`、`Linux`、`Mac`

|

||||

|

||||

**环境依赖**

|

||||

|

||||

|

||||

- Maven 3.6.3 (后端)

|

||||

- Node v12.20.0/v14.17.3 (前端)

|

||||

- Java 8+ (后端)

|

||||

@@ -25,27 +23,23 @@

|

||||

|

||||

具体见下面描述。

|

||||

|

||||

|

||||

|

||||

### 2.1、前后端合并打包

|

||||

|

||||

1. 下载源码;

|

||||

2. 进入 `KS-KM` 工程目录,执行 `mvn -Prelease-package -Dmaven.test.skip=true clean install -U` 命令;

|

||||

3. 打包命令执行完成后,会在 `km-dist/target` 目录下面生成一个 `KnowStreaming-*.tar.gz` 的安装包。

|

||||

|

||||

|

||||

### 2.2、前端单独打包

|

||||

### 2.2、前端单独打包

|

||||

|

||||

1. 下载源码;

|

||||

2. 进入 `KS-KM/km-console` 工程目录;

|

||||

3. 执行 `npm run build`命令,会在 `KS-KM/km-console` 目录下生成一个名为 `pub` 的前端静态资源包;

|

||||

2. 跳转到 [前端打包构建文档](https://github.com/didi/KnowStreaming/blob/master/km-console/README.md) 按步骤进行。打包成功后,会在 `km-rest/src/main/resources` 目录下生成名为 `templates` 的前端静态资源包;

|

||||

3. 如果上一步过程中报错,请查看 [FAQ](https://github.com/didi/KnowStreaming/blob/master/docs/user_guide/faq.md) 第 8.10 条;

|

||||

|

||||

|

||||

|

||||

### 2.3、后端单独打包

|

||||

### 2.3、后端单独打包

|

||||

|

||||

1. 下载源码;

|

||||

2. 修改顶层 `pom.xml` ,去掉其中的 `km-console` 模块,如下所示;

|

||||

|

||||

```xml

|

||||

<modules>

|

||||

<!-- <module>km-console</module>-->

|

||||

@@ -62,10 +56,7 @@

|

||||

<module>km-rest</module>

|

||||

<module>km-dist</module>

|

||||

</modules>

|

||||

```

|

||||

```

|

||||

|

||||

3. 执行 `mvn -U clean package -Dmaven.test.skip=true`命令;

|

||||

4. 执行完成之后会在 `KS-KM/km-rest/target` 目录下面生成一个 `ks-km.jar` 即为KS的后端部署的Jar包,也可以执行 `mvn -Prelease-package -Dmaven.test.skip=true clean install -U` 生成的tar包也仅有后端服务的功能;

|

||||

|

||||

|

||||

|

||||

|

||||

4. 执行完成之后会在 `KS-KM/km-rest/target` 目录下面生成一个 `ks-km.jar` 即为 KS 的后端部署的 Jar 包,也可以执行 `mvn -Prelease-package -Dmaven.test.skip=true clean install -U` 生成的 tar 包也仅有后端服务的功能;

|

||||

|

||||

@@ -1,6 +1,102 @@

|

||||

## 6.2、版本升级手册

|

||||

|

||||

**`2.x`版本 升级至 `3.0.0`版本**

|

||||

注意:如果想升级至具体版本,需要将你当前版本至你期望使用版本的变更统统执行一遍,然后才能正常使用。

|

||||

|

||||

### 6.2.0、升级至 `master` 版本

|

||||

|

||||

暂无

|

||||

|

||||

### 6.2.1、升级至 `v3.0.0-beta.2`版本

|

||||

|

||||

**配置变更**

|

||||

|

||||

```yaml

|

||||

|

||||

# 新增配置

|

||||

spring:

|

||||

logi-security: # know-streaming 依赖的 logi-security 模块的数据库的配置,默认与 know-streaming 的数据库配置保持一致即可

|

||||

login-extend-bean-name: logiSecurityDefaultLoginExtendImpl # 使用的登录系统Service的Bean名称,无需修改

|

||||

|

||||

# 线程池大小相关配置,在task模块中,新增了三类线程池,

|

||||

# 从而减少不同类型任务之间的相互影响,以及减少对logi-job内的线程池的影响

|

||||

thread-pool:

|

||||

task: # 任务模块的配置

|

||||

metrics: # metrics采集任务配置

|

||||

thread-num: 18 # metrics采集任务线程池核心线程数

|

||||

queue-size: 180 # metrics采集任务线程池队列大小

|

||||

metadata: # metadata同步任务配置

|

||||

thread-num: 27 # metadata同步任务线程池核心线程数

|

||||

queue-size: 270 # metadata同步任务线程池队列大小

|

||||

common: # 剩余其他任务配置

|

||||

thread-num: 15 # 剩余其他任务线程池核心线程数

|

||||

queue-size: 150 # 剩余其他任务线程池队列大小

|

||||

|

||||

# 删除配置,下列配置将不再使用

|

||||

thread-pool:

|

||||

task: # 任务模块的配置

|

||||

heaven: # 采集任务配置

|

||||

thread-num: 20 # 采集任务线程池核心线程数

|

||||

queue-size: 1000 # 采集任务线程池队列大小

|

||||

|

||||

```

|

||||

|

||||

**SQL 变更**

|

||||

|

||||

```sql

|

||||

-- 多集群管理权限2022-09-06新增

|

||||

INSERT INTO `logi_security_permission` (`id`, `permission_name`, `parent_id`, `leaf`, `level`, `description`, `is_delete`, `app_name`) VALUES ('2000', '多集群管理查看', '1593', '1', '2', '多集群管理查看', '0', 'know-streaming');

|

||||

INSERT INTO `logi_security_permission` (`id`, `permission_name`, `parent_id`, `leaf`, `level`, `description`, `is_delete`, `app_name`) VALUES ('2002', 'Topic-迁移副本', '1593', '1', '2', 'Topic-迁移副本', '0', 'know-streaming');

|

||||

INSERT INTO `logi_security_permission` (`id`, `permission_name`, `parent_id`, `leaf`, `level`, `description`, `is_delete`, `app_name`) VALUES ('2004', 'Topic-扩缩副本', '1593', '1', '2', 'Topic-扩缩副本', '0', 'know-streaming');

|

||||

INSERT INTO `logi_security_permission` (`id`, `permission_name`, `parent_id`, `leaf`, `level`, `description`, `is_delete`, `app_name`) VALUES ('2006', 'Cluster-LoadReBalance-周期均衡', '1593', '1', '2', 'Cluster-LoadReBalance-周期均衡', '0', 'know-streaming');

|

||||

INSERT INTO `logi_security_permission` (`id`, `permission_name`, `parent_id`, `leaf`, `level`, `description`, `is_delete`, `app_name`) VALUES ('2008', 'Cluster-LoadReBalance-立即均衡', '1593', '1', '2', 'Cluster-LoadReBalance-立即均衡', '0', 'know-streaming');

|

||||

INSERT INTO `logi_security_permission` (`id`, `permission_name`, `parent_id`, `leaf`, `level`, `description`, `is_delete`, `app_name`) VALUES ('2010', 'Cluster-LoadReBalance-设置集群规格', '1593', '1', '2', 'Cluster-LoadReBalance-设置集群规格', '0', 'know-streaming');

|

||||

|

||||

|

||||

-- 系统管理权限2022-09-06新增

|

||||

INSERT INTO `logi_security_permission` (`id`, `permission_name`, `parent_id`, `leaf`, `level`, `description`, `is_delete`, `app_name`) VALUES ('3000', '系统管理查看', '1595', '1', '2', '系统管理查看', '0', 'know-streaming');

|

||||

|

||||

|

||||

INSERT INTO `logi_security_role_permission` (`role_id`, `permission_id`, `is_delete`, `app_name`) VALUES ('1677', '2000', '0', 'know-streaming');

|

||||

INSERT INTO `logi_security_role_permission` (`role_id`, `permission_id`, `is_delete`, `app_name`) VALUES ('1677', '2002', '0', 'know-streaming');

|

||||

INSERT INTO `logi_security_role_permission` (`role_id`, `permission_id`, `is_delete`, `app_name`) VALUES ('1677', '2004', '0', 'know-streaming');

|

||||

INSERT INTO `logi_security_role_permission` (`role_id`, `permission_id`, `is_delete`, `app_name`) VALUES ('1677', '2006', '0', 'know-streaming');

|

||||

INSERT INTO `logi_security_role_permission` (`role_id`, `permission_id`, `is_delete`, `app_name`) VALUES ('1677', '2008', '0', 'know-streaming');

|

||||

INSERT INTO `logi_security_role_permission` (`role_id`, `permission_id`, `is_delete`, `app_name`) VALUES ('1677', '2010', '0', 'know-streaming');

|

||||

INSERT INTO `logi_security_role_permission` (`role_id`, `permission_id`, `is_delete`, `app_name`) VALUES ('1677', '3000', '0', 'know-streaming');

|

||||

|

||||

-- 修改字段长度

|

||||

ALTER TABLE `logi_security_oplog`

|

||||

CHANGE COLUMN `operator_ip` `operator_ip` VARCHAR(64) NOT NULL COMMENT '操作者ip' ,

|

||||

CHANGE COLUMN `operator` `operator` VARCHAR(64) NULL DEFAULT NULL COMMENT '操作者账号' ,

|

||||

CHANGE COLUMN `operate_page` `operate_page` VARCHAR(64) NOT NULL DEFAULT '' COMMENT '操作页面' ,

|

||||

CHANGE COLUMN `operate_type` `operate_type` VARCHAR(64) NOT NULL COMMENT '操作类型' ,

|

||||

CHANGE COLUMN `target_type` `target_type` VARCHAR(64) NOT NULL COMMENT '对象分类' ,

|

||||

CHANGE COLUMN `target` `target` VARCHAR(1024) NOT NULL COMMENT '操作对象' ,

|

||||

CHANGE COLUMN `operation_methods` `operation_methods` VARCHAR(64) NOT NULL DEFAULT '' COMMENT '操作方式' ;

|

||||

```

|

||||

|

||||

---

|

||||

|

||||

### 6.2.2、升级至 `v3.0.0-beta.1`版本

|

||||

|

||||

**SQL 变更**

|

||||

|

||||

1、在`ks_km_broker`表增加了一个监听信息字段。

|

||||

2、为`logi_security_oplog`表 operation_methods 字段设置默认值''。

|

||||

因此需要执行下面的 sql 对数据库表进行更新。

|

||||

|

||||

```sql

|

||||

ALTER TABLE `ks_km_broker`

|

||||

ADD COLUMN `endpoint_map` VARCHAR(1024) NOT NULL DEFAULT '' COMMENT '监听信息' AFTER `update_time`;

|

||||

|

||||

ALTER TABLE `logi_security_oplog`

|

||||

ALTER COLUMN `operation_methods` set default '';

|

||||

|

||||

```

|

||||

|

||||

---

|

||||

|

||||

### 6.2.3、`2.x`版本 升级至 `v3.0.0-beta.0`版本

|

||||

|

||||

**升级步骤:**

|

||||

|

||||

@@ -24,14 +120,14 @@

|

||||

UPDATE ks_km_topic

|

||||

INNER JOIN

|

||||

(SELECT

|

||||

topic.cluster_id AS cluster_id,

|

||||

topic.topic_name AS topic_name,

|

||||

topic.description AS description

|

||||

topic.cluster_id AS cluster_id,

|

||||

topic.topic_name AS topic_name,

|

||||

topic.description AS description

|

||||

FROM topic WHERE description != ''

|

||||

) AS t

|

||||

|

||||

ON ks_km_topic.cluster_phy_id = t.cluster_id

|

||||

AND ks_km_topic.topic_name = t.topic_name

|

||||

AND ks_km_topic.id > 0

|

||||

SET ks_km_topic.description = t.description;

|

||||

ON ks_km_topic.cluster_phy_id = t.cluster_id

|

||||

AND ks_km_topic.topic_name = t.topic_name

|

||||

AND ks_km_topic.id > 0

|

||||

SET ks_km_topic.description = t.description;

|

||||

```

|

||||

@@ -1,5 +1,4 @@

|

||||

|

||||

# FAQ

|

||||

# FAQ

|

||||

|

||||

## 8.1、支持哪些 Kafka 版本?

|

||||

|

||||

@@ -109,3 +108,77 @@ SECURITY.TRICK_USERS

|

||||

设置完成上面两步之后,就可以直接调用需要登录的接口了。

|

||||

|

||||

但是还有一点需要注意,绕过的用户仅能调用他有权限的接口,比如一个普通用户,那么他就只能调用普通的接口,不能去调用运维人员的接口。

|

||||

|

||||

## 8.8、Specified key was too long; max key length is 767 bytes

|

||||

|

||||

**原因:** 不同版本的 InoDB 引擎,参数‘innodb_large_prefix’默认值不同,即在 5.6 默认值为 OFF,5.7 默认值为 ON。

|

||||

|

||||

对于引擎为 InnoDB,innodb_large_prefix=OFF,且行格式为 Antelope 即支持 REDUNDANT 或 COMPACT 时,索引键前缀长度最大为 767 字节。innodb_large_prefix=ON,且行格式为 Barracuda 即支持 DYNAMIC 或 COMPRESSED 时,索引键前缀长度最大为 3072 字节。

|

||||

|

||||

**解决方案:**

|

||||

|

||||

- 减少 varchar 字符大小低于 767/4=191。

|

||||

- 将字符集改为 latin1(一个字符=一个字节)。

|

||||

- 开启‘innodb_large_prefix’,修改默认行格式‘innodb_file_format’为 Barracuda,并设置 row_format=dynamic。

|

||||

|

||||

## 8.9、出现 ESIndexNotFoundEXception 报错

|

||||

|

||||

**原因 :**没有创建 ES 索引模版

|

||||

|

||||

**解决方案:**执行 init_es_template.sh 脚本,创建 ES 索引模版即可。

|

||||

|

||||

## 8.10、km-console 打包构建失败

|

||||

|

||||

首先,**请确保您正在使用最新版本**,版本列表见 [Tags](https://github.com/didi/KnowStreaming/tags)。如果不是最新版本,请升级后再尝试有无问题。

|

||||

|

||||

常见的原因是由于工程依赖没有正常安装,导致在打包过程中缺少依赖,造成打包失败。您可以检查是否有以下文件夹,且文件夹内是否有内容

|

||||

|

||||

```

|

||||

KnowStreaming/km-console/node_modules

|

||||

KnowStreaming/km-console/packages/layout-clusters-fe/node_modules

|

||||

KnowStreaming/km-console/packages/config-manager-fe/node_modules

|

||||

```

|

||||

|

||||

如果发现没有对应的 `node_modules` 目录或着目录内容为空,说明依赖没有安装成功。请按以下步骤操作,

|

||||

|

||||

1. 手动删除上述三个文件夹(如果有)

|

||||

|

||||

2. 如果之前是通过 `mvn install` 打包 `km-console`,请到项目根目录(KnowStreaming)下重新输入该指令进行打包。观察打包过程有无报错。如有报错,请见步骤 4。

|

||||

|

||||

3. 如果是通过本地独立构建前端工程的方式(指直接执行 `npm run build`),请进入 `KnowStreaming/km-console` 目录,执行下述步骤(注意:执行时请确保您在使用 `node v12` 版本)

|

||||

|

||||

a. 执行 `npm run i`。如有报错,请见步骤 4。

|

||||

|

||||

b. 执行 `npm run build`。如有报错,请见步骤 4。

|

||||

|

||||

4. 麻烦联系我们协助解决。推荐提供以下信息,方面我们快速定位问题,示例如下。

|

||||

|

||||

```

|

||||

操作系统: Mac

|

||||

命令行终端:bash

|

||||

Node 版本: v12.22.12

|

||||

复现步骤: 1. -> 2.

|

||||

错误截图:

|

||||

```

|

||||

|

||||

## 8.11、在 `km-console` 目录下执行 `npm run start` 时看不到应用构建和热加载过程?如何启动单个应用?

|

||||

|

||||

需要到具体的应用中执行 `npm run start`,例如 `cd packages/layout-clusters-fe` 后,执行 `npm run start`。

|

||||

|

||||

应用启动后需要到基座应用中查看(需要启动基座应用,即 layout-clusters-fe)。

|

||||

|

||||

|

||||

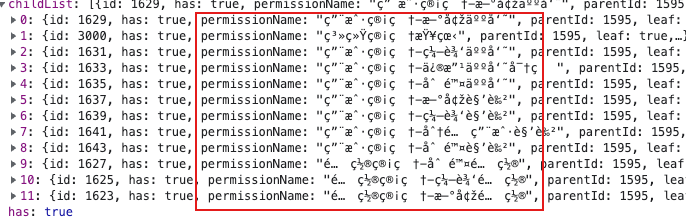

## 8.12、权限识别失败问题

|

||||

1、使用admin账号登陆KnowStreaming时,点击系统管理-用户管理-角色管理-新增角色,查看页面是否正常。

|

||||

|

||||

<img src="http://img-ys011.didistatic.com/static/dc2img/do1_gwGfjN9N92UxzHU8dfzr" width = "400" >

|

||||

|

||||

2、查看'/logi-security/api/v1/permission/tree'接口返回值,出现如下图所示乱码现象。

|

||||

|

||||

|

||||

3、查看logi_security_permission表,看看是否出现了中文乱码现象。

|

||||

|

||||

根据以上几点,我们可以确定是由于数据库乱码造成的权限识别失败问题。

|

||||

|

||||

+ 原因:由于数据库编码和我们提供的脚本不一致,数据库里的数据发生了乱码,因此出现权限识别失败问题。

|

||||

+ 解决方案:清空数据库数据,将数据库字符集调整为utf8,最后重新执行[dml-logi.sql](https://github.com/didi/KnowStreaming/blob/master/km-dist/init/sql/dml-logi.sql)脚本导入数据即可。

|

||||

|

||||

@@ -11,7 +11,7 @@

|

||||

|

||||

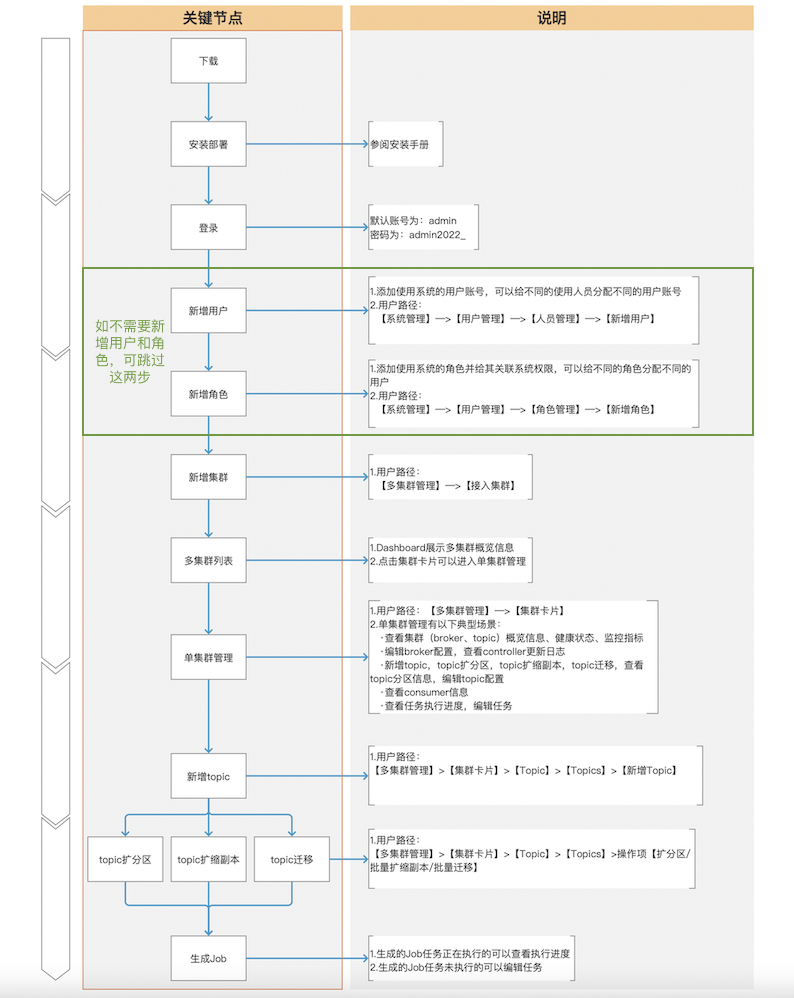

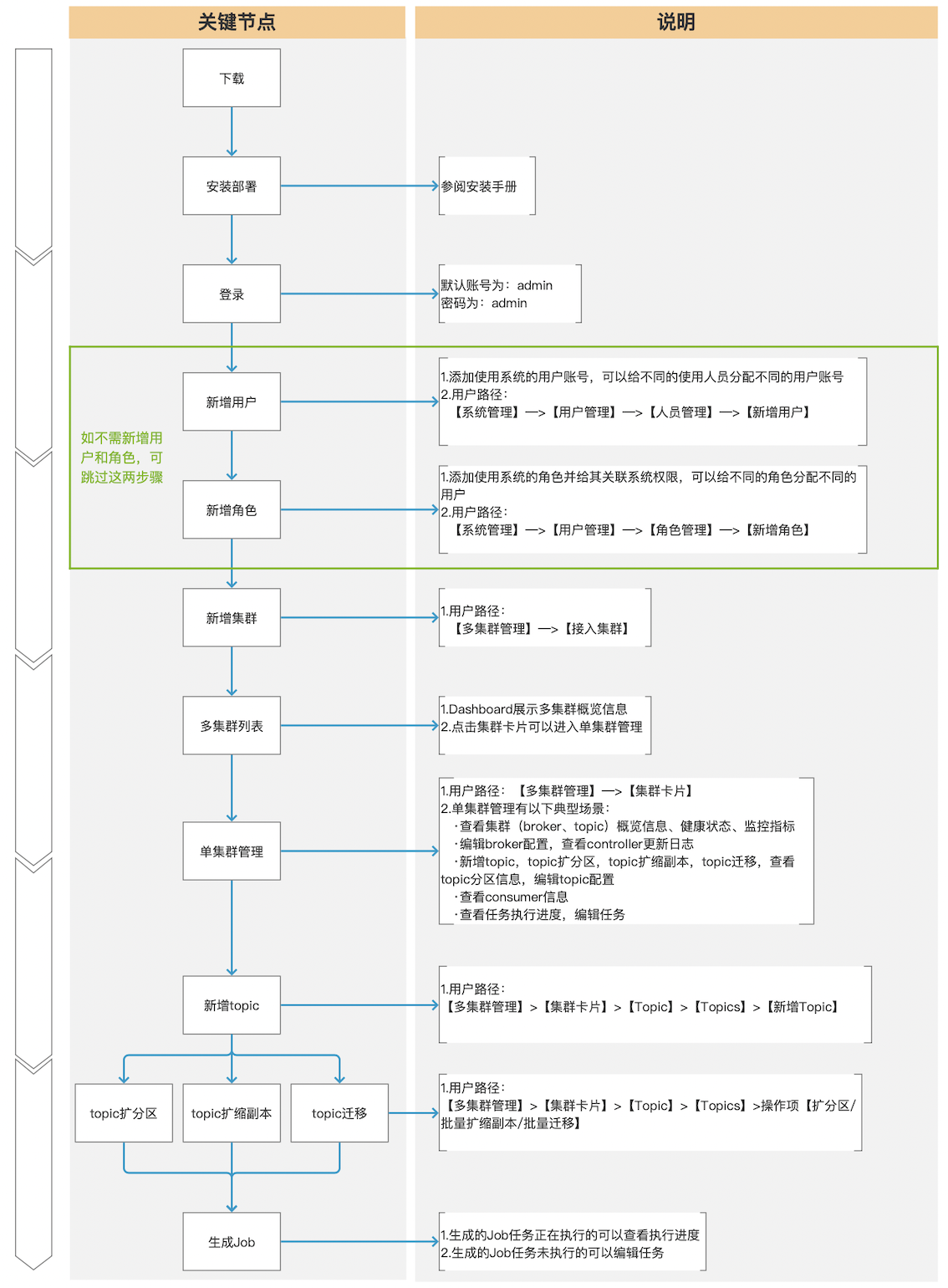

下面是用户第一次使用我们产品的典型体验路径:

|

||||

|

||||

|

||||

|

||||

|

||||

## 5.3、常用功能

|

||||

|

||||

|

||||

@@ -14,6 +14,7 @@ import com.xiaojukeji.know.streaming.km.common.bean.entity.topic.Topic;

|

||||

import com.xiaojukeji.know.streaming.km.common.bean.vo.cluster.res.ClusterBrokersOverviewVO;

|

||||

import com.xiaojukeji.know.streaming.km.common.bean.vo.cluster.res.ClusterBrokersStateVO;

|

||||

import com.xiaojukeji.know.streaming.km.common.bean.vo.kafkacontroller.KafkaControllerVO;

|

||||

import com.xiaojukeji.know.streaming.km.common.constant.KafkaConstant;

|

||||

import com.xiaojukeji.know.streaming.km.common.enums.SortTypeEnum;

|

||||

import com.xiaojukeji.know.streaming.km.common.utils.PaginationMetricsUtil;

|

||||

import com.xiaojukeji.know.streaming.km.common.utils.PaginationUtil;

|

||||

@@ -71,6 +72,9 @@ public class ClusterBrokersManagerImpl implements ClusterBrokersManager {

|

||||

Topic groupTopic = topicService.getTopic(clusterPhyId, org.apache.kafka.common.internals.Topic.GROUP_METADATA_TOPIC_NAME);

|

||||

Topic transactionTopic = topicService.getTopic(clusterPhyId, org.apache.kafka.common.internals.Topic.TRANSACTION_STATE_TOPIC_NAME);

|

||||

|

||||

//获取controller信息

|

||||

KafkaController kafkaController = kafkaControllerService.getKafkaControllerFromDB(clusterPhyId);

|

||||

|

||||

// 格式转换

|

||||

return PaginationResult.buildSuc(

|

||||

this.convert2ClusterBrokersOverviewVOList(

|

||||

@@ -78,7 +82,8 @@ public class ClusterBrokersManagerImpl implements ClusterBrokersManager {

|

||||

brokerList,

|

||||

metricsResult.getData(),

|

||||

groupTopic,

|

||||

transactionTopic

|

||||

transactionTopic,

|

||||

kafkaController

|

||||

),

|

||||

paginationResult

|

||||

);

|

||||

@@ -159,7 +164,8 @@ public class ClusterBrokersManagerImpl implements ClusterBrokersManager {

|

||||

List<Broker> brokerList,

|

||||

List<BrokerMetrics> metricsList,

|

||||

Topic groupTopic,

|

||||

Topic transactionTopic) {

|

||||

Topic transactionTopic,

|

||||

KafkaController kafkaController) {

|

||||

Map<Integer, BrokerMetrics> metricsMap = metricsList == null? new HashMap<>(): metricsList.stream().collect(Collectors.toMap(BrokerMetrics::getBrokerId, Function.identity()));

|

||||

|

||||

Map<Integer, Broker> brokerMap = brokerList == null? new HashMap<>(): brokerList.stream().collect(Collectors.toMap(Broker::getBrokerId, Function.identity()));

|

||||

@@ -169,12 +175,12 @@ public class ClusterBrokersManagerImpl implements ClusterBrokersManager {

|

||||

Broker broker = brokerMap.get(brokerId);

|

||||

BrokerMetrics brokerMetrics = metricsMap.get(brokerId);

|

||||

|

||||

voList.add(this.convert2ClusterBrokersOverviewVO(brokerId, broker, brokerMetrics, groupTopic, transactionTopic));

|

||||

voList.add(this.convert2ClusterBrokersOverviewVO(brokerId, broker, brokerMetrics, groupTopic, transactionTopic, kafkaController));

|

||||

}

|

||||

return voList;

|

||||

}

|

||||

|

||||

private ClusterBrokersOverviewVO convert2ClusterBrokersOverviewVO(Integer brokerId, Broker broker, BrokerMetrics brokerMetrics, Topic groupTopic, Topic transactionTopic) {

|

||||

private ClusterBrokersOverviewVO convert2ClusterBrokersOverviewVO(Integer brokerId, Broker broker, BrokerMetrics brokerMetrics, Topic groupTopic, Topic transactionTopic, KafkaController kafkaController) {

|

||||

ClusterBrokersOverviewVO clusterBrokersOverviewVO = new ClusterBrokersOverviewVO();

|

||||

clusterBrokersOverviewVO.setBrokerId(brokerId);

|

||||

if (broker != null) {

|

||||

@@ -192,6 +198,9 @@ public class ClusterBrokersManagerImpl implements ClusterBrokersManager {

|

||||

if (transactionTopic != null && transactionTopic.getBrokerIdSet().contains(brokerId)) {

|

||||

clusterBrokersOverviewVO.getKafkaRoleList().add(transactionTopic.getTopicName());

|

||||

}

|

||||

if (kafkaController != null && kafkaController.getBrokerId().equals(brokerId)) {

|

||||

clusterBrokersOverviewVO.getKafkaRoleList().add(KafkaConstant.CONTROLLER_ROLE);

|

||||

}

|

||||

|

||||

clusterBrokersOverviewVO.setLatestMetrics(brokerMetrics);

|

||||

return clusterBrokersOverviewVO;

|

||||

|

||||

@@ -19,7 +19,8 @@ import com.xiaojukeji.know.streaming.km.common.bean.vo.group.GroupTopicConsumedD

|

||||

import com.xiaojukeji.know.streaming.km.common.bean.vo.group.GroupTopicOverviewVO;

|

||||

import com.xiaojukeji.know.streaming.km.common.constant.MsgConstant;

|

||||