Compare commits

3 Commits

| Author | SHA1 | Date | |
|---|---|---|---|
| | 66e3da5d2f | | |
| | 5f2adfe74e | | |
| | cecfde906a | | |

43

.github/workflows/ci_build.yml

vendored

@@ -1,43 +0,0 @@

name: KnowStreaming Build

on:
  push:
    branches: [ "*" ]
  pull_request:
    branches: [ "*" ]

jobs:
  build:

    runs-on: ubuntu-latest

    steps:
      - uses: actions/checkout@v3

      - name: Set up JDK 11
        uses: actions/setup-java@v3
        with:
          java-version: '11'
          distribution: 'temurin'
          cache: maven

      - name: Setup Node
        uses: actions/setup-node@v1
        with:
          node-version: '12.22.12'

      - name: Build With Maven
        run: mvn -Prelease-package -Dmaven.test.skip=true clean install -U

      - name: Get KnowStreaming Version
        if: ${{ success() }}
        run: |
          version=`mvn -Dexec.executable='echo' -Dexec.args='${project.version}' --non-recursive exec:exec -q`
          echo "VERSION=${version}" >> $GITHUB_ENV

      - name: Upload Binary Package
        if: ${{ success() }}
        uses: actions/upload-artifact@v3
        with:
          name: KnowStreaming-${{ env.VERSION }}.tar.gz
          path: km-dist/target/KnowStreaming-${{ env.VERSION }}.tar.gz
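The "Get KnowStreaming Version" step in the removed workflow hands the Maven project version to the later upload step by appending a `VERSION=...` line to the file named by `$GITHUB_ENV`. A minimal offline sketch of that hand-off follows. It assumes a temporary file standing in for the runner's `$GITHUB_ENV` and a hard-coded, hypothetical version `3.4.0` in place of the `mvn ... exec:exec -q` call:

```shell
# Stand-in for the file the Actions runner provides as $GITHUB_ENV (assumption: a temp file).
GITHUB_ENV="$(mktemp)"

# Hypothetical version; in the workflow this value comes from the mvn exec:exec call.
version="3.4.0"

# Same line the workflow step writes.
echo "VERSION=${version}" >> "$GITHUB_ENV"

# The runner would expose VERSION to later steps as env.VERSION; here we read it back by hand.
VERSION="$(grep '^VERSION=' "$GITHUB_ENV" | cut -d= -f2)"
artifact="KnowStreaming-${VERSION}.tar.gz"
echo "$artifact"
```

The variable only becomes visible to steps that run after the one that wrote it, which is why the version lookup and the artifact upload are separate steps in the workflow.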

@@ -1,74 +0,0 @@

# Contributor Covenant Code of Conduct

## Our Pledge

In the interest of fostering an open and welcoming environment, we as
contributors and maintainers pledge to making participation in our project, and
our community a harassment-free experience for everyone, regardless of age, body
size, disability, ethnicity, gender identity and expression, level of experience,
education, socio-economic status, nationality, personal appearance, race,
religion, or sexual identity and orientation.

## Our Standards

Examples of behavior that contributes to creating a positive environment
include:

* Using welcoming and inclusive language
* Being respectful of differing viewpoints and experiences
* Gracefully accepting constructive criticism
* Focusing on what is best for the community
* Showing empathy towards other community members

Examples of unacceptable behavior by participants include:

* The use of sexualized language or imagery and unwelcome sexual attention or
  advances
* Trolling, insulting/derogatory comments, and personal or political attacks
* Public or private harassment
* Publishing others' private information, such as a physical or electronic
  address, without explicit permission
* Other conduct which could reasonably be considered inappropriate in a
  professional setting

## Our Responsibilities

Project maintainers are responsible for clarifying the standards of acceptable
behavior and are expected to take appropriate and fair corrective action in
response to any instances of unacceptable behavior.

Project maintainers have the right and responsibility to remove, edit, or
reject comments, commits, code, wiki edits, issues, and other contributions
that are not aligned to this Code of Conduct, or to ban temporarily or
permanently any contributor for other behaviors that they deem inappropriate,
threatening, offensive, or harmful.

## Scope

This Code of Conduct applies both within project spaces and in public spaces
when an individual is representing the project or its community. Examples of
representing a project or community include using an official project e-mail
address, posting via an official social media account, or acting as an appointed
representative at an online or offline event. Representation of a project may be
further defined and clarified by project maintainers.

## Enforcement

Instances of abusive, harassing, or otherwise unacceptable behavior may be
reported by contacting the project team at https://knowstreaming.com/support-center . All
complaints will be reviewed and investigated and will result in a response that
is deemed necessary and appropriate to the circumstances. The project team is
obligated to maintain confidentiality with regard to the reporter of an incident.
Further details of specific enforcement policies may be posted separately.

Project maintainers who do not follow or enforce the Code of Conduct in good
faith may face temporary or permanent repercussions as determined by other
members of the project's leadership.

## Attribution

This Code of Conduct is adapted from the [Contributor Covenant][homepage], version 1.4,
available at https://www.contributor-covenant.org/version/1/4/code-of-conduct.html

[homepage]: https://www.contributor-covenant.org

150

CONTRIBUTING.md

@@ -1,150 +0,0 @@

# Contributing to KnowStreaming

Welcome 👏🏻 to KnowStreaming! This document is a guide on how to contribute to KnowStreaming.

If you find anything incorrect or missing, please leave your comments or suggestions.

## Code of Conduct

Please be sure to read and follow our [Code of Conduct](./CODE_OF_CONDUCT.md).

## Contributing

**KnowStreaming** welcomes new participants in any role, including **User**, **Contributor**, **Committer**, and **PMC**.

We encourage newcomers to join the **KnowStreaming** project actively and to progress from User to Contributor, Committer, and even PMC.

To do so, newcomers need to contribute actively to the **KnowStreaming** project. The following describes how to contribute to **KnowStreaming**.

### Creating/Opening an Issue

If you find a typo in the documentation, **find a bug** in the code, want a **new feature**, or want to **make a suggestion**, you can [create an Issue](https://github.com/didi/KnowStreaming/issues/new/choose) on GitHub to report it.

If you want to contribute directly, you can pick an issue with one of the labels below.

- [contribution welcome](https://github.com/didi/KnowStreaming/labels/contribution%20welcome): issues that badly need to be fixed or implemented
- [good first issue](https://github.com/didi/KnowStreaming/labels/good%20first%20issue): friendly to newcomers, who can use these issues to practice and warm up.

<font color=red ><b> Please note that every PR must be associated with a valid issue. Otherwise, the PR will be rejected.</b></font>

### Getting started

**Branches**

We use the `dev` branch as the development branch, which means it is an unstable branch.

In addition, our branching model follows [https://nvie.com/posts/a-successful-git-branching-model/](https://nvie.com/posts/a-successful-git-branching-model/). We strongly recommend that newcomers read that article before creating a PR.

**Contribution workflow**

For ease of description, we define two terms here:

The repository you forked is your personal repository; we call it the **forked repository**.

The project you forked from is called the **source repository**.

Now, if you are ready to create a PR, here is the contributor workflow:

1. Fork the [KnowStreaming](https://github.com/didi/KnowStreaming) project to your own repository
2. Pull from `dev` in the source repository and create your own local branch, for example `dev`
3. Modify the code on your local branch
4. Rebase onto the development branch and resolve conflicts
5. Commit and push your changes to your own **forked repository**
6. Create a Pull Request against the `dev` branch of the **source repository**.
7. Wait for a reply. If the reply is slow, feel free to nudge us relentlessly.
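The workflow steps above can be sketched with plain git commands. This is only an illustrative sketch, not the project's official procedure: two local bare repositories stand in for the source repository and your fork so that it runs offline, and the branch name `fix-typo`, the remote name `upstream`, and the commit identity are hypothetical. In real use the remotes would be https://github.com/didi/KnowStreaming and your own fork of it.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Local stand-ins for the source repository and the forked repository (assumption).
work="$(mktemp -d)"
git init --quiet --bare "$work/source.git"
git init --quiet --bare "$work/fork.git"

# Helper: commit with a throwaway identity (hypothetical name and e-mail).
commit() { git -c user.name="Demo" -c user.email="demo@example.com" commit --quiet "$@"; }

# Seed the source repository with a `dev` branch so there is something to branch from.
git clone --quiet "$work/source.git" "$work/seed" 2>/dev/null
cd "$work/seed"
git symbolic-ref HEAD refs/heads/dev
echo "v1" > README.md
git add README.md
commit -m "Initial commit"
git push --quiet origin dev

# Steps 1-2: clone the fork, register the source repository as `upstream`,
# and create a local branch from its `dev` branch.
git clone --quiet "$work/fork.git" "$work/local" 2>/dev/null
cd "$work/local"
git remote add upstream "$work/source.git"
git fetch --quiet upstream
git checkout --quiet -b fix-typo upstream/dev

# Step 3: change the code on the local branch and commit it.
echo "fix" >> README.md
commit -am "Fix typo in README"

# Step 4: rebase onto the latest upstream dev; conflicts would be resolved at this point.
git fetch --quiet upstream
git -c user.name="Demo" -c user.email="demo@example.com" rebase --quiet upstream/dev

# Step 5: push the branch to the forked repository.
git push --quiet origin fix-typo

# Step 6 (opening the Pull Request against the source repository's dev branch) happens in the web UI.
pushed_branch="$(git ls-remote --heads origin fix-typo | awk '{print $2}')"
echo "$pushed_branch"
```

Committing before the rebase keeps the working tree clean, which `git rebase` requires. The list above names the rebase first, so treat the ordering here as one workable reading of it.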

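For a more detailed contribution workflow, see [Contribution workflow](./docs/contributer_guide/贡献流程.md)

When creating a Pull Request:

1. Please follow the PR [template](./.github/PULL_REQUEST_TEMPLATE.md)
2. Please make sure the PR has a corresponding issue.
3. If your PR contains large changes, such as a component refactor or a new component, please write detailed documentation on its design and usage (in the corresponding issue).
4. Keep each PR from getting too large. If many changes are needed, it is better to split them into several separate PRs.
5. Before the PR is merged, keep the final commit messages as clear and concise as possible, and squash repeated revisions into a single commit wherever possible.
6. After the PR is created, one or more reviewers will be assigned to it.

<font color=red><b>If your PR contains large changes, such as a component refactor or a new component, please write detailed documentation on its design and usage.</b></font>

# Code Review Guidelines

Committers take turns reviewing code to ensure that at least one Committer has reviewed each change before it is merged.

Some principles:

- Readability: important code should be well documented. APIs should have Javadoc. Code style should be consistent with the existing style.
- Elegance: new functions, classes, or components should be well designed.
- Testability: unit test cases should cover 80% of the new code.
- Maintainability: comply with our coding conventions.

# Developers

## Becoming a Contributor

Anyone who has successfully submitted a PR and had it merged is a Contributor.

For the list of contributors, see [Contributor list](./docs/contributer_guide/开发者名单.md)

## Working toward becoming a Committer

Generally, contribute 8 significant patches and have at least three different people review them (you need the support of 3 Committers).

Then ask someone to nominate you. You need to demonstrate your:

1. At least 8 significant PRs and the related issues in the project
2. Ability to work with the team
3. Understanding of the project's codebase and coding style
4. Ability to write good code

A current Committer can nominate you through an Issue labeled `nomination` in KnowStreaming, providing:

1. Your first and last name
2. A link to your Git profile
3. An explanation of why you should become a Committer
4. Details of 3 PRs, and their related issues, that the nominator worked on with you and that demonstrate your ability.

Two other Committers need to support your **nomination**. If nobody objects within 5 working days, you become a Committer. If anyone objects or wants more information, the Committers will discuss it and usually reach a consensus (within 5 working days).

# Open-Source Reward Program

We warmly welcome developers to contribute to the KnowStreaming open-source project, and in return we offer contributors rewards as recognition and thanks.

## Ways to contribute

1. Take an active part in Issue discussions, for example by answering questions, offering ideas, or reporting bugs you cannot resolve (Issue)
2. Write and improve the project documentation (Wiki)
3. Submit patches that improve the code (Coding)

## What you get

1. A place on the displayed list of KnowStreaming open-source contributors
2. A KnowStreaming open-source contributor certificate (paper & electronic)
3. A KnowStreaming contributor gift pack (KnowStreaming/DiDi merchandise)

## Rules

- Contributors and Committers both receive a corresponding certificate and gift pack
- Every quarter, the KnowStreaming project team selects outstanding contributors and awards them certificates.
- An annual selection is held at the end of the year

For the list of contributors, see [Contributor list](./docs/contributer_guide/开发者名单.md)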

BIN

KS-PRD-3.0-beta1.docx

Normal file

BIN

KS-PRD-3.0-beta2.docx

Normal file

BIN

KS-PRD-3.1-ZK.docx

Normal file

BIN

KS-PRD-3.2-Connect.docx

Normal file

BIN

KS-PRD-3.3-MM2.docx

Normal file

161

README.md

@@ -1,161 +0,0 @@

|

|||||||

|

|

||||||

<p align="center">

|

|

||||||

<img src="https://user-images.githubusercontent.com/71620349/185368586-aed82d30-1534-453d-86ff-ecfa9d0f35bd.png" width = "256" div align=center />

|

|

||||||

|

|

||||||

</p>

|

|

||||||

|

|

||||||

<p align="center">

|

|

||||||

<a href="https://knowstreaming.com">产品官网</a> |

|

|

||||||

<a href="https://github.com/didi/KnowStreaming/releases">下载地址</a> |

|

|

||||||

<a href="https://doc.knowstreaming.com/product">文档资源</a> |

|

|

||||||

<a href="https://demo.knowstreaming.com">体验环境</a>

|

|

||||||

</p>

|

|

||||||

|

|

||||||

<p align="center">

|

|

||||||

<!--最近一次提交时间-->

|

|

||||||

<a href="https://img.shields.io/github/last-commit/didi/KnowStreaming">

|

|

||||||

<img src="https://img.shields.io/github/last-commit/didi/KnowStreaming" alt="LastCommit">

|

|

||||||

</a>

|

|

||||||

|

|

||||||

<!--最新版本-->

|

|

||||||

<a href="https://github.com/didi/KnowStreaming/blob/master/LICENSE">

|

|

||||||

<img src="https://img.shields.io/github/v/release/didi/KnowStreaming" alt="License">

|

|

||||||

</a>

|

|

||||||

|

|

||||||

<!--License信息-->

|

|

||||||

<a href="https://github.com/didi/KnowStreaming/blob/master/LICENSE">

|

|

||||||

<img src="https://img.shields.io/github/license/didi/KnowStreaming" alt="License">

|

|

||||||

</a>

|

|

||||||

|

|

||||||

<!--Open-Issue-->

|

|

||||||

<a href="https://github.com/didi/KnowStreaming/issues">

|

|

||||||

<img src="https://img.shields.io/github/issues-raw/didi/KnowStreaming" alt="Issues">

|

|

||||||

</a>

|

|

||||||

|

|

||||||

<!--知识星球-->

|

|

||||||

<a href="https://z.didi.cn/5gSF9">

|

|

||||||

<img src="https://img.shields.io/badge/join-%E7%9F%A5%E8%AF%86%E6%98%9F%E7%90%83-red" alt="Slack">

|

|

||||||

</a>

|

|

||||||

|

|

||||||

</p>

|

|

||||||

|

|

||||||

|

|

||||||

---

|

|

||||||

|

|

||||||

|

|

||||||

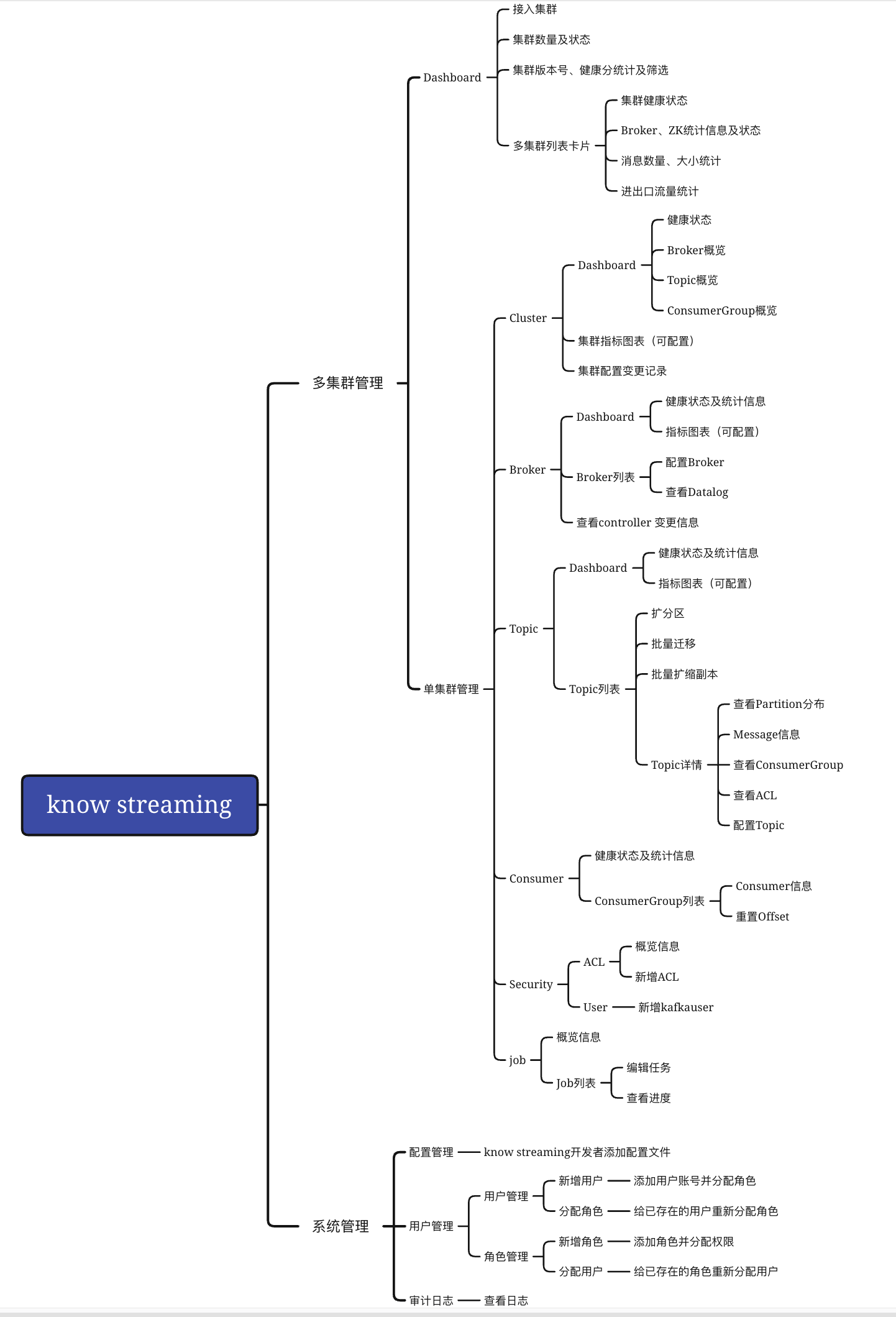

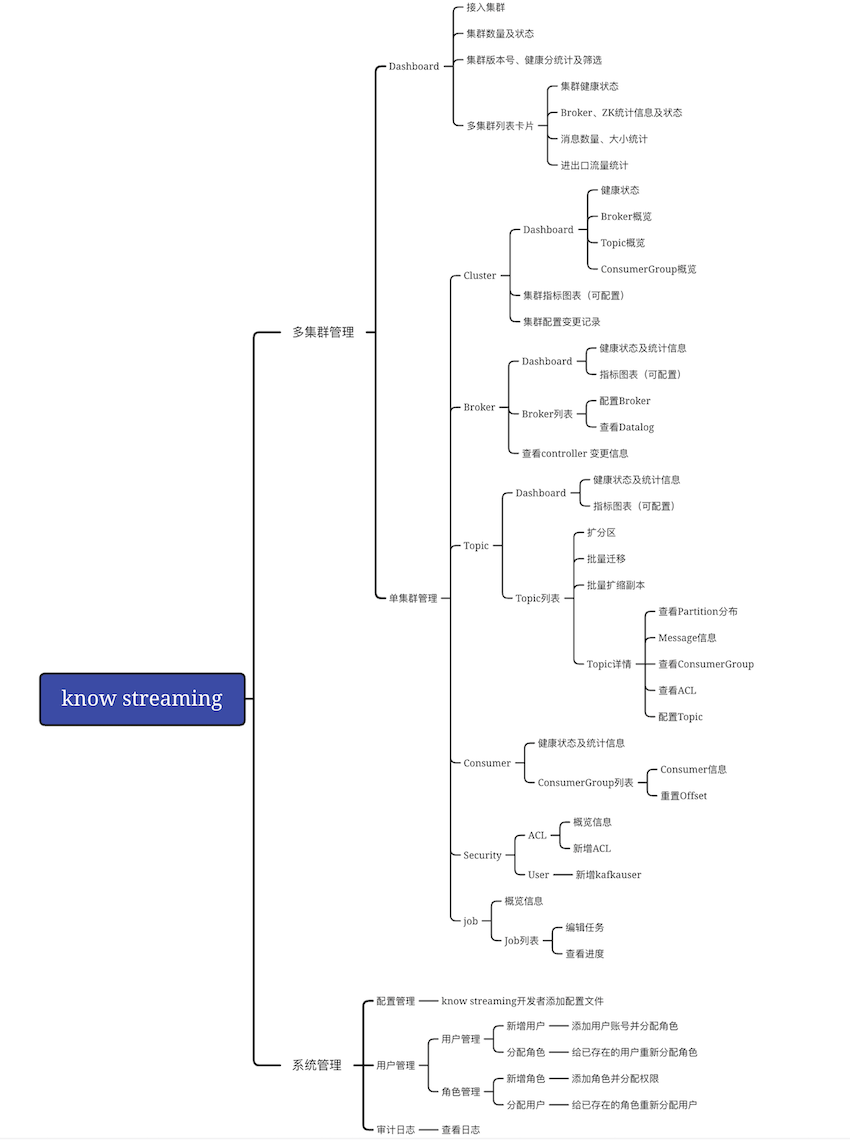

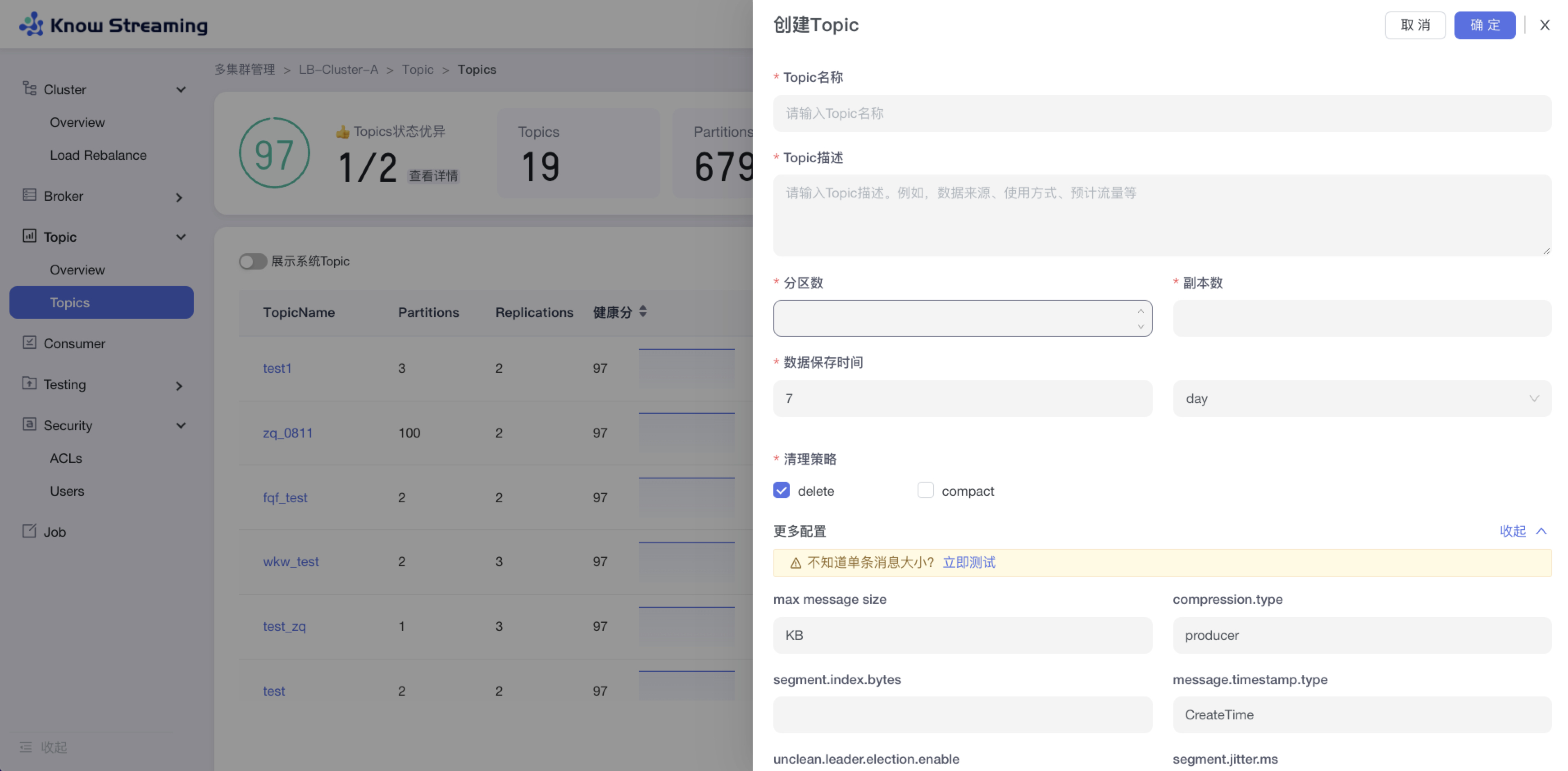

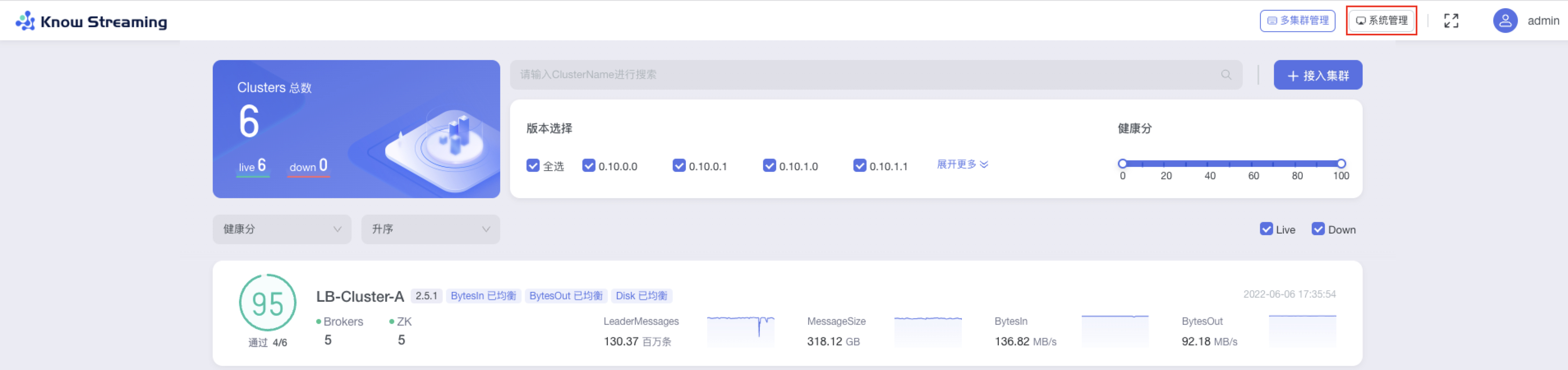

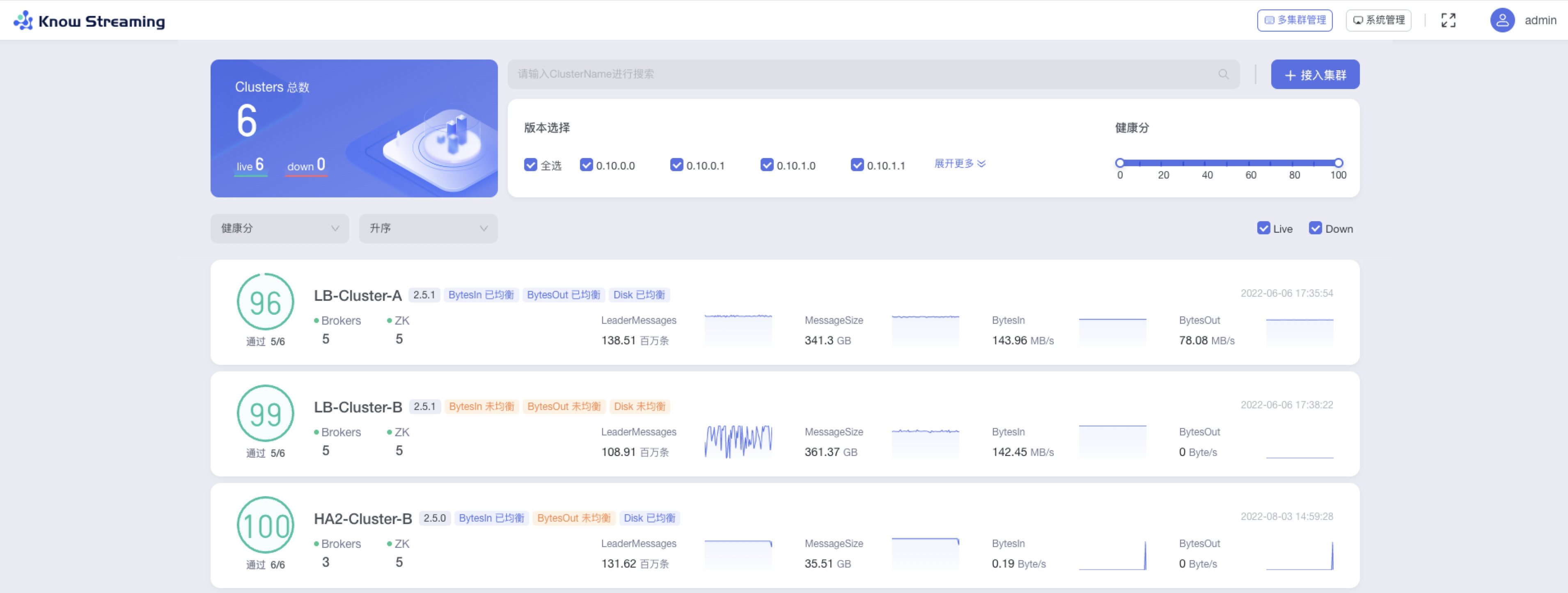

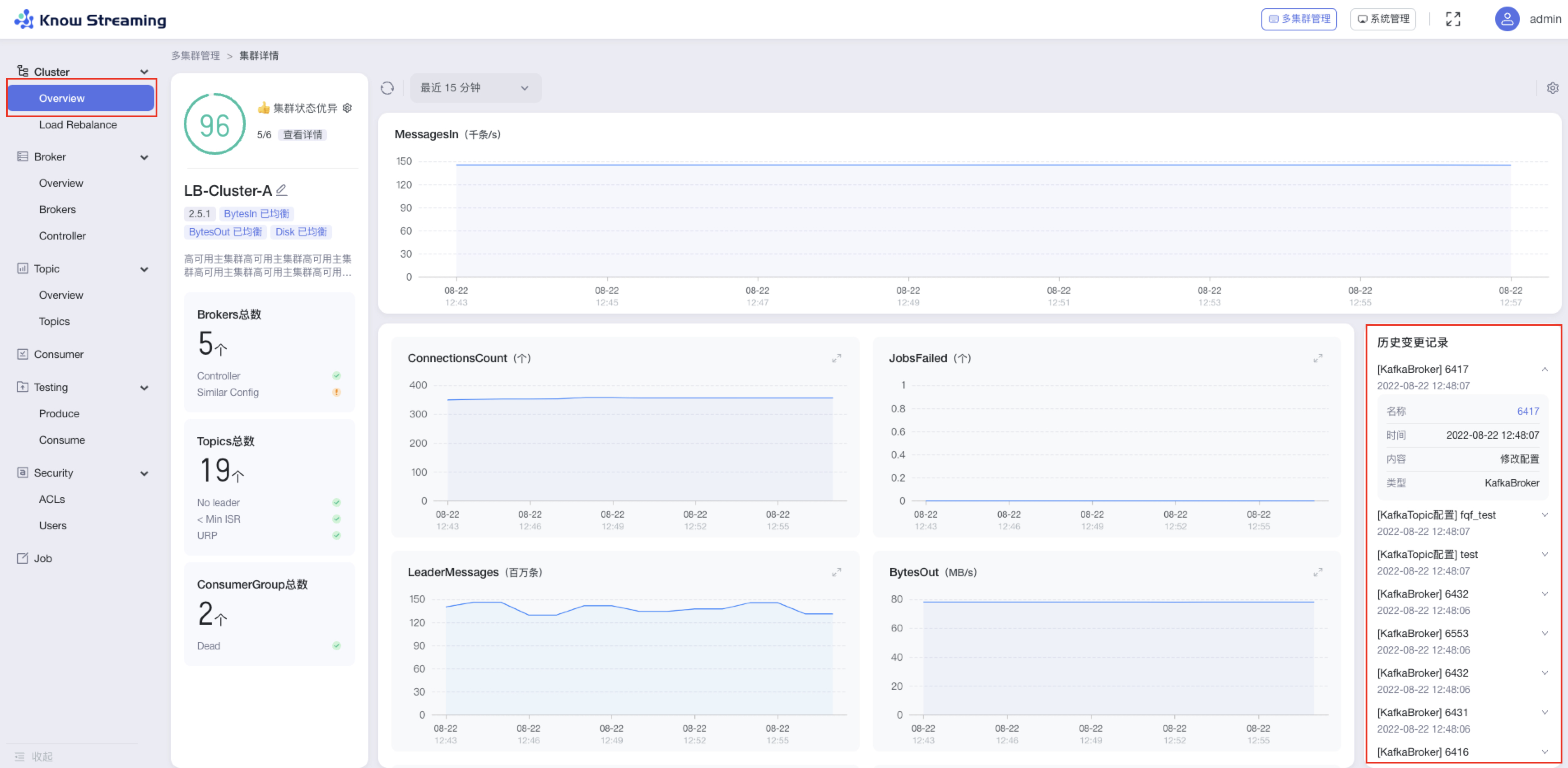

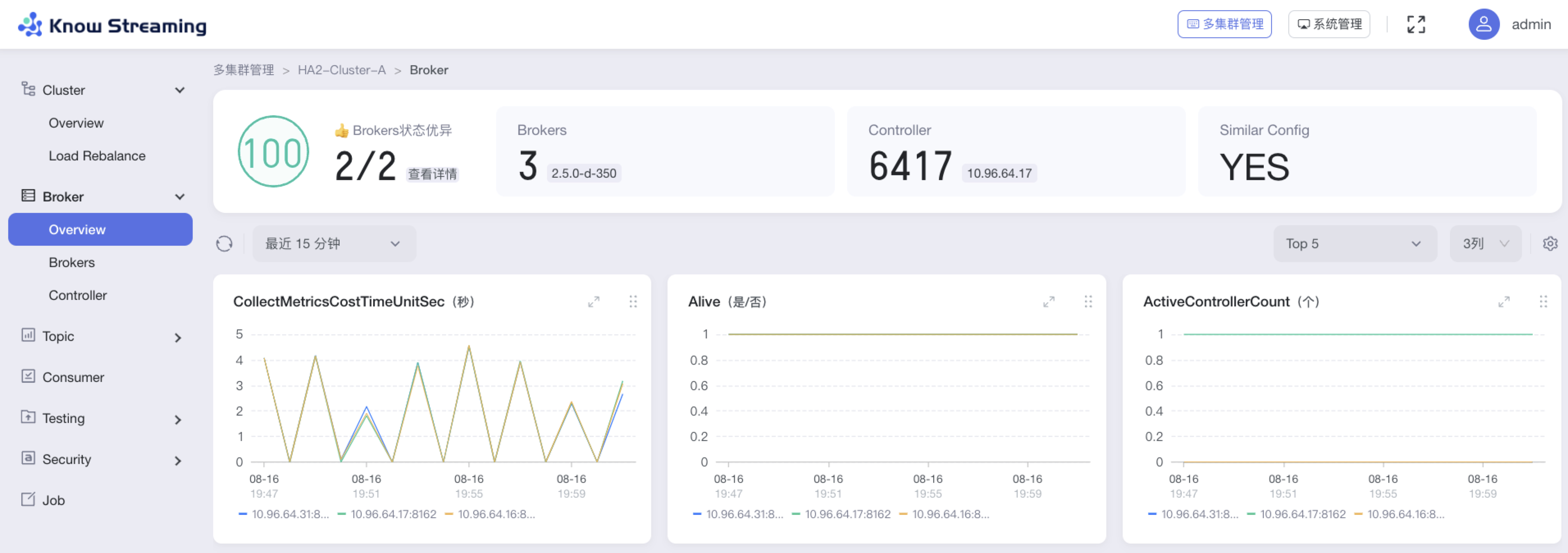

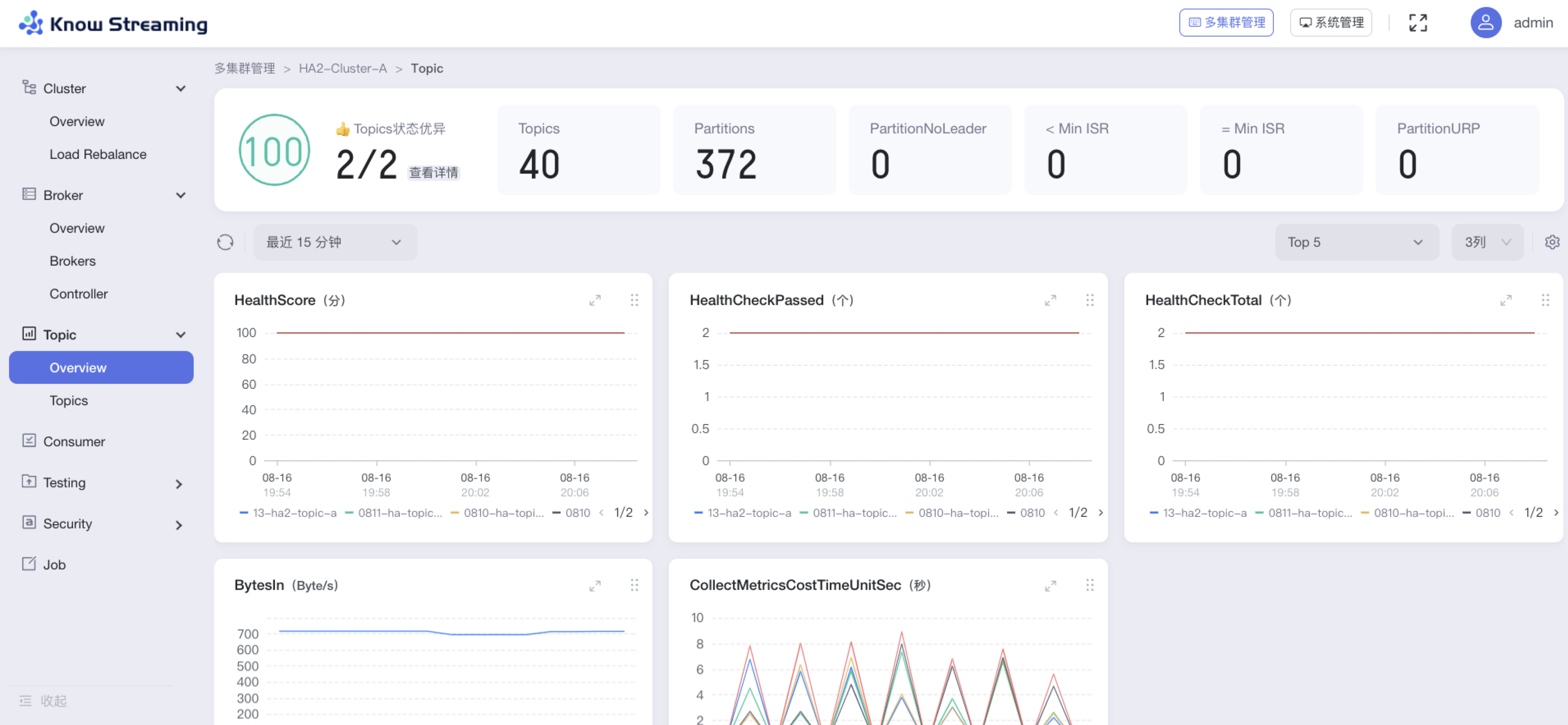

## `Know Streaming` 简介

|

|

||||||

|

|

||||||

`Know Streaming`是一套云原生的Kafka管控平台,脱胎于众多互联网内部多年的Kafka运营实践经验,专注于Kafka运维管控、监控告警、资源治理、多活容灾等核心场景。在用户体验、监控、运维管控上进行了平台化、可视化、智能化的建设,提供一系列特色的功能,极大地方便了用户和运维人员的日常使用,让普通运维人员都能成为Kafka专家。

|

|

||||||

|

|

||||||

我们现在正在收集 Know Streaming 用户信息,以帮助我们进一步改进 Know Streaming。

|

|

||||||

请在 [issue#663](https://github.com/didi/KnowStreaming/issues/663) 上提供您的使用信息来支持我们:[谁在使用 Know Streaming](https://github.com/didi/KnowStreaming/issues/663)

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

整体具有以下特点:

|

|

||||||

|

|

||||||

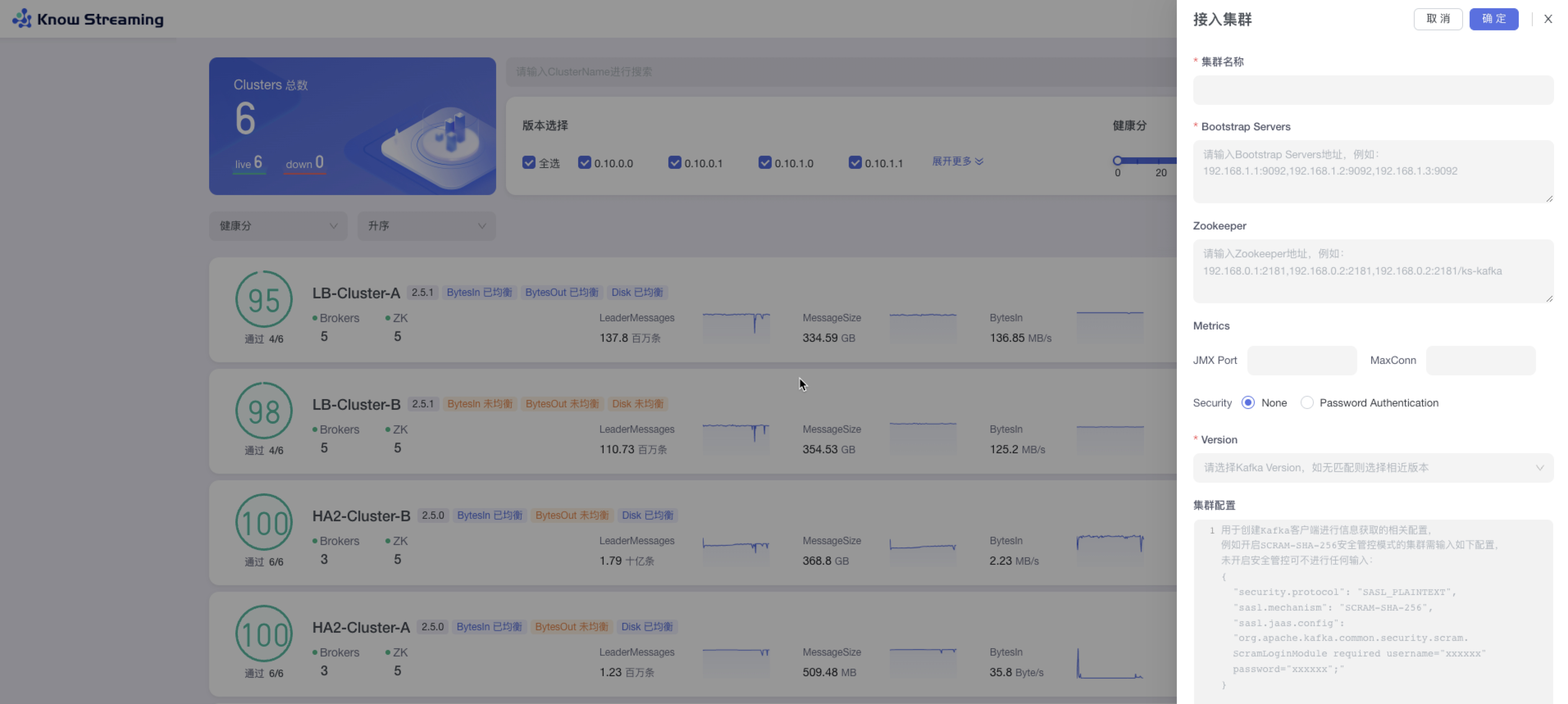

- 👀 **零侵入、全覆盖**

|

|

||||||

- 无需侵入改造 `Apache Kafka` ,一键便能纳管 `0.10.x` ~ `3.x.x` 众多版本的Kafka,包括 `ZK` 或 `Raft` 运行模式的版本,同时在兼容架构上具备良好的扩展性,帮助您提升集群管理水平;

|

|

||||||

|

|

||||||

- 🌪️ **零成本、界面化**

|

|

||||||

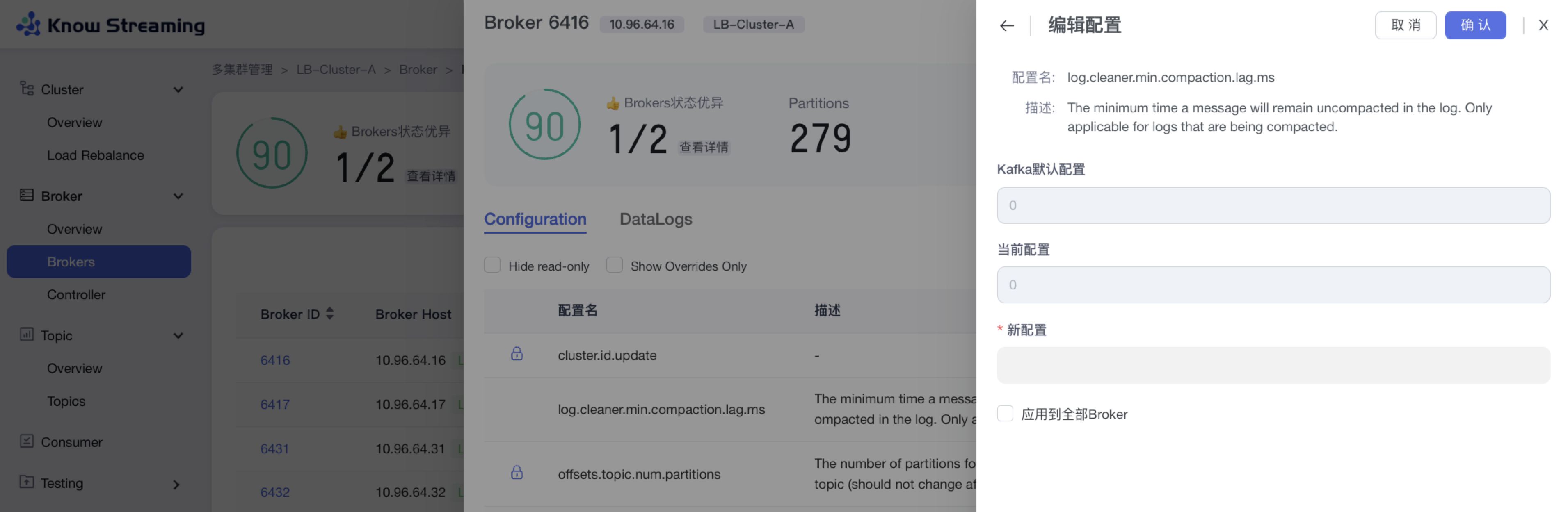

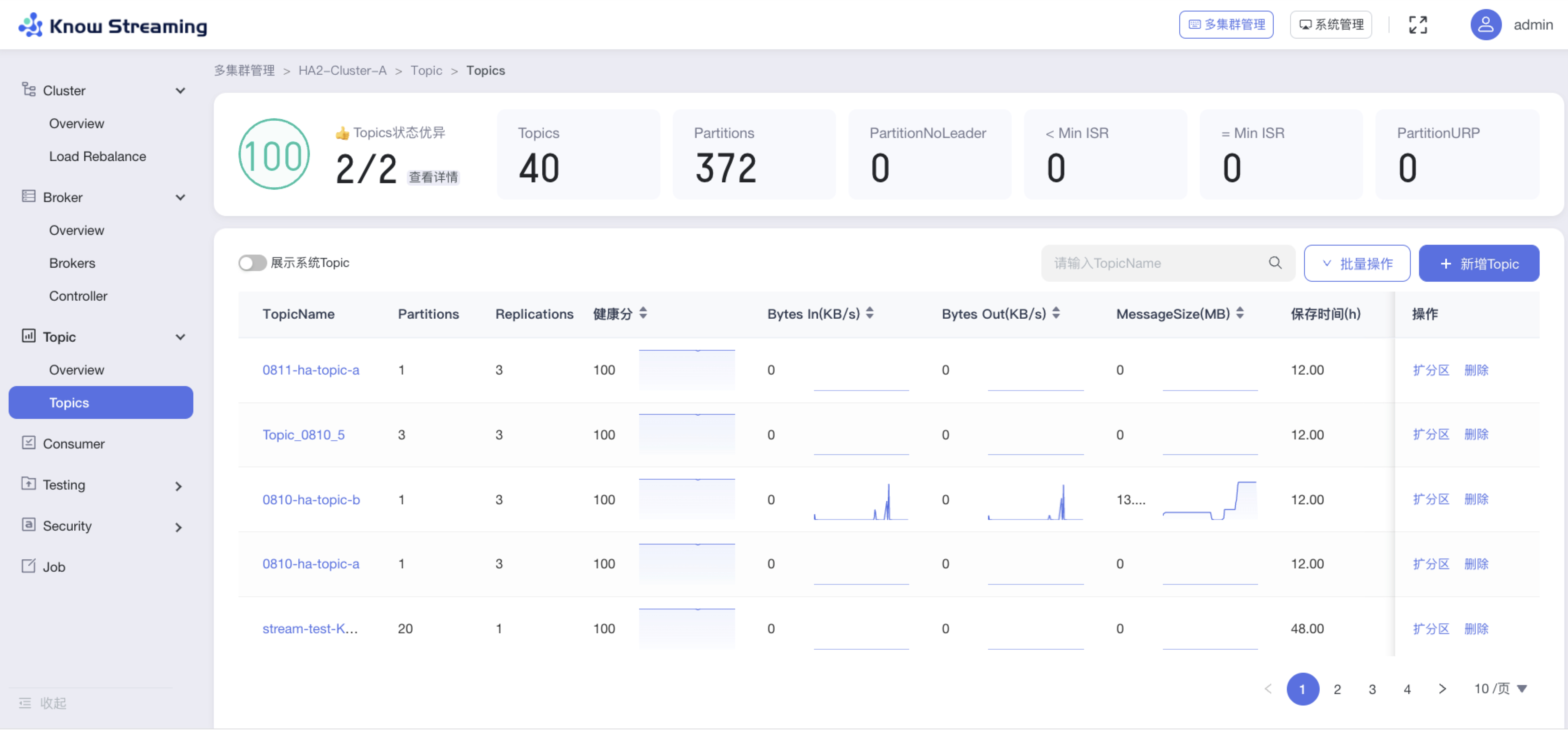

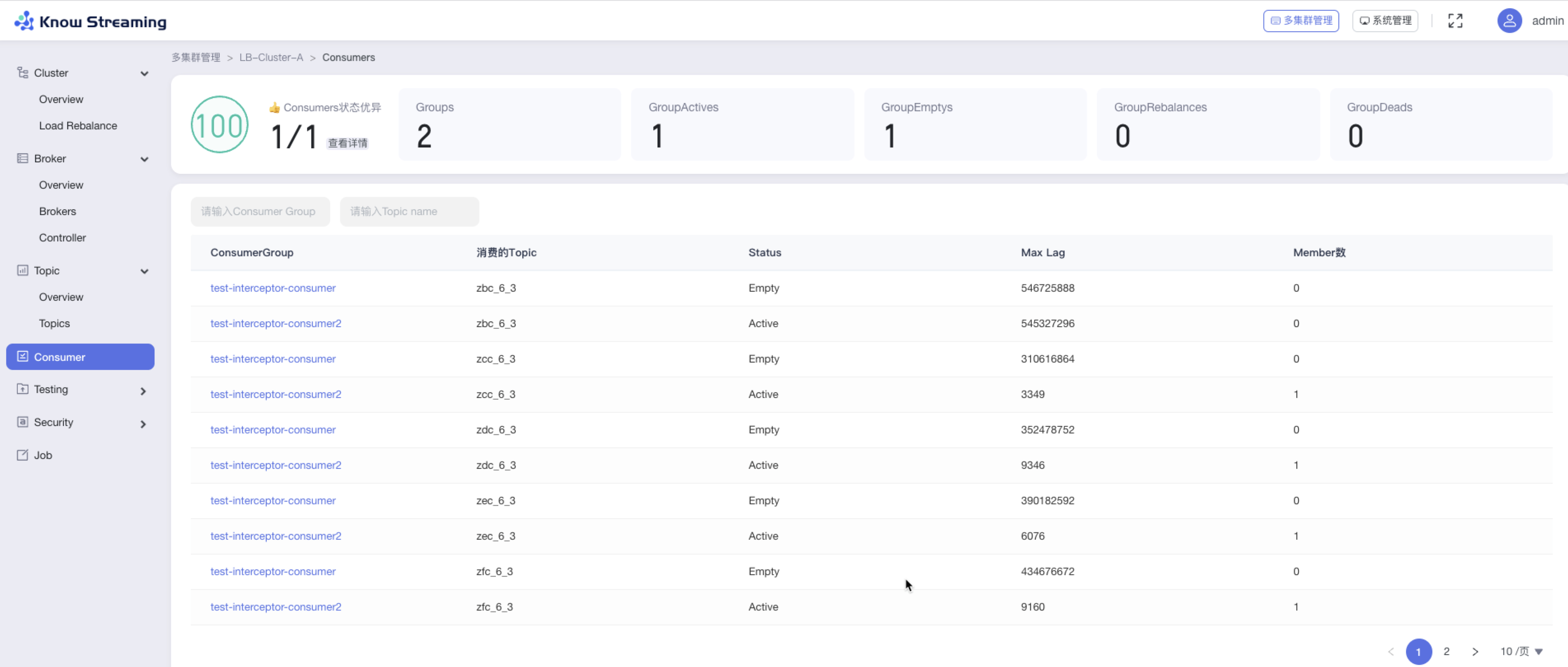

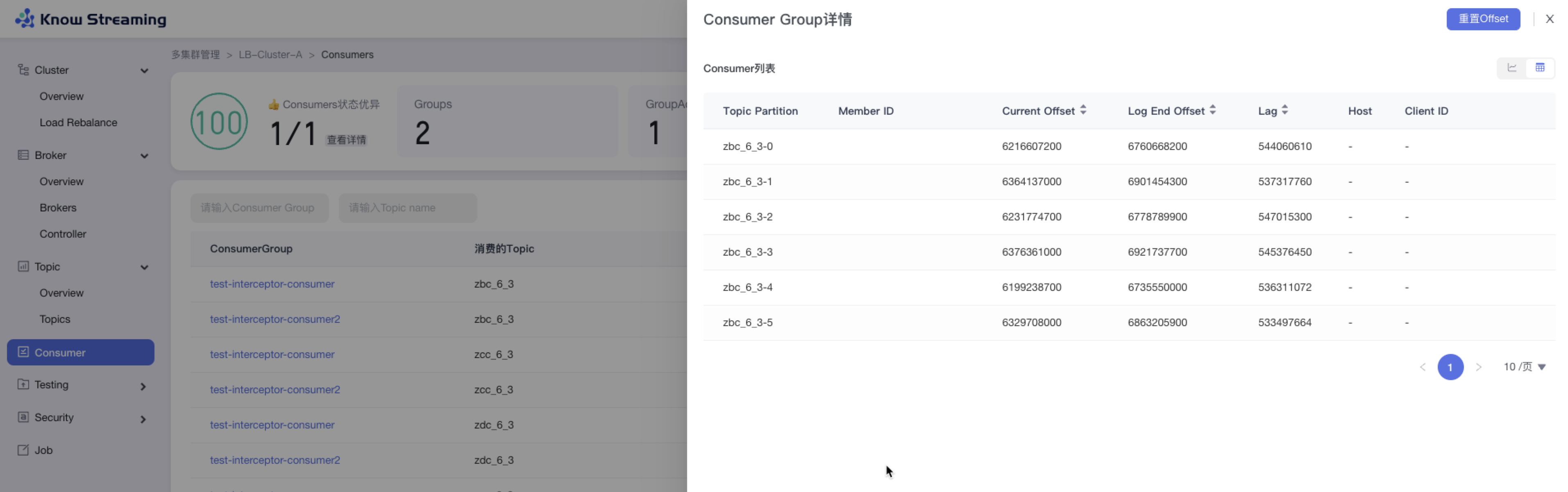

- 提炼高频 CLI 能力,设计合理的产品路径,提供清新美观的 GUI 界面,支持 Cluster、Broker、Zookeeper、Topic、ConsumerGroup、Message、ACL、Connect 等组件 GUI 管理,普通用户5分钟即可上手;

|

|

||||||

|

|

||||||

- 👏 **云原生、插件化**

|

|

||||||

- 基于云原生构建,具备水平扩展能力,只需要增加节点即可获取更强的采集及对外服务能力,提供众多可热插拔的企业级特性,覆盖可观测性生态整合、资源治理、多活容灾等核心场景;

|

|

||||||

|

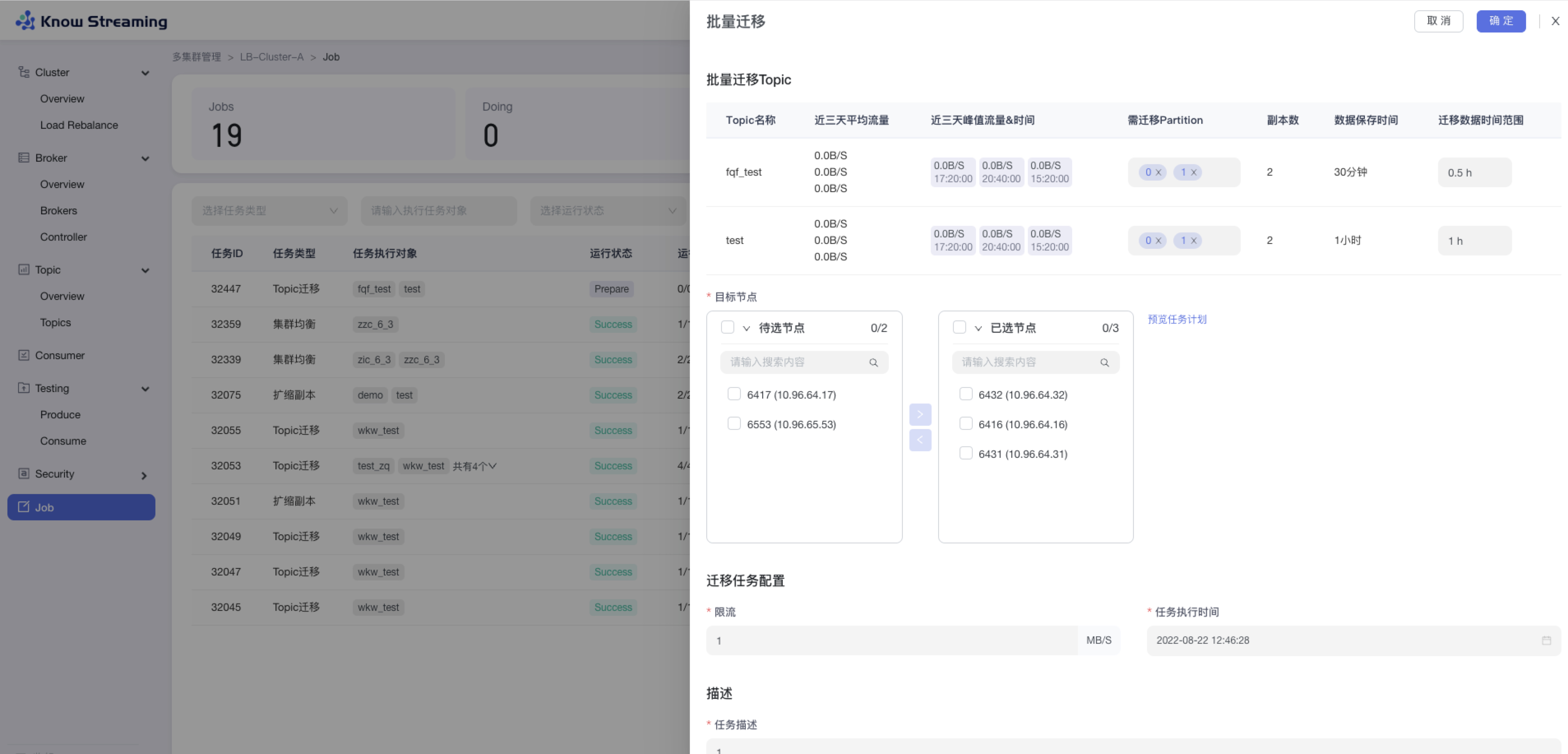

|

||||||

- 🚀 **专业能力**

|

|

||||||

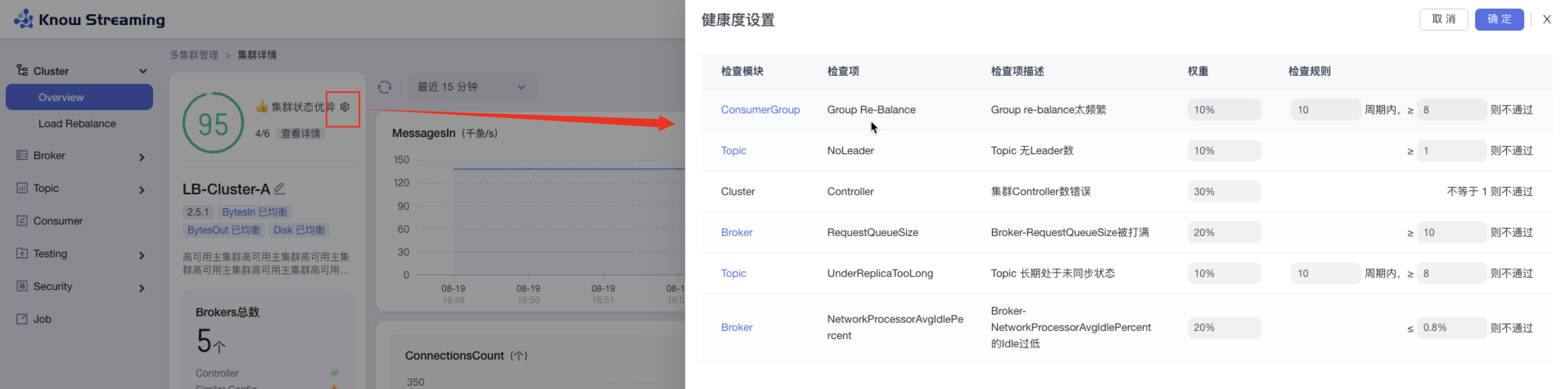

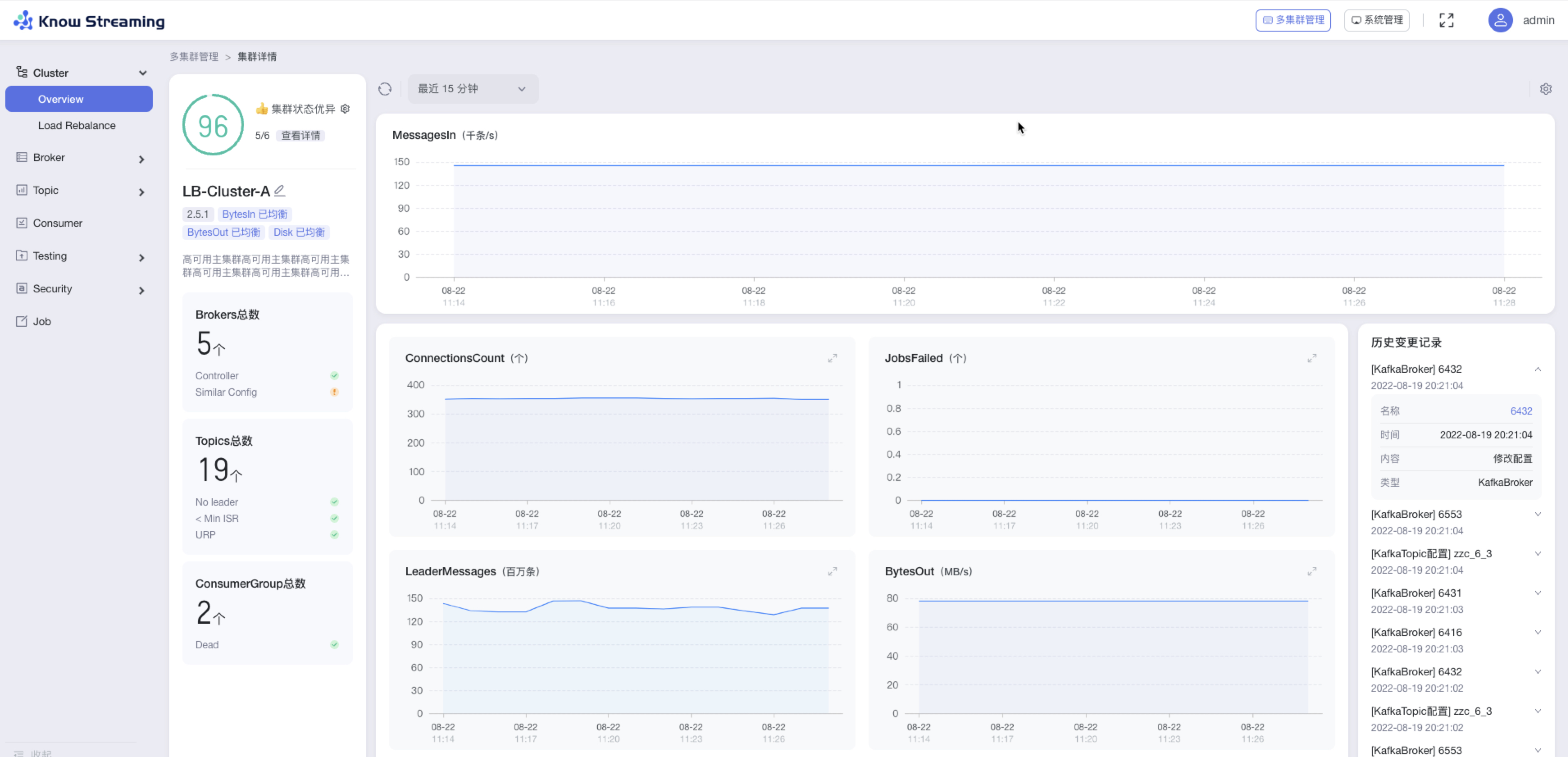

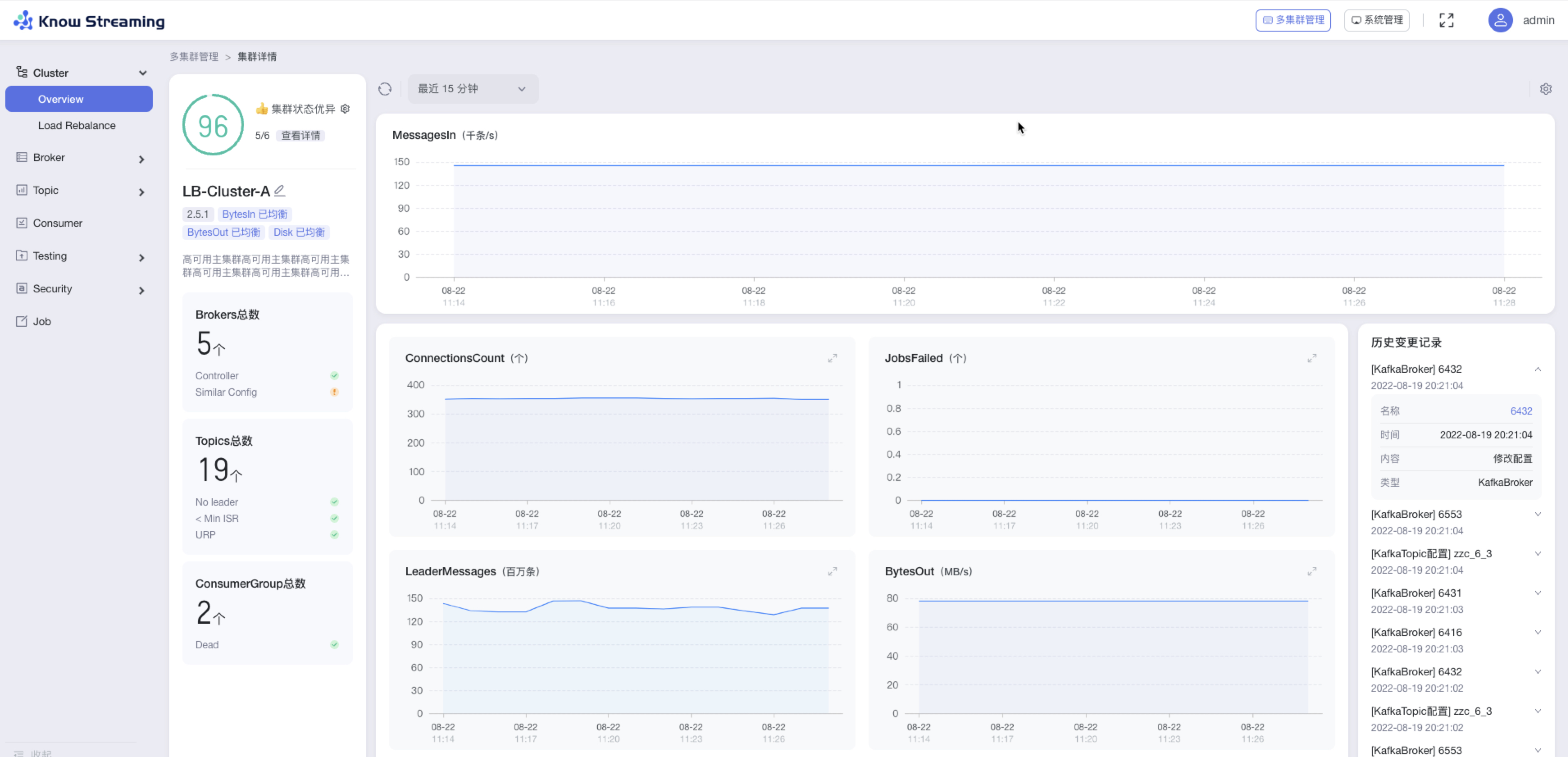

- 集群管理:支持一键纳管,健康分析、核心组件观测 等功能;

|

|

||||||

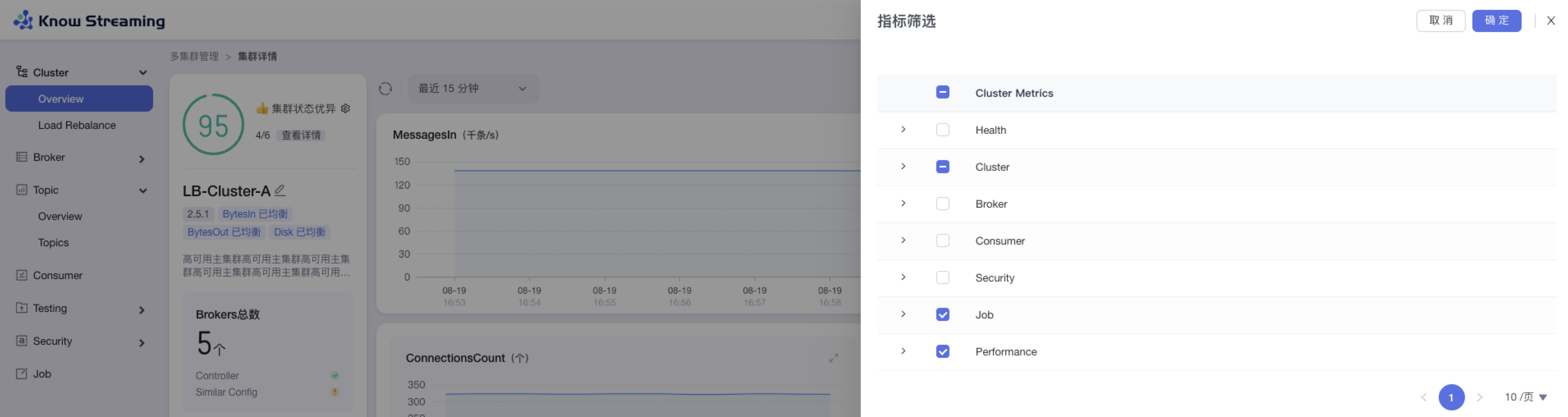

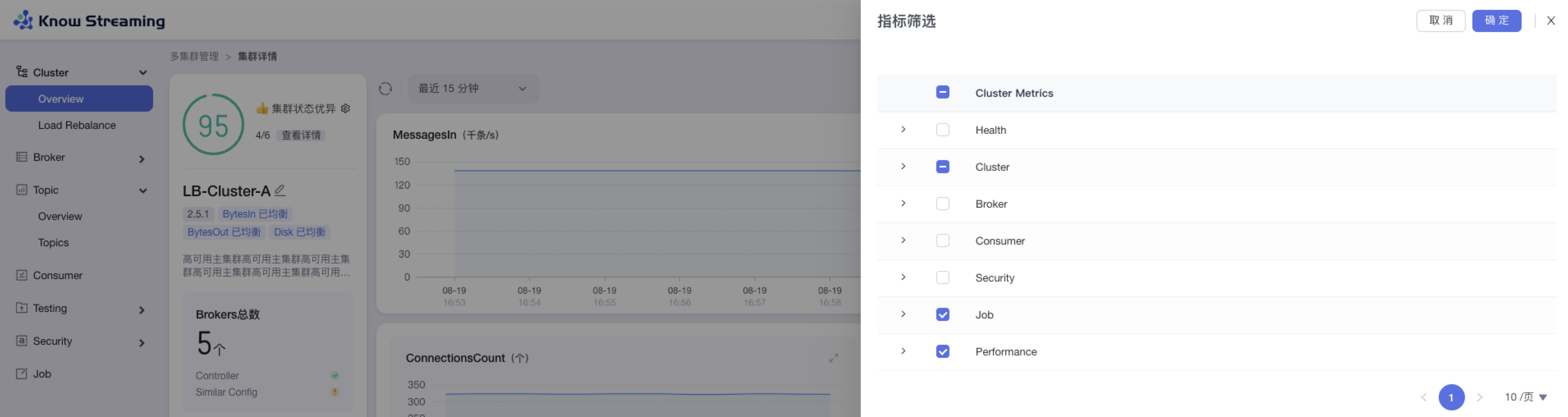

- 观测提升:多维度指标观测大盘、观测指标最佳实践 等功能;

|

|

||||||

- 异常巡检:集群多维度健康巡检、集群多维度健康分 等功能;

|

|

||||||

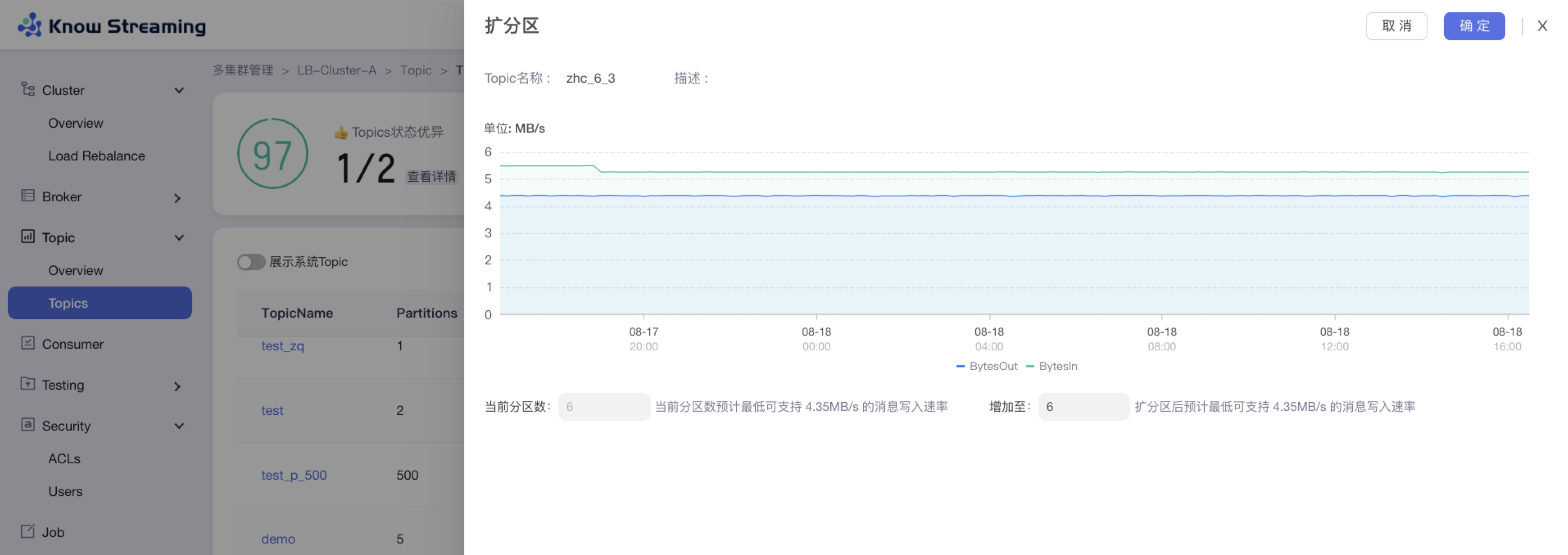

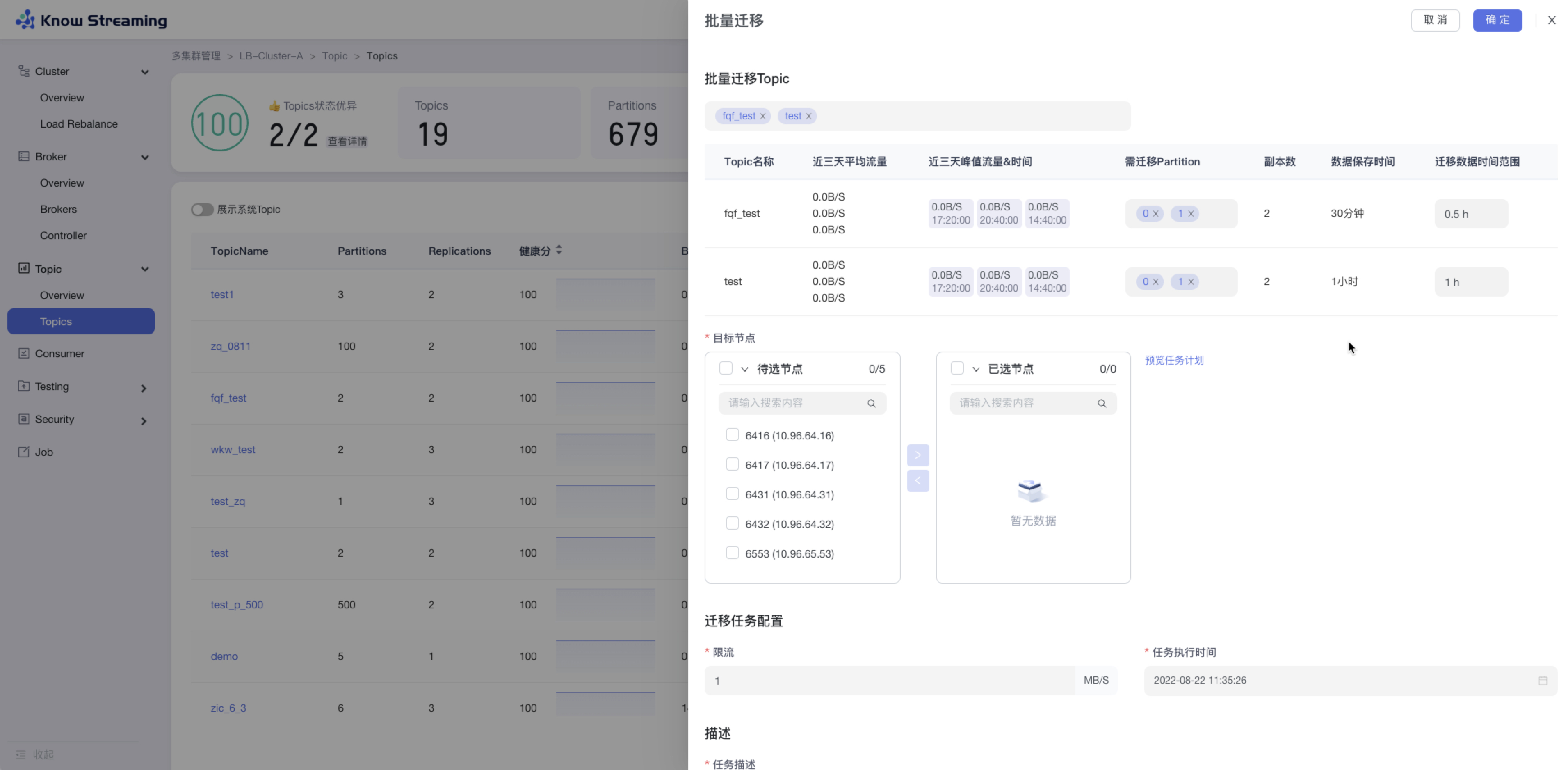

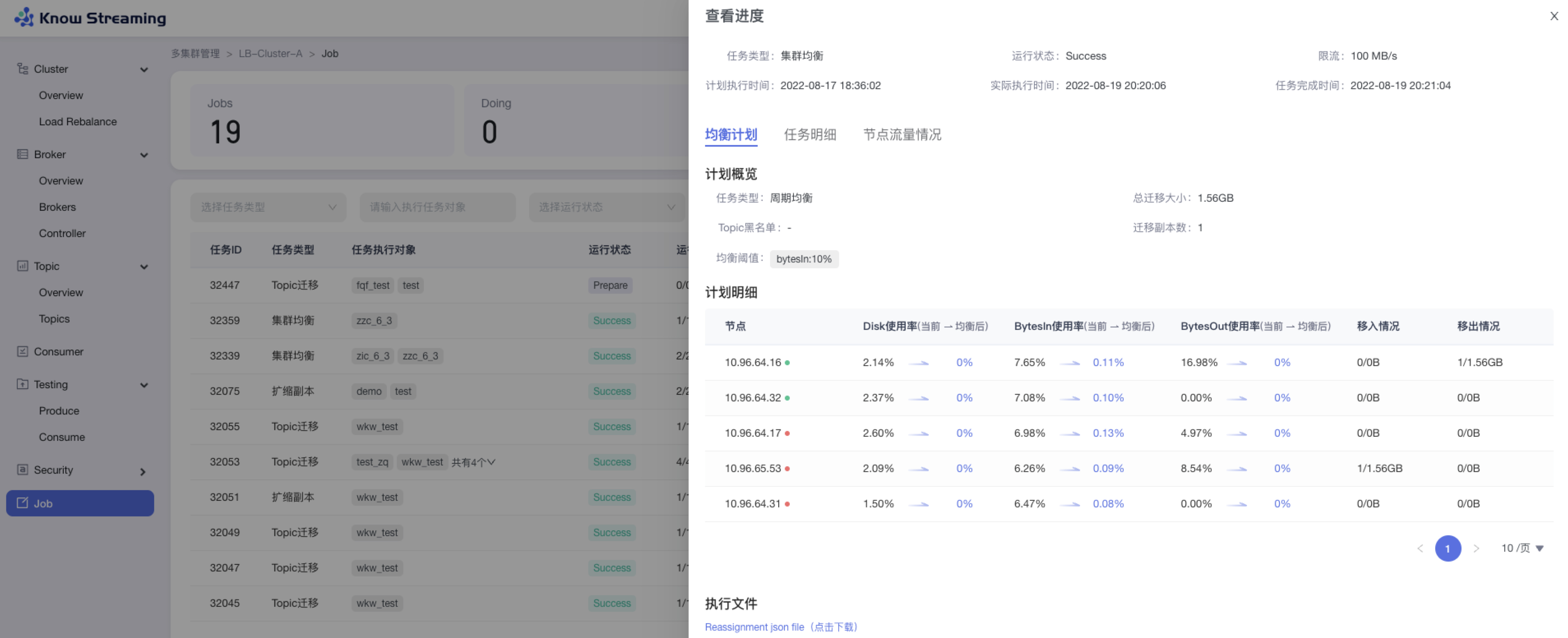

- 能力增强:集群负载均衡、Topic扩缩副本、Topic副本迁移 等功能;

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

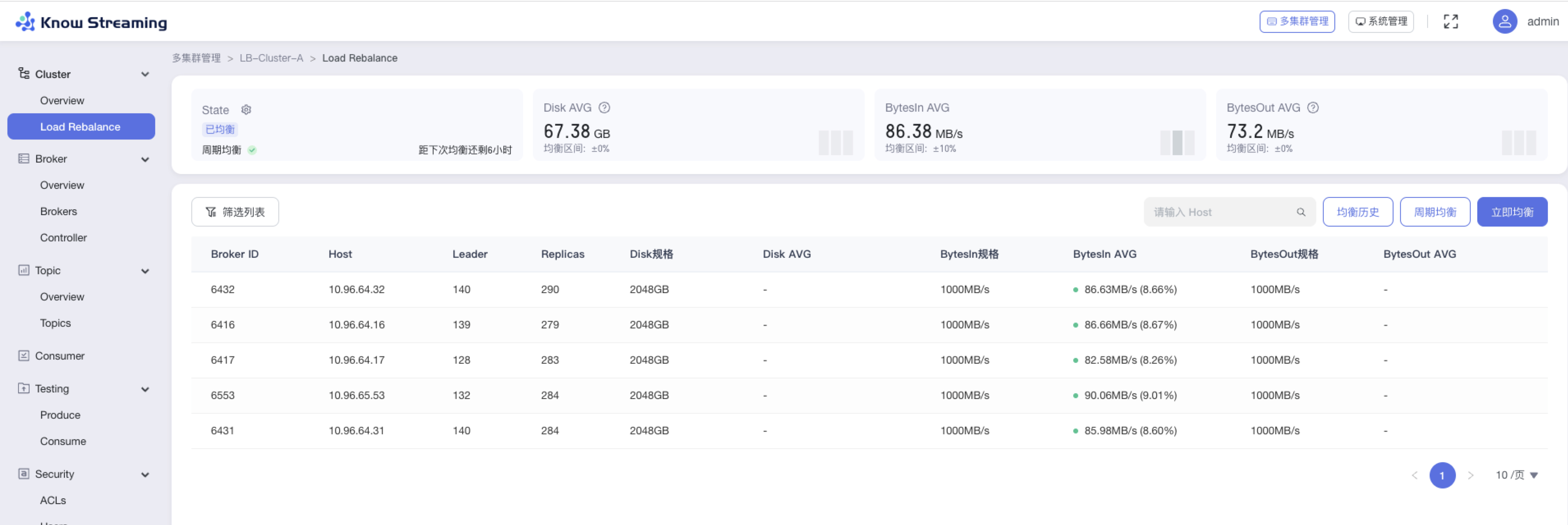

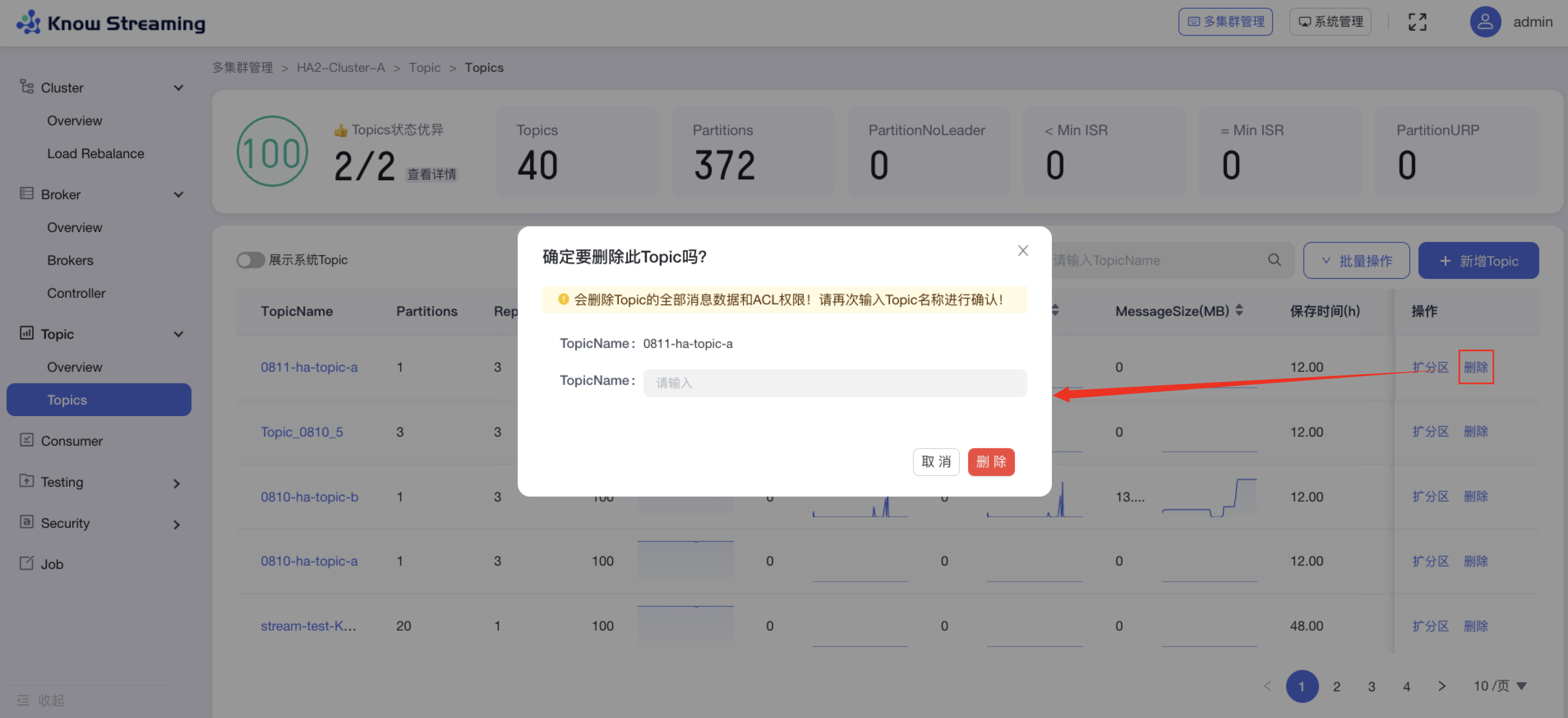

**产品图**

|

|

||||||

|

|

||||||

<p align="center">

|

|

||||||

|

|

||||||

<img src="http://img-ys011.didistatic.com/static/dc2img/do1_sPmS4SNLX9m1zlpmHaLJ" width = "768" height = "473" div align=center />

|

|

||||||

|

|

||||||

</p>

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

## Documentation

**`Development manuals`**

- [Build and packaging manual](docs/install_guide/源码编译打包手册.md)
- [Single-node deployment manual](docs/install_guide/单机部署手册.md)
- [Version upgrade manual](docs/install_guide/版本升级手册.md)
- [Running from source locally](docs/dev_guide/本地源码启动手册.md)
- [Troubleshooting pages with no data](docs/dev_guide/页面无数据排查手册.md)

**`Product manuals`**

- [User guide](docs/user_guide/用户使用手册.md)
- [2.x vs. 3.x comparison manual](docs/user_guide/新旧对比手册.md)
- [FAQ](docs/user_guide/faq.md)

**Click [here](https://doc.knowstreaming.com/product) to find more documentation on the official website**

**`Product links`**

- [Product website: https://knowstreaming.com](https://knowstreaming.com)
- [Demo environment: https://demo.knowstreaming.com](https://demo.knowstreaming.com), login: admin/admin

## Becoming a community contributor

1. [Contributing source code](https://doc.knowstreaming.com/product/10-contribution): learn how to become a Know Streaming contributor
2. [Detailed contribution workflow](https://doc.knowstreaming.com/product/10-contribution#102-贡献流程)
3. [Open-source incentive program](https://doc.knowstreaming.com/product/10-contribution#105-开源激励计划)
4. [Contributor list](https://doc.knowstreaming.com/product/10-contribution#106-贡献者名单)

Earn a KnowStreaming open-source community certificate.

## Join the technical discussion groups

**`1. Knowledge Planet (知识星球)`**

<p align="left">
<img src="https://user-images.githubusercontent.com/71620349/185357284-fdff1dad-c5e9-4ddf-9a82-0be1c970980d.JPG" height = "180" div align=left />
</p>

<br/>
<br/>
<br/>
<br/>
<br/>
<br/>
<br/>
<br/>

👍 We are building the largest and most authoritative **[Chinese-language Kafka community](https://z.didi.cn/5gSF9)** in China

Here you can meet Kafka experts from major internet companies and 4000+ Kafka enthusiasts, share knowledge, and keep up with the latest industry news. We look forward 👏 to having you join: https://z.didi.cn/5gSF9

Every question gets an answer, and active participants receive gifts!

PS: When asking a question, please describe the problem fully in one go and include your environment details, such as the version in use, the steps taken, and any error or warning messages, so that the experts can answer quickly.

**`2. WeChat group`**

To join the WeChat group, add the WeChat account `PenceXie` or `szzdzhp001` with the note "KnowStreaming" and ask to be added.
<br/>

Before joining, please give the project a star; a small star motivates the KnowStreaming authors in their effort to build the community.

Thank you very much!

<img width="116" alt="wx" src="https://user-images.githubusercontent.com/71620349/192257217-c4ebc16c-3ad9-485d-a914-5911d3a4f46b.png">

## Star History

[](https://star-history.com/#didi/KnowStreaming&Date)

@@ -1,646 +0,0 @@

|

|||||||

|

|

||||||

## v3.4.0

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

**问题修复**

|

|

||||||

- [Bugfix]修复 Overview 指标文案错误的错误 ([#1190](https://github.com/didi/KnowStreaming/issues/1190))

|

|

||||||

- [Bugfix]修复删除 Kafka 集群后,Connect 集群任务出现 NPE 问题 ([#1129](https://github.com/didi/KnowStreaming/issues/1129))

|

|

||||||

- [Bugfix]修复在 Ldap 登录时,设置 auth-user-registration: false 会导致空指针的问题 ([#1117](https://github.com/didi/KnowStreaming/issues/1117))

|

|

||||||

- [Bugfix]修复 Ldap 登录,调用 user.getId() 出现 NPE 的问题 ([#1108](https://github.com/didi/KnowStreaming/issues/1108))

|

|

||||||

- [Bugfix]修复前端新增角色失败等问题 ([#1107](https://github.com/didi/KnowStreaming/issues/1107))

|

|

||||||

- [Bugfix]修复 ZK 四字命令解析错误的问题

|

|

||||||

- [Bugfix]修复 zk standalone 模式下,状态获取错误的问题

|

|

||||||

- [Bugfix]修复 Broker 元信息解析方法未调用导致接入集群失败的问题 ([#993](https://github.com/didi/KnowStreaming/issues/993))

|

|

||||||

- [Bugfix]修复 ConsumerAssignment 类型转换错误的问题

|

|

||||||

- [Bugfix]修复对 Connect 集群的 clusterUrl 的动态更新导致配置不生效的问题 ([#1079](https://github.com/didi/KnowStreaming/issues/1079))

|

|

||||||

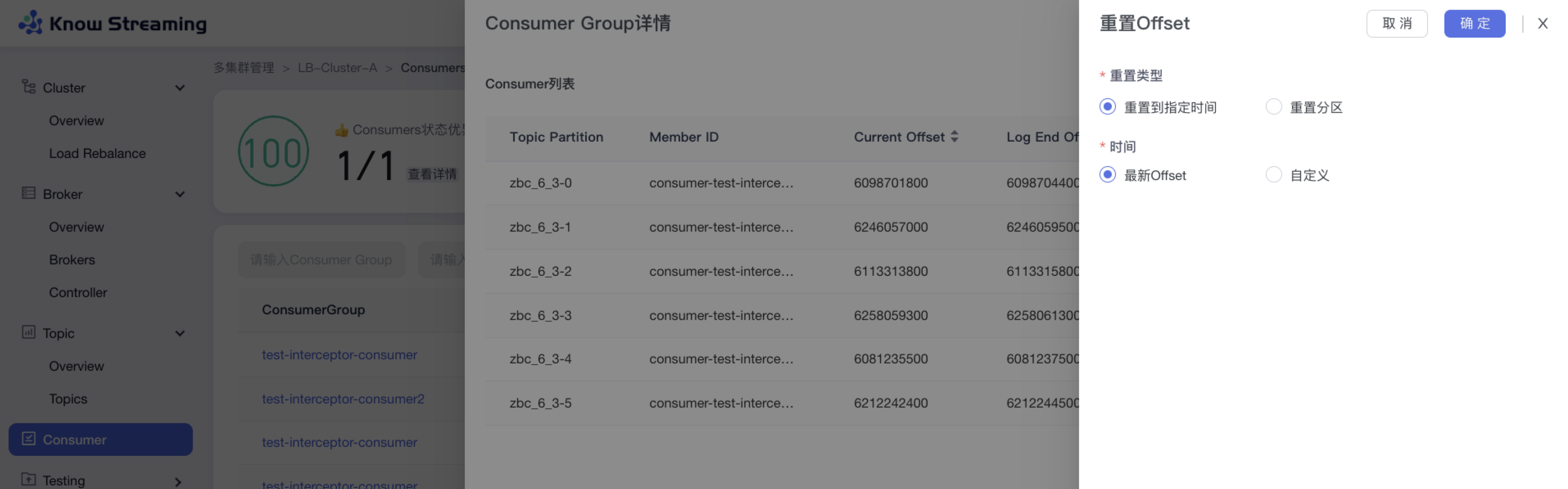

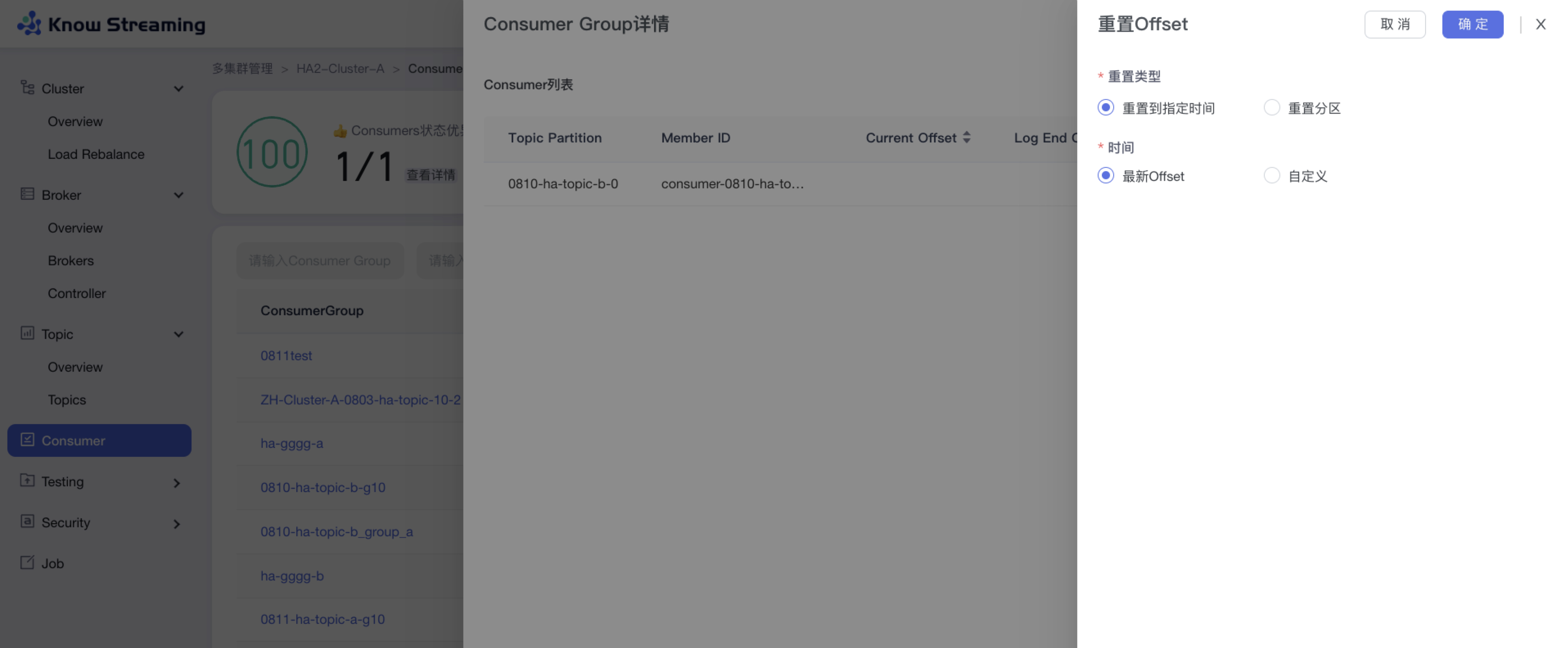

- [Bugfix]修复消费组不支持重置到最旧 Offset 的问题 ([#1059](https://github.com/didi/KnowStreaming/issues/1059))

|

|

||||||

- [Bugfix]后端增加查看 User 密码的权限点 ([#1095](https://github.com/didi/KnowStreaming/issues/1095))

|

|

||||||

- [Bugfix]修复 Connect-JMX 端口维护信息错误的问题 ([#1146](https://github.com/didi/KnowStreaming/issues/1146))

|

|

||||||

- [Bugfix]修复系统管理子应用无法正常启动的问题 ([#1167](https://github.com/didi/KnowStreaming/issues/1167))

|

|

||||||

- [Bugfix]修复 Security 模块,权限点缺失问题 ([#1069](https://github.com/didi/KnowStreaming/issues/1069)), ([#1154](https://github.com/didi/KnowStreaming/issues/1154))

|

|

||||||

- [Bugfix]修复 Connect-Worker Jmx 不生效的问题 ([#1067](https://github.com/didi/KnowStreaming/issues/1067))

|

|

||||||

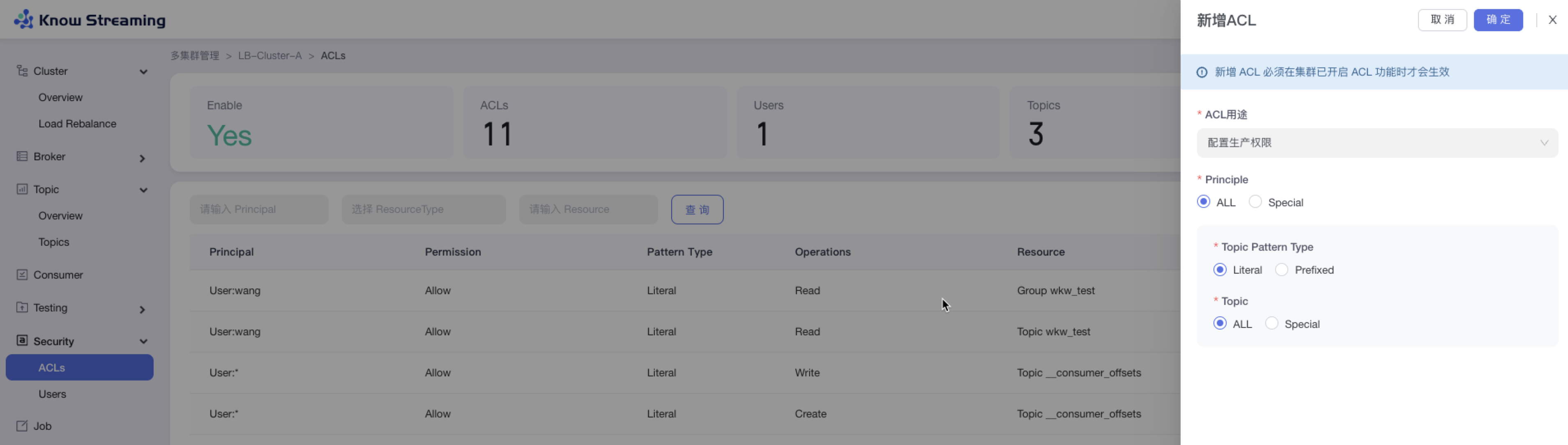

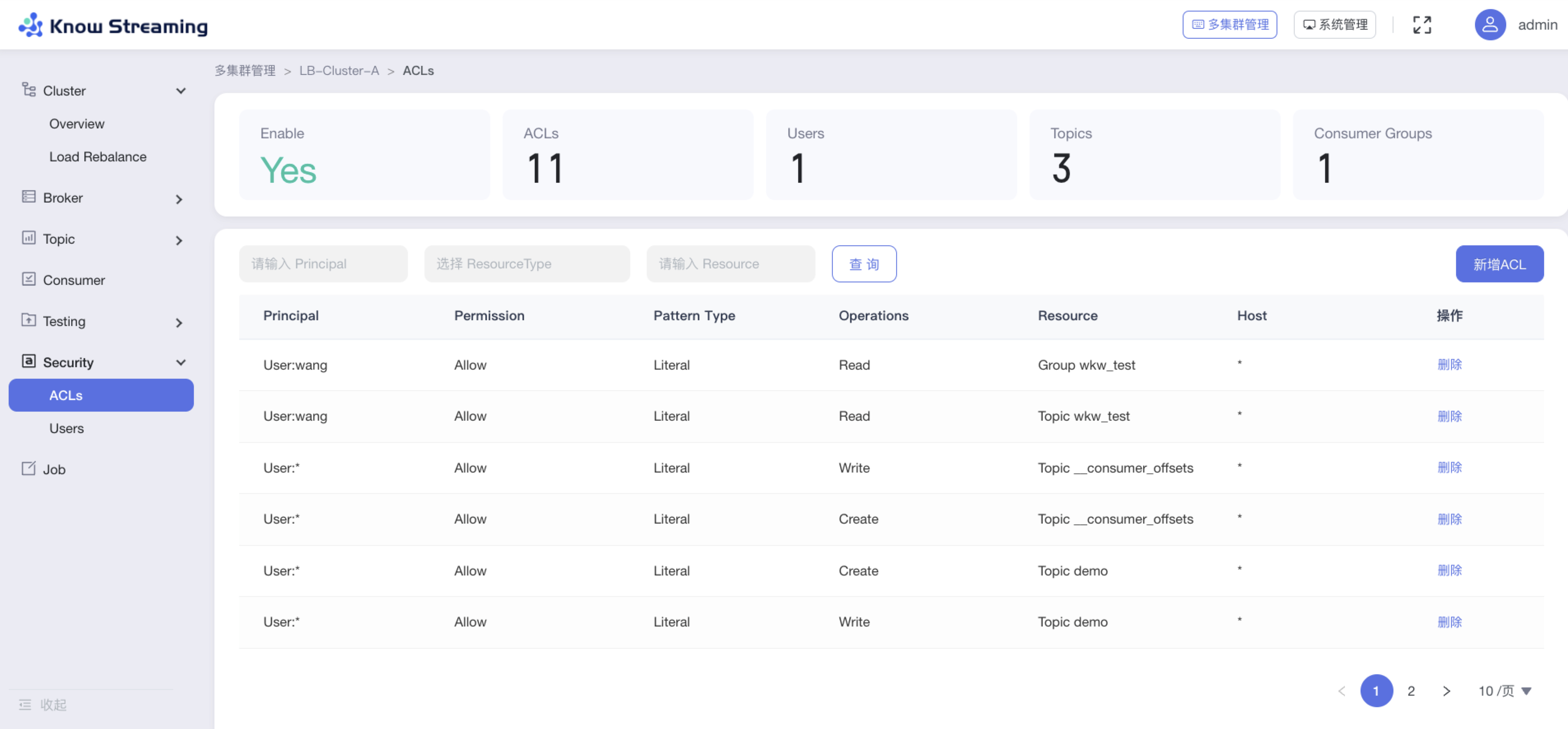

- [Bugfix]修复权限 ACL 管理中,消费组列表展示错误的问题 ([#1037](https://github.com/didi/KnowStreaming/issues/1037))

|

|

||||||

- [Bugfix]修复 Connect 模块没有默认勾选指标的问题([#1022](https://github.com/didi/KnowStreaming/issues/1022))

|

|

||||||

- [Bugfix]修复 es 索引 create/delete 死循环的问题 ([#1021](https://github.com/didi/KnowStreaming/issues/1021))

|

|

||||||

- [Bugfix]修复 Connect-GroupDescription 解析失败的问题 ([#1015](https://github.com/didi/KnowStreaming/issues/1015))

|

|

||||||

- [Bugfix]修复 Prometheus 开放接口中,Partition 指标 tag 缺失的问题 ([#1014](https://github.com/didi/KnowStreaming/issues/1014))

|

|

||||||

- [Bugfix]修复 Topic 消息展示,offset 为 0 不显示的问题 ([#1192](https://github.com/didi/KnowStreaming/issues/1192))

|

|

||||||

- [Bugfix]修复重置offset接口调用过多问题

|

|

||||||

- [Bugfix]Connect 提交任务变更为只保存用户修改的配置,并修复 JSON 模式下配置展示不全的问题 ([#1158](https://github.com/didi/KnowStreaming/issues/1158))

|

|

||||||

- [Bugfix]修复消费组 Offset 重置后,提示重置成功,但是前端不刷新数据,Offset 无变化的问题 ([#1090](https://github.com/didi/KnowStreaming/issues/1090))

|

|

||||||

- [Bugfix]修复未勾选系统管理查看权限,但是依然可以查看系统管理的问题 ([#1105](https://github.com/didi/KnowStreaming/issues/1105))

|

|

||||||

|

|

||||||

|

|

||||||

**产品优化**

|

|

||||||

- [Optimize]补充接入集群时,可选的 Kafka 版本列表 ([#1204](https://github.com/didi/KnowStreaming/issues/1204))

|

|

||||||

- [Optimize]GroupTopic 信息修改为实时获取 ([#1196](https://github.com/didi/KnowStreaming/issues/1196))

|

|

||||||

- [Optimize]增加 AdminClient 观测信息 ([#1111](https://github.com/didi/KnowStreaming/issues/1111))

|

|

||||||

- [Optimize]增加 Connector 运行状态指标 ([#1110](https://github.com/didi/KnowStreaming/issues/1110))

|

|

||||||

- [Optimize]统一 DB 元信息更新格式 ([#1127](https://github.com/didi/KnowStreaming/issues/1127)), ([#1125](https://github.com/didi/KnowStreaming/issues/1125)), ([#1006](https://github.com/didi/KnowStreaming/issues/1006))

|

|

||||||

- [Optimize]日志输出增加支持 MDC,方便用户在 logback.xml 中 json 格式化日志 ([#1032](https://github.com/didi/KnowStreaming/issues/1032))

|

|

||||||

- [Optimize]Jmx 相关日志优化 ([#1082](https://github.com/didi/KnowStreaming/issues/1082))

|

|

||||||

- [Optimize]Topic-Partitions增加主动超时功能 ([#1076](https://github.com/didi/KnowStreaming/issues/1076))

|

|

||||||

- [Optimize]Topic-Messages页面后端增加按照Partition和Offset纬度的排序 ([#1075](https://github.com/didi/KnowStreaming/issues/1075))

|

|

||||||

- [Optimize]Connect-JSON模式下的JSON格式和官方API的格式不一致 ([#1080](https://github.com/didi/KnowStreaming/issues/1080)), ([#1153](https://github.com/didi/KnowStreaming/issues/1153)), ([#1192](https://github.com/didi/KnowStreaming/issues/1192))

|

|

||||||

- [Optimize]登录页面展示的 star 数量修改为最新的数量

|

|

||||||

- [Optimize]Group 列表的 maxLag 指标调整为实时获取 ([#1074](https://github.com/didi/KnowStreaming/issues/1074))

|

|

||||||

- [Optimize]Connector增加重启、编辑、删除等权限点 ([#1066](https://github.com/didi/KnowStreaming/issues/1066)), ([#1147](https://github.com/didi/KnowStreaming/issues/1147))

|

|

||||||

- [Optimize]优化 pom.xml 中,KS版本的标签名

|

|

||||||

- [Optimize]优化集群Brokers中, Controller显示存在延迟的问题 ([#1162](https://github.com/didi/KnowStreaming/issues/1162))

|

|

||||||

- [Optimize]bump jackson version to 2.13.5

|

|

||||||

- [Optimize]权限新增 ACL,自定义权限配置,资源 TransactionalId 优化 ([#1192](https://github.com/didi/KnowStreaming/issues/1192))

|

|

||||||

- [Optimize]Connect 样式优化

|

|

||||||

- [Optimize]消费组详情控制数据实时刷新

|

|

||||||

|

|

||||||

|

|

||||||

**功能新增**

|

|

||||||

- [Feature]新增删除 Group 或 GroupOffset 功能 ([#1064](https://github.com/didi/KnowStreaming/issues/1064)), ([#1084](https://github.com/didi/KnowStreaming/issues/1084)), ([#1040](https://github.com/didi/KnowStreaming/issues/1040)), ([#1144](https://github.com/didi/KnowStreaming/issues/1144))

|

|

||||||

- [Feature]增加 Truncate 数据功能 ([#1062](https://github.com/didi/KnowStreaming/issues/1062)), ([#1043](https://github.com/didi/KnowStreaming/issues/1043)), ([#1145](https://github.com/didi/KnowStreaming/issues/1145))

|

|

||||||

- [Feature]支持指定 Server 的具体 Jmx 端口 ([#965](https://github.com/didi/KnowStreaming/issues/965))

|

|

||||||

|

|

||||||

|

|

||||||

**文档更新**

|

|

||||||

- [Doc]FAQ 补充 ES 8.x 版本使用说明 ([#1189](https://github.com/didi/KnowStreaming/issues/1189))

|

|

||||||

- [Doc]补充启动失败的说明 ([#1126](https://github.com/didi/KnowStreaming/issues/1126))

|

|

||||||

- [Doc]补充 ZK 无数据排查说明 ([#1004](https://github.com/didi/KnowStreaming/issues/1004))

|

|

||||||

- [Doc]无数据排查文档,补充 ES 集群 Shard 满的异常日志

|

|

||||||

- [Doc]README 补充页面无数据排查手册链接

|

|

||||||

- [Doc]补充连接特定 Jmx 端口的说明 ([#965](https://github.com/didi/KnowStreaming/issues/965))

|

|

||||||

- [Doc]补充 zk_properties 字段的使用说明 ([#1003](https://github.com/didi/KnowStreaming/issues/1003))

|

|

||||||

|

|

||||||

|

|

||||||

---

|

|

||||||

|

|

||||||

|

|

||||||

## v3.3.0

**Bug fixes**
- Fix the Connect JMX-Port configuration not taking effect;
- Fix the OverView page loading indefinitely when no Connector exists;
- Fix incomplete display of Group partition information when paging;
- Fix incorrect parameter passing when collecting replica metrics;
- Fix the user list throwing a null pointer exception after user information is modified;
- Fix partition selection not taking effect when viewing messages on the Topic details page;
- Fix ZK client configuration not taking effect;
- Fix the connect module missing the count of passed health check items in its metrics;
- Fix a mapping error in the metric retrieval methods of the connect module;
- Fix incorrect retrieval of max-dimension metrics in the connect module;
- Fix incorrect TopN metric information on the Topic metrics dashboard;
- Fix incorrect display of Broker Similar Config;
- Fix a null pointer caused by an incorrect data type when parsing ZK four-letter commands;
- Fix incorrect version control of cleanup policy options when creating a Topic;
- Fix Controller-Host information not being shown for newly onboarded clusters;
- Fix search not working in the Connector and MM2 lists;
- Fix abnormal Leader display on the Zookeeper page;
- Fix front-end build failure;

**Product improvements**
- Add default metrics to the ZK Overview page;
- Consolidate the ES index template initialization scripts into init_es_template.sh, add the missing connect index template initialization script, and remove the redundant replica and zookeeper index template initialization scripts;
- On the metrics dashboard, after metrics are filtered, cards with no data are now shown instead of hidden, with a no-data fallback;
- Remove the code that reads and writes replica metrics from ES;
- Improve Topic health check logs to make the cause of errors clear;
- When there is no ZK module, health check details no longer show ZK;
- Make the local cache size configurable;
- Add task group information to Task module responses;
- Add Ldap configuration notes to the FAQ;
- Add configuration notes to the FAQ for onboarding Kafka clusters that use Kerberos authentication;
- Add a time-dimension index to the ks_km_kafka_change_record table to improve query performance;
- Improve ZK health check logs to ease troubleshooting;

**New features**
- Add Topic replication based on DiDi Kafka (available only when using DiDi Kafka);
- Add Topic replication metrics to the Topic metrics dashboard;
- Add unit tests based on TestContainers;

**Kafka MM2 Beta (newly released in v3.3.0)**
- Create, read, update, and delete MM2 tasks;
- MM2 task metrics dashboard;
- MM2 task health status;

---

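## v3.2.0

**Bug fixes**
- Fix a deadlock when writing health check results to the DB;
- Fix the incorrect logger in the KafkaJMXClient class;
- Back end: fix Topic expiration policies being multi-selectable in version 0.10.1.0, when only one of the two should be selectable;
- Fix an error when the cluster configuration is left empty while onboarding a cluster;
- Upgrade spring-context to 5.3.19 to fix a security vulnerability;
- Fix incorrect version information in multi-version compatible configuration when modifying Broker & Topic configuration;
- Change the health score in the Topic list to a health status;
- Fix the Broker LogSize metric not being queryable because of an incorrect storage name;
- Fix some Group metrics missing in Prometheus;
- Fix incorrect cluster counts caused by a missing health status metric;
- Fix an exception when background tasks record operation logs without operating-user information;
- Fix an incorrect DSL when querying Replica metrics;
- Disable errorLogger to fix duplicate error log output;
- Fix failure to update user information in system management;
- Fix migration tasks staying in the running state because the original AR information was lost;
- Fix failures when querying real-time data for the cluster Topic list;
- Fix a blank page on the cluster Topic list;
- Fix an array index out-of-bounds error caused by abnormal AR data during replica changes;

**Product improvements**
- Run health checks concurrently in multiple threads by resource dimension;
- Unify the log output format and improve some log output;
- Improve misleading WARN logs produced while parsing ZK four-letter command results;
- Improve the search text for the directory structure in Zookeeper details;
- Improve thread pool names to help third-party systems analyze related issues;
- Remove concurrent access control on ESClient to reduce the number of ESClients created and improve utilization;
- Improve the text in the Topic Messages drawer;
- Improve error log messages when a ZK health check fails;
- Increase the timeout for fetching Offset information to reduce request timeouts under high concurrency;
- Improve the update strategy for Topic & Partition metadata to reduce DB connection usage;
- Resolve issues found by Sonar code scanning;
- Improve collection of partition Offset metrics;
- Improve the logic of front-end chart components;
- Refine the product theme color;
- Add a hover hint to the refresh button in the Consumer list;
- Improve the test dialog experience when configuring a Topic's message size;
- Improve the TopN query flow on the Overview page;

**New features**
- Add a troubleshooting document for pages with no data;
- Add ES index deletion;
- Support deploying the API service and the Job service separately;

**Kafka Connect Beta (newly released in v3.2.0)**
- Onboarding and management of Connect clusters;
- Create, read, update, and delete Connectors;
- Metrics dashboards for Connect clusters & Connectors;

---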

## v3.1.0

**Bug fixes**
- Fix the Group Offset reset prompt not mentioning that a reset is also possible in the Dead state;
- Fix a "Topic does not exist" message when viewing Topic Messages immediately after creating a Topic;
- Fix preferred replica election not being triggered properly during replica changes;
- Fix packaging failing when the git directory does not exist;
- Fix JMX PORT showing -1 for Kafka clusters in KRaft mode;

**Experience improvements**
- Change the health score of Cluster, Broker, Topic, and Group to a health status;
- Remove weight information from the health check configuration;
- Improve the error page display;
- Use the taobao mirror by default for front-end build dependencies;
- Redesign the navigation bar icons;

**New**
- Add product version information to the avatar drop-down menu;
- Add the distribution of cluster health statuses to the multi-cluster list page;

**Kafka ZK (officially released in v3.1.0)**
- Add a metrics dashboard for ZK clusters;
- Add a service status overview for ZK clusters;
- Add a service node list for ZK clusters;
- Add viewing of the data Kafka stores in ZK;
- Add ZK health checks and health status calculation;

---

## v3.0.1

|

|

||||||

|

|

||||||

**Bug修复**

|

|

||||||

- 修复重置 Group Offset 时,提示信息中缺少 Dead 状态也可进行重置的信息;

|

|

||||||

- 修复 Ldap 某个属性不存在时,会直接抛出空指针导致登陆失败的问题;

|

|

||||||

- 修复集群 Topic 列表页,健康分详情信息中,检查时间展示错误的问题;

|

|

||||||

- 修复更新健康检查结果时,出现死锁的问题;

|

|

||||||

- 修复 Replica 索引模版错误的问题;

|

|

||||||

- 修复 FAQ 文档中的错误链接;

|

|

||||||

- 修复 Broker 的 TopN 指标不存在时,页面数据不展示的问题;

|

|

||||||

- 修复 Group 详情页,图表时间范围选择不生效的问题;

|

|

||||||

|

|

||||||

|

|

||||||

**体验优化**

|

|

||||||

- 集群 Group 列表按照 Group 维度进行展示;

|

|

||||||

- 优化避免因 ES 中该指标不存在,导致日志中出现大量空指针的问题;

|

|

||||||

- 优化全局 Message & Notification 展示效果;

|

|

||||||

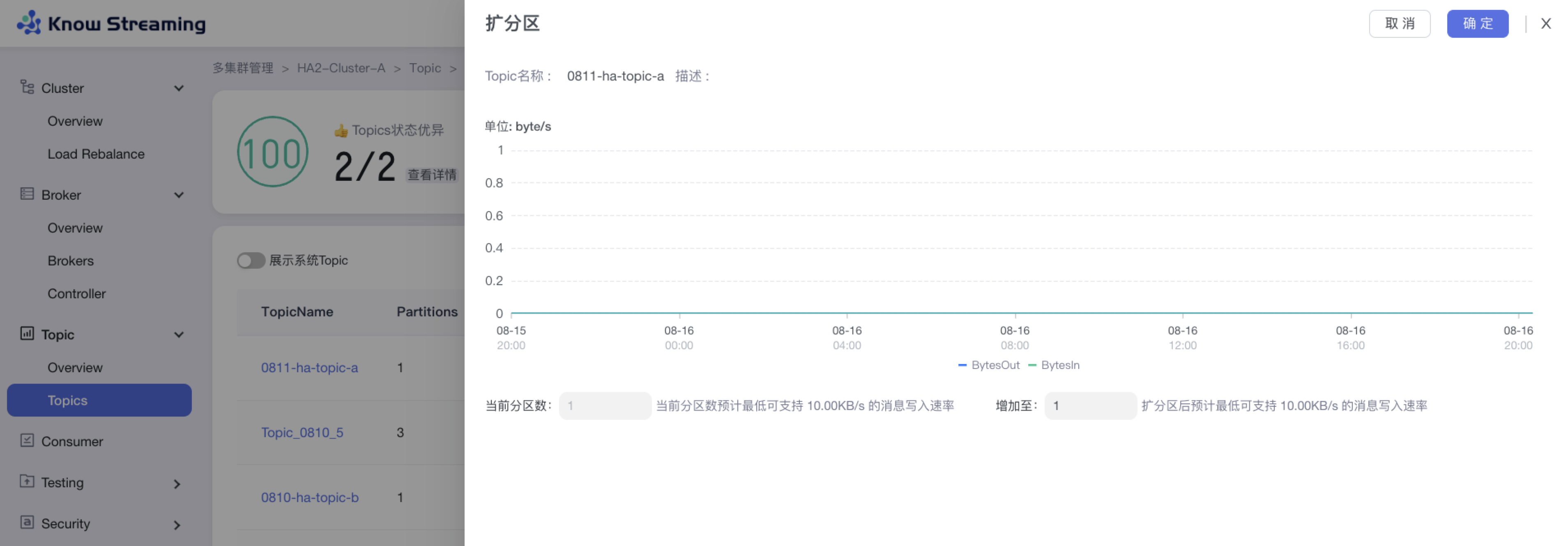

- 优化 Topic 扩分区名称 & 描述展示;

|

|

||||||

|

|

||||||

|

|

||||||

**新增**

|

|

||||||

- Broker 列表页面,新增 JMX 是否成功连接的信息;

|

|

||||||

|

|

||||||

|

|

||||||

**ZK 部分(未完全发布)**

|

|

||||||

- 后端补充 Kafka ZK 指标采集,Kafka ZK 信息获取相关功能;

|

|

||||||

- 增加本地缓存,避免同一采集周期内 ZK 指标重复采集;

|

|

||||||

- 增加 ZK 节点采集失败跳过策略,避免不断对存在问题的节点不断尝试;

|

|

||||||

- 修复 zkAvgLatency 指标转 Long 时抛出异常问题;

|

|

||||||

- 修复 ks_km_zookeeper 表中,role 字段类型错误问题;

|

|

||||||

|

|

||||||

---

|

|

||||||

|

|

||||||

## v3.0.0

**Bug fixes**
- Fix Group metric duplicate-collection prevention not taking effect
- Fix failure to create ES index templates automatically
- Fix deleted Topics appearing in the Group+Topic list
- Fix task creation failing with MySQL-8 when start_time is NULL, due to a compatibility issue
- Fix a deadlock when updating the Group information table
- Fix the chart point-filling logic not matching the chart time range

**Experience improvements**
- Split health check tasks by resource category
- Metrics on the Group details page are now fetched in real time
- Chart drag-and-drop ordering is now stored per user
- ZK information in the multi-cluster list now handles clusters without ZK
- Message preview in Topic details supports copying
- Some large numbers are displayed with thousands separators

**New**
- Add a Zookeeper client configuration field to the cluster information
- Add a Kafka cluster running-mode field to the cluster information
- Add docker-compose deployment

---

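## v3.0.0-beta.3

**Documentation**

- Added an FAQ entry on permission recognition failures
- Synchronized the documentation with the official website

**Bug Fixes**

- Partitions without a Leader are now filtered out when fetching Offset information
- Upgraded oshi-core to 5.6.1 to fix failures when collecting system metrics on Windows
- Fixed JMX connections not being re-established after being closed
- Fixed a NullPointerException when fetching the TotalLogSize metric because the Broker information was missing from the DB
- Fixed incorrect SQL comments in dml-logi.sql
- Fixed incorrect operating system detection in startup.sh
- Fixed configuration deletion failing on the configuration management page
- Fixed file reference paths in the system management application
- Fixed the hint link in the Topic Messages details leading to a 404 page
- Fixed the current replica count not being displayed when adding replicas

**Experience Improvements**

- The Topic-Messages page now supports sorting the returned data and fetching by Earliest/Latest
- Renamed the GroupOffsetResetEnum class to OffsetTypeEnum so that the name reflects its meaning more accurately
- Moved the KafkaZKDAO class and the Kafka Znode entity classes so that the Kafka Zookeeper DAO is more cohesive and easier to identify
- Added backend support for sorting metrics on the Overview page
- Optimized the frontend Webpack configuration
- Removed the zoom-in display from Cluster Overview charts
- Added manual refresh to list pages
- When adding or editing a cluster, improved how JMX-PORT and Version values are echoed back and how JMX information is displayed
- Improved image sharpness on the login page
- Improved some styles and copy

---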

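## v3.0.0-beta.2

**Documentation**

- Added documentation on integrating with a login system
- Improved the documentation on packaging and building the frontend project
- Added an FAQ entry on connecting KnowStreaming to a specific JMX IP

**Bug Fixes**

- Fixed operations such as Topic deletion not being recorded because a field in the logi_security_oplog table was too short
- Fixed java.lang.NumberFormatException: For input string: "{"value":0,"relation":"eq"}" being thrown during ES queries
- Fixed the incorrect units of the LogStartOffset and LogEndOffset metrics
- Fixed the old replica count being NULL when changing replicas
- Fixed incorrect pagination information returned when searching from the second page of the cluster Group list
- Fixed inconsistent error messages returned when resetting Offsets
- Fixed missing permission points on the cluster view, system view, LoadRebalance, and other pages
- Fixed the unclear error message when querying a Topic that does not exist
- Fixed errors when packaging the frontend project on Windows
- Locked frontend dependency versions with package-lock.json to fix packaging failures caused by automatic dependency upgrades
- Added interception of backend response codes in the system management sub-application so that errors returned by backend APIs are displayed
- Fixed users still being able to access the system after logging out
- Fixed incorrect values displayed when configuring inspection tasks
- Fixed issues with Broker/Topic Overview charts and chart details
- Fixed incorrect detail data for Job replica scaling tasks
- Fixed the missing limits on partition ID and Offset values when resetting Offsets
- Fixed Kafka system Topics not being selectable when scaling or migrating replicas
- Fixed the edit form on the Topic Config page not echoing back the current values correctly
- Fixed the Broker Card remaining in the loading state after data was returned

**Experience Improvements**

- Changed the default username/password to admin/admin
- Reduced the time needed to load cluster information after adding a cluster
- Added Controller role information to the cluster Broker list
- A preferred replica election is now performed after a replica change task finishes
- Split Task module tasks into three categories (Metrics, Common, Metadata), each with its own thread pool, to reduce interference with the Job module's thread pool and between task categories
- Removed redundant, unused files from the codebase
- ES index templates and indices for the last 7 days are now created automatically, reducing setup work for users
- Improved the frontend packaging process
- Improved the login page copy, the left sidebar content, single-cluster detail styles, Topic list trend charts, and more
- Data is now pre-cached on first entry to Broker/Topic chart details to improve the experience
- Improved the Partition tab in Topic details
- Added editing to the multi-cluster list page
- Migration time for replica changes now supports minute-level granularity
- Upgraded logi-security to 2.10.13
- Upgraded logi-elasticsearch-client to 1.0.24

**New Capabilities**

- Added support for LDAP login authentication

---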

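## v3.0.0-beta.1

**Documentation**

- Added documentation for the Task module
- Added an FAQ entry for the `Specified key was too long; max key length is 767 bytes` error
- Added an FAQ entry for the `ESIndexNotFoundException` error

**Bug Fixes**

- Fixed Consumer retrieval not stopping when Stop is clicked
- Fixed errors when creating or editing role permissions
- Fixed the incorrect status of the balance card in multi-cluster management and single-cluster details
- Fixed the version list not being sorted
- Fixed Controller information being recorded continuously for Raft clusters
- Fixed failures to fetch consumer group descriptions on some versions
- Fixed the Topic name missing from logs when fetching a partition Offset fails
- Fixed incorrect GitHub image URLs and broken images
- Fixed the default Broker address not matching its comment
- Fixed pagination not taking effect in the Consumer list
- Fixed the missing default value for the operation_methods field in the operation record table
- Fixed the invalid move_broker_list field in the cluster balance table
- Fixed the logs repeatedly reporting "not supported" when fetching KafkaUser and KafkaACL information
- Fixed curves dropping to the bottom of the chart when metrics are missing

**Experience Improvements**

- Reduced frontend build time and bundle size, and added a chunk-splitting strategy for dependencies
- Improved product styles and copy
- Made the number of ES clients configurable
- Reduced the large number of MySQL key conflict messages in the logs

**New Capabilities**

- Added a periodic task that proactively creates missing ES templates and indices, reducing the need for extra scripts
- Added the ability to choose which Broker address is used for JMX connections

---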

## v3.0.0-beta.0

|

|

||||||

|

|

||||||

**1、多集群管理**

|

|

||||||

|

|

||||||

- 增加健康监测体系、关键组件&指标 GUI 展示

|

|

||||||

- 增加 2.8.x 以上 Kafka 集群接入,覆盖 0.10.x-3.x

|

|

||||||

- 删除逻辑集群、共享集群、Region 概念

|

|

||||||

|

|

||||||

**2、Cluster 管理**

|

|

||||||

|

|

||||||

- 增加集群概览信息、集群配置变更记录

|

|

||||||

- 增加 Cluster 健康分,健康检查规则支持自定义配置

|

|

||||||

- 增加 Cluster 关键指标统计和 GUI 展示,支持自定义配置

|

|

||||||

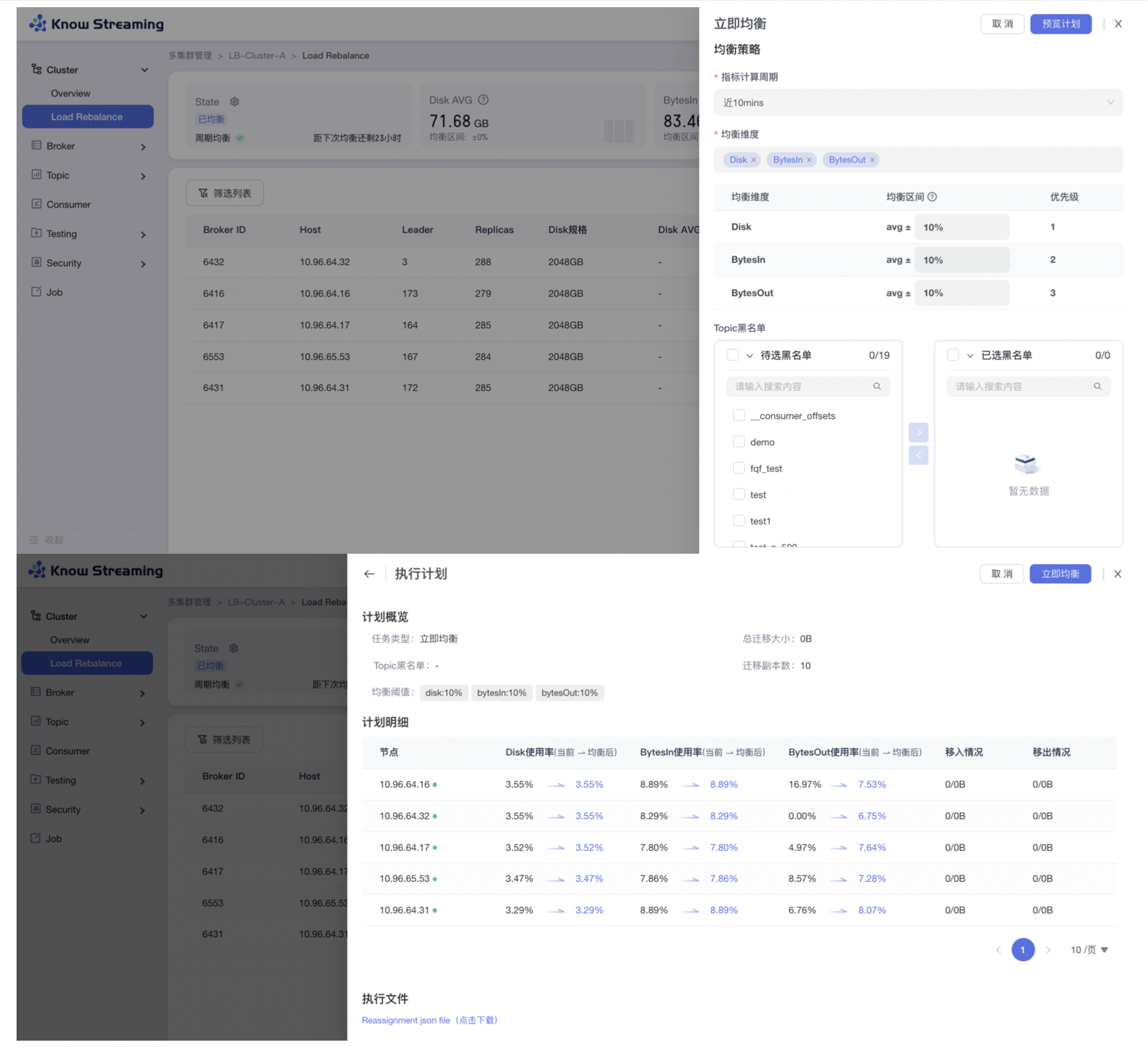

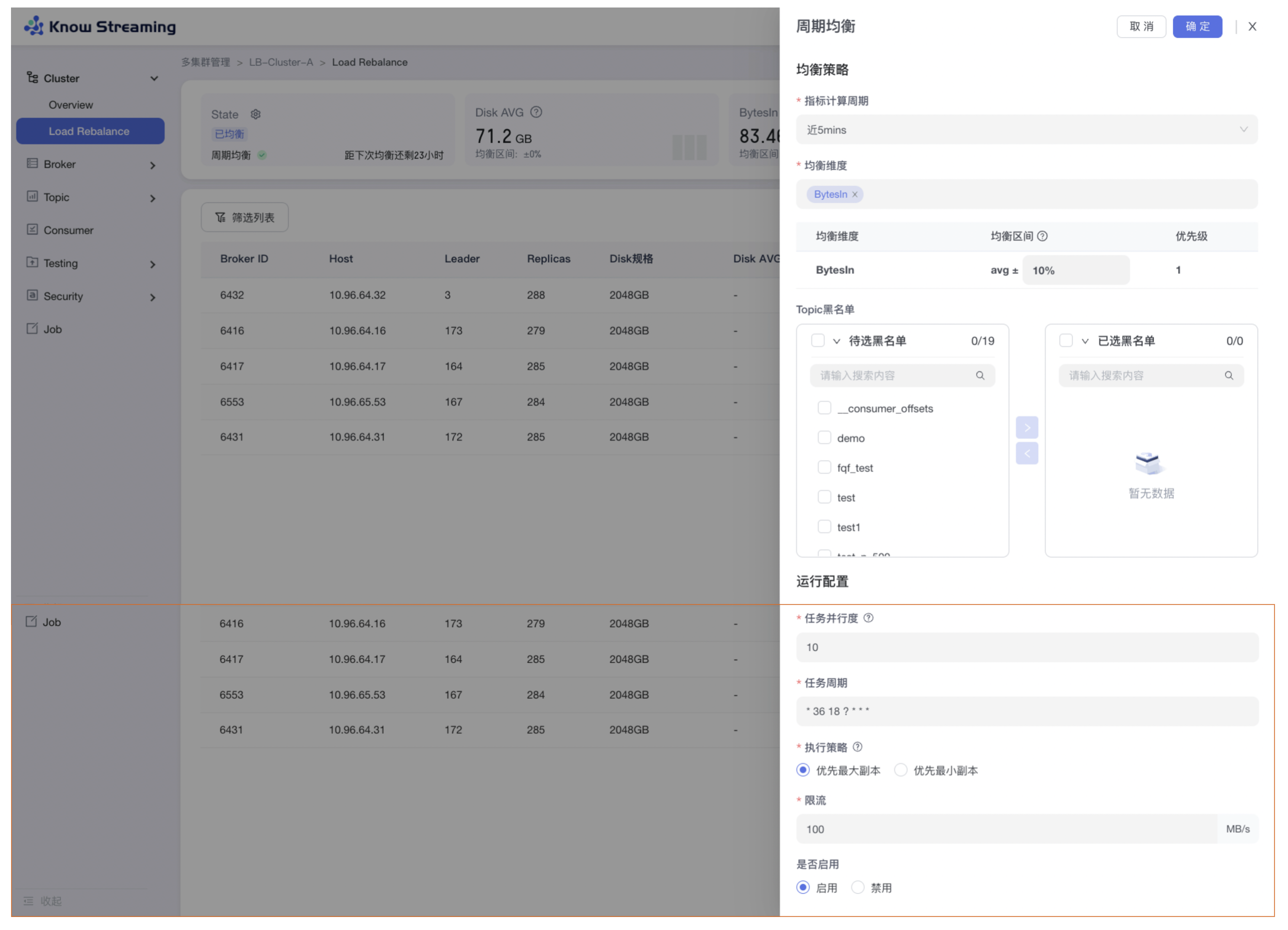

- 增加 Cluster 层 I/O、Disk 的 Load Reblance 功能,支持定时均衡任务(企业版)

|

|

||||||

- 删除限流、鉴权功能

|

|

||||||

- 删除 APPID 概念

|

|

||||||

|

|

||||||

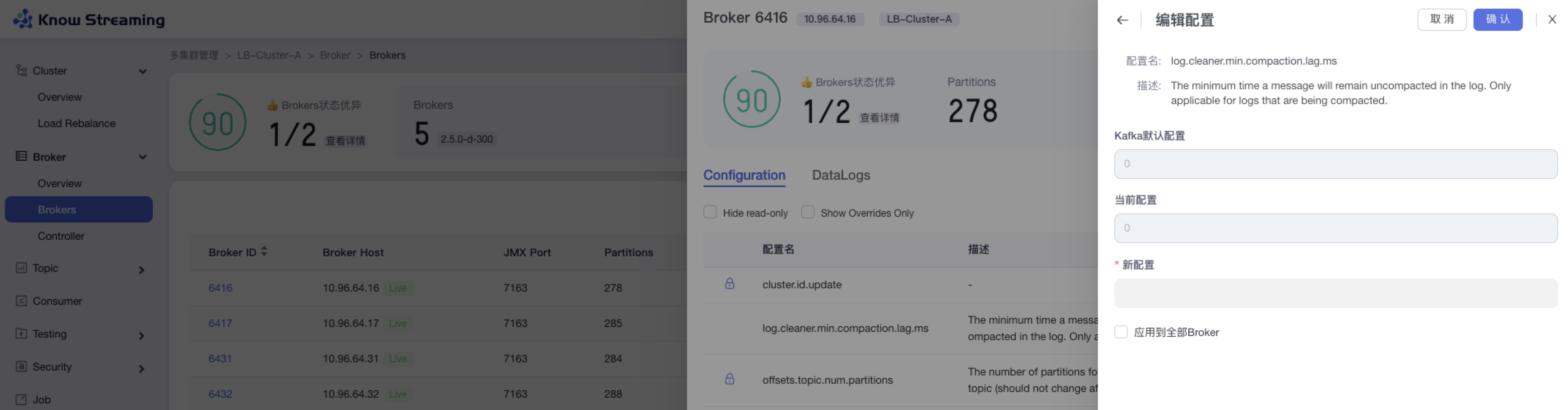

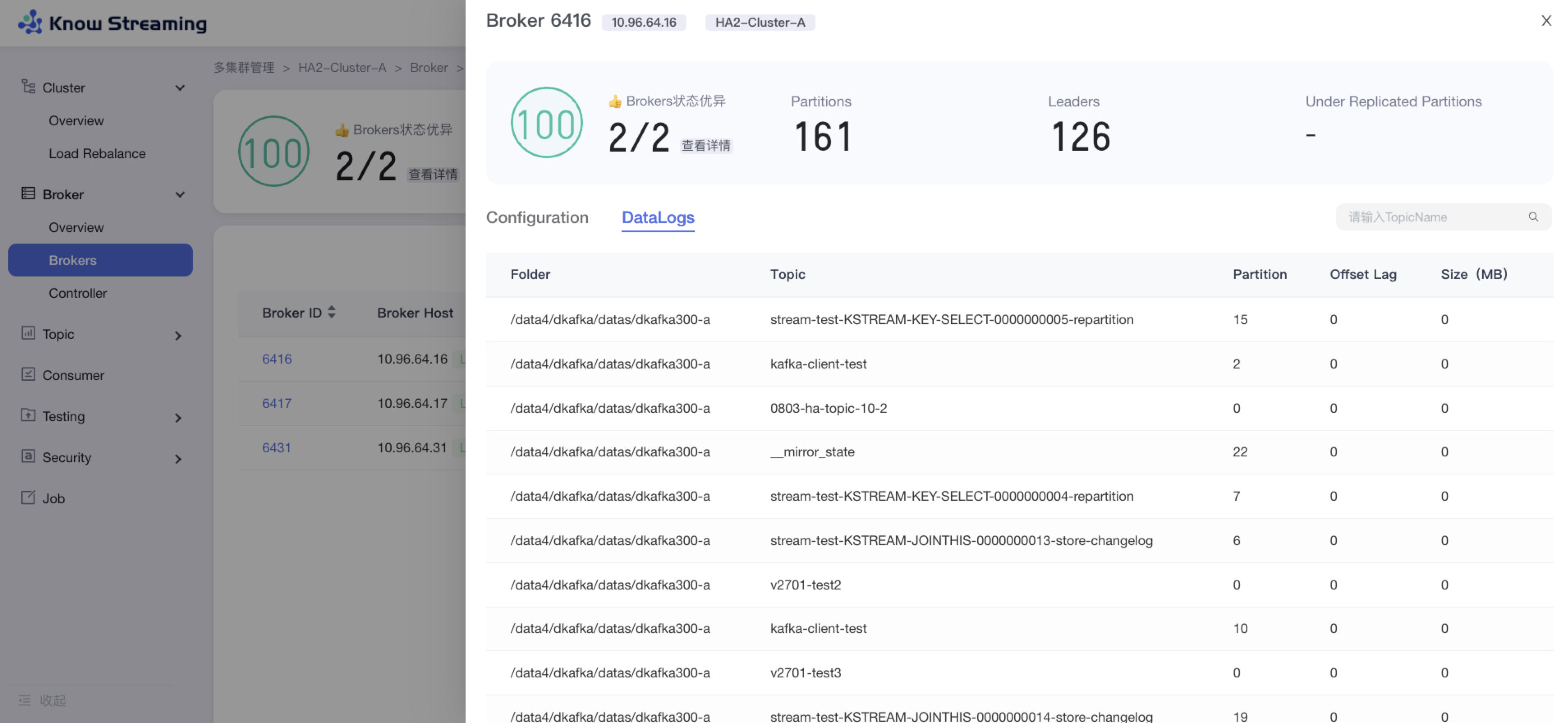

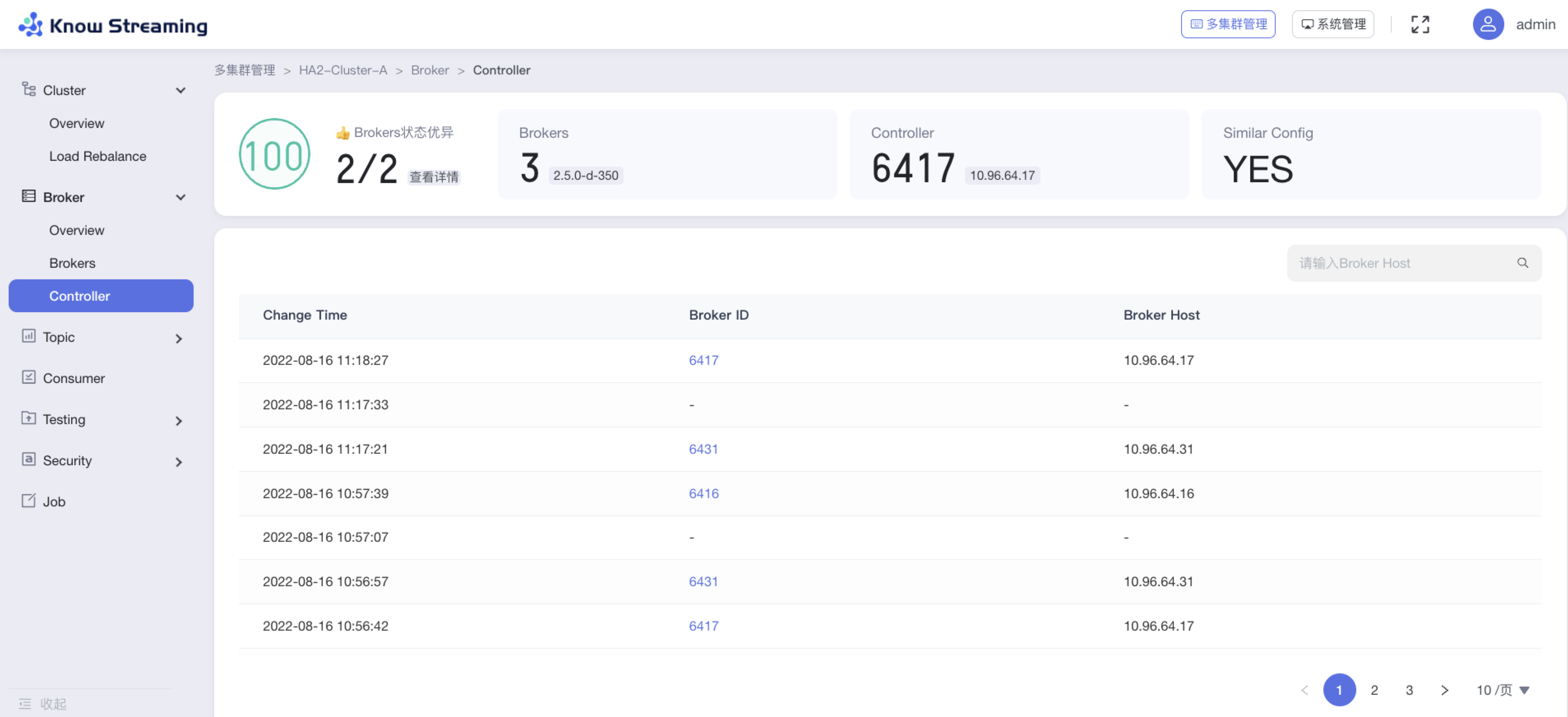

**3、Broker 管理**

|

|

||||||

|

|

||||||

- 增加 Broker 健康分

|

|

||||||

- 增加 Broker 关键指标统计和 GUI 展示,支持自定义配置

|

|

||||||

- 增加 Broker 参数配置功能,需重启生效

|

|

||||||

- 增加 Controller 变更记录

|

|

||||||

- 增加 Broker Datalogs 记录

|

|

||||||

- 删除 Leader Rebalance 功能

|

|

||||||

- 删除 Broker 优先副本选举

|

|

||||||

|

|

||||||

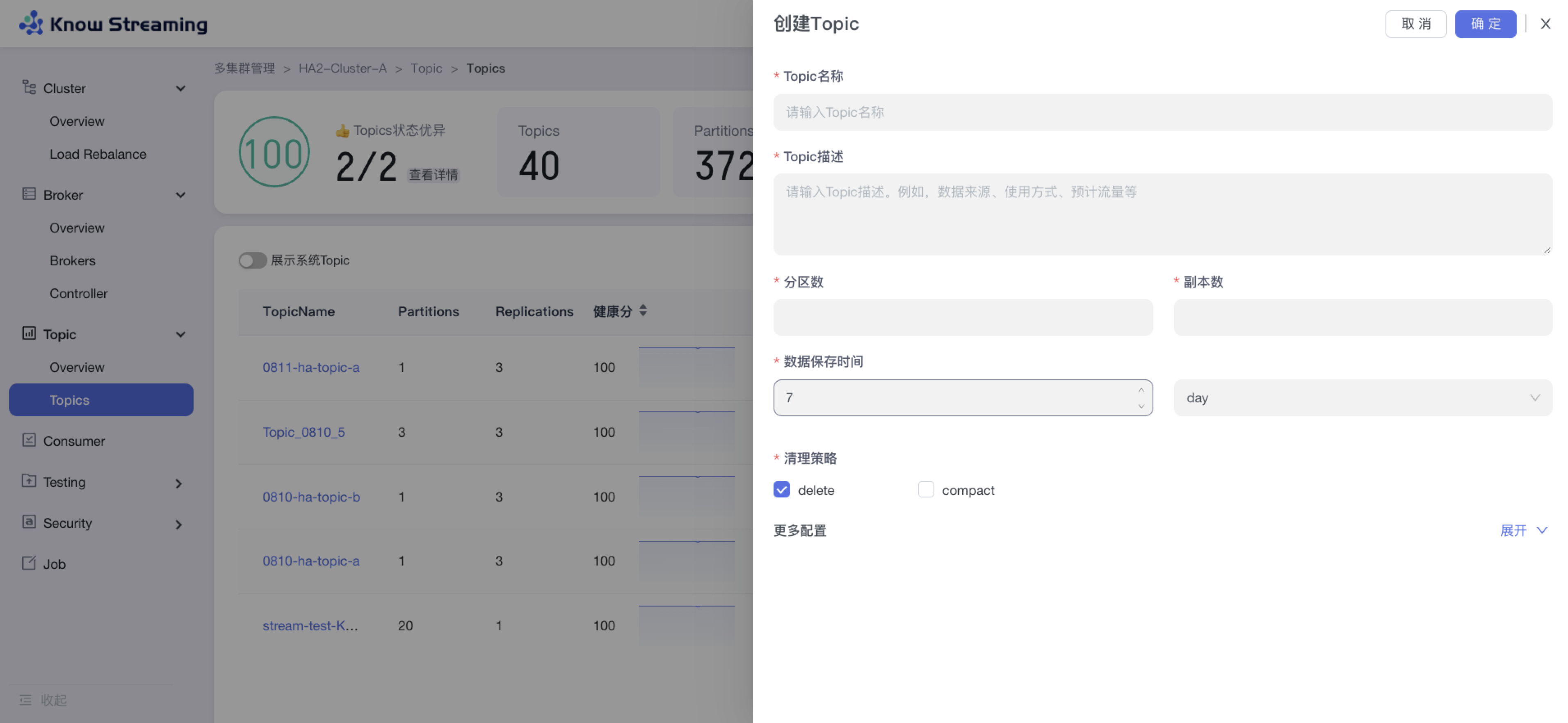

**4、Topic 管理**

|

|

||||||

|

|

||||||

- 增加 Topic 健康分

|

|

||||||

- 增加 Topic 关键指标统计和 GUI 展示,支持自定义配置

|

|

||||||

- 增加 Topic 参数配置功能,可实时生效

|

|

||||||

- 增加 Topic 批量迁移、Topic 批量扩缩副本功能

|

|

||||||

- 增加查看系统 Topic 功能

|

|

||||||

- 优化 Partition 分布的 GUI 展示

|

|

||||||

- 优化 Topic Message 数据采样

|

|

||||||

- 删除 Topic 过期概念

|

|

||||||

- 删除 Topic 申请配额功能

|

|

||||||

|

|

||||||

**5、Consumer 管理**

|

|

||||||

|

|

||||||

- 优化了 ConsumerGroup 展示形式,增加 Consumer Lag 的 GUI 展示

|

|

||||||

|

|

||||||

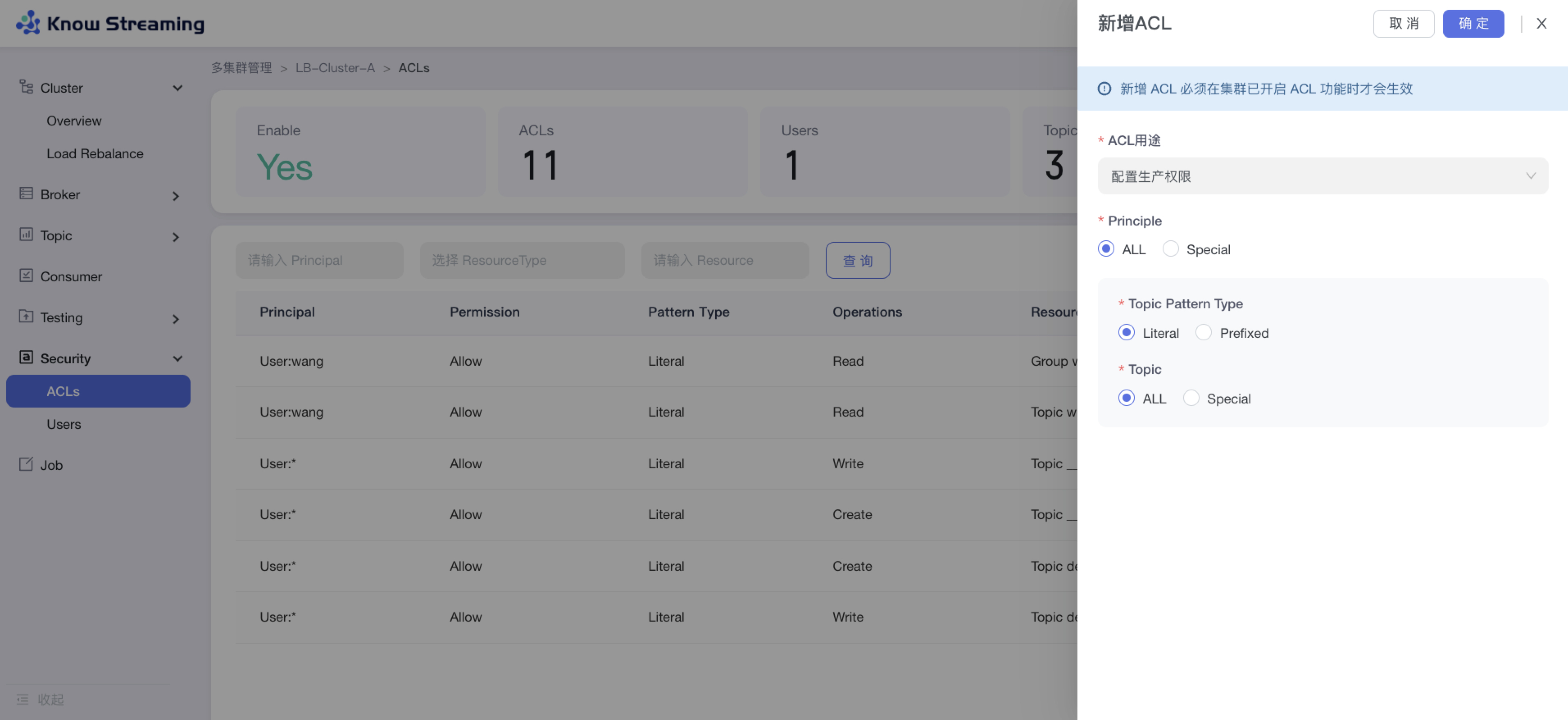

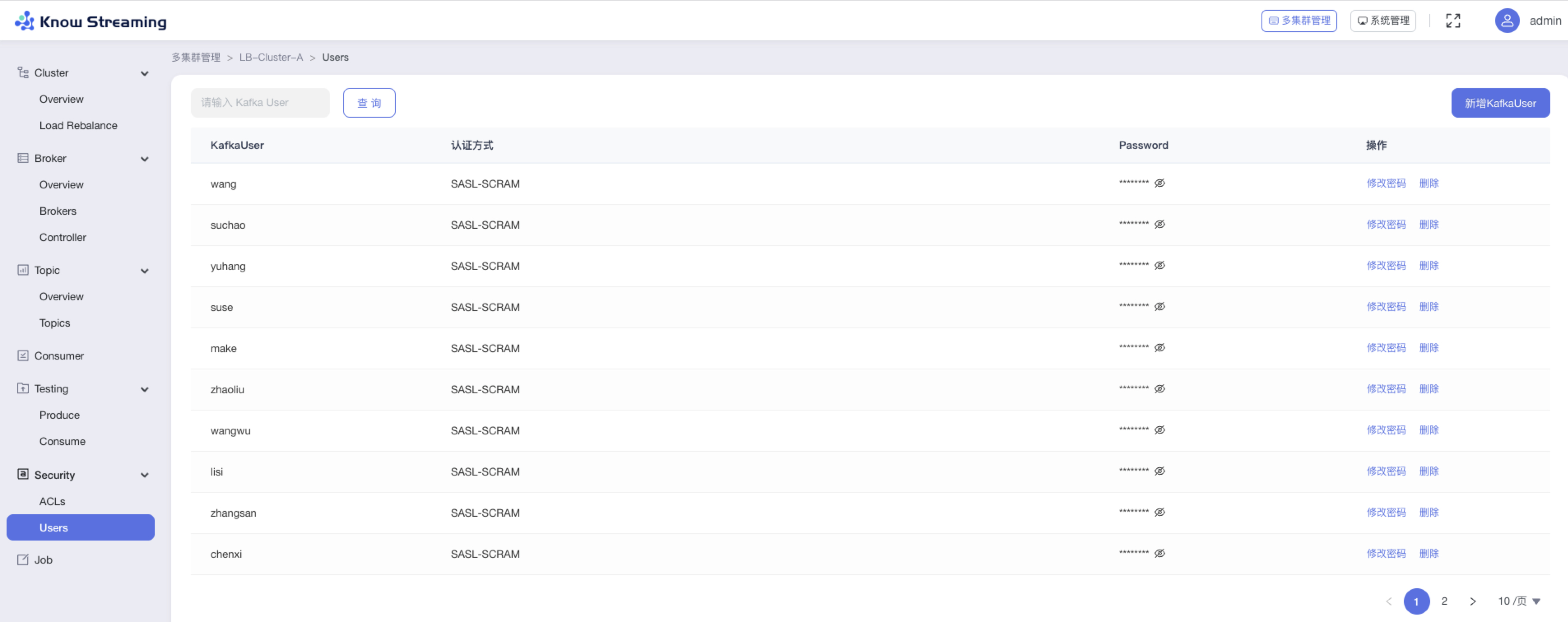

**6、ACL 管理**

|

|

||||||

|

|

||||||

- 增加原生 ACL GUI 配置功能,可配置生产、消费、自定义多种组合权限

|

|

||||||

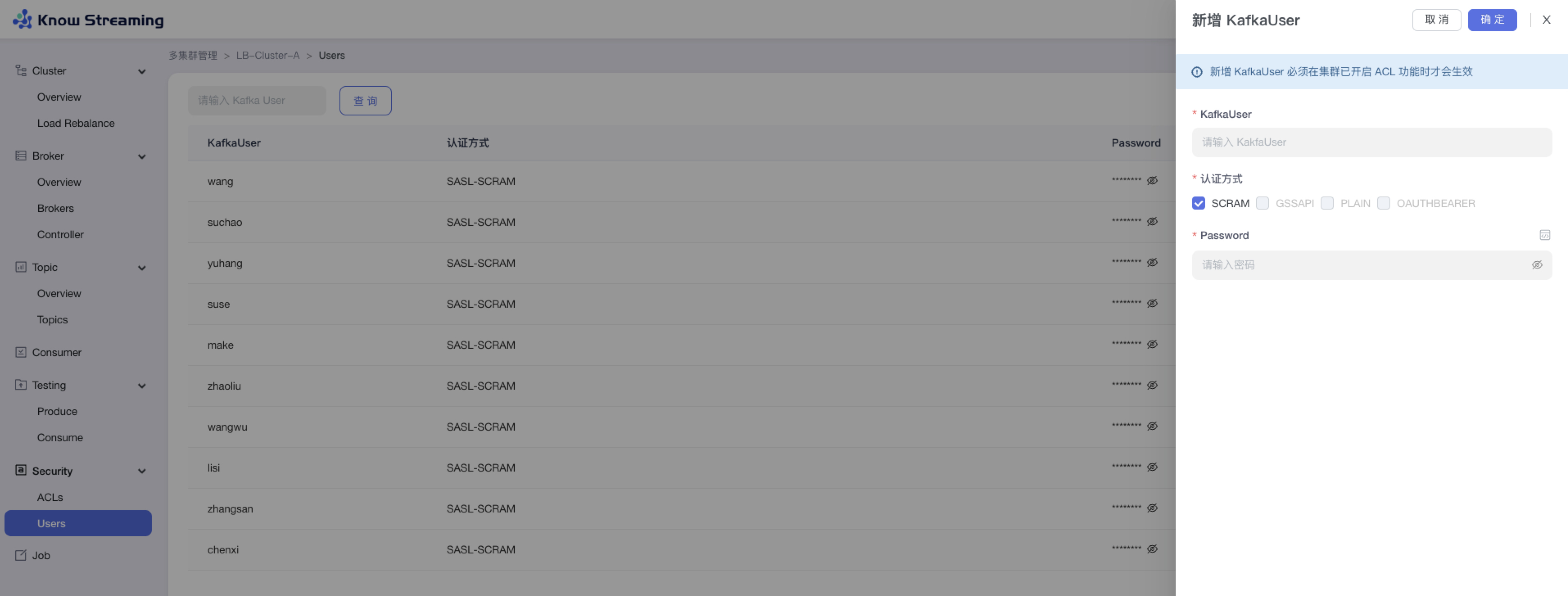

- 增加 KafkaUser 功能,可自定义新增 KafkaUser

|

|

||||||

|

|

||||||

**7、消息测试(企业版)**

|

|

||||||

|

|

||||||

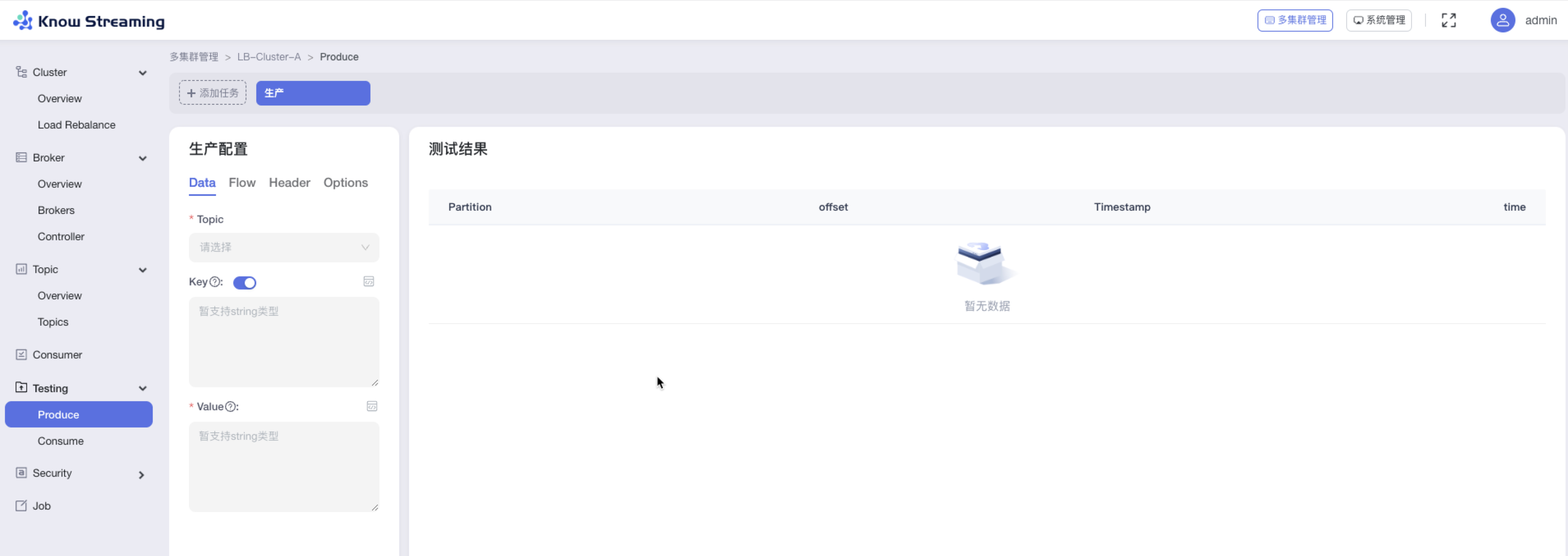

- 增加生产者消息模拟器,支持 Data、Flow、Header、Options 自定义配置(企业版)

|

|

||||||

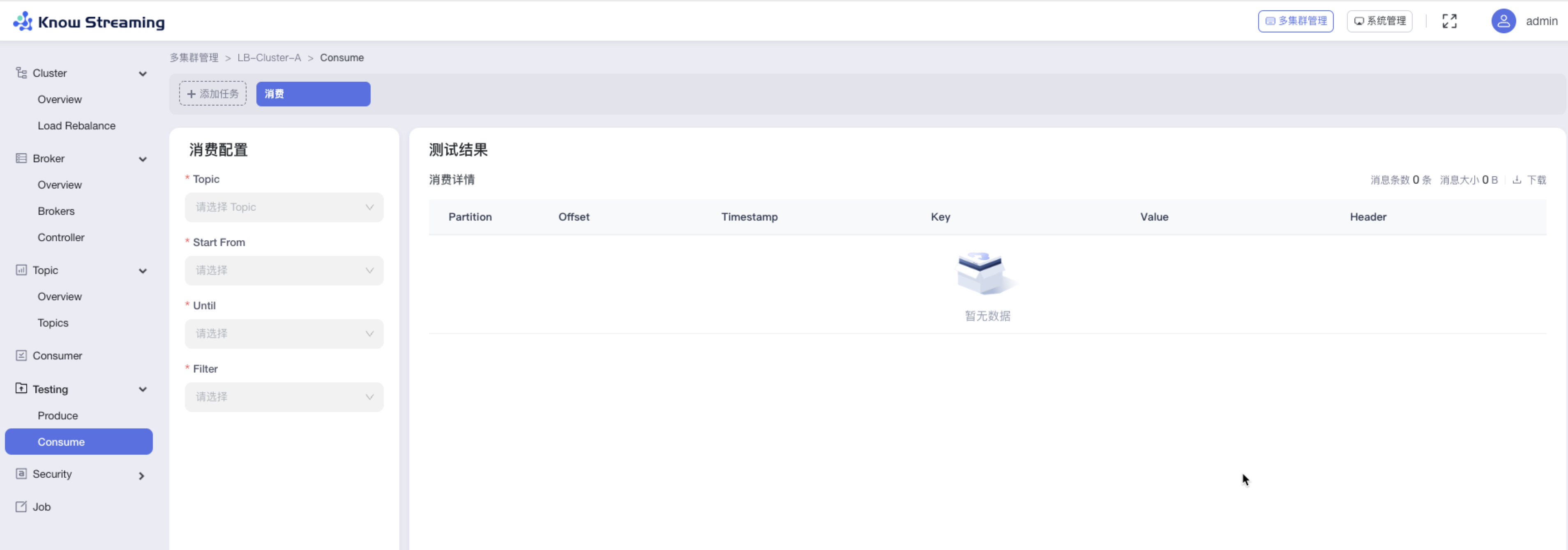

- 增加消费者消息模拟器,支持 Data、Flow、Header、Options 自定义配置(企业版)

|

|

||||||

|

|

||||||

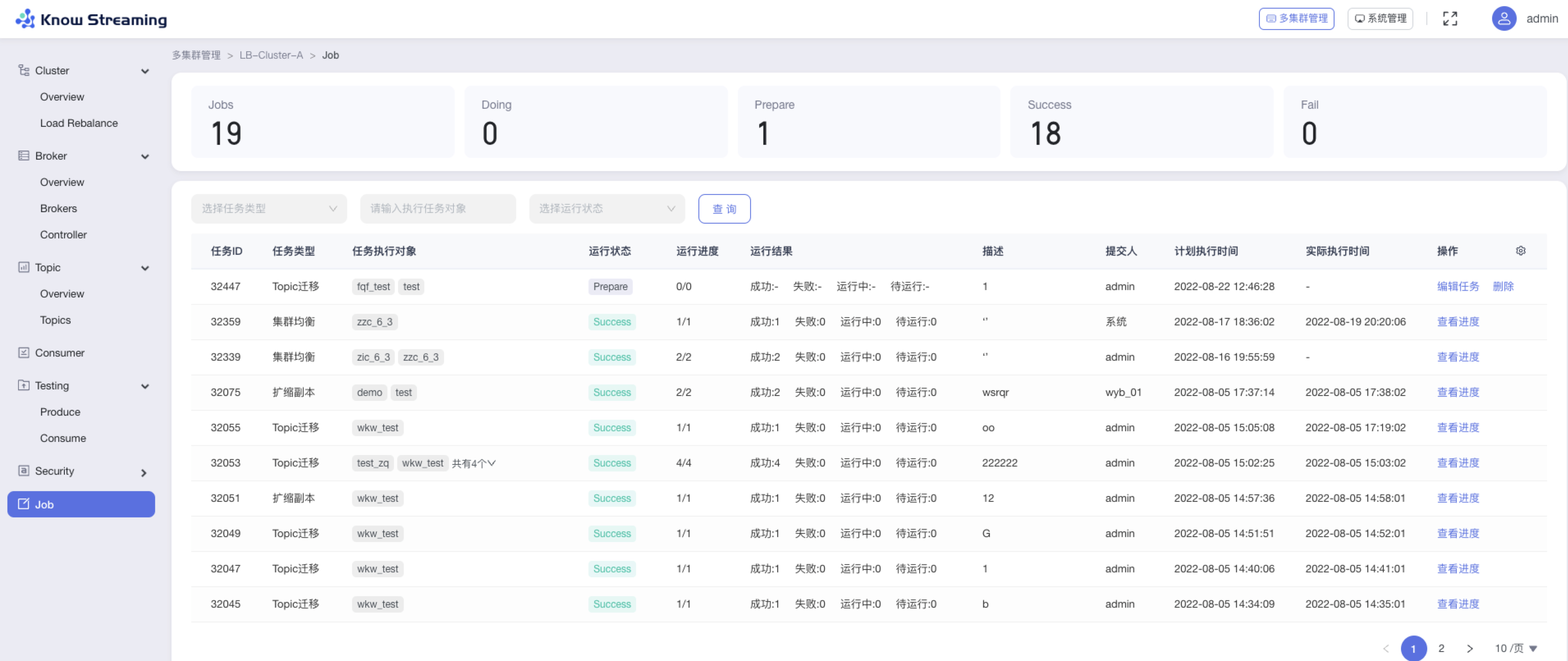

**8、Job**

|

|

||||||

|

|

||||||

- 优化 Job 模块,支持任务进度管理

|

|

||||||

|

|

||||||

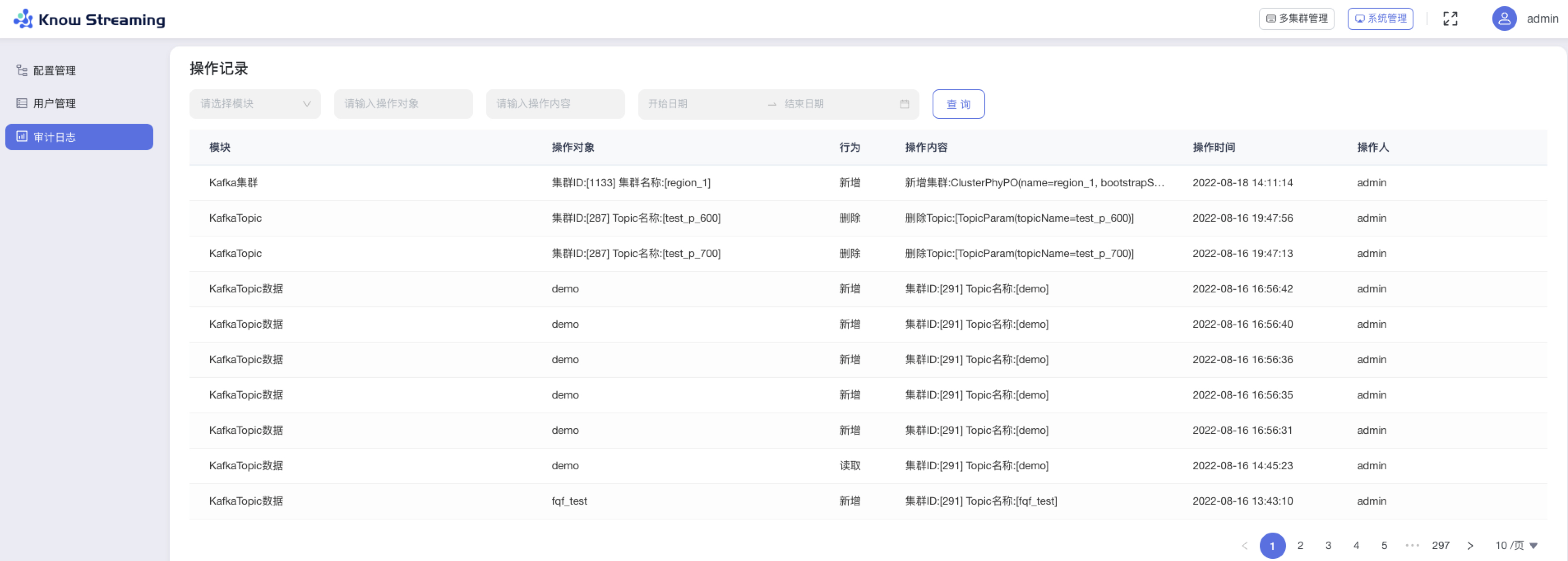

**9、系统管理**

|

|

||||||

|

|

||||||

- 优化用户、角色管理体系,支持自定义角色配置页面及操作权限

|

|

||||||

- 优化审计日志信息

|

|

||||||

- 删除多租户体系

|

|

||||||

- 删除工单流程

|

|

||||||

|

|

||||||

---

|

|

||||||

|

|

||||||

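## v2.6.0

Release date: 2022-01-24

### New Capabilities

- Added a simple backoff utility class

### Experience Improvements

- Added documentation for periodic tasks
- Added documentation on cluster installation, deployment, and usage
- Upgraded Swagger, SpringFramework, SpringBoot, and ECharts
- Improved log output of the Task module
- Fixed the silent exit (no log message) when a cron expression fails to parse
- Added department, email, and other information when onboarding LDAP users
- Added a backoff mechanism after connection failures in the JMX module and improved its error logs
- Made thread pools and client pools configurable
- Removed the unused jmx_prometheus_javaagent-0.14.0.jar
- Improved migration task names
- Fixed Region capacity information not being updated immediately when a Region is created
- Introduced lombok
- Updated the video tutorials
- The LogiKM address in kcm_script.sh can now be passed in by the program
- Added a switch to control whether third-party and gateway APIs skip login
- Configuration for the extends module no longer has to be set in application.yml

### Bug Fixes

- Fixed the SQL syntax error raised when batch-writing an empty metrics array to the DB
- Fixed the version not changing when gateway configuration is added or modified
- Fixed the tooltip being obscured on the cluster list page
- Fixed failures to parse the metadata protocol of newer Broker versions
- Fixed the missing application.yml error when running the Dockerfile
- Fixed a NullPointerException when updating a logical cluster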

## v2.5.0

Release date: 2021-07-10

### Experience Improvements

- Renamed the product to LogiKM
- Updated the product icon

## v2.4.1+

Release date: 2021-05-21

### New Capabilities

- Added an API for directly adding permissions and quotas (v2.4.1)
- Added the ability for API calls to bypass login (v2.4.1)

### Experience Improvements

- Upgraded Tomcat to 8.5.66 (v2.4.2)
- Improved the op APIs by splitting the util API into topic and leader APIs (v2.4.1)
- Shortened Gateway configuration keys (v2.4.1)

### Bug Fixes

- Fixed the incorrect version shown on the page (v2.4.2)

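## v2.4.0

Release date: 2021-05-18

### New Capabilities

- Added a switch for automatic approval of Apps and Topics
- Added Rack information to Broker metadata
- Upgraded the MySQL driver to support MySQL 8+
- Added an operation record query page

### Experience Improvements

- Improved the FAQ explanation of alert groups
- Clarified the shared and dedicated cluster concepts in the user manual
- The user management page now prevents users from deleting themselves (frontend restriction)

### Bug Fixes

- Fixed the failing Topic creation API in the op-util class
- Fixed the periodic task that syncs Topics to the DB by querying the Topic list directly from the DB instead of the cache
- Fixed application offline approval failing by filtering out records whose permission is 0 (no permission)
- Fixed login and permission bypass vulnerabilities
- Fixed buttons such as "Add Cluster" and "Pause Monitoring" being shown to the developer role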

## v2.3.0

Release date: 2021-02-08

### New Capabilities

- Added support for Docker deployment
- Brokers can now be designated as candidate controllers
- Gateway configurations can now be added and managed
- Consumer group status can now be retrieved
- Added JMX authentication for clusters

### Experience Improvements

- Improved the flows for editing user roles and changing passwords
- Added search by consumerID
- Improved the prompt text for "Topic connection information", "Reset consumer group offset", and "Modify Topic retention time"
- Added links to the "Resource Application Document" in the relevant places

### Bug Fixes

- Fixed the incorrect time axis on Broker monitoring charts
- Fixed the incorrect unit of the alert period used when creating Nightingale (夜莺) monitoring alert rules

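## v2.2.0

Release date: 2021-01-25

### New Capabilities

- Improved the batch operation flow for work orders
- Added retrieval of real-time 75th/99th percentile latency data for Topics
- Added a scheduled task that periodically writes ownerless Topics not yet stored in the DB into the DB

### Experience Improvements

- Added links to the "Cluster Access Document" in the relevant places
- Clarified the meaning of physical and logical clusters
- The Topic details page and the Topic partition expansion dialog now show the Region the Topic belongs to
- Improved the flow for configuring Topic data retention time during Topic approval
- Improved error messages for Topic/application requests and approvals
- Improved the action label text for Topic data sampling
- Improved the prompt shown when operators delete a Topic
- Improved the deletion logic and prompt when operators delete a Region
- Improved the prompt shown when operators delete a logical cluster
- Improved the file type restrictions for uploading cluster configuration files

### Bug Fixes

- Fixed incorrect special character validation when entering an application name
- Fixed regular users being able to access application details without authorization
- Fixed the data compression format being unavailable after a Kafka version upgrade
- Fixed logical clusters or Topics still being displayed after deletion
- Fixed the result of a Leader rebalance operation being reported more than once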

## v2.1.0

Release date: 2020-12-19

### Experience Improvements

- Improved the background style shown while pages load
- Improved the flow for regular users to request Topic permissions
- Improved the permission restrictions on Topic quota and partition requests
- Improved the prompt text for revoking Topic permissions
- Improved the field names in the quota request form
- Improved the flow for resetting consumer offsets
- Improved the form for creating Topic migration tasks
- Improved the dialog style for Topic partition expansion
- Improved the style of cluster Broker monitoring charts
- Improved the form for creating logical clusters
- Improved the prompt text for cluster security protocols

### Bug Fixes

- Fixed intermittent failures when resetting consumer offsets

@@ -1,16 +0,0 @@

#!/bin/bash

# Resolve the libs directory of this installation; it is used below to tell
# this instance apart from any other ks-km process on the host.
cd "$(dirname "$0")/../libs" || exit 1
target_dir=$(pwd)

pid=$(ps ax | grep -i 'ks-km' | grep "${target_dir}" | grep java | grep -v grep | awk '{print $1}')
if [ -z "$pid" ] ; then
    echo "No ks-km running."
    # Exit statuses must be in the range 0-255, so use 1 rather than -1.
    exit 1
fi

echo "The ks-km (${pid}) is running..."

# Left unquoted on purpose: ${pid} may hold several PIDs.
kill ${pid}

echo "Send shutdown request to ks-km (${pid}) OK"
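The PID lookup in the script above is a chain of text filters over `ps ax` output. A minimal sketch of what each filter contributes, run against made-up sample output rather than a live process table; the function name `find_ks_km_pid`, the sample lines, and the paths are illustrative assumptions, not part of the original script:

```shell
#!/bin/bash
# Hypothetical helper: the same filter chain as the script, but reading
# `ps ax`-style text from stdin so it can be tried without a running service.
# $1 is the libs directory of the installation to look for.
find_ks_km_pid() {
    grep -i 'ks-km' | grep "$1" | grep java | grep -v grep | awk '{print $1}'
}

# Made-up sample: one matching JVM, one grep process, one JVM from another install.
sample_ps_output='  101 ?  Sl  0:10 /usr/bin/java -jar /opt/ks/libs/ks-km.jar
  202 ?  S   0:00 grep -i ks-km
  303 ?  Sl  0:05 /usr/bin/java -jar /srv/other/libs/ks-km.jar'

pid=$(printf '%s\n' "$sample_ps_output" | find_ks_km_pid /opt/ks/libs)
echo "$pid"   # prints 101
```

Filtering on the install directory is what lets two installations on one host be stopped independently. If several processes match, `pid` holds all of them, one per line.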

@@ -1,82 +0,0 @@

error_exit ()
{
    echo "ERROR: $1 !!"
    exit 1
}

# Fall back to common JDK locations when JAVA_HOME does not point at a JVM.
[ ! -e "$JAVA_HOME/bin/java" ] && JAVA_HOME=$HOME/jdk/java
[ ! -e "$JAVA_HOME/bin/java" ] && JAVA_HOME=/usr/java
[ ! -e "$JAVA_HOME/bin/java" ] && unset JAVA_HOME

if [ -z "$JAVA_HOME" ]; then
  if [ "Darwin" = "$(uname -s)" ]; then

    if [ -x '/usr/libexec/java_home' ] ; then
      export JAVA_HOME=`/usr/libexec/java_home`

    elif [ -d "/System/Library/Frameworks/JavaVM.framework/Versions/CurrentJDK/Home" ]; then
      export JAVA_HOME="/System/Library/Frameworks/JavaVM.framework/Versions/CurrentJDK/Home"
    fi
  else
    JAVA_PATH=`dirname $(readlink -f $(which javac))`
    if [ "x$JAVA_PATH" != "x" ]; then
      export JAVA_HOME=`dirname $JAVA_PATH 2>/dev/null`
    fi
  fi
  if [ -z "$JAVA_HOME" ]; then
    error_exit "Please set the JAVA_HOME variable in your environment, We need java(x64)! jdk8 or later is better!"
  fi
fi

export WEB_SERVER="ks-km"
export JAVA_HOME
export JAVA="$JAVA_HOME/bin/java"
export BASE_DIR=`cd $(dirname $0)/..; pwd`
export CUSTOM_SEARCH_LOCATIONS=file:${BASE_DIR}/conf/

#===========================================================================================
# JVM Configuration
#===========================================================================================

JAVA_OPT="${JAVA_OPT} -server -Xms2g -Xmx2g -Xmn1g -XX:MetaspaceSize=128m -XX:MaxMetaspaceSize=320m"
JAVA_OPT="${JAVA_OPT} -XX:-OmitStackTraceInFastThrow -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=${BASE_DIR}/logs/java_heapdump.hprof"

## Some JVM options are deprecated on newer JDK versions
JAVA_MAJOR_VERSION=$($JAVA -version 2>&1 | sed -E -n 's/.* version "([0-9]*).*$/\1/p')
if [[ "$JAVA_MAJOR_VERSION" -ge "9" ]] ; then
  JAVA_OPT="${JAVA_OPT} -Xlog:gc*:file=${BASE_DIR}/logs/km_gc.log:time,tags:filecount=10,filesize=102400"
else
  JAVA_OPT="${JAVA_OPT} -Djava.ext.dirs=${JAVA_HOME}/jre/lib/ext:${JAVA_HOME}/lib/ext"
  JAVA_OPT="${JAVA_OPT} -Xloggc:${BASE_DIR}/logs/km_gc.log -verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps -XX:+PrintGCTimeStamps -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=10 -XX:GCLogFileSize=100M"

fi

JAVA_OPT="${JAVA_OPT} -jar ${BASE_DIR}/libs/${WEB_SERVER}.jar"
JAVA_OPT="${JAVA_OPT} --spring.config.additional-location=${CUSTOM_SEARCH_LOCATIONS}"
JAVA_OPT="${JAVA_OPT} --logging.config=${BASE_DIR}/conf/logback-spring.xml"
JAVA_OPT="${JAVA_OPT} --server.max-http-header-size=524288"

if [ ! -d "${BASE_DIR}/logs" ]; then
  mkdir -p "${BASE_DIR}/logs"
fi

echo "$JAVA ${JAVA_OPT}"

# check the start.out log output file
if [ ! -f "${BASE_DIR}/logs/start.out" ]; then
  touch "${BASE_DIR}/logs/start.out"
fi

# start
# Write the header in the foreground so it cannot race with the JVM output appended below.
echo -e "---- Startup command ------\n $JAVA ${JAVA_OPT}" > ${BASE_DIR}/logs/start.out 2>&1

nohup $JAVA ${JAVA_OPT} >> ${BASE_DIR}/logs/start.out 2>&1 &

echo "${WEB_SERVER} is starting, you can check the ${BASE_DIR}/logs/start.out"
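The startup script chooses its GC logging flags from the major version it parses out of `java -version`. A minimal sketch of that parsing and branch, run on sample version banners instead of a real JVM; the function names `java_major_version` and `gc_log_style` and the sample strings are illustrative assumptions:

```shell
#!/bin/bash
# Hypothetical helper: applies the script's sed expression to a version banner
# passed as an argument instead of the live output of `java -version`.
java_major_version() {
    printf '%s\n' "$1" | sed -E -n 's/.* version "([0-9]*).*$/\1/p'
}

# Hypothetical helper: mirrors the script's branch on the parsed major version.
gc_log_style() {
    if [ "$1" -ge 9 ]; then
        echo "unified"   # -Xlog:gc* (JDK 9 and later)
    else
        echo "legacy"    # -Xloggc plus the -XX:+PrintGC* flags
    fi
}

v11=$(java_major_version 'openjdk version "11.0.2" 2019-01-15')
v8=$(java_major_version 'java version "1.8.0_292"')
echo "$v11 -> $(gc_log_style "$v11")"   # prints: 11 -> unified
echo "$v8 -> $(gc_log_style "$v8")"     # prints: 1 -> legacy
```

Because JDK 8 reports itself as `1.8.x`, the expression yields `1` rather than `8` for it; that value is still below 9, so the legacy flags are selected as intended.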

@@ -1,111 +0,0 @@

<mxfile host="65bd71144e">
<diagram id="vxzhwhZdNVAY19FZ4dgb" name="Page-1">
<mxGraphModel dx="1194" dy="733" grid="0" gridSize="10" guides="1" tooltips="1" connect="1" arrows="1" fold="1" page="1" pageScale="1" pageWidth="1169" pageHeight="827" math="0" shadow="0">
<root>
<mxCell id="0"/>
<mxCell id="1" parent="0"/>
<mxCell id="4" style="edgeStyle=none;html=1;exitX=0.5;exitY=1;exitDx=0;exitDy=0;startArrow=none;strokeWidth=2;strokeColor=#6666FF;" edge="1" parent="1" source="16">
<mxGeometry relative="1" as="geometry">
<mxPoint x="200" y="540" as="targetPoint"/>
</mxGeometry>
</mxCell>
<mxCell id="7" style="edgeStyle=none;html=1;exitX=1;exitY=0.5;exitDx=0;exitDy=0;exitPerimeter=0;strokeColor=#33FF33;strokeWidth=2;" edge="1" parent="1" source="2">
<mxGeometry relative="1" as="geometry">
<mxPoint x="360" y="240" as="targetPoint"/>
</mxGeometry>
</mxCell>
<mxCell id="5" style="edgeStyle=none;html=1;startArrow=none;strokeColor=#33FF33;strokeWidth=2;" edge="1" parent="1">
<mxGeometry relative="1" as="geometry">
<mxPoint x="200" y="400" as="targetPoint"/>
<mxPoint x="360" y="360" as="sourcePoint"/>
</mxGeometry>
</mxCell>
<mxCell id="3" value="C3" style="verticalLabelPosition=middle;verticalAlign=middle;html=1;shape=mxgraph.flowchart.on-page_reference;labelPosition=center;align=center;strokeColor=#FF8000;strokeWidth=2;" vertex="1" parent="1">
<mxGeometry x="340" y="280" width="40" height="40" as="geometry"/>
</mxCell>
<mxCell id="18" style="edgeStyle=none;html=1;entryX=0.5;entryY=0;entryDx=0;entryDy=0;entryPerimeter=0;endArrow=none;endFill=0;strokeColor=#FF8000;strokeWidth=2;" edge="1" parent="1" source="8" target="3">
<mxGeometry relative="1" as="geometry"/>
</mxCell>
<mxCell id="8" value="fix_928" style="rounded=1;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=0;" vertex="1" parent="1">
<mxGeometry x="320" y="40" width="80" height="40" as="geometry"/>
</mxCell>
<mxCell id="9" value="github_master" style="rounded=1;whiteSpace=wrap;html=1;absoluteArcSize=1;arcSize=14;strokeWidth=0;" vertex="1" parent="1">
<mxGeometry x="160" y="40" width="80" height="40" as="geometry"/>
</mxCell>
<mxCell id="10" value="" style="edgeStyle=none;html=1;exitX=0.5;exitY=1;exitDx=0;exitDy=0;endArrow=classic;startArrow=none;endFill=1;strokeWidth=2;strokeColor=#6666FF;" edge="1" parent="1" source="11" target="2">
<mxGeometry relative="1" as="geometry">
<mxPoint x="200" y="640" as="targetPoint"/>
<mxPoint x="200" y="80" as="sourcePoint"/>
</mxGeometry>
</mxCell>
<mxCell id="2" value="C2" style="verticalLabelPosition=middle;verticalAlign=middle;html=1;shape=mxgraph.flowchart.on-page_reference;labelPosition=center;align=center;strokeColor=#6666FF;strokeWidth=2;" vertex="1" parent="1">
<mxGeometry x="180" y="200" width="40" height="40" as="geometry"/>
</mxCell>
<mxCell id="12" value="" style="edgeStyle=none;html=1;exitX=0.5;exitY=1;exitDx=0;exitDy=0;endArrow=classic;endFill=1;strokeWidth=2;strokeColor=#6666FF;" edge="1" parent="1" source="9" target="11">
<mxGeometry relative="1" as="geometry">
<mxPoint x="200" y="200" as="targetPoint"/>
<mxPoint x="200" y="80" as="sourcePoint"/>
</mxGeometry>
</mxCell>
<mxCell id="11" value="C1" style="verticalLabelPosition=middle;verticalAlign=middle;html=1;shape=mxgraph.flowchart.on-page_reference;labelPosition=center;align=center;strokeColor=#6666FF;strokeWidth=2;" vertex="1" parent="1">
<mxGeometry x="180" y="120" width="40" height="40" as="geometry"/>
</mxCell>
<mxCell id="23" style="edgeStyle=none;html=1;exitX=0.5;exitY=1;exitDx=0;exitDy=0;exitPerimeter=0;endArrow=none;endFill=0;strokeColor=#FF8000;strokeWidth=2;" edge="1" parent="1" source="3">
<mxGeometry relative="1" as="geometry">
<mxPoint x="360" y="360" as="targetPoint"/>
<mxPoint x="360" y="400" as="sourcePoint"/>
</mxGeometry>
</mxCell>
<mxCell id="17" value="" style="edgeStyle=none;html=1;exitX=0.5;exitY=1;exitDx=0;exitDy=0;startArrow=none;endArrow=none;strokeWidth=2;strokeColor=#6666FF;" edge="1" parent="1" source="2" target="16">
<mxGeometry relative="1" as="geometry">
<mxPoint x="200" y="640" as="targetPoint"/>
<mxPoint x="200" y="240" as="sourcePoint"/>
</mxGeometry>
</mxCell>
<mxCell id="16" value="C4" style="verticalLabelPosition=middle;verticalAlign=middle;html=1;shape=mxgraph.flowchart.on-page_reference;labelPosition=center;align=center;strokeColor=#6666FF;strokeWidth=2;" vertex="1" parent="1">