mirror of

https://github.com/didi/KnowStreaming.git

synced 2026-01-16 21:34:31 +08:00

Add km module kafka


243

docs/zh/Kafka分享/Kafka Controller /Controller与Brokers之间的网络通信.md

Normal file


@@ -0,0 +1,243 @@


## 前言

|

||||

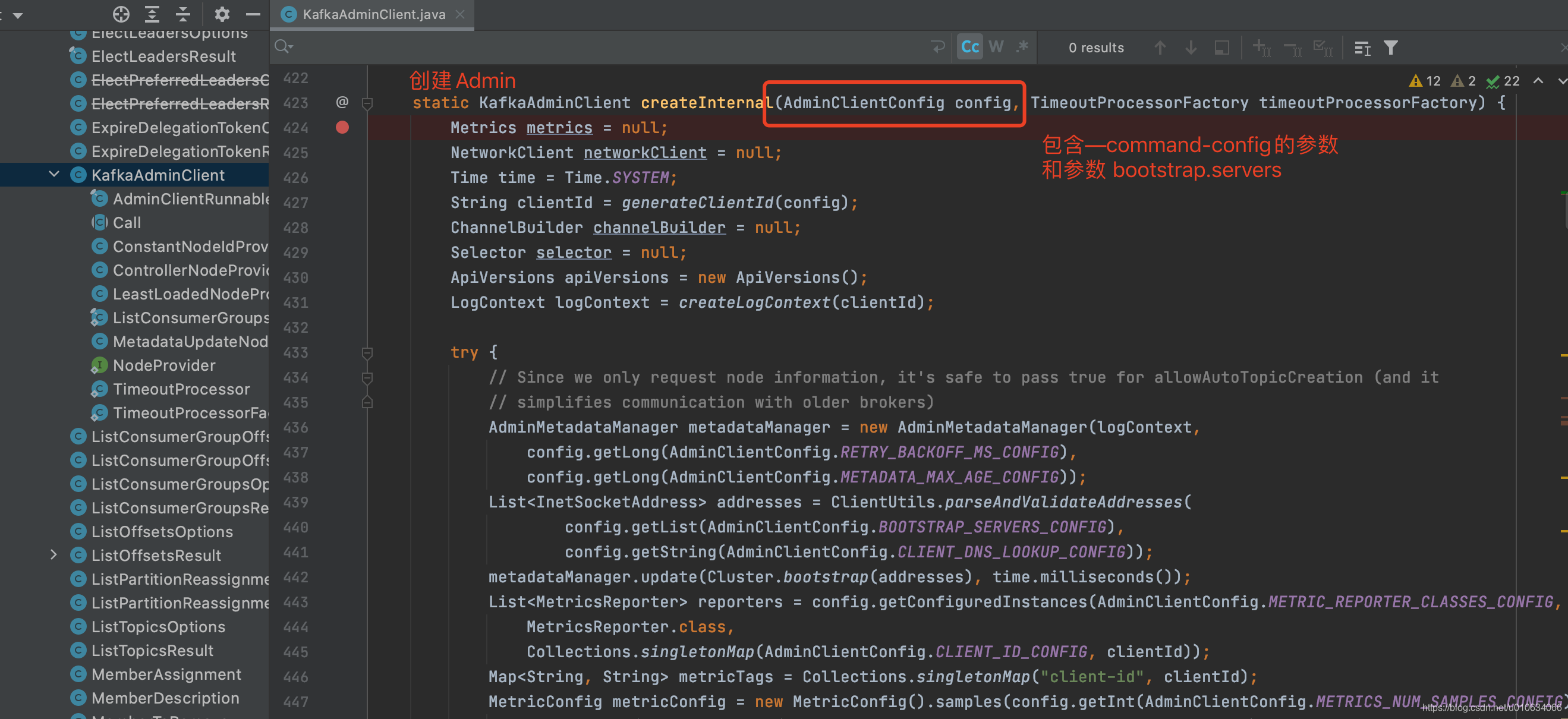

之前我们有解析过[【kafka源码】Controller启动过程以及选举流程源码分析](), 其中在分析过程中,Broker在当选Controller之后,需要初始化Controller的上下文中, 有关于Controller与Broker之间的网络通信的部分我没有细讲,因为这个部分我想单独来讲;所以今天 我们就来好好分析分析**Controller与Brokers之间的网络通信**

|

||||

|

||||

## 源码分析

|

||||

### 1. Entry point: ControllerChannelManager.startup()

Call chain:

->`KafkaController.processStartup`

->`KafkaController.elect()`

->`KafkaController.onControllerFailover()`

->`KafkaController.initializeControllerContext()`

```scala
def startup() = {
  // Call addNewBroker for every live (or shutting-down) Broker
  controllerContext.liveOrShuttingDownBrokers.foreach(addNewBroker)

  brokerLock synchronized {
    // Start the request send thread of every Broker
    brokerStateInfo.foreach(brokerState => startRequestSendThread(brokerState._1))
  }
}
```

|

||||

|

||||

### 2. addNewBroker 构造broker的连接信息

|

||||

> 将所有存活的brokers 构造一些对象例如`NetworkClient`、`RequestSendThread` 等等之类的都封装到对象`ControllerBrokerStateInfo`中;

|

||||

> 由`brokerStateInfo`持有对象 key=brokerId; value = `ControllerBrokerStateInfo`

|

||||

|

||||

```scala

|

||||

private def addNewBroker(broker: Broker): Unit = {

|

||||

// 省略部分代码

|

||||

val threadName = threadNamePrefix match {

|

||||

case None => s"Controller-${config.brokerId}-to-broker-${broker.id}-send-thread"

|

||||

case Some(name) => s"$name:Controller-${config.brokerId}-to-broker-${broker.id}-send-thread"

|

||||

}

|

||||

|

||||

val requestRateAndQueueTimeMetrics = newTimer(

|

||||

RequestRateAndQueueTimeMetricName, TimeUnit.MILLISECONDS, TimeUnit.SECONDS, brokerMetricTags(broker.id)

|

||||

)

|

||||

|

||||

//构造请求发送线程

|

||||

val requestThread = new RequestSendThread(config.brokerId, controllerContext, messageQueue, networkClient,

|

||||

brokerNode, config, time, requestRateAndQueueTimeMetrics, stateChangeLogger, threadName)

|

||||

requestThread.setDaemon(false)

|

||||

|

||||

val queueSizeGauge = newGauge(QueueSizeMetricName, () => messageQueue.size, brokerMetricTags(broker.id))

|

||||

//封装好对象 缓存在brokerStateInfo中

|

||||

brokerStateInfo.put(broker.id, ControllerBrokerStateInfo(networkClient, brokerNode, messageQueue,

|

||||

requestThread, queueSizeGauge, requestRateAndQueueTimeMetrics, reconfigurableChannelBuilder))

|

||||

}

|

||||

```

|

||||

1. 将所有存活broker 封装成一个个`ControllerBrokerStateInfo`对象保存在缓存中; 对象中包含了`RequestSendThread` 请求发送线程 对象; 什么时候执行发送线程 ,我们下面分析

|

||||

2. `messageQueue:` 一个阻塞队列,里面放的都是待执行的请求,里面的对象`QueueItem` 封装了

|

||||

请求接口`ApiKeys`,`AbstractControlRequest`请求体对象;`AbstractResponse` 回调函数和`enqueueTimeMs`入队时间

|

||||

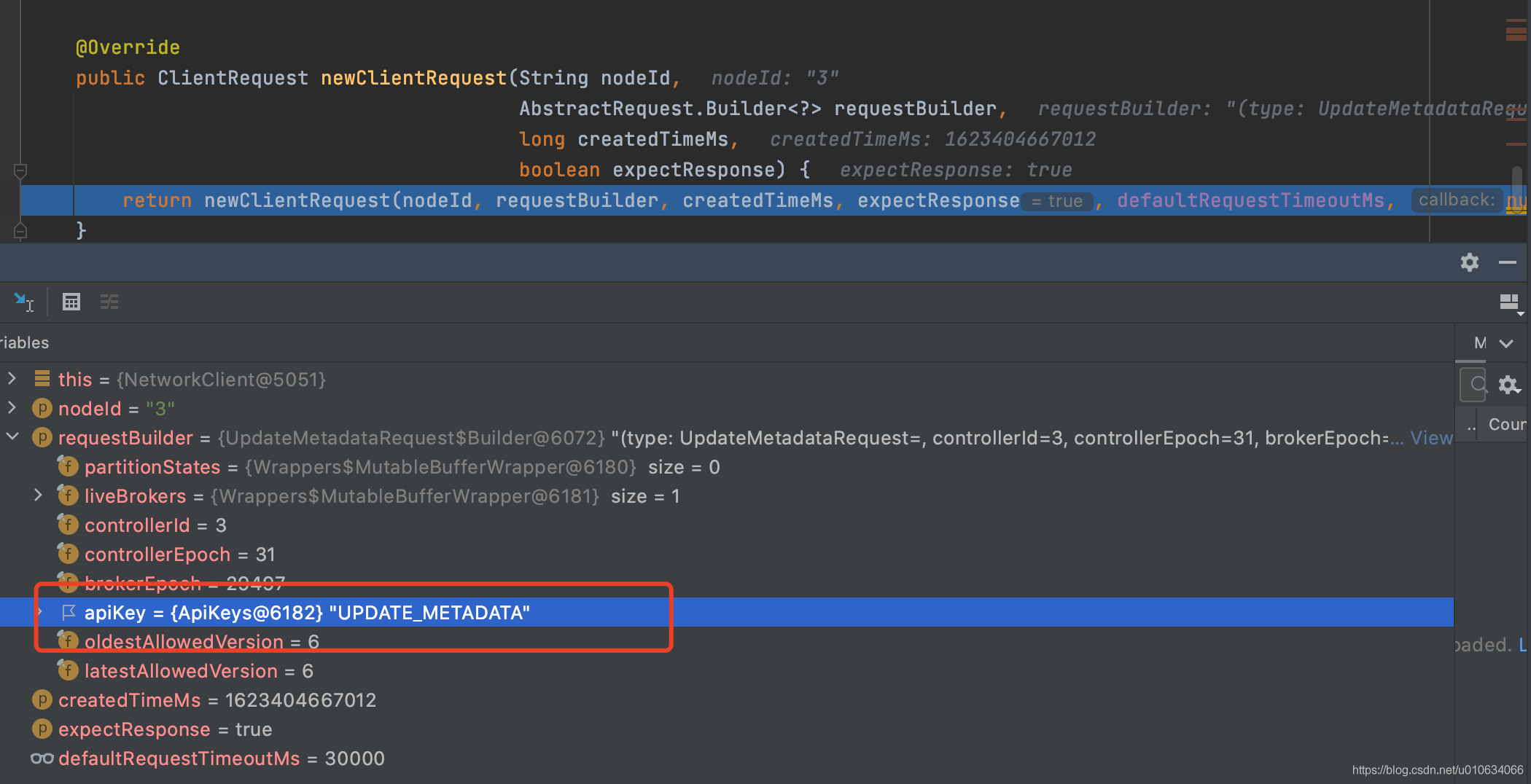

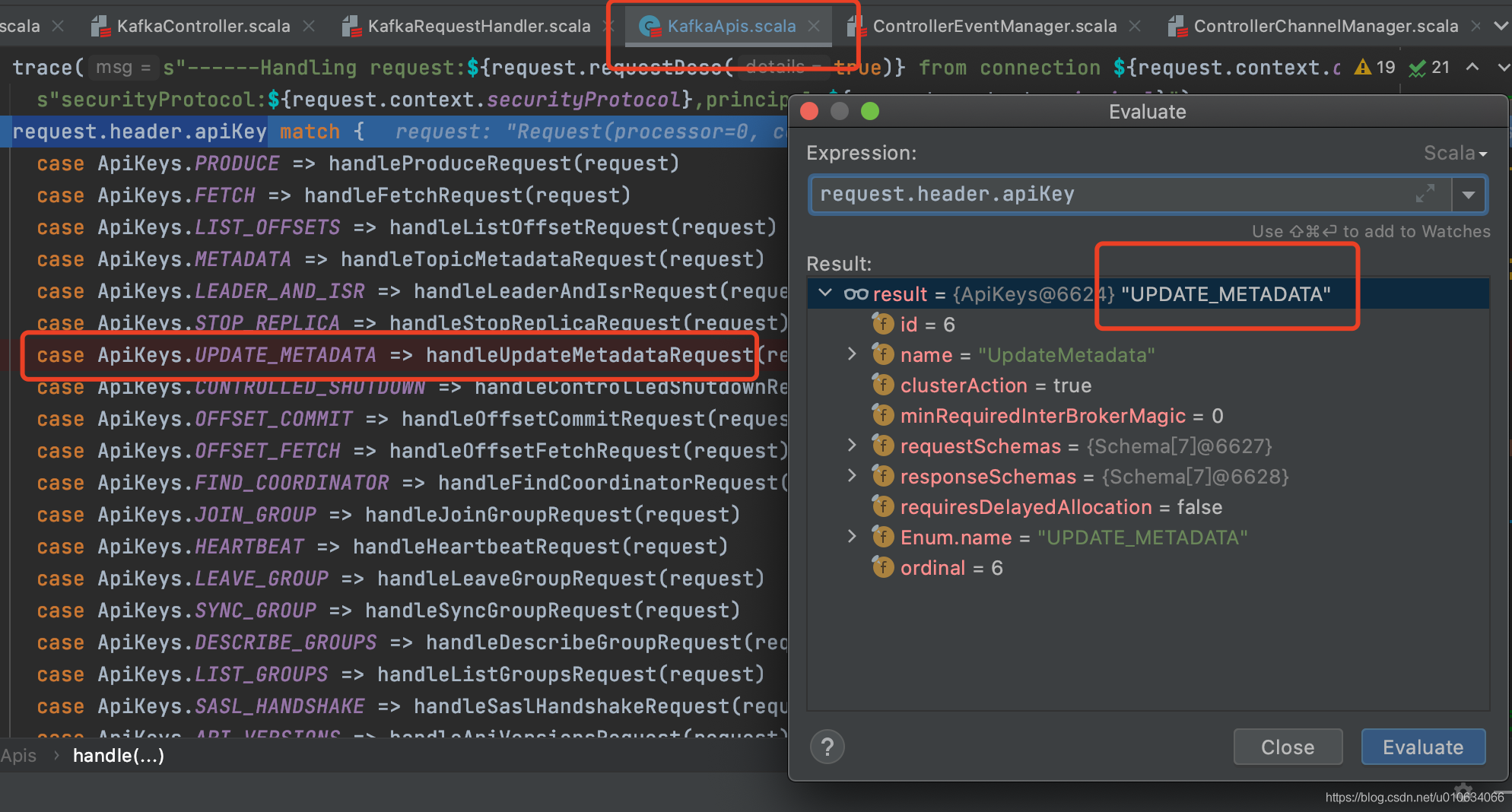

3. `RequestSendThread` 发送请求的线程 , 跟Broker们的网络连接就是通过这里进行的;比如下图中向Brokers们(当然包含自己)发送`UPDATE_METADATA`更新元数据的请求
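
To make the "one queue plus one sender thread per Broker" layout concrete, here is a minimal sketch. It is not Kafka's code: `ChannelSketch`, `BrokerChannel`, and the simplified `QueueItem` are hypothetical stand-ins, the network call is replaced by directly invoking the callback, and the map is not guarded by a lock the way `brokerLock` guards `brokerStateInfo`.

```scala
import java.util.concurrent.LinkedBlockingQueue
import scala.collection.mutable

object ChannelSketch {
  // Hypothetical stand-in for QueueItem: API name, payload, callback, enqueue time
  final case class QueueItem(apiKey: String, payload: String, callback: String => Unit, enqueueTimeMs: Long)

  // Hypothetical stand-in for ControllerBrokerStateInfo: one queue and one sender thread per broker
  final class BrokerChannel(val brokerId: Int) {
    val messageQueue = new LinkedBlockingQueue[QueueItem]()
    val sender: Thread = new Thread(() => {
      try {
        while (true) {
          val item = messageQueue.take() // blocks while the queue is empty
          // A real sender would do network I/O here; the sketch just answers immediately
          item.callback(s"broker-$brokerId handled ${item.apiKey}")
        }
      } catch { case _: InterruptedException => () } // an interrupt ends the loop
    }, s"to-broker-$brokerId-send-thread")
    sender.setDaemon(true)
  }

  val brokerStateInfo = mutable.HashMap.empty[Int, BrokerChannel]

  def addBroker(id: Int): Unit = {
    val channel = new BrokerChannel(id)
    brokerStateInfo.put(id, channel)
    channel.sender.start()
  }

  // Returns false when the broker is unknown, similar to the "offline" warning branch
  def sendRequest(id: Int, apiKey: String, payload: String)(callback: String => Unit): Boolean =
    brokerStateInfo.get(id) match {
      case Some(channel) =>
        channel.messageQueue.put(QueueItem(apiKey, payload, callback, System.currentTimeMillis()))
        true
      case None => false
    }
}
```

The point of the design is that callers never touch the network: they only enqueue, and each Broker's thread drains its own queue in order.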


### 3. startRequestSendThread: starting the network request threads

> Start the request send thread for every Broker connection.

```scala
protected def startRequestSendThread(brokerId: Int): Unit = {
  val requestThread = brokerStateInfo(brokerId).requestSendThread
  // Only start a thread that has not been started yet
  if (requestThread.getState == Thread.State.NEW)
    requestThread.start()
}
```


The code the thread runs is shown below (parts omitted):

```scala
override def doWork(): Unit = {

  def backoff(): Unit = pause(100, TimeUnit.MILLISECONDS)

  // Take the next pending request from the blocking queue (blocks while the queue is empty)
  val QueueItem(apiKey, requestBuilder, callback, enqueueTimeMs) = queue.take()
  requestRateAndQueueTimeMetrics.update(time.milliseconds() - enqueueTimeMs, TimeUnit.MILLISECONDS)

  var clientResponse: ClientResponse = null
  try {
    var isSendSuccessful = false
    while (isRunning && !isSendSuccessful) {
      // if a broker goes down for a long time, then at some point the controller's zookeeper listener will trigger a
      // removeBroker which will invoke shutdown() on this thread. At that point, we will stop retrying.
      try {
        // Check whether the connection to the Broker is ready; if not, back off and retry
        if (!brokerReady()) {
          isSendSuccessful = false
          backoff()
        }
        else {
          // Build the client request
          val clientRequest = networkClient.newClientRequest(brokerNode.idString, requestBuilder,
            time.milliseconds(), true)
          // Send the request and wait for the response
          clientResponse = NetworkClientUtils.sendAndReceive(networkClient, clientRequest, time)
          isSendSuccessful = true
        }
      } catch {
        // (exception handling omitted in this excerpt)
      }
    }
    if (clientResponse != null) {
      val requestHeader = clientResponse.requestHeader
      val api = requestHeader.apiKey
      if (api != ApiKeys.LEADER_AND_ISR && api != ApiKeys.STOP_REPLICA && api != ApiKeys.UPDATE_METADATA)
        throw new KafkaException(s"Unexpected apiKey received: $apiKey")

      // The response body is what gets handed to the callback
      val response = clientResponse.responseBody
      if (callback != null) {
        callback(response)
      }
    }
  } catch {
    // (exception handling omitted in this excerpt)
  }
}
```

|

||||

1. 从请求队列`queue`中take请求; 如果有的话就开始执行,没有的话就阻塞住

|

||||

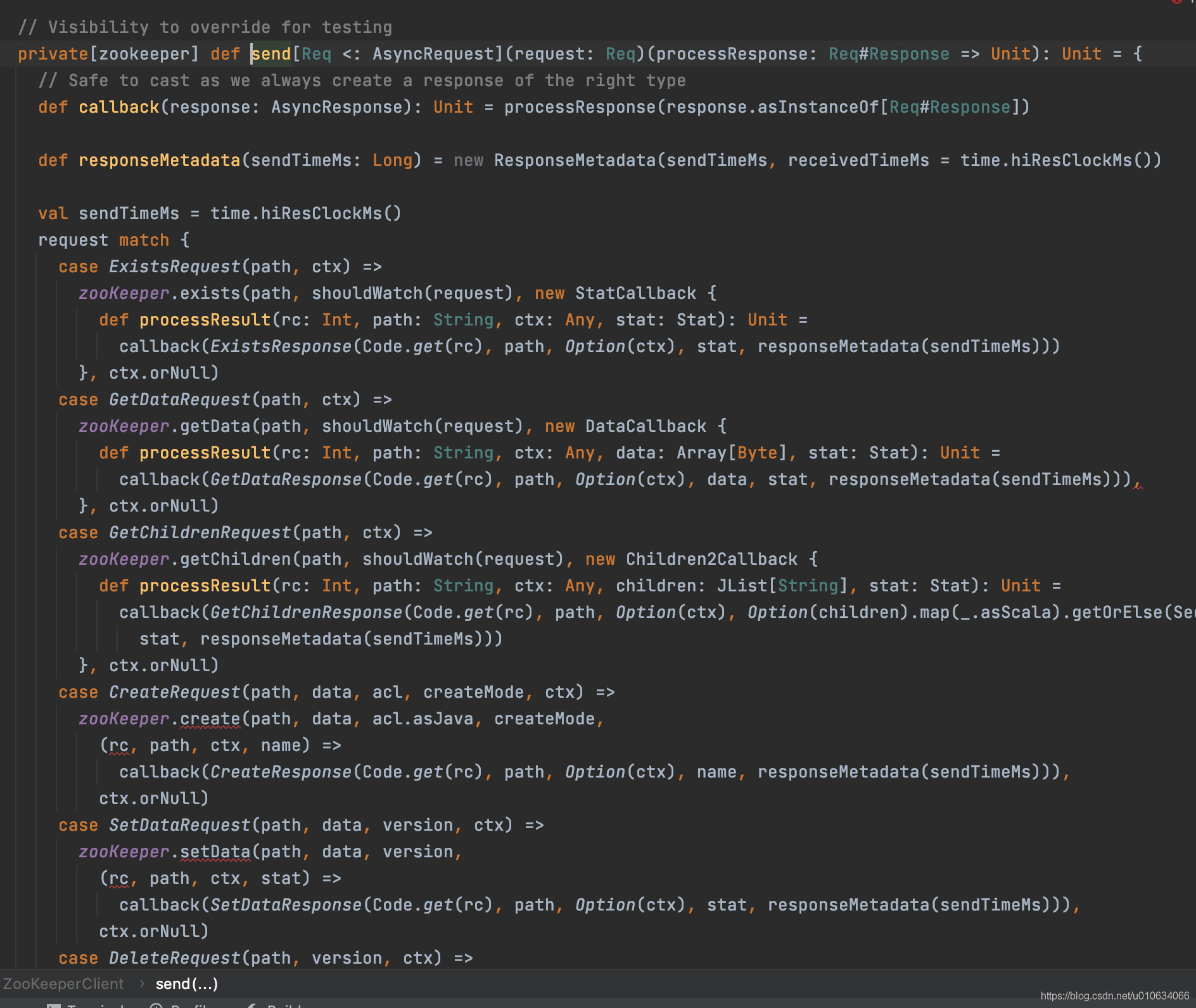

2. 检查请求的目标Broker是否可以连接; 连接不通会一直进行尝试,然后在某个时候,控制器的 zookeeper 侦听器将触发一个 `removeBroker`,它将在此线程上调用 shutdown()。就不会在重试了

|

||||

3. 发起请求;

|

||||

4. 如果请求失败,则重新连接Broker发送请求

|

||||

5. 返回成功,调用回调接口

|

||||

6. 值得注意的是<font color="red"> Controller发起的请求,收到Response中的ApiKeys中如果不是 `LEADER_AND_ISR`、`STOP_REPLICA`、`UPDATE_METADATA` 三个请求,就会抛出异常; 不会进行callBack的回调; </font> 不过也是很奇怪,如果Controller限制只能发起这几个请求的话,为什么在发起请求之前去做拦截,而要在返回之后做拦截; **个人猜测 可能是Broker在Response带上ApiKeys, 在Controller 调用callBack的时候可能会根据ApiKeys的不同而处理不同逻辑吧;但是又只想对Broker开放那三个接口;**
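
The retry behaviour in steps 2 to 4 can be sketched as a small loop. This is an illustration under assumptions, not the Kafka implementation: `RetrySketch.sendWithRetry` and its parameters are hypothetical names, `isRunning` plays the role of the thread's running flag that `shutdown()` clears, and any exception is treated as "retry after backoff".

```scala
object RetrySketch {
  // Hypothetical helper: retries until the send succeeds or `isRunning()` turns false
  def sendWithRetry[A](isRunning: () => Boolean, ready: () => Boolean, backoffMs: Long)(send: () => A): Option[A] = {
    var result: Option[A] = None
    while (isRunning() && result.isEmpty) {
      if (!ready()) {
        Thread.sleep(backoffMs) // target not reachable yet: back off, then check again
      } else {
        try result = Some(send())
        catch { case _: Exception => Thread.sleep(backoffMs) } // failed send: back off and retry
      }
    }
    result // None means the loop was stopped before any send succeeded
  }
}
```

Usage: `RetrySketch.sendWithRetry[String](() => true, () => connected, 100L)(() => doSend())`, where `connected` and `doSend` are whatever readiness check and send call the caller has.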


### 4. Adding requests to the RequestSendThread queue

> Once the threads above have started, the queue is still empty. So when do requests get added?

Every request ends up going through the method below; a quick search for its callers confirms this.

```scala
def sendRequest(brokerId: Int, request: AbstractControlRequest.Builder[_ <: AbstractControlRequest],
                callback: AbstractResponse => Unit = null): Unit = {
  brokerLock synchronized {
    val stateInfoOpt = brokerStateInfo.get(brokerId)
    stateInfoOpt match {
      case Some(stateInfo) =>
        // Enqueue the request; the Broker's RequestSendThread picks it up
        stateInfo.messageQueue.put(QueueItem(request.apiKey, request, callback, time.milliseconds()))
      case None =>
        warn(s"Not sending request $request to broker $brokerId, since it is offline.")
    }
  }
}
```

|

||||

|

||||

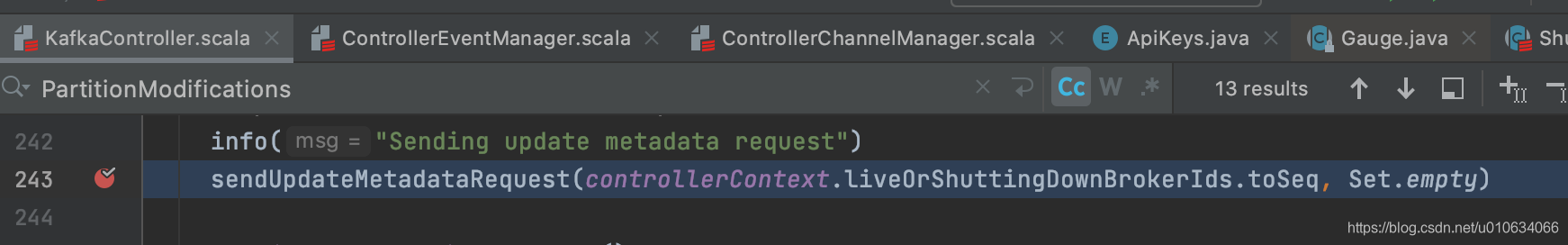

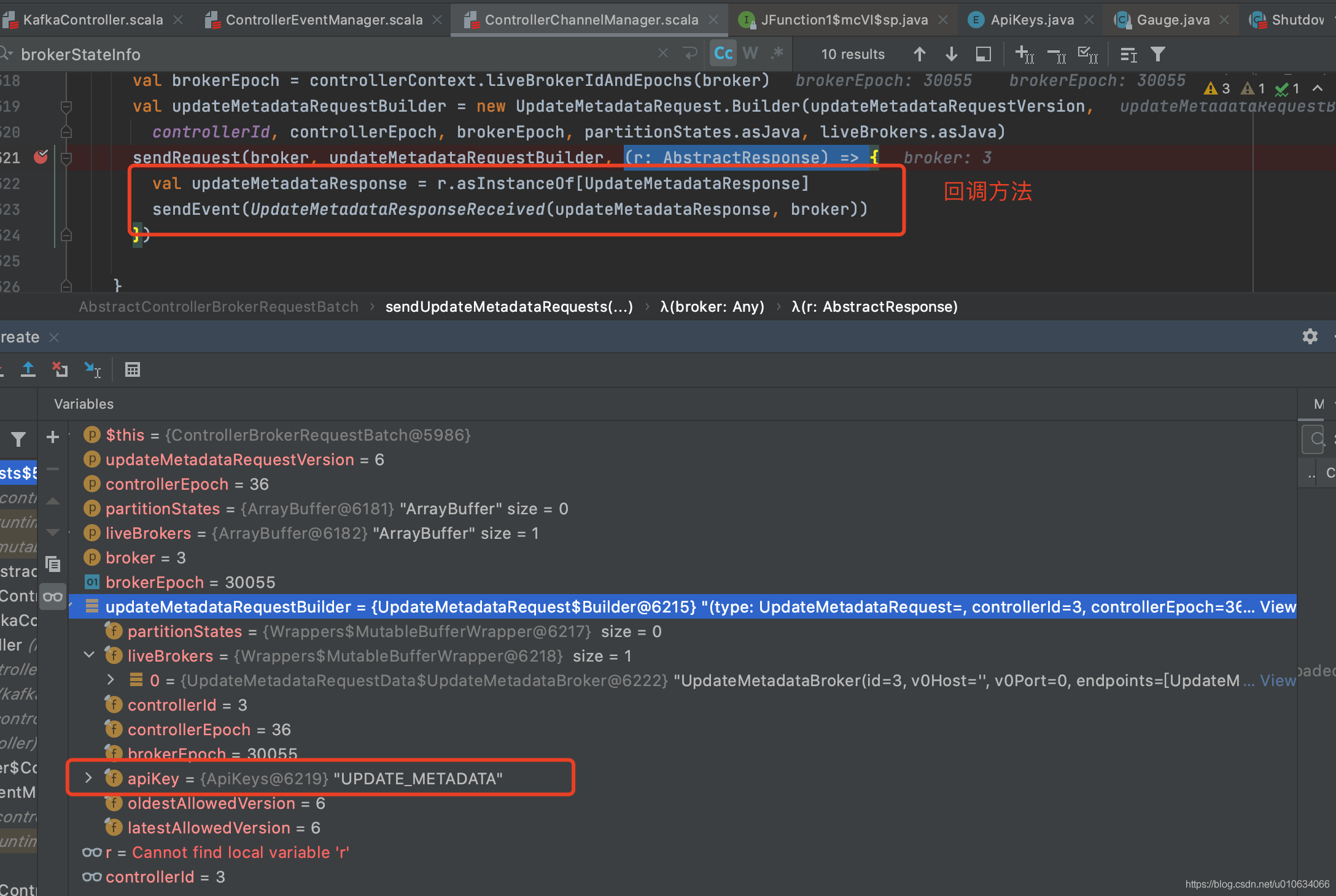

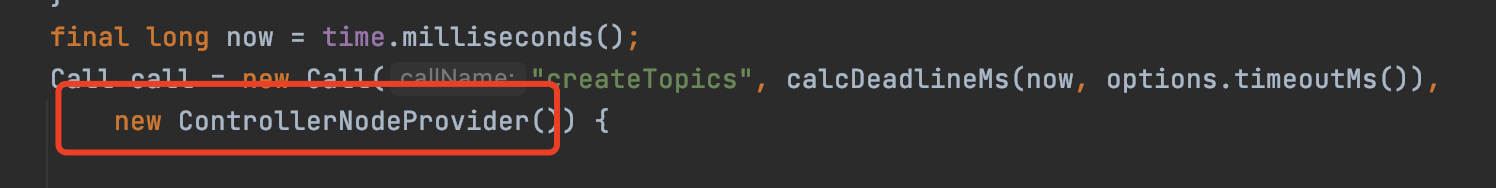

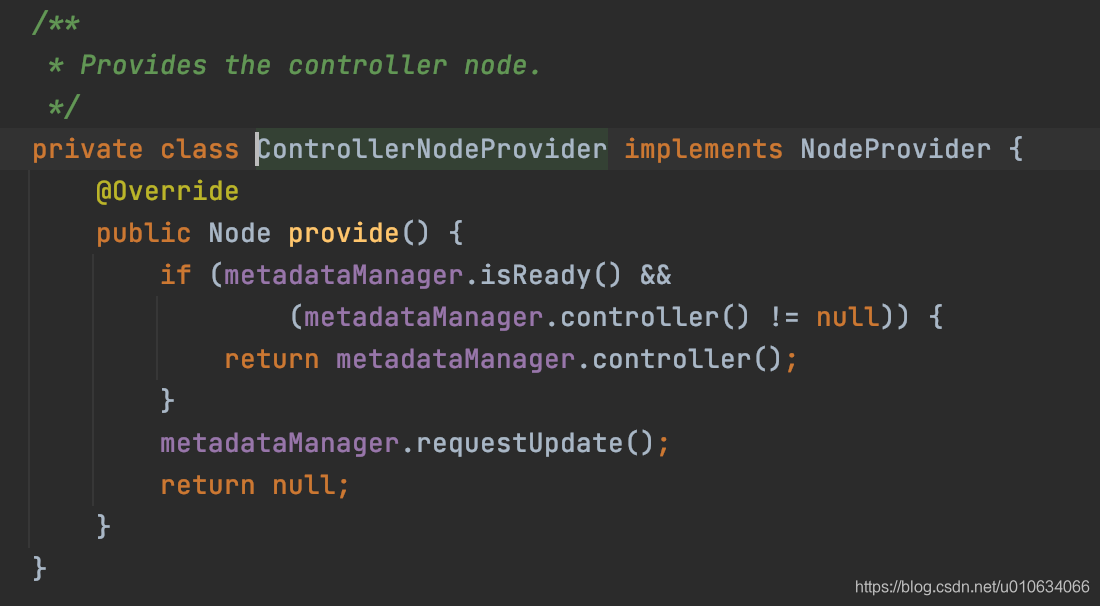

**这里举一个**🌰 ; 看看Controller向Broker发起一个`UPDATE_METADATA`请求;

|

||||

|

||||

|

||||

|

||||

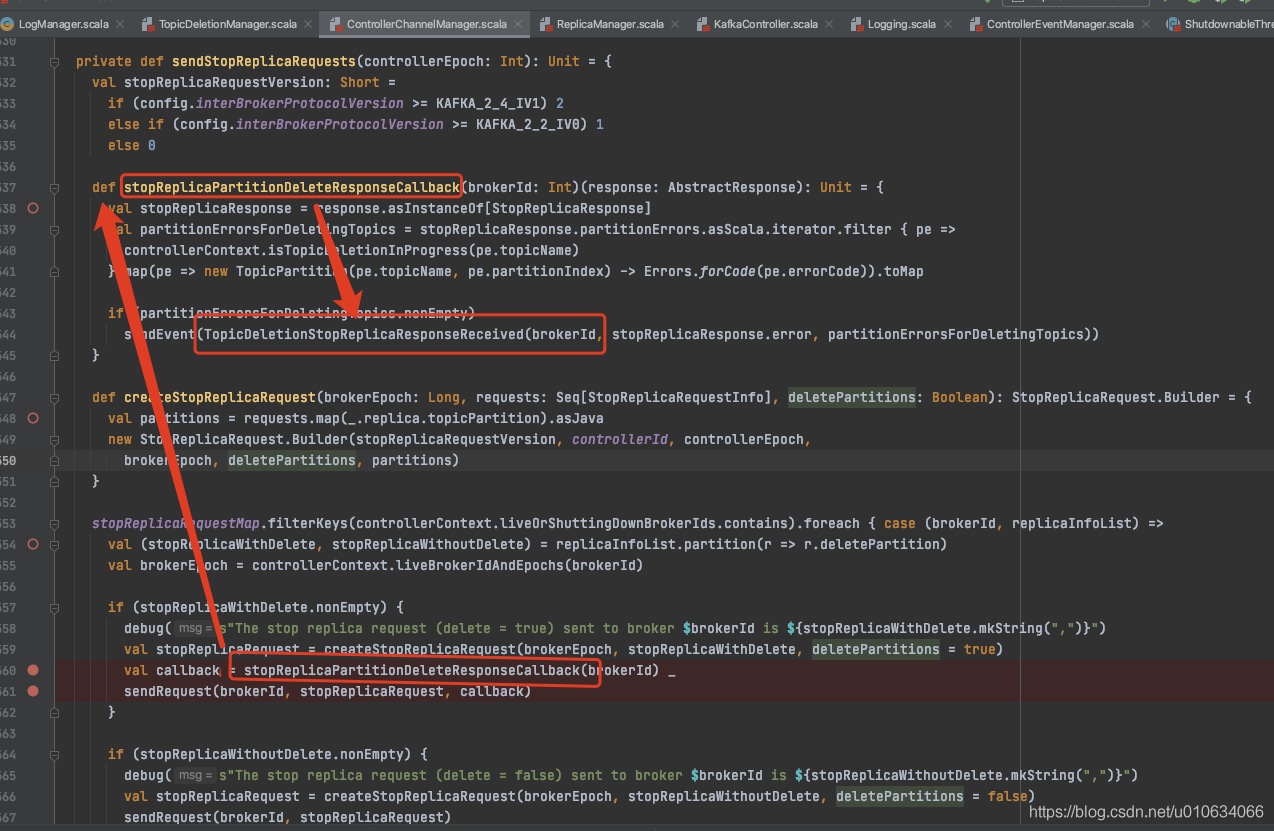

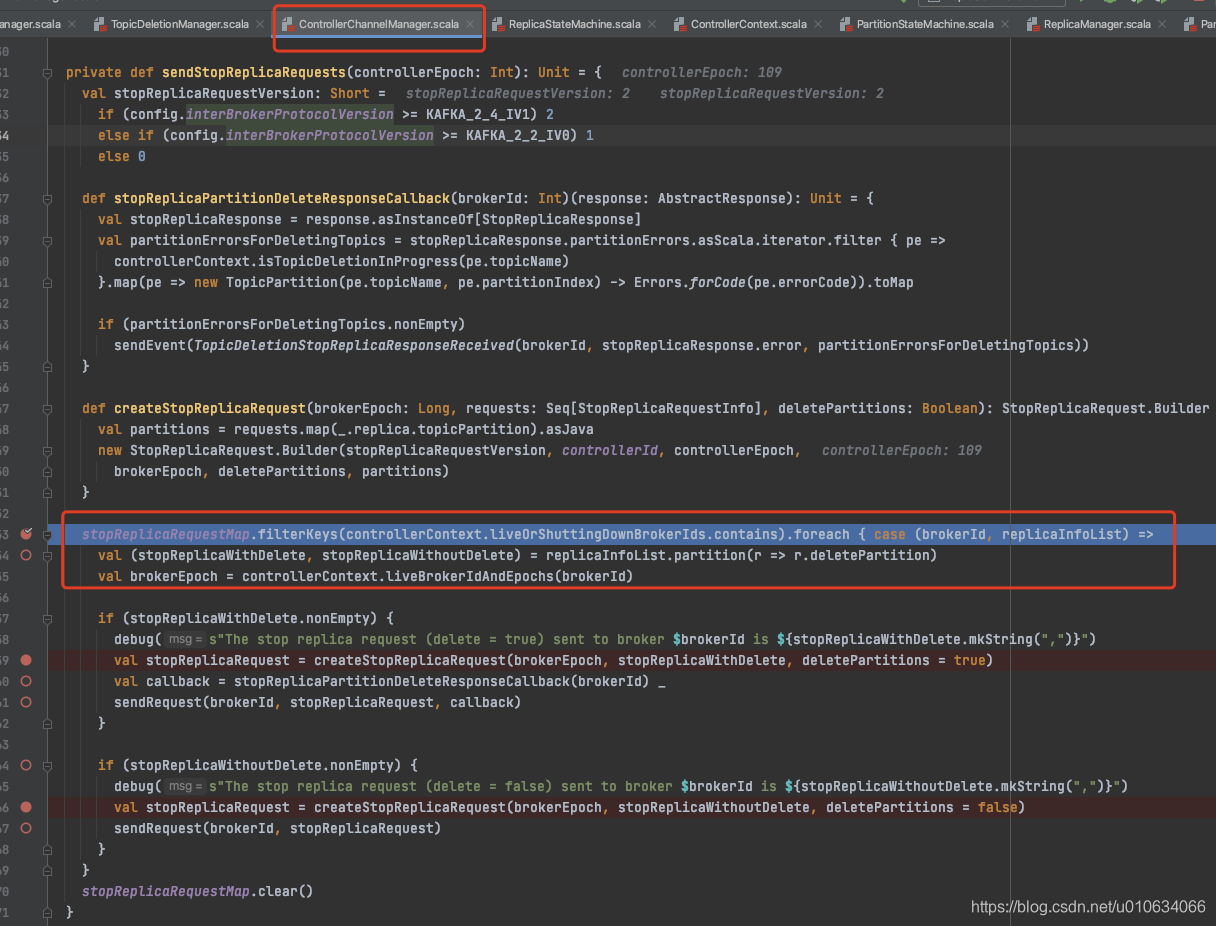

1. 可以看到调用了`sendRequest`请求 ; 请求的接口ApiKey=`UPDATE_METADATA`

|

||||

2. 回调方法就是如上所示; 向事件管理器`ControllerChannelManager`中添加一个事件`UpdateMetadataResponseReceived`

|

||||

3. 当请求成功之后,调用2中的callBack, `UpdateMetadataResponseReceived`被添加到事件管理器中; 就会立马被执行(排队)

|

||||

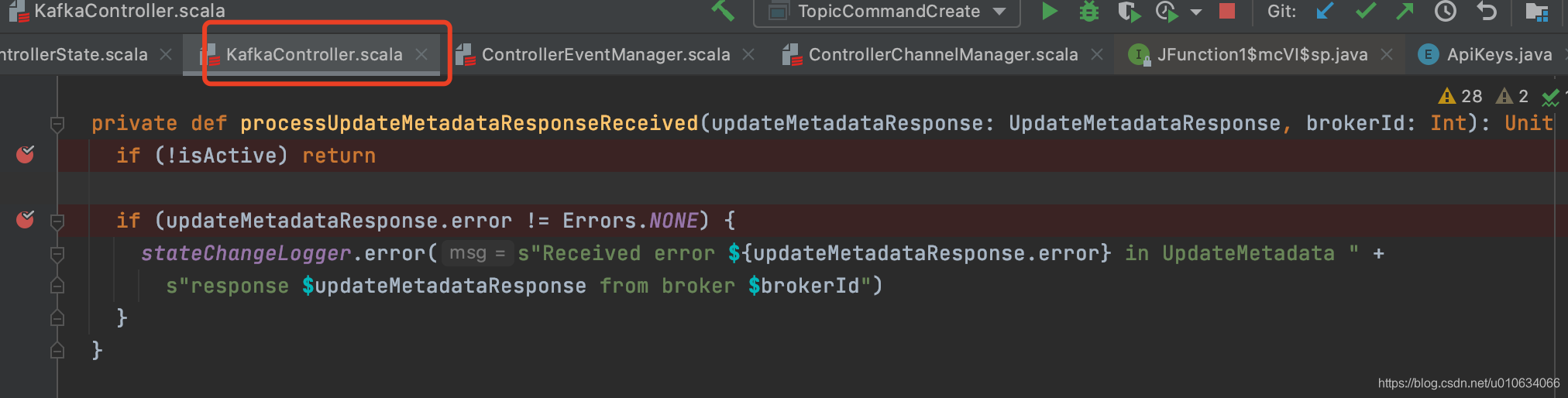

4. 执行地方如下图所示,只不过它也没干啥,也就是如果返回异常response就打印一下日志

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

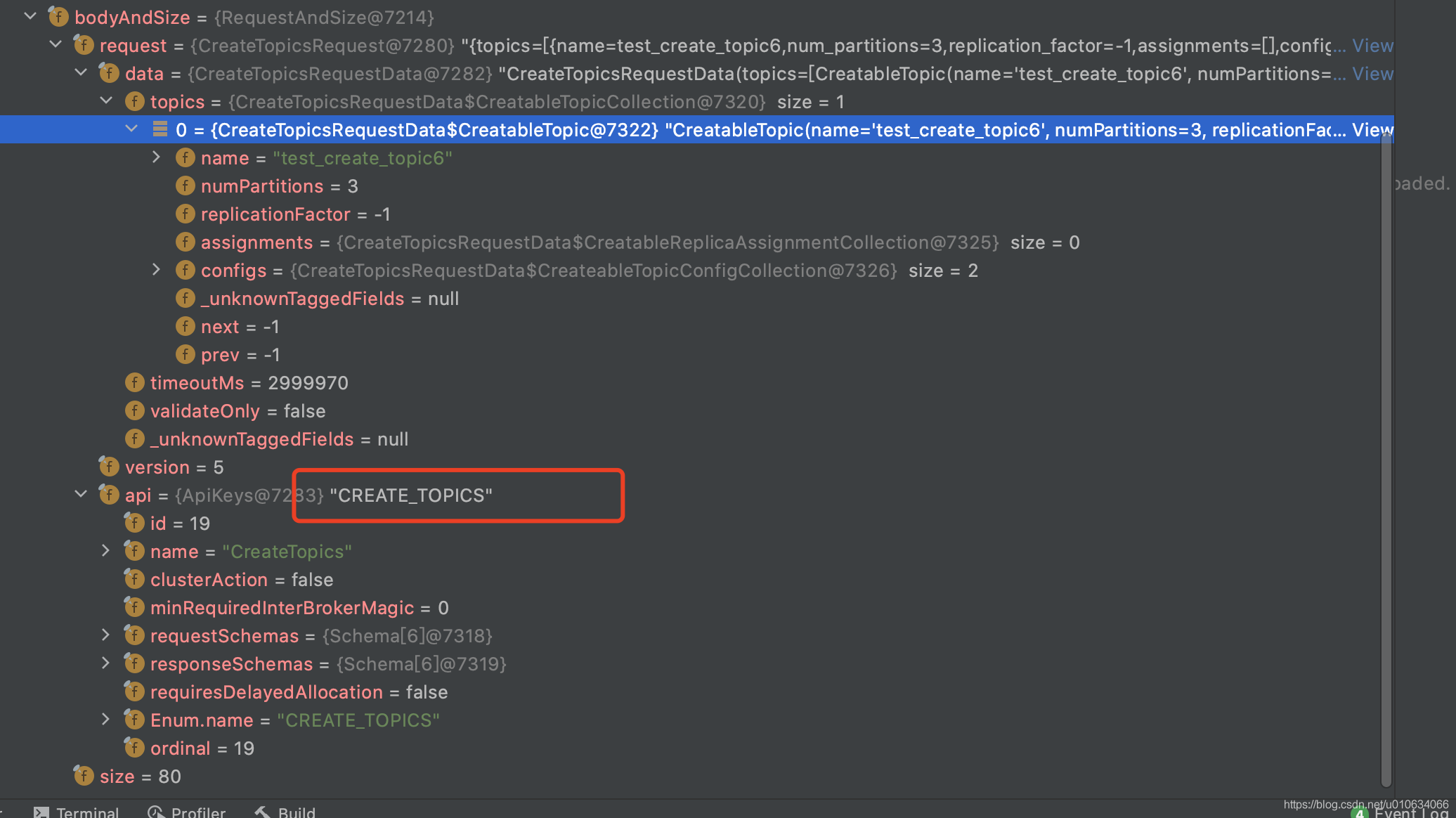

### 5. Broker接收Controller的请求

|

||||

> 上面说了Controller对所有Brokers(当然也包括自己)发起请求; 那么Brokers接受请求的地方在哪里呢,我们下面分析分析

|

||||

|

||||

这个部分内容我们在[【kafka源码】TopicCommand之创建Topic源码解析]() 中也分析过,处理过程都是一样的;

|

||||

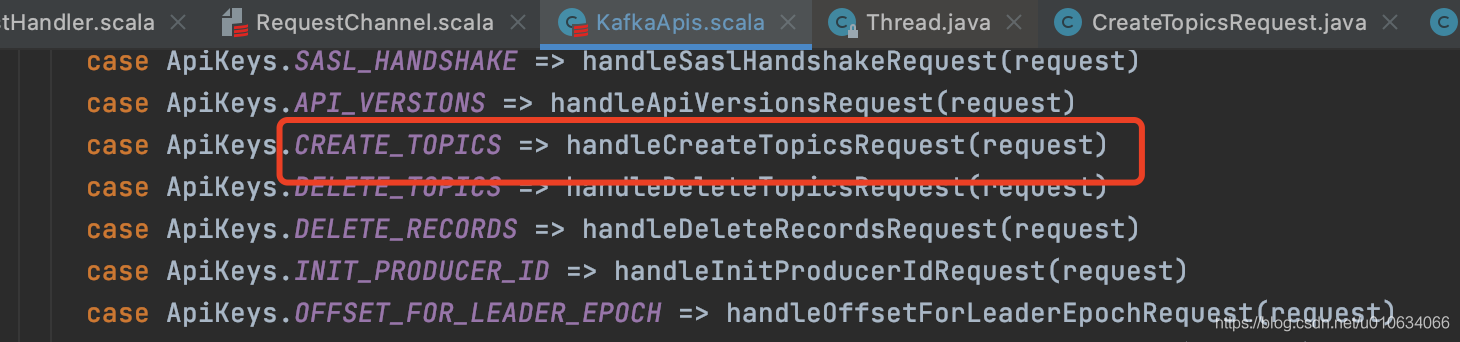

比如还是上面的例子🌰, 发起请求了之后,Broker处理的地方在`KafkaRequestHandler.run`里面的`apis.handle(request)`;

|

||||

|

||||

|

||||

可以看到这里列举了所有的接口请求;我们找到`UPDATE_METADATA`处理逻辑;

|

||||

里面的处理逻辑就不进去看了,不然超出了本篇文章的范畴;

|

||||

|

||||

|

||||

### 6. A Broker going offline

Let's simulate a Broker crash by manually deleting the Broker's node under `/brokers/ids/` in zk. Because the Controller has a `watch` on that path, it receives the change notification and calls `KafkaController.processBrokerChange()`.

```scala
private def processBrokerChange(): Unit = {
  if (!isActive) return
  // Brokers currently registered in ZooKeeper, with their epochs
  val curBrokerAndEpochs = zkClient.getAllBrokerAndEpochsInCluster
  val curBrokerIdAndEpochs = curBrokerAndEpochs map { case (broker, epoch) => (broker.id, epoch) }
  val curBrokerIds = curBrokerIdAndEpochs.keySet
  // Brokers the Controller currently has cached
  val liveOrShuttingDownBrokerIds = controllerContext.liveOrShuttingDownBrokerIds
  // In ZooKeeper but not in the cache: newly started
  val newBrokerIds = curBrokerIds -- liveOrShuttingDownBrokerIds
  // In the cache but gone from ZooKeeper: dead
  val deadBrokerIds = liveOrShuttingDownBrokerIds -- curBrokerIds
  // In both, but with a newer epoch in ZooKeeper: restarted ("bounced")
  val bouncedBrokerIds = (curBrokerIds & liveOrShuttingDownBrokerIds)
    .filter(brokerId => curBrokerIdAndEpochs(brokerId) > controllerContext.liveBrokerIdAndEpochs(brokerId))
  val newBrokerAndEpochs = curBrokerAndEpochs.filter { case (broker, _) => newBrokerIds.contains(broker.id) }
  val bouncedBrokerAndEpochs = curBrokerAndEpochs.filter { case (broker, _) => bouncedBrokerIds.contains(broker.id) }
  val newBrokerIdsSorted = newBrokerIds.toSeq.sorted
  val deadBrokerIdsSorted = deadBrokerIds.toSeq.sorted
  val liveBrokerIdsSorted = curBrokerIds.toSeq.sorted
  val bouncedBrokerIdsSorted = bouncedBrokerIds.toSeq.sorted
  info(s"Newly added brokers: ${newBrokerIdsSorted.mkString(",")}, " +
    s"deleted brokers: ${deadBrokerIdsSorted.mkString(",")}, " +
    s"bounced brokers: ${bouncedBrokerIdsSorted.mkString(",")}, " +
    s"all live brokers: ${liveBrokerIdsSorted.mkString(",")}")

  // Update the per-Broker channels (queue + send thread) first
  newBrokerAndEpochs.keySet.foreach(controllerChannelManager.addBroker)
  bouncedBrokerIds.foreach(controllerChannelManager.removeBroker)
  bouncedBrokerAndEpochs.keySet.foreach(controllerChannelManager.addBroker)
  deadBrokerIds.foreach(controllerChannelManager.removeBroker)
  if (newBrokerIds.nonEmpty) {
    controllerContext.addLiveBrokersAndEpochs(newBrokerAndEpochs)
    onBrokerStartup(newBrokerIdsSorted)
  }
  if (bouncedBrokerIds.nonEmpty) {
    // A bounced Broker goes through the failure flow and then the startup flow
    controllerContext.removeLiveBrokers(bouncedBrokerIds)
    onBrokerFailure(bouncedBrokerIdsSorted)
    controllerContext.addLiveBrokersAndEpochs(bouncedBrokerAndEpochs)
    onBrokerStartup(bouncedBrokerIdsSorted)
  }
  if (deadBrokerIds.nonEmpty) {
    controllerContext.removeLiveBrokers(deadBrokerIds)
    onBrokerFailure(deadBrokerIdsSorted)
  }

  if (newBrokerIds.nonEmpty || deadBrokerIds.nonEmpty || bouncedBrokerIds.nonEmpty) {
    info(s"Updated broker epochs cache: ${controllerContext.liveBrokerIdAndEpochs}")
  }
}
```

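
1. Fetch all Broker information from zk and compare it with the Broker information cached in the Controller.
2. If a Broker has newly come online, run the Broker startup flow.
3. If a Broker has been removed, run the Broker offline flow, for example `removeLiveBrokers`.

Once the node is deleted, the Controller considers the Broker offline. Even if the Broker process itself is healthy, it can no longer serve requests.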
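
The comparison in step 1 boils down to three set operations. Below is a small self-contained sketch of just that part; `BrokerDiffSketch` and `BrokerDiff` are hypothetical names, and plain `Map[Int, Long]` values (broker id to epoch) stand in for the ZooKeeper result and the Controller cache.

```scala
object BrokerDiffSketch {
  final case class BrokerDiff(added: Set[Int], dead: Set[Int], bounced: Set[Int])

  // `current`: what ZooKeeper reports now; `cached`: what the controller remembers (id -> epoch)
  def diff(current: Map[Int, Long], cached: Map[Int, Long]): BrokerDiff = {
    val added = current.keySet -- cached.keySet   // registered in ZooKeeper, unknown to the cache
    val dead = cached.keySet -- current.keySet    // cached, but no longer registered
    // present on both sides with a newer epoch: the broker restarted in between
    val bounced = (current.keySet & cached.keySet).filter(id => current(id) > cached(id))
    BrokerDiff(added, dead, bounced)
  }
}
```

For example, with `current = Map(1 -> 10L, 2 -> 25L, 4 -> 40L)` and `cached = Map(1 -> 10L, 2 -> 20L, 3 -> 30L)`, broker 4 is new, broker 3 is dead, and broker 2 is bounced.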

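
### 7. Brokers coming online and going offline

This article focuses on **network communication between the Controller and the Brokers**, so the **Broker online/offline** flow is covered in detail in a separate article: [Kafka source code: Broker online and offline flow](https://shirenchuang.blog.csdn.net/article/details/117846476)

## Source Code Summary

This one is fairly simple. The Controller communicates with each Broker by sending requests from a `RequestSendThread`.

The blocking request queue behind each `RequestSendThread` blocks while there is nothing to do.

When a request needs to be sent, it is simply added to that `queue`.

The Controller is itself a Broker, so it also receives and executes the requests it sends out.

## Q&A

### What happens if the Controller cannot reach a Broker over the network?

> It keeps retrying. Once ZooKeeper detects that the Broker is unreachable, the Broker's node is removed; the Controller is notified and shuts down its `RequestSendThread` for that Broker, which ends the retries. If the connection between zk and the Broker is fine and it is only the request itself that keeps failing, the retries continue indefinitely.

### What happens if a child node under /brokers/ids/ in zk is deleted by hand?

> After `/brokers/ids/<Broker ID>` is deleted manually, the Controller receives the change notification and runs the offline logic for that Broker. The Broker is then effectively outside the cluster: even though its process is still healthy, it can no longer serve requests. The only fix is to restart the Broker so that it registers again.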

289

docs/zh/Kafka分享/Kafka Controller /Controller中的状态机.md

Normal file


@@ -0,0 +1,289 @@

|

||||

|

||||

|

||||

前言

|

||||

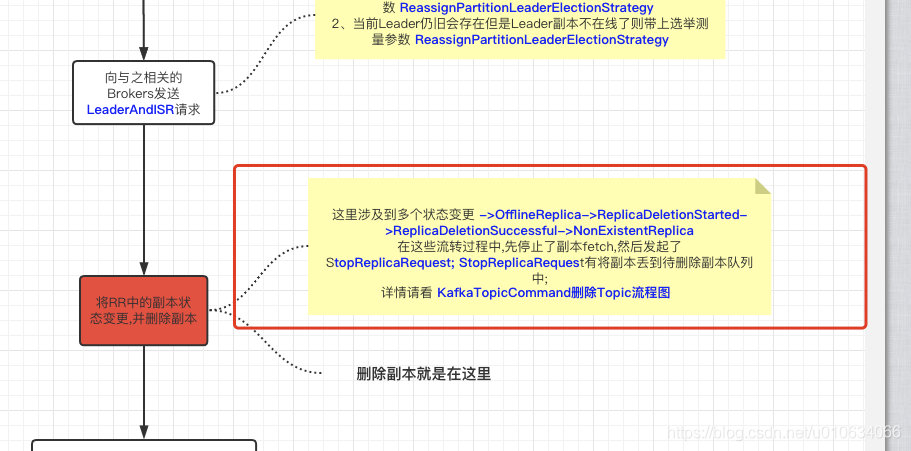

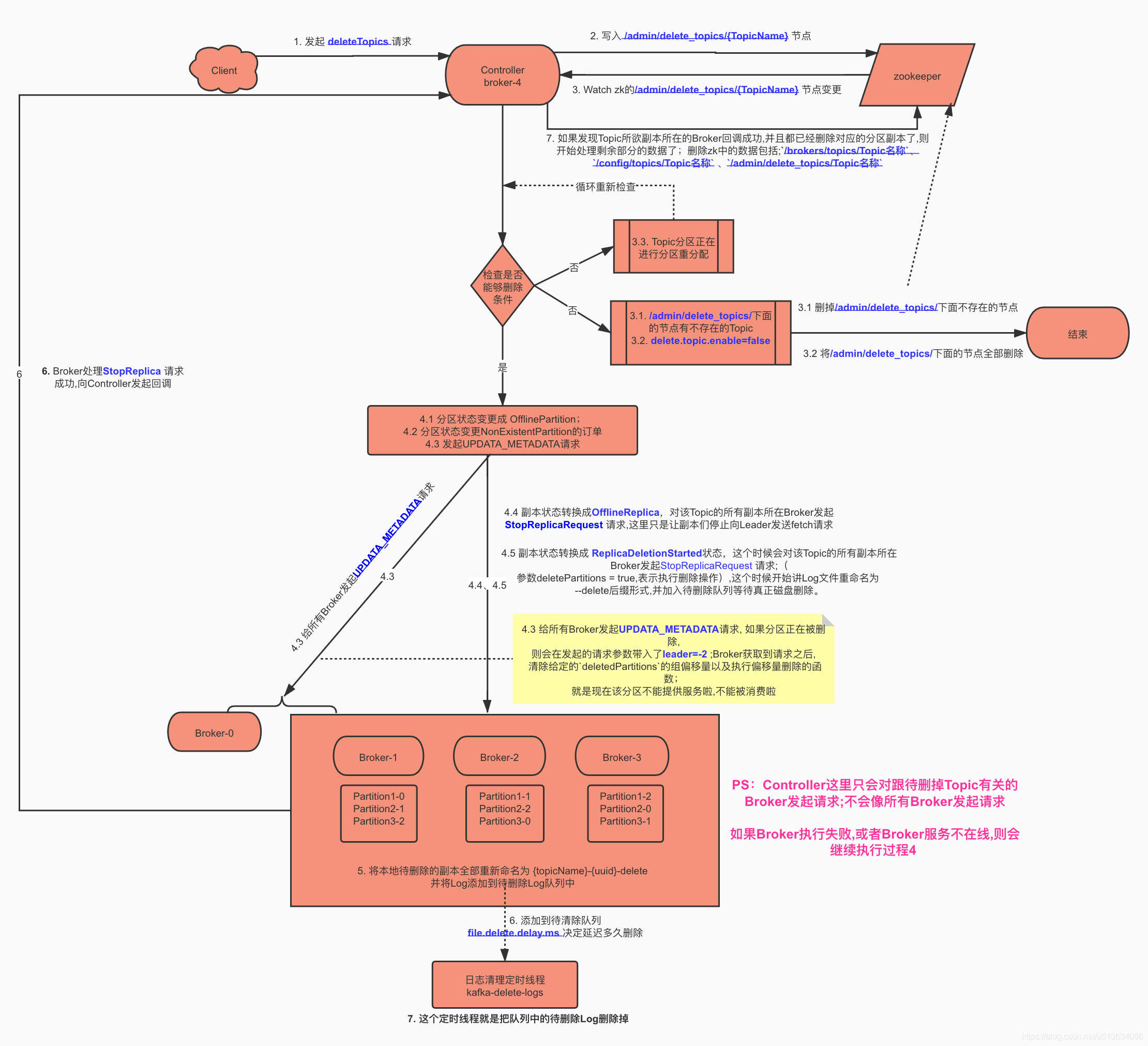

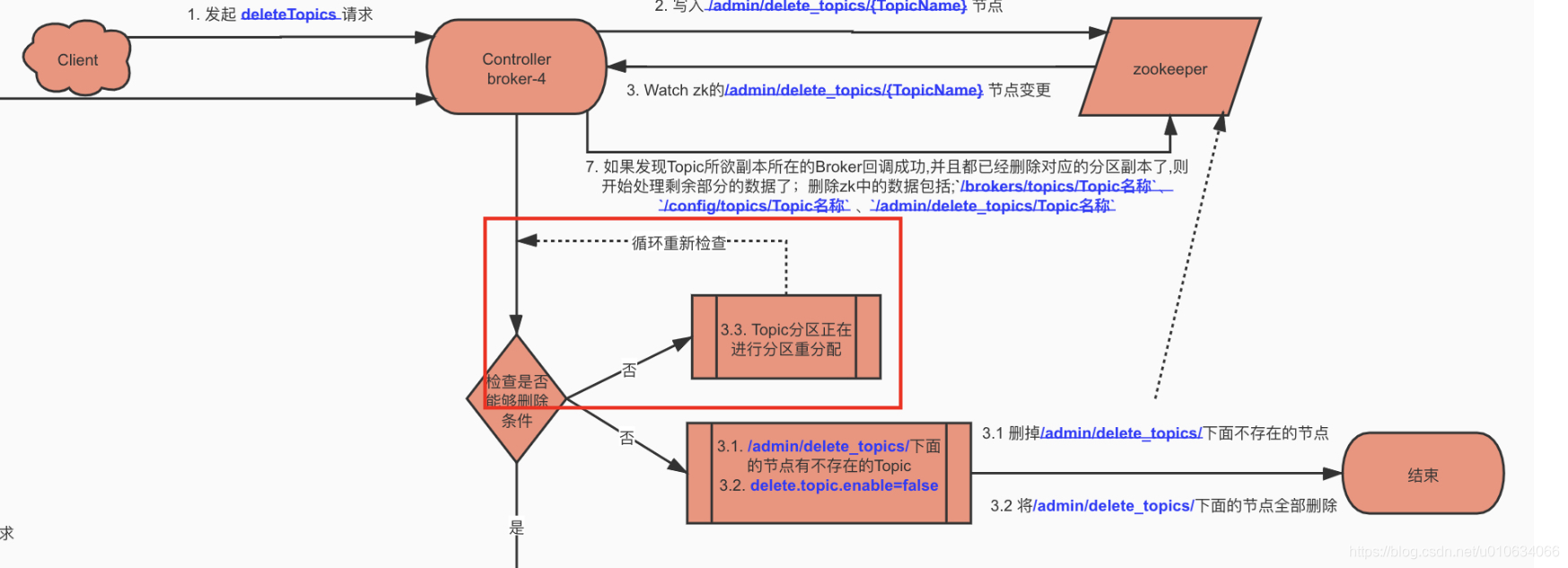

>Controller中有两个状态机分别是`ReplicaStateMachine 副本状态机`和`PartitionStateMachine分区状态机` ; 他们的作用是负责处理每个分区和副本在状态变更过程中要处理的事情; 并且确保从上一个状态变更到下一个状态是合法的; 源码中你能看到很多地方只是进行状态流转; 所以我们要清楚每个流转都做了哪些事情;对我们阅读源码更清晰

|

||||

>

|

||||

>----

|

||||

>在之前的文章 [【kafka源码】Controller启动过程以及选举流程源码分析]() 中,我们有分析到,

|

||||

>`replicaStateMachine.startup()` 和 `partitionStateMachine.startup()`

|

||||

>副本专状态机和分区状态机的启动; 那我们就从这里开始好好讲下两个状态机

|

||||

|

||||

|

||||

## Source Code Walkthrough

<font color="red">If the source walkthrough feels too dry, skip straight to the Source Code Summary and what follows.</font>

### ReplicaStateMachine: the replica state machine

After the Controller wins the election, it calls `ReplicaStateMachine.startup` to start the replica state machine.

```scala
def startup(): Unit = {
  // Initialize the state of every replica
  initializeReplicaState()
  val (onlineReplicas, offlineReplicas) = controllerContext.onlineAndOfflineReplicas
  handleStateChanges(onlineReplicas.toSeq, OnlineReplica)
  handleStateChanges(offlineReplicas.toSeq, OfflineReplica)
}
```

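
1. Initialize the state of every replica: an online replica is set to `OnlineReplica`, anything else to `ReplicaDeletionIneligible` (replica deletion failed). A replica counts as online when its Broker is online and the replica has not been marked offline (the map `replicasOnOfflineDirs` tracks the replicas marked offline); in practice that map usually holds replicas of topics marked for deletion.
2. Run the state change handler.

#### The ReplicaStateMachine state change handler

>It makes sure that every transition goes from a legal previous state to the target state. The valid transitions are:
>1. `NonExistentReplica -> NewReplica`: send a LeaderAndIsr request with the current leader and ISR to the new replica, and an UpdateMetadata request for the partition to every live Broker
>2. `NewReplica -> OnlineReplica`: add the new replica to the assigned replica list if needed
>3. `OnlineReplica,OfflineReplica -> OnlineReplica`: send a LeaderAndIsr request with the current leader and ISR to the new replica, and an UpdateMetadata request for the partition to every live Broker
>4. `NewReplica,OnlineReplica,OfflineReplica,ReplicaDeletionIneligible -> OfflineReplica`: send a `StopReplicaRequest` to the replica;
> remove the replica from the ISR, send a LeaderAndIsr request (with the new ISR) to the leader replica, and an UpdateMetadata request for the partition to every live Broker
>5. `OfflineReplica -> ReplicaDeletionStarted`: send a `StopReplicaRequest` (with the delete flag) to the replica
>6. `ReplicaDeletionStarted -> ReplicaDeletionSuccessful`: mark the replica's state in the state machine
>7. `ReplicaDeletionStarted -> ReplicaDeletionIneligible`: mark the replica's state in the state machine
>8. `ReplicaDeletionSuccessful -> NonExistentReplica`: remove the replica from the in-memory partition replica assignment cache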
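
One way to picture the "legal previous state" check is a lookup table from each target state to the states it may be entered from. The sketch below encodes the transitions listed above (plus `ReplicaDeletionIneligible -> OnlineReplica`, which this article lists further down); `ReplicaTransitionSketch` and `partitionByValidity` are hypothetical names, and replicas are identified by plain strings for brevity.

```scala
object ReplicaTransitionSketch {
  sealed trait ReplicaState
  case object NonExistentReplica extends ReplicaState
  case object NewReplica extends ReplicaState
  case object OnlineReplica extends ReplicaState
  case object OfflineReplica extends ReplicaState
  case object ReplicaDeletionStarted extends ReplicaState
  case object ReplicaDeletionSuccessful extends ReplicaState
  case object ReplicaDeletionIneligible extends ReplicaState

  // target state -> states it may legally be entered from (taken from the list in this article)
  val validPreviousStates: Map[ReplicaState, Set[ReplicaState]] = Map(
    (NewReplica, Set[ReplicaState](NonExistentReplica)),
    (OnlineReplica, Set[ReplicaState](NewReplica, OnlineReplica, OfflineReplica, ReplicaDeletionIneligible)),
    (OfflineReplica, Set[ReplicaState](NewReplica, OnlineReplica, OfflineReplica, ReplicaDeletionIneligible)),
    (ReplicaDeletionStarted, Set[ReplicaState](OfflineReplica)),
    (ReplicaDeletionSuccessful, Set[ReplicaState](ReplicaDeletionStarted)),
    (ReplicaDeletionIneligible, Set[ReplicaState](ReplicaDeletionStarted)),
    (NonExistentReplica, Set[ReplicaState](ReplicaDeletionSuccessful))
  )

  // Splits replicas into (valid, invalid) for a transition to `target`
  def partitionByValidity(current: Map[String, ReplicaState], target: ReplicaState): (Set[String], Set[String]) =
    current.keySet.partition(replica => validPreviousStates(target).contains(current(replica)))
}
```

Invalid replicas are only logged and skipped; the valid ones continue into the per-target handling.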


```scala
private def doHandleStateChanges(replicaId: Int, replicas: Seq[PartitionAndReplica], targetState: ReplicaState): Unit = {
  // Replicas without a state yet are initialized to `NonExistentReplica`
  replicas.foreach(replica => controllerContext.putReplicaStateIfNotExists(replica, NonExistentReplica))
  // Check that the requested transition is valid
  val (validReplicas, invalidReplicas) = controllerContext.checkValidReplicaStateChange(replicas, targetState)
  invalidReplicas.foreach(replica => logInvalidTransition(replica, targetState))

  // Code omitted; covered in detail below
}
```

```scala
controllerBrokerRequestBatch.sendRequestsToBrokers(controllerContext.epoch)
```

1. Replicas without a state yet are initialized to `NonExistentReplica`.
2. The transition is checked for validity.
3. Once that is done, an `UPDATE_METADATA` request may also be sent.


##### Previous state ==> OnlineReplica

States that can transition to it:

1. `NewReplica`
2. `OnlineReplica`
3. `OfflineReplica`
4. `ReplicaDeletionIneligible`

###### NewReplica ==> OnlineReplica

>Add the new replica to the assigned replica list if needed;
>see for example [Kafka source code: TopicCommand topic creation]()

```scala
case NewReplica =>
  val assignment = controllerContext.partitionFullReplicaAssignment(partition)
  // Only touch the assignment when the replica is not already part of it
  if (!assignment.replicas.contains(replicaId)) {
    error(s"Adding replica ($replicaId) that is not part of the assignment $assignment")
    val newAssignment = assignment.copy(replicas = assignment.replicas :+ replicaId)
    controllerContext.updatePartitionFullReplicaAssignment(partition, newAssignment)
  }
```


###### Other states ==> OnlineReplica

> Send a LeaderAndIsr request with the current leader and ISR to the new replica, and an UpdateMetadata request for the partition to every live Broker.

```scala
case _ =>
  controllerContext.partitionLeadershipInfo.get(partition) match {
    case Some(leaderIsrAndControllerEpoch) =>
      controllerBrokerRequestBatch.addLeaderAndIsrRequestForBrokers(Seq(replicaId),
        replica.topicPartition,
        leaderIsrAndControllerEpoch,
        controllerContext.partitionFullReplicaAssignment(partition), isNew = false)
    case None =>
      // No leadership info for this partition: nothing to send
  }
```


##### Previous state ==> ReplicaDeletionIneligible

> Update the replica's state to `ReplicaDeletionIneligible` in the in-memory `replicaStates`.


##### Previous state ==> OfflineReplica

>Send a StopReplicaRequest to the replica;
>remove the replica from the ISR, send a LeaderAndIsr request (with the new ISR) to the leader replica, and an UpdateMetadata request for the partition to every live Broker.

```scala
case OfflineReplica =>
  // Add the parameters for building the StopReplicaRequest; deletePartition = false means the partition is not deleted yet
  validReplicas.foreach { replica =>
    controllerBrokerRequestBatch.addStopReplicaRequestForBrokers(Seq(replicaId), replica.topicPartition, deletePartition = false)
  }
  val (replicasWithLeadershipInfo, replicasWithoutLeadershipInfo) = validReplicas.partition { replica =>
    controllerContext.partitionLeadershipInfo.contains(replica.topicPartition)
  }
  // Try to remove the replica from the ISR of multiple partitions. Removing a replica from the ISR updates the partition state in ZooKeeper.
  // Keep retrying until no partitions remain that can be retried.
  // Partition data is read from /brokers/topics/test_create_topic13/partitions,
  // and written back to zk after the replica has been removed.
  val updatedLeaderIsrAndControllerEpochs = removeReplicasFromIsr(replicaId, replicasWithLeadershipInfo.map(_.topicPartition))
  updatedLeaderIsrAndControllerEpochs.foreach { case (partition, leaderIsrAndControllerEpoch) =>
    if (!controllerContext.isTopicQueuedUpForDeletion(partition.topic)) {
      val recipients = controllerContext.partitionReplicaAssignment(partition).filterNot(_ == replicaId)
      controllerBrokerRequestBatch.addLeaderAndIsrRequestForBrokers(recipients,
        partition,
        leaderIsrAndControllerEpoch,
        controllerContext.partitionFullReplicaAssignment(partition), isNew = false)
    }
    val replica = PartitionAndReplica(partition, replicaId)
    val currentState = controllerContext.replicaState(replica)
    logSuccessfulTransition(replicaId, partition, currentState, OfflineReplica)
    controllerContext.putReplicaState(replica, OfflineReplica)
  }

  replicasWithoutLeadershipInfo.foreach { replica =>
    val currentState = controllerContext.replicaState(replica)
    logSuccessfulTransition(replicaId, replica.topicPartition, currentState, OfflineReplica)
    controllerBrokerRequestBatch.addUpdateMetadataRequestForBrokers(controllerContext.liveOrShuttingDownBrokerIds.toSeq, Set(replica.topicPartition))
    controllerContext.putReplicaState(replica, OfflineReplica)
  }
```


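1. Add the parameters for building the StopReplicaRequest; `deletePartition = false` means the partition is not deleted yet.
2. Repeatedly try to remove the replica from the ISR of multiple partitions until no partitions remain that can be retried. The partition data is read from `/brokers/topics/{TOPICNAME}/partitions`, recomputed, and written back to zk under `/brokers/topics/{TOPICNAME}/partitions/{partition}/state`. The in-memory replica state is also changed to `OfflineReplica`.
3. Decide, based on the conditions, whether a `LeaderAndIsrRequest` or `UpdateMetadataRequest` has to be sent.
4. Send the `StopReplicaRequest` requests.
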

##### Previous state ==> ReplicaDeletionStarted

> Send a [StopReplicaRequest]() (with the delete flag) to the given replica.

```scala
controllerBrokerRequestBatch.addStopReplicaRequestForBrokers(Seq(replicaId), replica.topicPartition, deletePartition = true)
```


##### Previous state ==> NewReplica

>This transition is usually triggered when a Topic is created.

```scala
case NewReplica =>
  validReplicas.foreach { replica =>
    val partition = replica.topicPartition
    val currentState = controllerContext.replicaState(replica)

    controllerContext.partitionLeadershipInfo.get(partition) match {
      case Some(leaderIsrAndControllerEpoch) =>
        // A replica that is already the leader cannot be moved to NewReplica
        if (leaderIsrAndControllerEpoch.leaderAndIsr.leader == replicaId) {
          val exception = new StateChangeFailedException(s"Replica $replicaId for partition $partition cannot be moved to NewReplica state as it is being requested to become leader")
          logFailedStateChange(replica, currentState, OfflineReplica, exception)
        } else {
          controllerBrokerRequestBatch.addLeaderAndIsrRequestForBrokers(Seq(replicaId),
            replica.topicPartition,
            leaderIsrAndControllerEpoch,
            controllerContext.partitionFullReplicaAssignment(replica.topicPartition),
            isNew = true)
          logSuccessfulTransition(replicaId, partition, currentState, NewReplica)
          controllerContext.putReplicaState(replica, NewReplica)
        }
      case None =>
        // No leadership info yet: only update the in-memory state
        logSuccessfulTransition(replicaId, partition, currentState, NewReplica)
        controllerContext.putReplicaState(replica, NewReplica)
    }
  }
```

1. Update the replica state in memory.
2. In some cases, send a LeaderAndIsr request with the current leader and ISR to the new replica, and an UpdateMetadata request for the partition to every live Broker.

##### Previous state ==> NonExistentPartition

1. `OfflinePartition`


### PartitionStateMachine: the partition state machine

`PartitionStateMachine.startup`

```scala
def startup(): Unit = {
  initializePartitionState()
  triggerOnlinePartitionStateChange()
}
```

`PartitionStateMachine.initializePartitionState()`

> Initialize the partition states.

```scala
/**
 * Invoked on startup of the partition's state machine to set the initial state for all existing partitions in
 * zookeeper
 */
private def initializePartitionState(): Unit = {
  for (topicPartition <- controllerContext.allPartitions) {
    // check if leader and isr path exists for partition. If not, then it is in NEW state
    controllerContext.partitionLeadershipInfo.get(topicPartition) match {
      case Some(currentLeaderIsrAndEpoch) =>
        if (controllerContext.isReplicaOnline(currentLeaderIsrAndEpoch.leaderAndIsr.leader, topicPartition))
        // leader is alive
          controllerContext.putPartitionState(topicPartition, OnlinePartition)
        else
          controllerContext.putPartitionState(topicPartition, OfflinePartition)
      case None =>
        controllerContext.putPartitionState(topicPartition, NewPartition)
    }
  }
}
```


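1. If the partition has no `LeaderIsr` information, its state is `NewPartition`.
2. If the partition has `LeaderIsr` information, check whether the leader is alive:
   2.1 if it is alive, the state is `OnlinePartition`;
   2.2 otherwise it is `OfflinePartition`.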
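
The decision above fits in a single pattern match. The sketch below is a simplification under assumptions: `PartitionInitSketch` is a hypothetical name, the leader is passed as an `Option[Int]`, and "leader is alive" is reduced to membership in a set of live broker ids, whereas the real check goes through `controllerContext.isReplicaOnline`.

```scala
object PartitionInitSketch {
  sealed trait PartitionState
  case object NewPartition extends PartitionState
  case object OnlinePartition extends PartitionState
  case object OfflinePartition extends PartitionState

  // `leader` is None when the partition has no leader/ISR info yet
  def initialState(leader: Option[Int], liveBrokers: Set[Int]): PartitionState = leader match {
    case None                                 => NewPartition
    case Some(id) if liveBrokers.contains(id) => OnlinePartition
    case Some(_)                              => OfflinePartition
  }
}
```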


`PartitionStateMachine.triggerOnlinePartitionStateChange()`

>Try to move every partition in the `NewPartition` or `OfflinePartition` state to `OnlinePartition`, except partitions that belong to topics queued for deletion.

```scala
def triggerOnlinePartitionStateChange(): Unit = {
  val partitions = controllerContext.partitionsInStates(Set(OfflinePartition, NewPartition))
  triggerOnlineStateChangeForPartitions(partitions)
}

private def triggerOnlineStateChangeForPartitions(partitions: collection.Set[TopicPartition]): Unit = {
  // try to move all partitions in NewPartition or OfflinePartition state to OnlinePartition state except partitions
  // that belong to topics to be deleted
  val partitionsToTrigger = partitions.filter { partition =>
    !controllerContext.isTopicQueuedUpForDeletion(partition.topic)
  }.toSeq

  handleStateChanges(partitionsToTrigger, OnlinePartition, Some(OfflinePartitionLeaderElectionStrategy(false)))
  // TODO: If handleStateChanges catches an exception, it is not enough to bail out and log an error.
  // It is important to trigger leader election for those partitions.
}
```


#### The PartitionStateMachine state change handler

`PartitionStateMachine.doHandleStateChanges`

`controllerBrokerRequestBatch.sendRequestsToBrokers(controllerContext.epoch)`

>It makes sure that every transition goes from a legal previous state to the target state. The valid transitions are:
>1. `NonExistentPartition -> NewPartition`: load the assigned replicas from ZK into the Controller cache
>2. `NewPartition -> OnlinePartition`: make the first live replica the leader and all live replicas the ISR; write the leader and ISR for this partition to ZK; send a LeaderAndIsr request to every live replica and an UpdateMetadata request to every live Broker
>3. `OnlinePartition,OfflinePartition -> OnlinePartition`: pick a new leader and ISR for the partition, along with the set of replicas that will receive the LeaderAndIsr request, and write the leader and ISR to ZK;
> for this partition, send a LeaderAndIsr request to every receiving replica and an UpdateMetadata request to every live Broker
>4. `NewPartition,OnlinePartition,OfflinePartition -> OfflinePartition`: mark the partition state as Offline
>5. `OfflinePartition -> NonExistentPartition`: mark the partition state as NonExistentPartition


##### 先前状态==》NewPartition

|

||||

>将分配的副本从 ZK 加载到控制器缓存

|

||||

|

||||

##### 先前状态==》OnlinePartition

|

||||

> 将第一个活动副本指定为领导者,将所有活动副本指定为 isr;将此分区的leader和isr写入ZK ;向每个实时副本发送 LeaderAndIsr 请求,向每个实时Broker发送 UpdateMetadata 请求

|

||||

|

||||

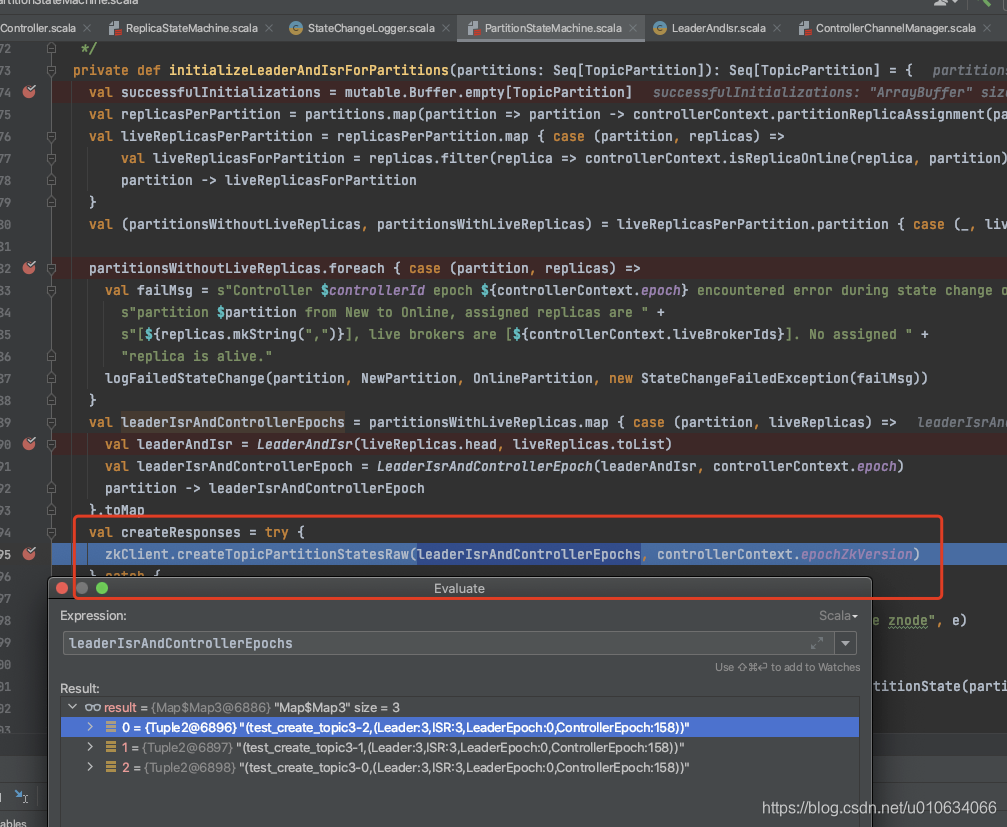

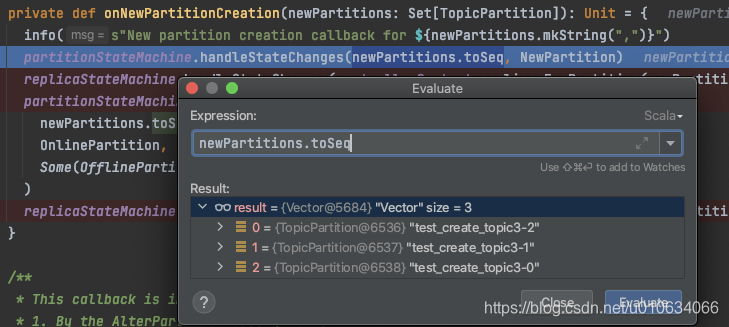

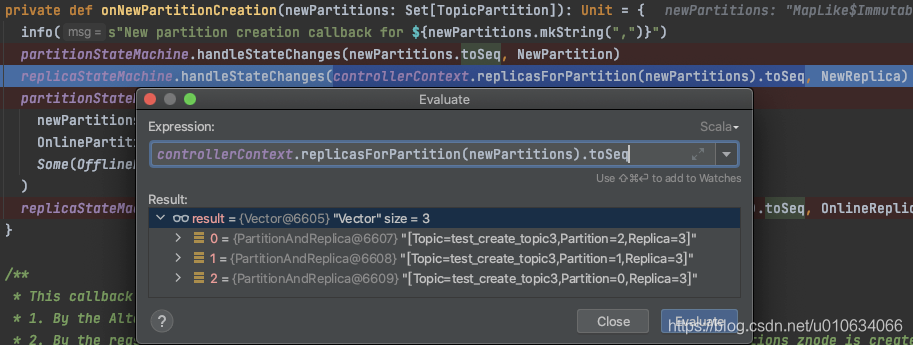

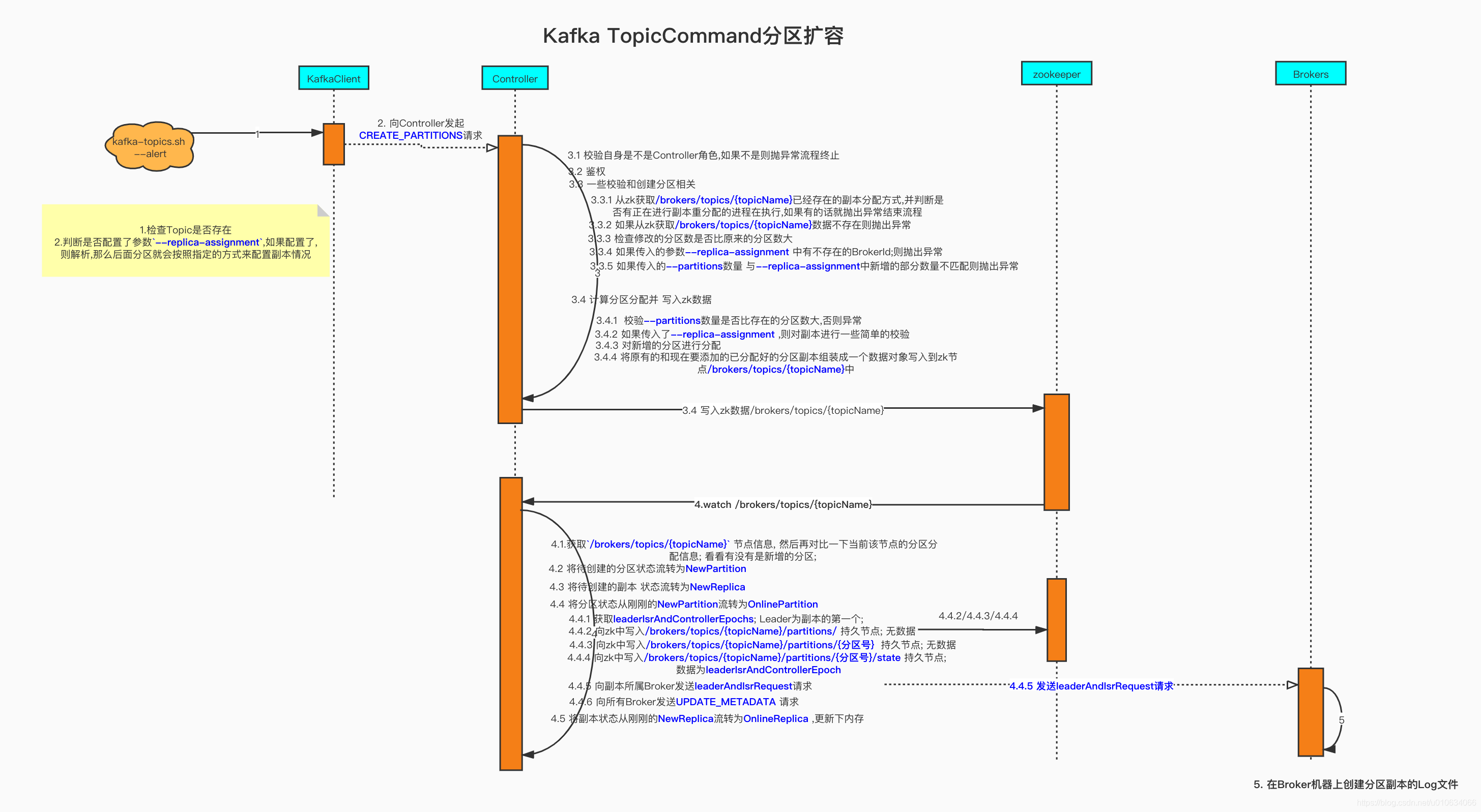

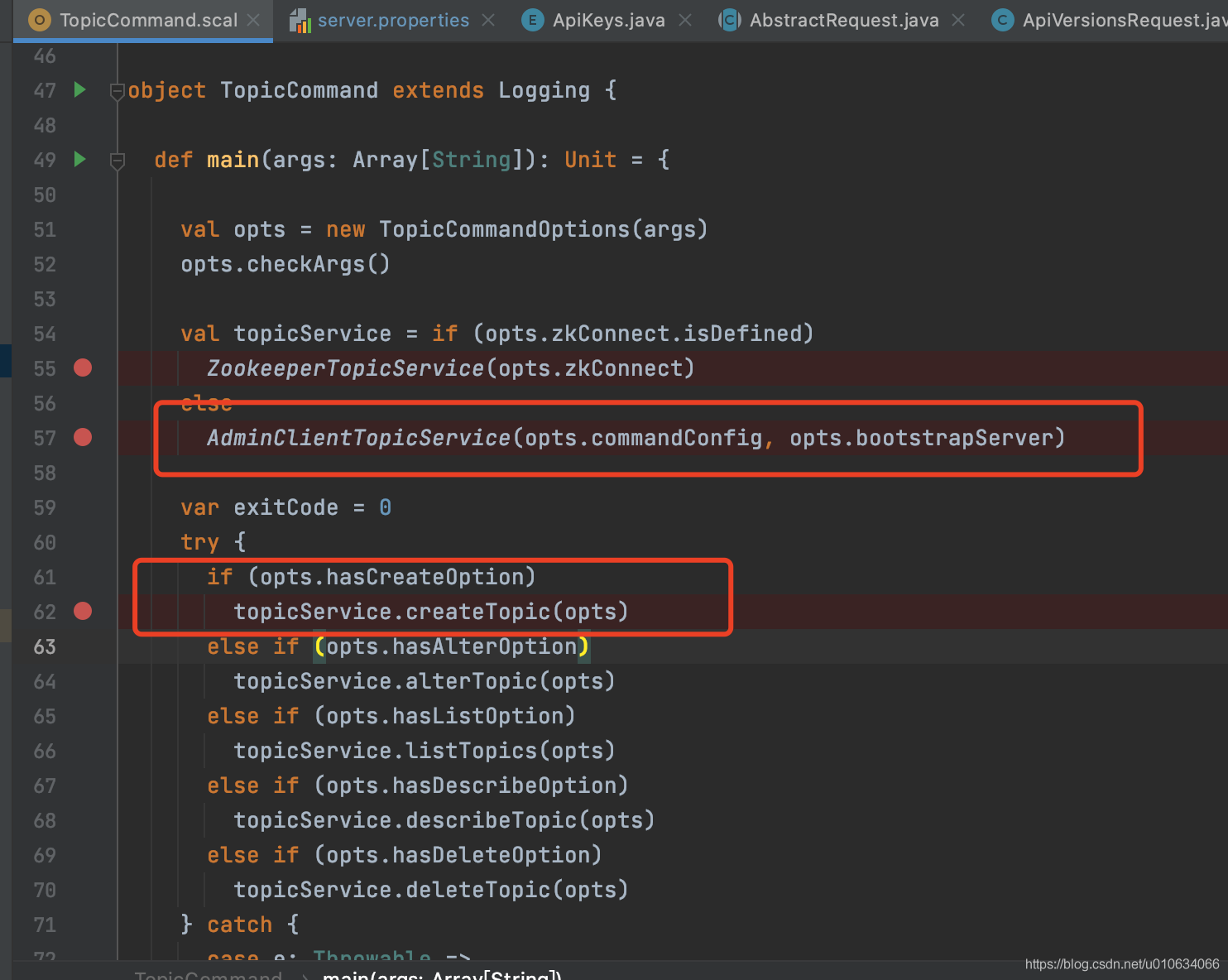

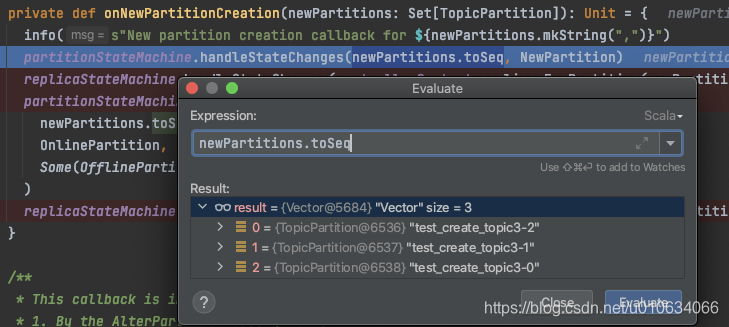

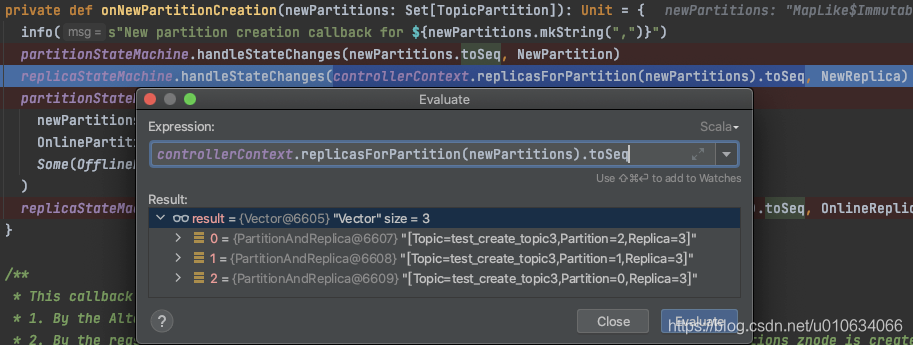

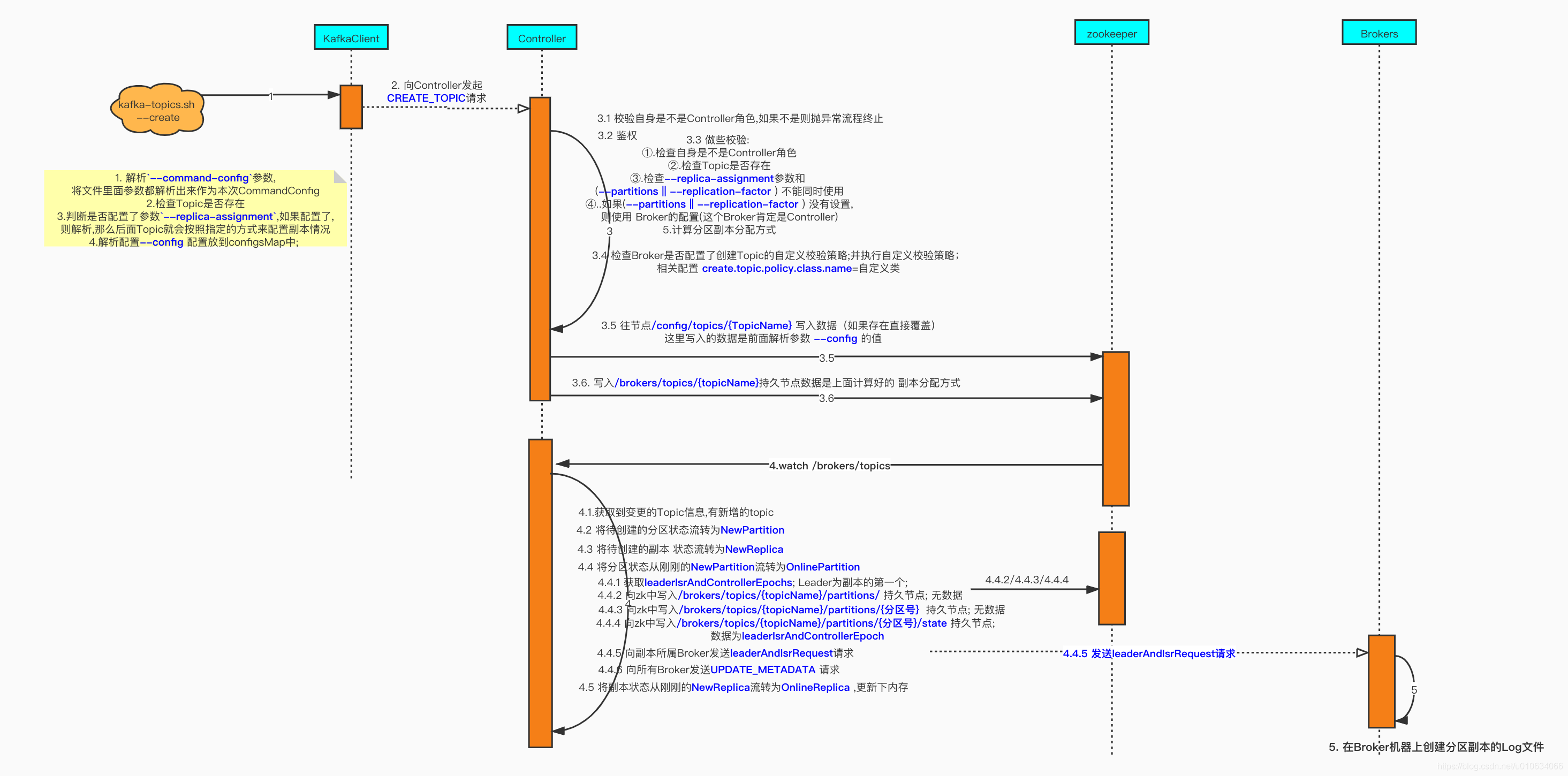

创建一个新的Topic的时候,我们主要看下面这个接口`initializeLeaderAndIsrForPartitions`

|

||||

|

||||

|

||||

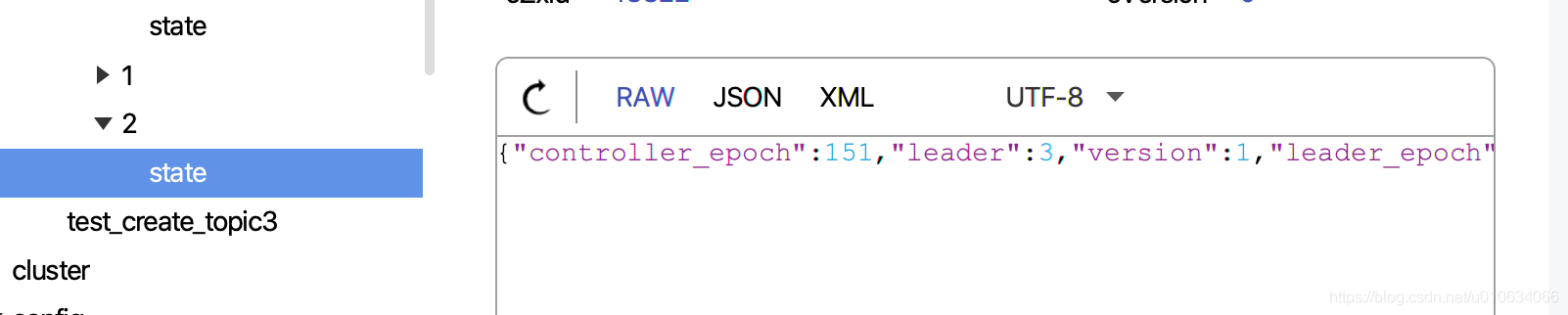

0. 获取`leaderIsrAndControllerEpochs`; Leader为副本的第一个;

|

||||

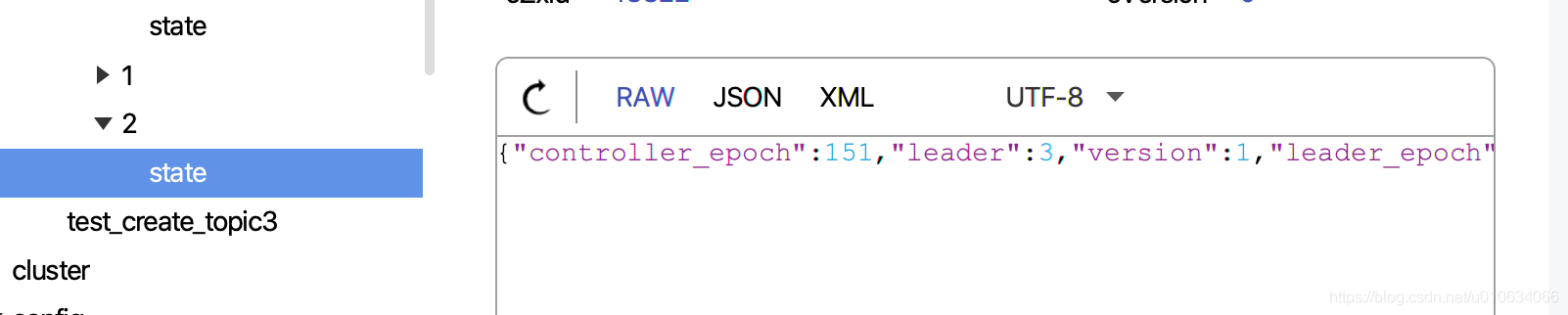

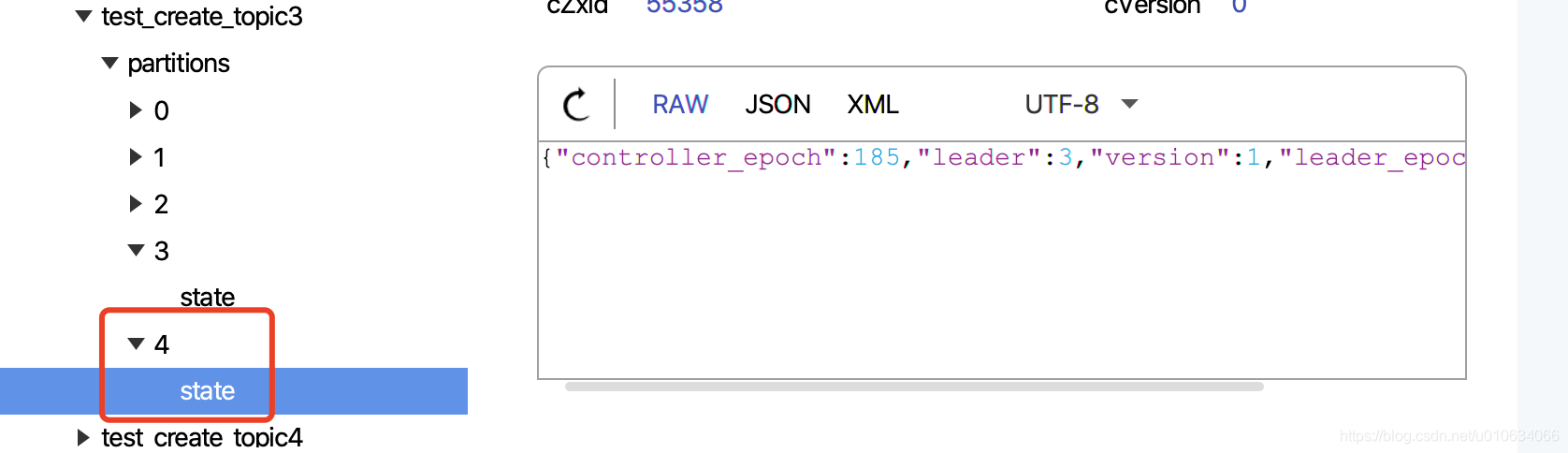

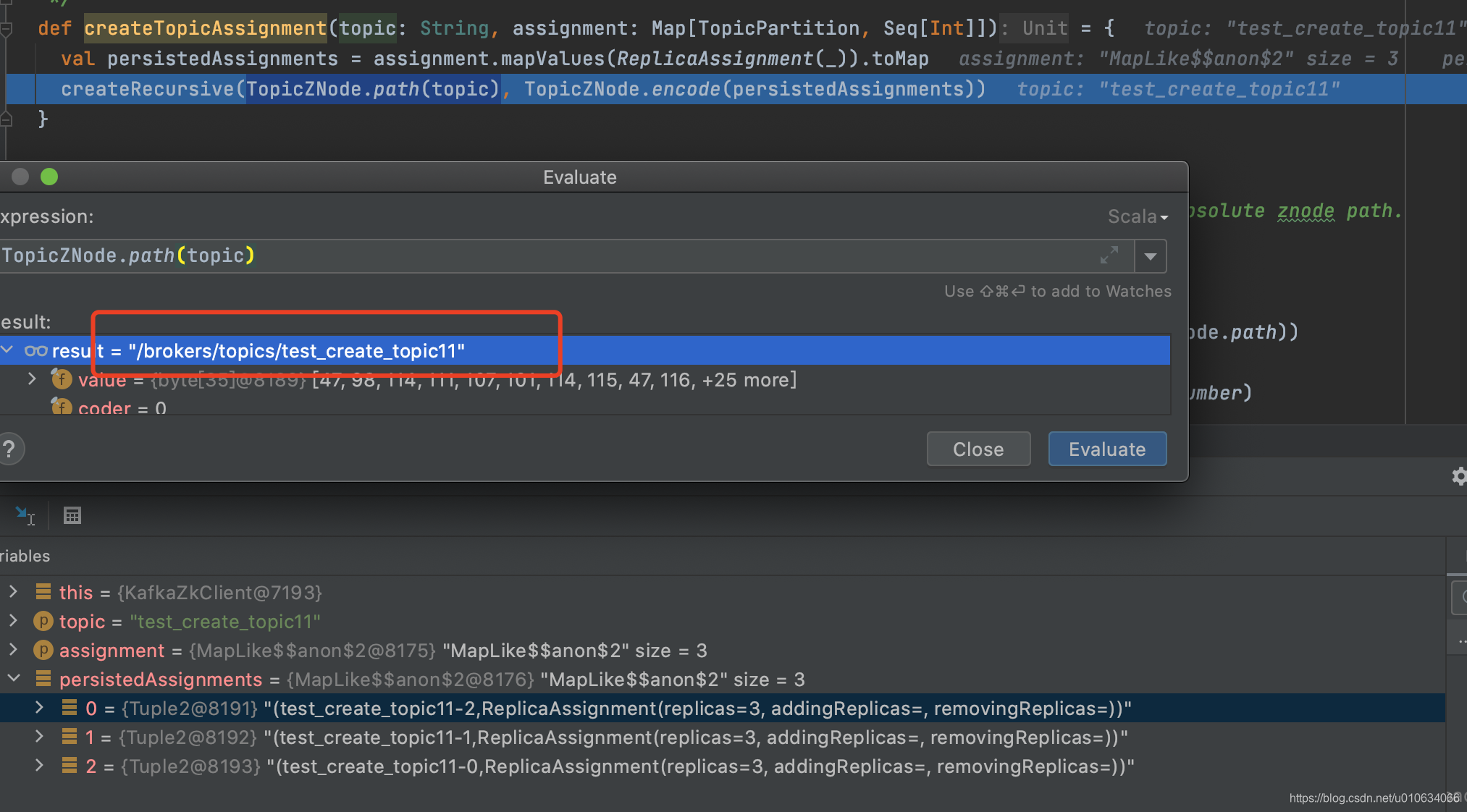

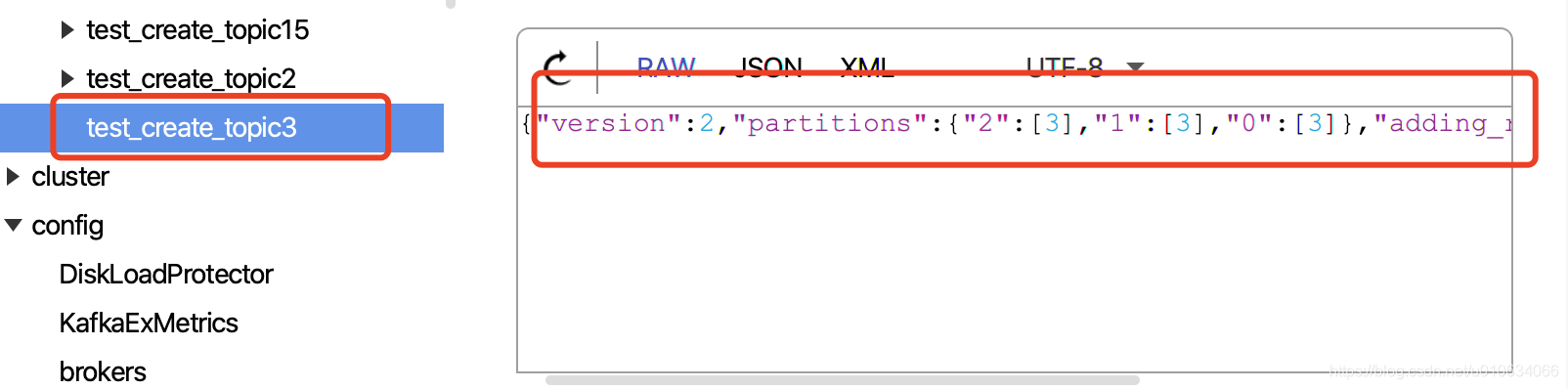

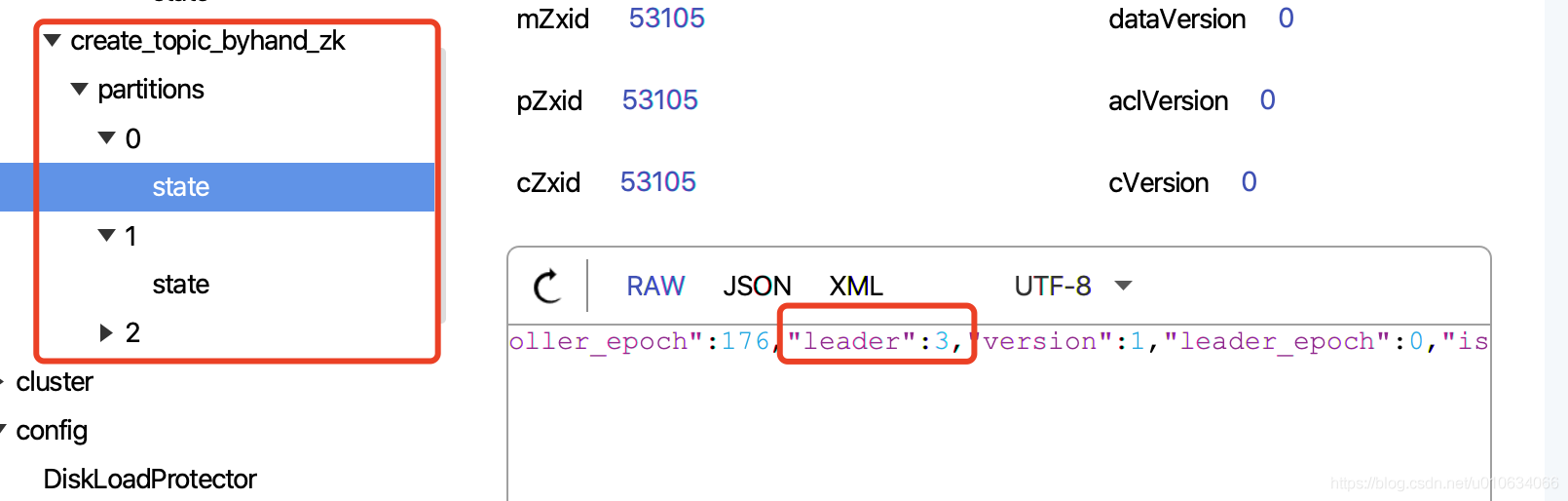

1. 向zk中写入`/brokers/topics/{topicName}/partitions/` 持久节点; 无数据

|

||||

2. 向zk中写入`/brokers/topics/{topicName}/partitions/{分区号}` 持久节点; 无数据

|

||||

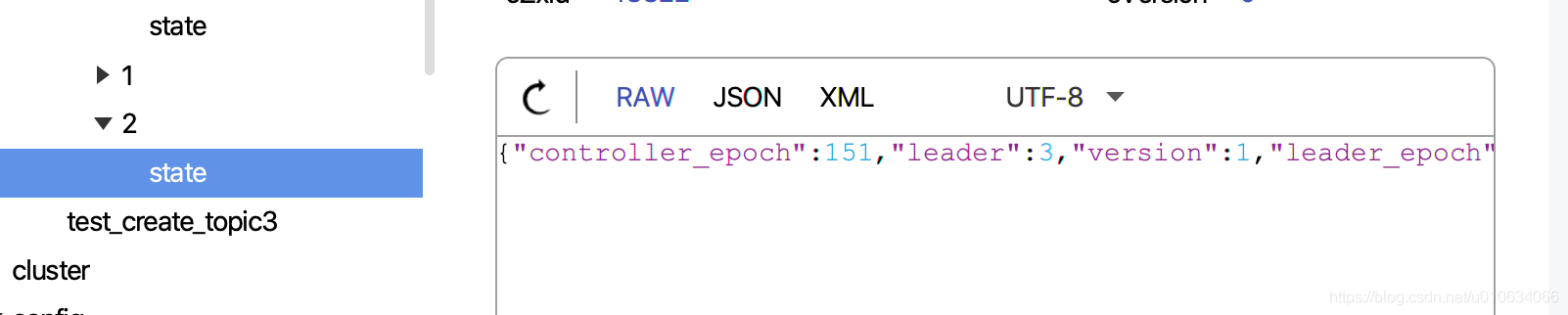

3. 向zk中写入`/brokers/topics/{topicName}/partitions/{分区号}/state` 持久节点; 数据为`leaderIsrAndControllerEpoch`

|

||||

4. 向副本所属Broker发送[`leaderAndIsrRequest`]()请求

|

||||

5. 向所有Broker发送[`UPDATE_METADATA` ]()请求

|

||||

|

||||

|

||||

##### Previous state ==> OfflinePartition

>Mark the partition state as Offline; the state is kept in the `partitionStates` map. Allowed previous states: `NewPartition,OnlinePartition,OfflinePartition`.

##### Previous state ==> NonExistentPartition

>Mark the partition state as NonExistentPartition; the state is kept in the `partitionStates` map. Allowed previous state: `OfflinePartition`.

## Source Code Summary

## Q&A

339

docs/zh/Kafka分享/Kafka Controller /Controller启动过程以及选举流程源码分析.md

Normal file


@@ -0,0 +1,339 @@


[TOC]

|

||||

|

||||

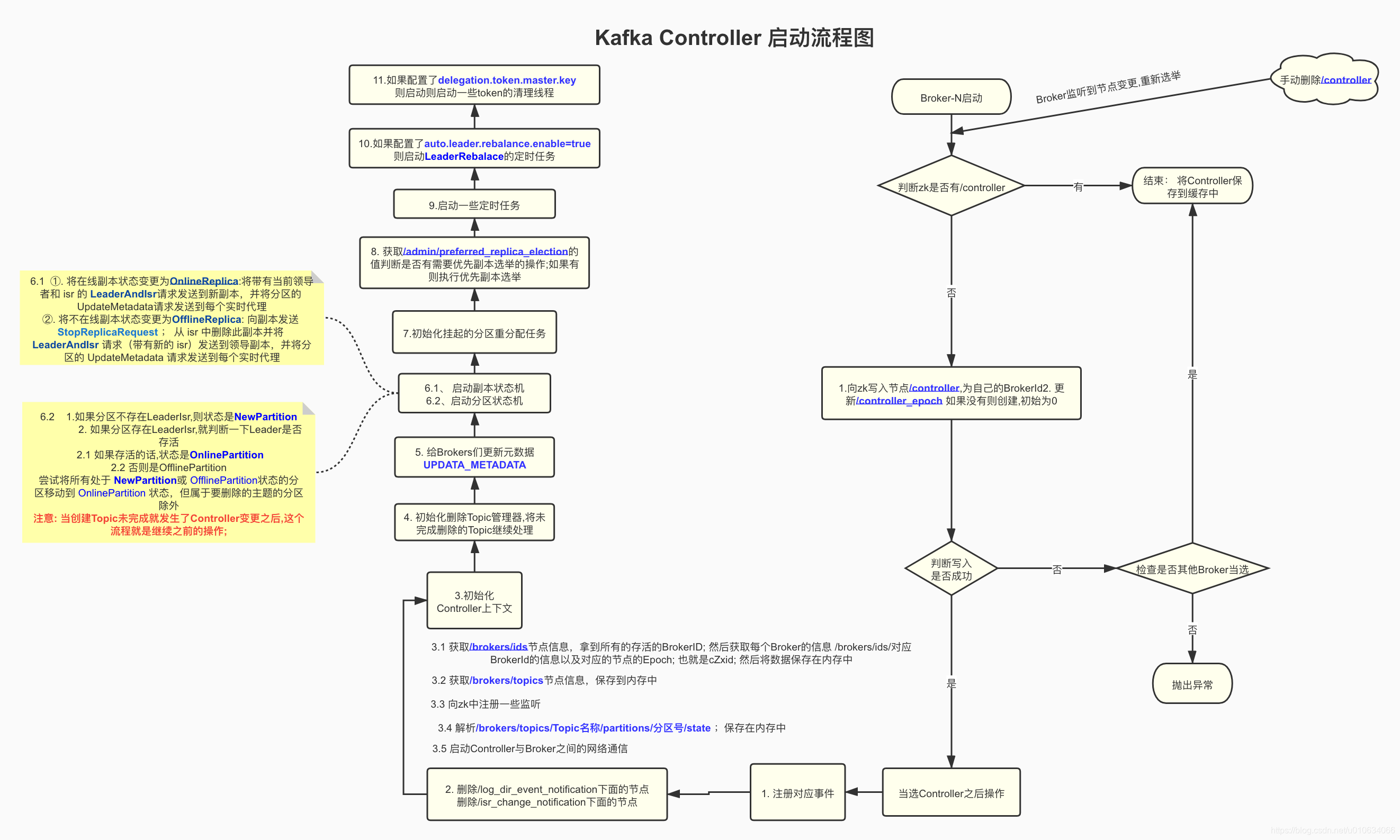

## 前言

|

||||

>本篇文章,我们开始来分析分析Kafka的`Controller`部分的源码,Controller 作为 Kafka Server 端一个重要的组件,它的角色类似于其他分布式系统 Master 的角色,跟其他系统不一样的是,Kafka 集群的任何一台 Broker 都可以作为 Controller,但是在一个集群中同时只会有一个 Controller 是 alive 状态。Controller 在集群中负责的事务很多,比如:集群 meta 信息的一致性保证、Partition leader 的选举、broker 上下线等都是由 Controller 来具体负责。

|

||||

|

||||

## 源码分析

|

||||

老样子,我们还是先来撸一遍源码之后,再进行总结

|

||||

<font color="red">如果觉得阅读源码解析太枯燥,请直接看 **源码总结及其后面部分**</font>

|

||||

|

||||

|

||||

### 1. Entry point: KafkaServer.startup

When the Kafka service starts, the first thing that runs is `KafkaServer.startup`. It contains the whole Kafka startup sequence; here we focus on how the Controller is started.

```scala
def startup(): Unit = {
  try {
    // Some code omitted....
    /* start kafka controller */
    kafkaController = new KafkaController(config, zkClient, time, metrics, brokerInfo, brokerEpoch, tokenManager, threadNamePrefix)
    kafkaController.startup()
    // Some code omitted....
  }
}
```


### 2. kafkaController.startup()

```scala
/**
 * Called whenever a Kafka broker starts. Note that this does not assume the current broker is the controller.
 * It only registers the session expiration listener and starts the controller's attempt to get elected.
 */
def startup() = {
  // Register the state change handler; this puts the `StateChangeHandler` into the `stateChangeHandlers` map
  zkClient.registerStateChangeHandler(new StateChangeHandler {
    override val name: String = StateChangeHandlers.ControllerHandler
    override def afterInitializingSession(): Unit = {
      eventManager.put(RegisterBrokerAndReelect)
    }
    override def beforeInitializingSession(): Unit = {
      val queuedEvent = eventManager.clearAndPut(Expire)

      // Block initialization of the new session until the expiration event is being handled,
      // which ensures that all pending events have been processed before creating the new session
      queuedEvent.awaitProcessing()
    }
  })
  // Put a Startup event into the event manager's queue; it is not executed yet at this point
  eventManager.put(Startup)
  // Start the event manager, which starts a `ControllerEventThread`
  eventManager.start()
}
```

|

||||

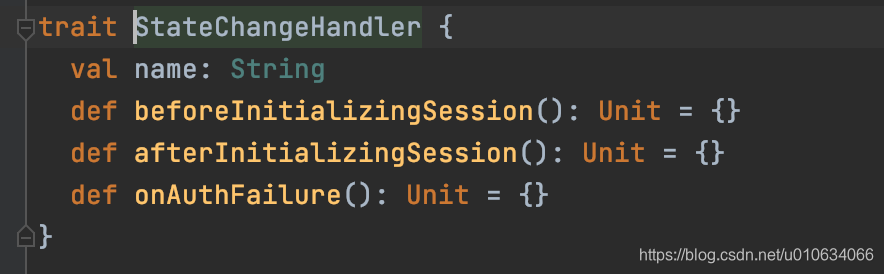

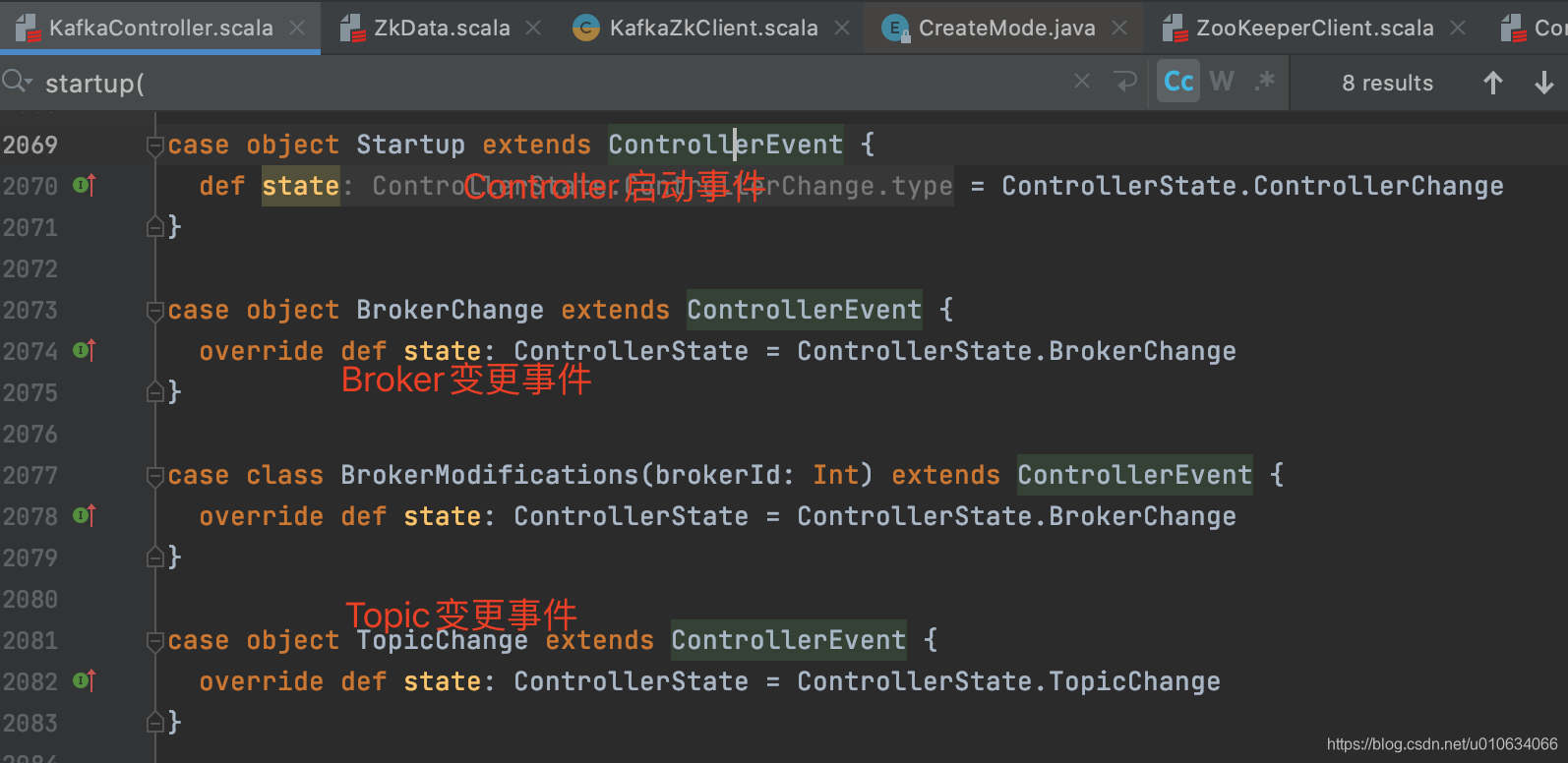

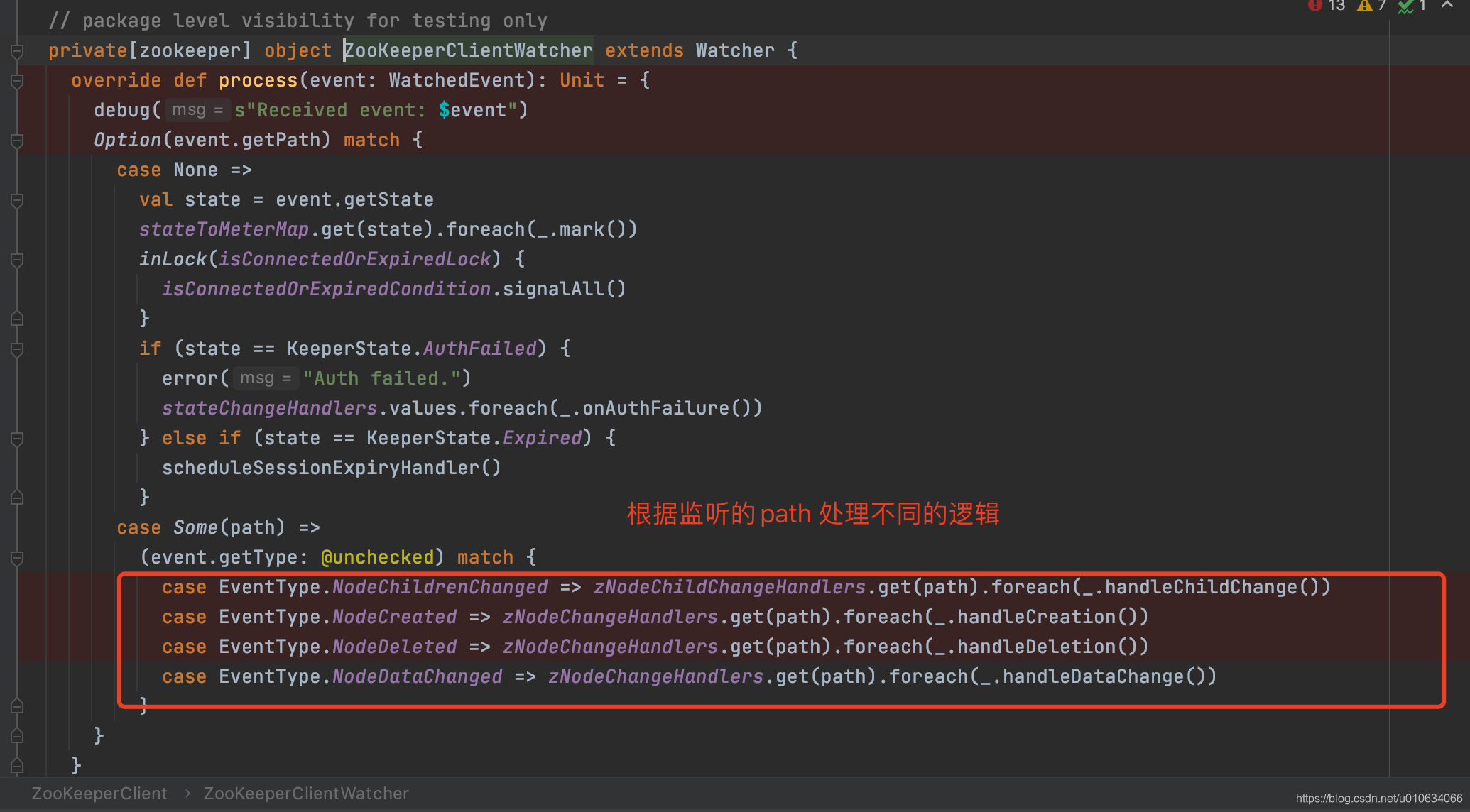

1. `zkClient.registerStateChangeHandler` 注册一个`StateChangeHandler` 状态变更处理器; 有一个map `stateChangeHandlers`来维护这个处理器列表; 这个类型的处理器有下图三个方法,可以看到我们这里实现了`beforeInitializingSession`和`afterInitializingSession`方法,具体调用的时机,我后面再分析(监听zk的数据变更)

|

||||

2. `ControllerEventManager`是Controller的事件管理器; 里面维护了一个阻塞队列`queue`; 这个queue里面存放的是所有的Controller事件; 按顺序排队执行入队的事件; 上面的代码中`eventManager.put(Startup)` 在队列中放入了一个`Startup`启动事件; 所有的事件都是集成了`ControllerEvent`类的

|

||||

3. 启动事件管理器, 从待执行事件队列`queue`中获取事件进行执行,刚刚不是假如了一个`StartUp`事件么,这个事件就会执行这个事件
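
The "enqueue now, execute later, strictly in order" behaviour can be shown with a few lines around a blocking queue. This is a sketch, not the `ControllerEventManager` itself: `EventLoopSketch` and its three events are hypothetical, and the loop runs on the calling thread instead of a dedicated one.

```scala
import java.util.concurrent.LinkedBlockingQueue
import scala.collection.mutable.ArrayBuffer

object EventLoopSketch {
  sealed trait Event
  case object Startup extends Event
  case object Reelect extends Event
  case object Shutdown extends Event

  val queue = new LinkedBlockingQueue[Event]()
  val processed = ArrayBuffer.empty[Event]

  // Drains events in FIFO order until Shutdown is seen; take() blocks on an empty queue
  def runLoop(): Unit = {
    var running = true
    while (running) {
      queue.take() match {
        case Shutdown => running = false
        case event    => processed += event // "processing" here just records the event
      }
    }
  }
}
```

Events put into the queue before the loop starts simply wait there, which is why `eventManager.put(Startup)` can safely run before `eventManager.start()`.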


### 3. ControllerEventThread: the thread that executes events

After `eventManager.start()`, the following method runs:

```scala
class ControllerEventThread(name: String) extends ShutdownableThread(name = name, isInterruptible = false) {
  override def doWork(): Unit = {
    // Take one event from the pending queue; this blocks while there are no events
    val dequeued = queue.take()
    dequeued.event match {
      case ShutdownEventThread => // The shutting down of the thread has been initiated at this point. Ignore this event.
      case controllerEvent =>
        // Read the event's ControllerState; it differs per event
        _state = controllerEvent.state
        eventQueueTimeHist.update(time.milliseconds() - dequeued.enqueueTimeMs)
        try {
          // Define process(); what finally runs is the process method provided by the event
          def process(): Unit = dequeued.process(processor)

          // Pick a KafkaTimer by state, mainly for metrics; all that matters here is that process() is executed
          rateAndTimeMetrics.get(state) match {
            case Some(timer) => timer.time { process() }
            case None => process()
          }
        } catch {
          case e: Throwable => error(s"Uncaught error processing event $controllerEvent", e)
        }

        _state = ControllerState.Idle
    }
  }
}
```

|

||||

1. `val dequeued = queue.take()`从待执行队列里面take一个事件; 没有事件的时候这里会阻塞

|

||||

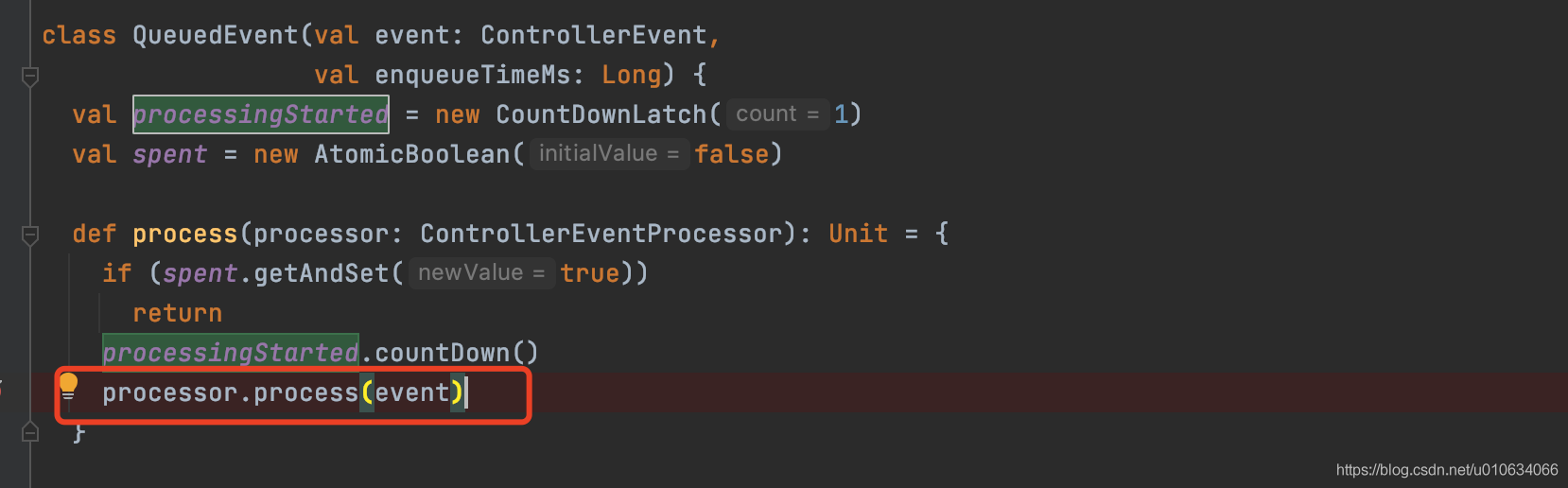

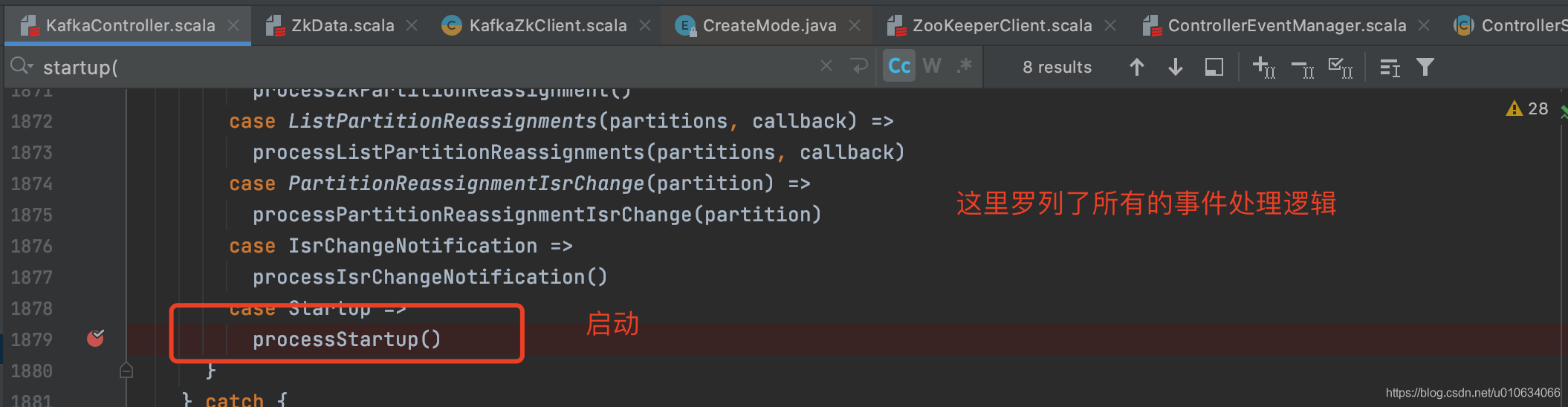

2. `dequeued.process(processor)`调用具体事件实现的 `process方法`如下图, 不过要注意的是这里使用了`CountDownLatch(1)`, 那肯定有个地方调用了`processingStarted.await()` 来等待这里的`process()执行完成`;上面的startUp方法就调用了;
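
The latch handshake can be sketched as follows. This is a simplified stand-in (`QueuedEventSketch` is a hypothetical name), and it assumes, going by the field name `processingStarted`, that the latch is released when processing begins rather than when it ends.

```scala
import java.util.concurrent.CountDownLatch

object QueuedEventSketch {
  // Hypothetical simplification of a queued controller event
  final class QueuedEvent(body: () => Unit) {
    private val processingStarted = new CountDownLatch(1)

    // Called by the event thread
    def process(): Unit = {
      processingStarted.countDown() // release anyone blocked in awaitProcessing()
      body()
    }

    // Called by another thread that must not continue before the event is picked up
    def awaitProcessing(): Unit = processingStarted.await()
  }
}
```

In `beforeInitializingSession`, this is what lets the ZooKeeper session handling block until the `Expire` event has reached the front of the queue.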


### 4. processStartup: the startup flow

The flow that starts the Controller:

```scala
private def processStartup(): Unit = {
  // Register the znode change handler and watch whether the Controller node exists in zk
  zkClient.registerZNodeChangeHandlerAndCheckExistence(controllerChangeHandler)
  // Election logic
  elect()
}
```

|

||||

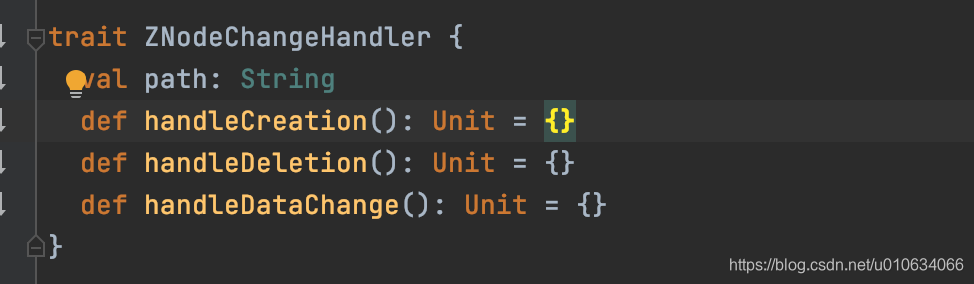

1. 注册`ZNodeChangeHandler` 节点变更事件处理器,在map `zNodeChangeHandlers`中保存了key=`/controller`;value=`ZNodeChangeHandler`的键值对; 其中`ZNodeChangeHandler`处理器有如下三个接口

|

||||

|

||||

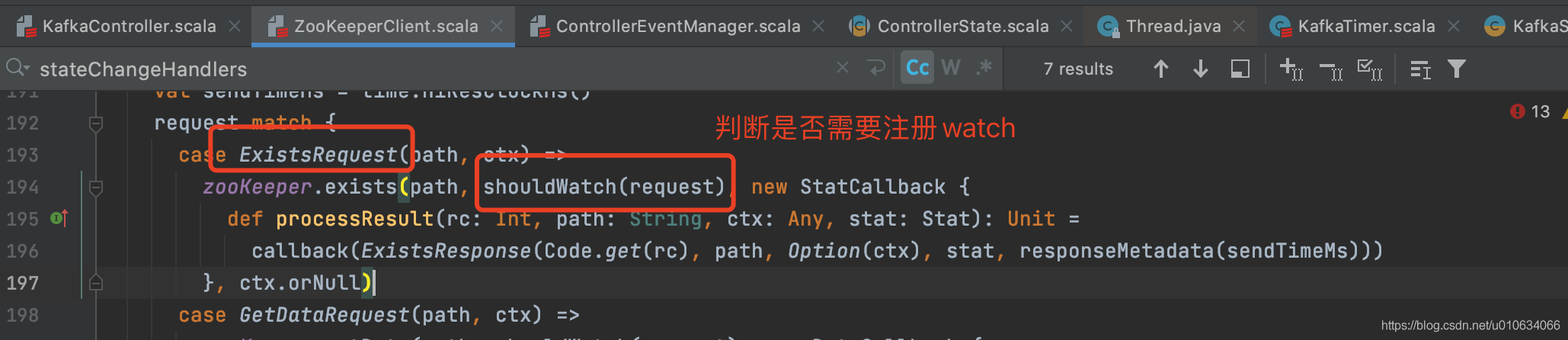

2. 然后向zk发起一个`ExistsRequest(/controller)`的请求,去查询一下`/controller`节点是否存在; 并且如果不存在的话,就注册一个`watch` 监视这个节点;从下面的代码可以看出

|

||||

|

||||

|

||||

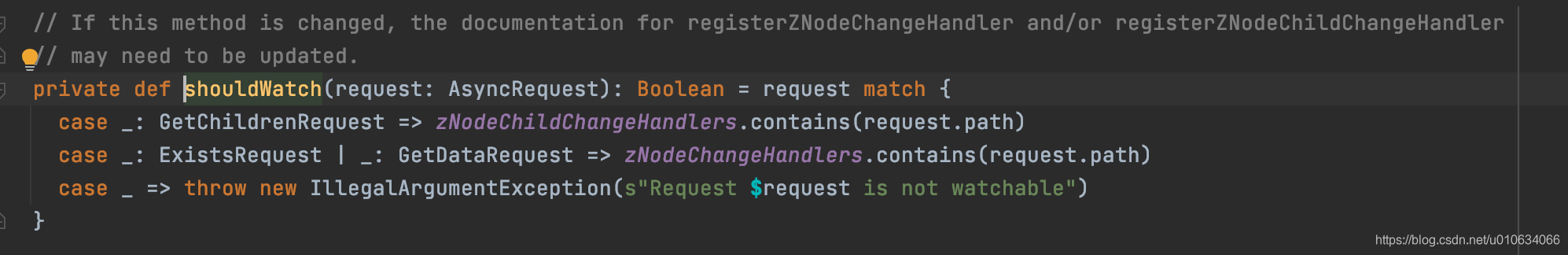

因为上一步中我们在map `zNodeChangeHandlers`中保存了key=`/controller`; 所以上图中可知,需要注册`watch`来进行`/controller`节点的监控;

|

||||

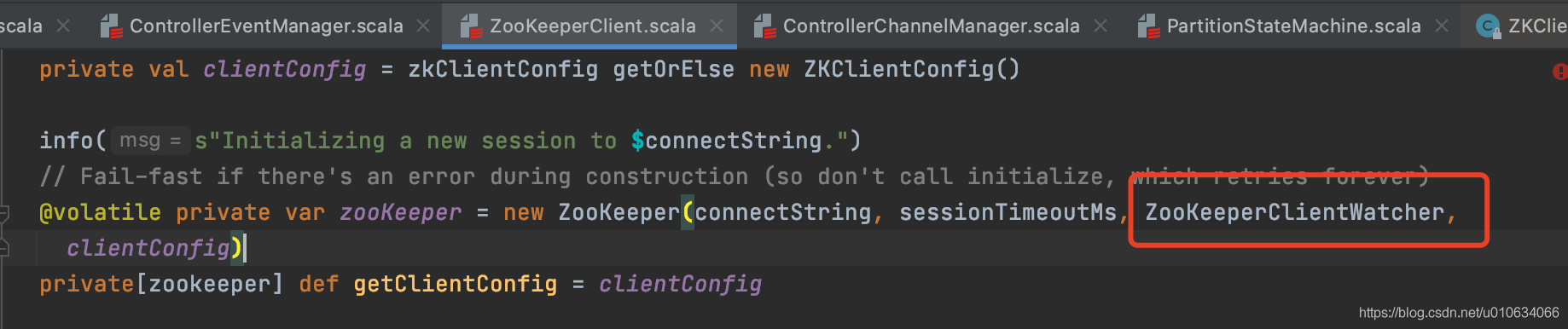

kafka是是怎实现监听的呢?`zookeeper`构建的时候传入了自定义的`WATCH`

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

3. 选举; 选举的过程其实就是几个Broker抢占式去成为Controller; 谁先创建`/controller`这个节点; 谁就成为Controller; 我们下面仔细分析以下选择

|

||||

|

||||

### 5. Controller election: elect()

```scala
private def elect(): Unit = {
  // Read the /controller node from zk; use -1 if it does not exist
  activeControllerId = zkClient.getControllerId.getOrElse(-1)
  // If a value was found, a Controller already exists, so stop the election
  if (activeControllerId != -1) {
    debug(s"Broker $activeControllerId has been elected as the controller, so stopping the election process.")
    return
  }

  try {
    // Try to write this broker's id into zk as the Controller, and update the Controller epoch
    val (epoch, epochZkVersion) = zkClient.registerControllerAndIncrementControllerEpoch(config.brokerId)
    controllerContext.epoch = epoch
    controllerContext.epochZkVersion = epochZkVersion
    activeControllerId = config.brokerId
    // Election won: do the post-election work
    onControllerFailover()
  } catch {
    case e: ControllerMovedException =>
      // Try to resign from the Controller role
      maybeResign()
      // rest omitted...
  }
}
```

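1. Read the data of the `/controller` node from zk; if the node is absent, use -1.
2. If data was found, some Controller has already registered successfully, so the election ends immediately.
3. Try to write this Broker's own brokerId into zk as the Controller, and update the Controller epoch:
   - Read the zk node `/controller_epoch`, which records how many times the Controller has changed. If it does not exist, create it (a **persistent node**), starting from `controller_epoch=0` and `ControllerEpochZkVersion=0`.
   - Send zk a `MultiRequest` containing two operations: one creates the `/controller` node with this Broker's brokerId as its content; the other updates the data in `/controller_epoch`, incrementing the value by 1.
   - If the write fails with an error saying the node already exists, another Broker has already become Controller first. In that case `checkControllerAndEpoch` verifies whether another Controller really got there first; if so, a `ControllerMovedException` is thrown. After this exception the current Broker tries to resign from the Controller role (it may have been the Controller before, and after the Controller moves every Broker needs to attempt a resignation).
4. Once the Controller is decided, the follow-up work is done in `onControllerFailover`.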

||||
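The race described in the steps above can be sketched with an in-memory stand-in for ZooKeeper. This is only an illustration under stated assumptions: `ElectionSketch`, `FakeZk` and its methods are hypothetical names, the "atomic create plus epoch bump" is modelled with a `ConcurrentHashMap` instead of a real `MultiRequest`, and the epoch numbering is simplified.

```scala
import java.util.concurrent.ConcurrentHashMap
import java.util.concurrent.atomic.AtomicInteger

object ElectionSketch {
  // Hypothetical in-memory stand-in for the two znodes involved in the election.
  final class FakeZk {
    private val nodes = new ConcurrentHashMap[String, Integer]()
    private val epoch = new AtomicInteger(0) // plays the role of /controller_epoch

    def controllerId: Option[Int] = Option(nodes.get("/controller")).map(_.intValue)

    // Mimics the atomic "create /controller and bump the epoch" step:
    // returns the new epoch on success, None if the node already exists.
    def registerController(brokerId: Int): Option[Int] = synchronized {
      if (nodes.putIfAbsent("/controller", Integer.valueOf(brokerId)) == null)
        Some(epoch.incrementAndGet())
      else None
    }

    def deleteController(): Unit = { nodes.remove("/controller"); () }
  }

  // Returns Some(epoch) if this broker won the election, None otherwise.
  def elect(zk: FakeZk, brokerId: Int): Option[Int] =
    if (zk.controllerId.isDefined) None   // a Controller already exists: stop
    else zk.registerController(brokerId)  // race: only the first writer succeeds
}
```

Whichever broker calls `elect` first wins; later callers get `None` until the `/controller` entry is deleted again.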

### 6. After winning the election: onControllerFailover

Step into `KafkaController.onControllerFailover`:

```scala
private def onControllerFailover(): Unit = {

  // These are all ZNodeChildChangeHandlers (callback: handleChildChange); one handler is registered per event type.
  // The corresponding events are `BrokerChange`, `TopicChange`, `TopicDeletion`, `LogDirEventNotification` and `IsrChangeNotification`
  val childChangeHandlers = Seq(brokerChangeHandler, topicChangeHandler, topicDeletionHandler, logDirEventNotificationHandler,
    isrChangeNotificationHandler)
  // Keep these handlers in the map `zNodeChildChangeHandlers`
  childChangeHandlers.foreach(zkClient.registerZNodeChildChangeHandler)
  // These are all ZNodeChangeHandlers, with callbacks for node creation, deletion and data change.
  // The corresponding events are `ReplicaLeaderElection` and `ZkPartitionReassignment`
  val nodeChangeHandlers = Seq(preferredReplicaElectionHandler, partitionReassignmentHandler)
  // Keep these handlers in the map `zNodeChangeHandlers`
  nodeChangeHandlers.foreach(zkClient.registerZNodeChangeHandlerAndCheckExistence)

  info("Deleting log dir event notifications")
  // Delete all log-dir event notifications: read the children of `/log_dir_event_notification` in zk and delete them all
  zkClient.deleteLogDirEventNotifications(controllerContext.epochZkVersion)
  info("Deleting isr change notifications")
  // Delete all children of the node `/isr_change_notification`
  zkClient.deleteIsrChangeNotifications(controllerContext.epochZkVersion)
  info("Initializing controller context")
  initializeControllerContext()
  info("Fetching topic deletions in progress")
  val (topicsToBeDeleted, topicsIneligibleForDeletion) = fetchTopicDeletionsInProgress()
  info("Initializing topic deletion manager")
  topicDeletionManager.init(topicsToBeDeleted, topicsIneligibleForDeletion)

  // We need to send UpdateMetadataRequest after the controller context is initialized and before the state machines
  // are started. The is because brokers need to receive the list of live brokers from UpdateMetadataRequest before
  // they can process the LeaderAndIsrRequests that are generated by replicaStateMachine.startup() and
  // partitionStateMachine.startup().
  info("Sending update metadata request")
  sendUpdateMetadataRequest(controllerContext.liveOrShuttingDownBrokerIds.toSeq, Set.empty)

  replicaStateMachine.startup()
  partitionStateMachine.startup()

  info(s"Ready to serve as the new controller with epoch $epoch")

  initializePartitionReassignments()
  topicDeletionManager.tryTopicDeletion()
  val pendingPreferredReplicaElections = fetchPendingPreferredReplicaElections()
  onReplicaElection(pendingPreferredReplicaElections, ElectionType.PREFERRED, ZkTriggered)
  info("Starting the controller scheduler")
  kafkaScheduler.startup()
  if (config.autoLeaderRebalanceEnable) {
    scheduleAutoLeaderRebalanceTask(delay = 5, unit = TimeUnit.SECONDS)
  }
  scheduleUpdateControllerMetricsTask()

  if (config.tokenAuthEnabled) {
    info("starting the token expiry check scheduler")
    tokenCleanScheduler.startup()
    tokenCleanScheduler.schedule(name = "delete-expired-tokens",
      fun = () => tokenManager.expireTokens,
      period = config.delegationTokenExpiryCheckIntervalMs,
      unit = TimeUnit.MILLISECONDS)
  }
}
```

|

||||

1. 把事件`BrokerChange`、`TopicChange`、`TopicDeletion`、`LogDirEventNotification`对应的handle处理器都维护在 map类型`zNodeChildChangeHandlers`中

|

||||

2. 把事件 `ReplicaLeaderElection`、`ZkPartitionReassignment`对应的handle处理器都维护在 map类型`zNodeChildChangeHandlers`中

|

||||

3. 删除zk中节点`/log_dir_event_notification`下的所有节点

|

||||

4. 删除zk中节点 `/isr_change_notification`下的所有节点

|

||||

5. 初始化Controller的上下文对象`initializeControllerContext()`

|

||||

- 获取`/brokers/ids`节点信息,拿到所有的存活的BrokerID; 然后获取每个Broker的信息 `/brokers/ids/对应BrokerId`的信息以及对应的节点的Epoch; 也就是`cZxid`; 然后将数据保存在内存中

|

||||

- 获取`/brokers/topics`节点信息;拿到所有Topic之后,放到Map `partitionModificationsHandlers`中,key=topicName;value=对应节点的`PartitionModificationsHandler`; 节点是`/brokers/topics/topic名称`;最终相当于是在事件处理队列`queue`中给每个Topic添加了一个`PartitionModifications`事件; 这个事件是怎么处理的,我们下面分析

|

||||

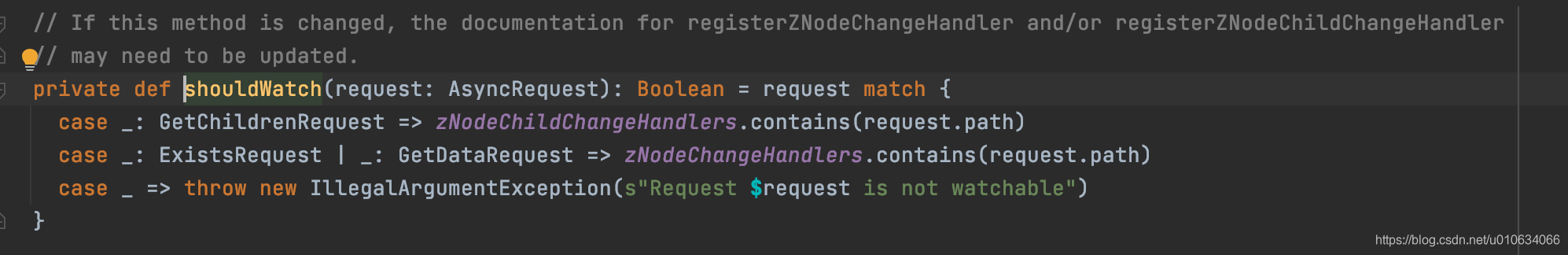

- 同时又注册一下上面的`PartitionModificationsHandler`,保存在map `zNodeChangeHandlers` 中; key= `/brokers/topics/Topic名称`,Value=`PartitionModificationsHandler`; 我们上面也说到过,这个有个功能就是判断需不需要向zk中注册`watch`; 从下图的代码中可以看出,在获取zk数据(`GetDataRequest`)的时候,会去 `zNodeChangeHandlers`判断一下存不存在对应节点key;存在的话就注册`watch`监视数据

|

||||

- zk中获取`/brokers/topics/topic名称`所有topic的分区数据; 保存在内存中

|

||||

- 给每个broker注册broker变更处理器`BrokerModificationsHandler`(也是`ZNodeChangeHandler`)它对应的事件是`BrokerModifications`; 同样的`zNodeChangeHandlers`中也保存着对应的`/brokers/ids/对应BrokerId` 同样的`watch`监控;并且map `brokerModificationsHandlers`保存对应关系 key=`brokerID` value=`BrokerModificationsHandler`

|

||||

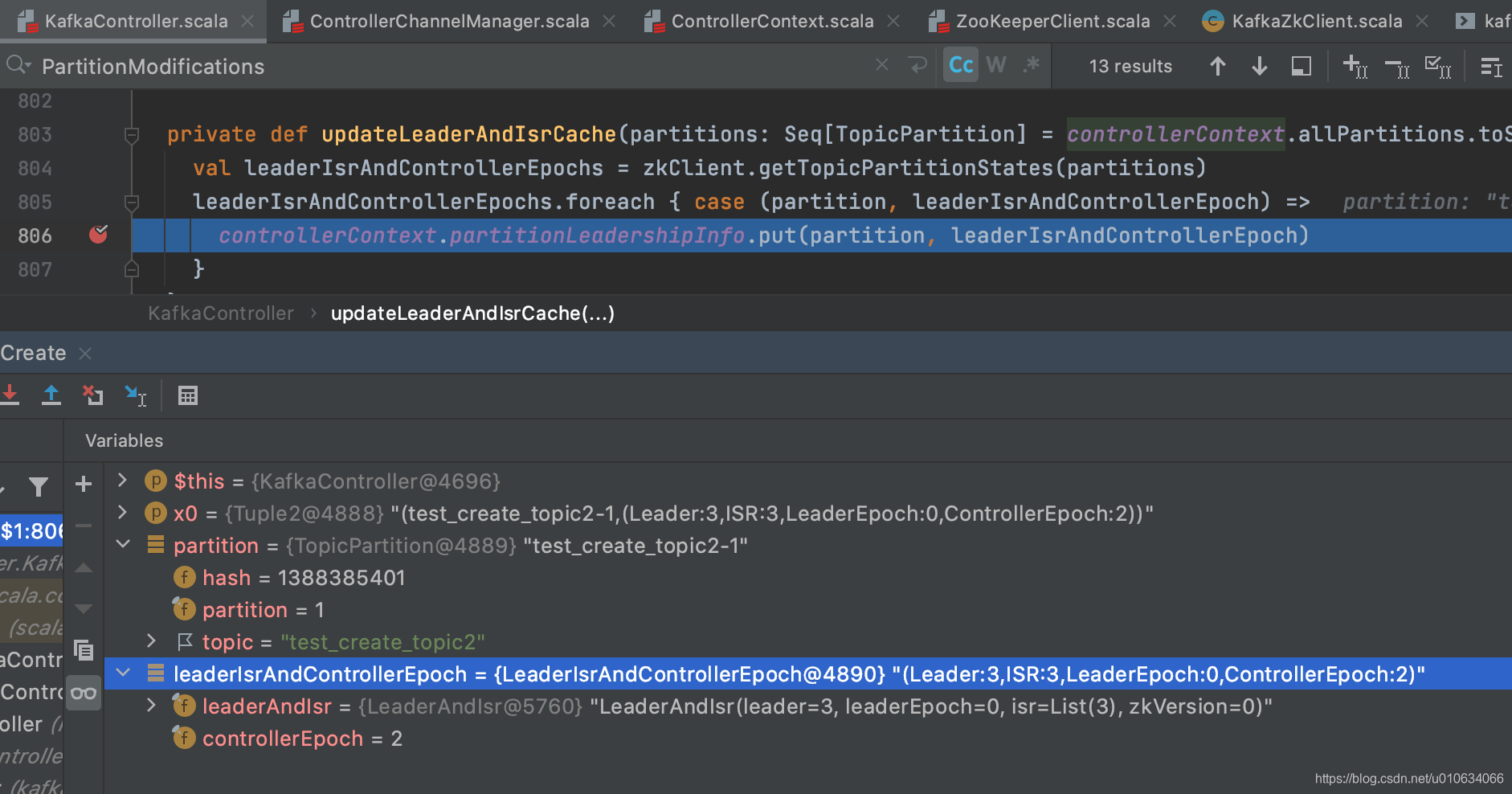

- 从zk中获取所有的topic-partition 信息; 节点: `/brokers/topics/Topic名称/partitions/分区号/state` ; 然后保存在缓存中`controllerContext.partitionLeadershipInfo`

|

||||

|

||||

- `controllerChannelManager.startup()` 这个单独开了一篇文章讲解,请看[【kafka源码】Controller与Brokers之间的网络通信](), 简单来说就是创建一个map来保存于所有Broker的发送请求线程对象`RequestSendThread`;这个对象中有一个 阻塞队列`queue`; 用来排队执行要执行的请求,没有任务时候回阻塞; Controller需要发送请求的时候只需要向这个`queue`中添加任务就行了

|

||||

|

||||

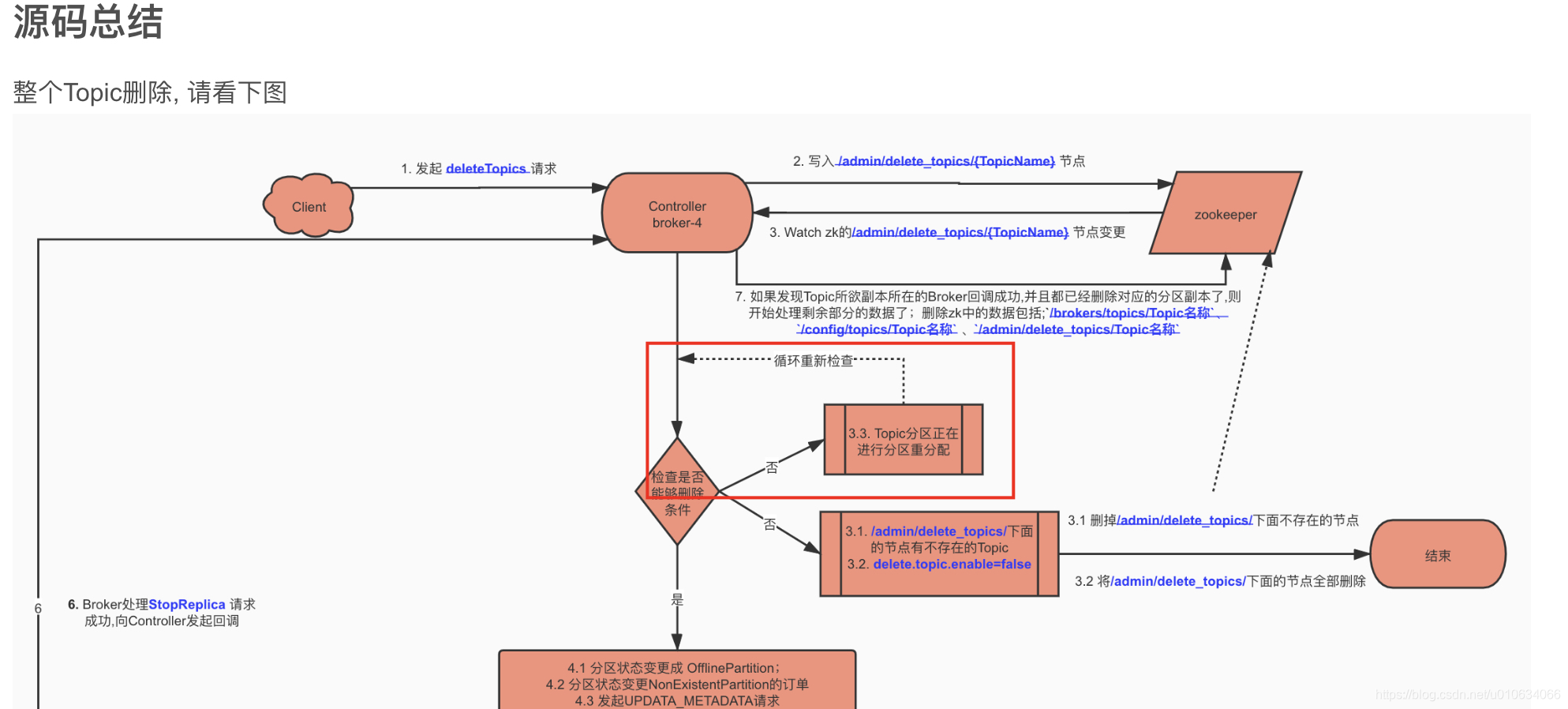

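6. Initialise the topic deletion manager, `topicDeletionManager.init()`:
   - Read the children of the zk node `/admin/delete_topics`; they are the Topics that have been marked for deletion.
   - Start the deletion work for the Topics marked for deletion; for details see [【Kafka source code】 TopicCommand: deleting a Topic]().
7. `sendUpdateMetadataRequest` sends the Brokers an `UPDATE_METADATA` request to update their metadata; for details see [【Kafka source code】 The `UPDATE_METADATA` request]().
8. `replicaStateMachine.startup()` starts the replica state machine and collects all online and offline replicas:

   ①. Online replicas move to `OnlineReplica`: a `LeaderAndIsr` request carrying the current leader and isr is sent to the new replica, and an `UpdateMetadata` request for the partition is sent to every live broker.

   ②. Offline replicas move to `OfflineReplica`: a [StopReplicaRequest]() is sent to the replica; the replica is removed from the isr, a [LeaderAndIsr]() request (with the new isr) is sent to the leader replica, and an `UpdateMetadata` request for the partition is sent to every live broker.

   For details see [【Kafka source code】 State machines in the Controller](https://shirenchuang.blog.csdn.net/article/details/117848213)
9. `partitionStateMachine.startup()` starts the partition state machine and collects all online and offline partitions (judged by whether the Leader is online):
   1. If the partition has no `LeaderIsr`, its state is `NewPartition`.
   2. If the partition has a `LeaderIsr`, check whether the Leader is alive:
      - 2.1 if it is alive, the state is `OnlinePartition`;
      - 2.2 otherwise it is `OfflinePartition`.
   3. Try to move every partition in the `NewPartition` or `OfflinePartition` state to `OnlinePartition`, except partitions that belong to topics being deleted.

   PS: If the Controller changed while a Topic was being created and the creation did not finish, this state transition carries the creation on to completion; see [【Kafka source code】 TopicCommand: creating a Topic]().

   For more on the state machines see [【Kafka source code】 State machines in the Controller](https://shirenchuang.blog.csdn.net/article/details/117848213)
10. `initializePartitionReassignments` initialises pending reassignments. This includes reassignments submitted through `/admin/reassign_partitions`, which supersede any API reassignment in progress. [【Kafka source code】 Partition reassignment TODO..]()
11. `topicDeletionManager.tryTopicDeletion()` tries to resume Topic deletions that did not finish; see [【Kafka source code】 TopicCommand: deleting a Topic](https://shirenchuang.blog.csdn.net/article/details/117847877)
12. Read the value of `/admin/preferred_replica_election` and call `onReplicaElection()` to try to elect a replica as leader for each of the given partitions; see [【Kafka source code】 Kafka's preferred replica election]().
13. `kafkaScheduler.startup()` starts the scheduler threads for periodic tasks.
14. If `auto.leader.rebalance.enable=true` is configured, start the periodic LeaderRebalance task; the thread name is `auto-leader-rebalance-task`.
15. If `delegation.token.master.key` is configured, start the token clean-up threads.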

||||
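The rule the partition state machine applies at startup (step 9 above) is small enough to write down directly. The following is a sketch only: `PartitionStateSketch` and `initialState` are hypothetical names, and the leader/ISR record is reduced to an optional leader id.

```scala
object PartitionStateSketch {
  sealed trait PartitionState
  case object NewPartition extends PartitionState
  case object OnlinePartition extends PartitionState
  case object OfflinePartition extends PartitionState

  // leaderOpt:   the leader recorded for the partition, if any leader/ISR information exists
  // liveBrokers: ids of the brokers that are currently alive
  def initialState(leaderOpt: Option[Int], liveBrokers: Set[Int]): PartitionState =
    leaderOpt match {
      case None                                         => NewPartition
      case Some(leader) if liveBrokers.contains(leader) => OnlinePartition
      case Some(_)                                      => OfflinePartition
    }
}
```

After this classification, every `NewPartition` and `OfflinePartition` that does not belong to a topic being deleted is a candidate for the move to `OnlinePartition`.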

### 7. Controller re-election

When the `/controller` node in zk is deleted, the following method is called to run a new election:

```scala
private def processReelect(): Unit = {
  // Try to resign first
  maybeResign()
  // Run the election
  elect()
}
```

## Source code summary

PS: As shown above, once a Broker is elected Controller it loads a lot of zk data into its own memory and takes on many responsibilities. If that Broker is already heavily loaded, becoming Controller loads it even more, so it is better for a relatively idle Broker to become Controller. How can that be achieved? By designating which Broker should act as Controller.

This capability is available in the <font color=red size=5>project: [didi/Logi-KafkaManager: a one-stop platform for Apache Kafka cluster metrics monitoring and operations management](https://github.com/didi/Logi-KafkaManager)</font>.

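## Q&A

### What happens if the zk node `/controller` is deleted directly?

> The Brokers immediately hold a new Controller election.

### If the data of the `/controller` node is modified, is the Controller role transferred successfully?

Suppose the data of `/controller` is `{"version":1,"brokerid":3,"timestamp":"1623746563454"}` and I change the brokerid to 1. Does Broker-1 become the Controller directly?

> Answer: **The transfer does not succeed, and no Broker in the cluster is left in the Controller role, which is a very serious problem.**

Source code analysis:

When the data of `/controller` is modified, the code executed on the Controller is: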

```scala
private def processControllerChange(): Unit = {
  maybeResign()
}

private def maybeResign(): Unit = {
  val wasActiveBeforeChange = isActive
  zkClient.registerZNodeChangeHandlerAndCheckExistence(controllerChangeHandler)
  activeControllerId = zkClient.getControllerId.getOrElse(-1)
  if (wasActiveBeforeChange && !isActive) {
    onControllerResignation()
  }
}
```

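The code makes this clear: after the data is modified, if the new brokerid differs from the current Controller's BrokerId, `onControllerResignation` is executed and the current Controller steps down. Since `processControllerChange` only calls `maybeResign()` and never `elect()`, no Broker goes through the election to take over.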

||||
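The effect can be reproduced with a toy model of each broker's view. This is a hedged sketch, not Kafka code: `ResignSketch`, `BrokerView`, `failoverDone` and `afterDataChange` are hypothetical names, and `failoverDone` is an assumption used to stand for "this broker has run `onControllerFailover` and is actually doing the Controller's work".

```scala
object ResignSketch {
  // Hypothetical model of one broker's view of the Controller role.
  final class BrokerView(val brokerId: Int, var activeControllerId: Int, var failoverDone: Boolean) {
    def isActive: Boolean = activeControllerId == brokerId

    // Same shape as maybeResign(): re-read /controller and step down if no longer active.
    def maybeResign(zkControllerId: Option[Int]): Unit = {
      val wasActiveBeforeChange = isActive
      activeControllerId = zkControllerId.getOrElse(-1)
      // Stand-in for onControllerResignation(): drop all Controller duties.
      if (wasActiveBeforeChange && !isActive) failoverDone = false
    }
  }

  // Every broker reacts to the data change with maybeResign() only; elect() is never called.
  def afterDataChange(brokerIds: Seq[Int], oldController: Int, newData: Int): Seq[BrokerView] = {
    val views = brokerIds.map(id => new BrokerView(id, oldController, id == oldController))
    views.foreach(_.maybeResign(Some(newData)))
    views
  }
}
```

In this model, after the data changes from broker 3 to broker 1, no broker is left with `failoverDone == true`: the old Controller has resigned and nobody has run the failover logic, which matches the answer above.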

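### What is /log_dir_event_notification for?

> When a `log.dir` log directory becomes inaccessible, the disk is damaged, or some other fault makes reads and writes fail, a failure notification event is triggered.
> The flow is:
> 1. The Broker detects the `log.dir` failure, does some clean-up, and creates a persistent sequential node `/log_dir_event_notification/log_dir_event_{sequence}` in zk whose data is the BrokerID, for example:
> `/log_dir_event_notification/log_dir_event_0000000003`
> 2. The Controller notices the zk change, reads the BrokerID from `/log_dir_event_notification/log_dir_event_{sequence}`, and runs a replica state transition to `OnlineReplica` for all replicas on that Broker.
>    2.1 It sends a `LeaderAndIsrRequest` to all brokers so that they check the state of their replicas; a replica whose logDir is offline answers with a KAFKA_STORAGE_ERROR.
>    2.2 Afterwards the node is deleted.

### What is /isr_change_notification for?

> When the isr changes, data is written under this node; the Controller watches it and sends out notifications.

### What is /admin/preferred_replica_election for?

> Preferred replica election; for details see [Kafka's preferred replica election flow]().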

## 思考

|

||||

### 有什么办法实现Controller的优先选举?

|

||||

>既然我们知道了Controller承担了这么多的任务,又是Broker又是Controller,身兼数职压力难免会比较大;

|

||||

>所以我们很希望能够有一个功能能够知道Broker为Controller角色; 这样就可以指定压力比较小的Broker来承担Controller的角色了;

|

||||

|

||||

**那么,如何实现呢?**

|

||||

>Kafka原生目前并不支持这个功能,所以我们想要实现这个功能,就得要改源码了;

|

||||

>知道了原理, 改源码实现这个功能就很简单了; 有很多种实现方式;

|

||||

|

||||

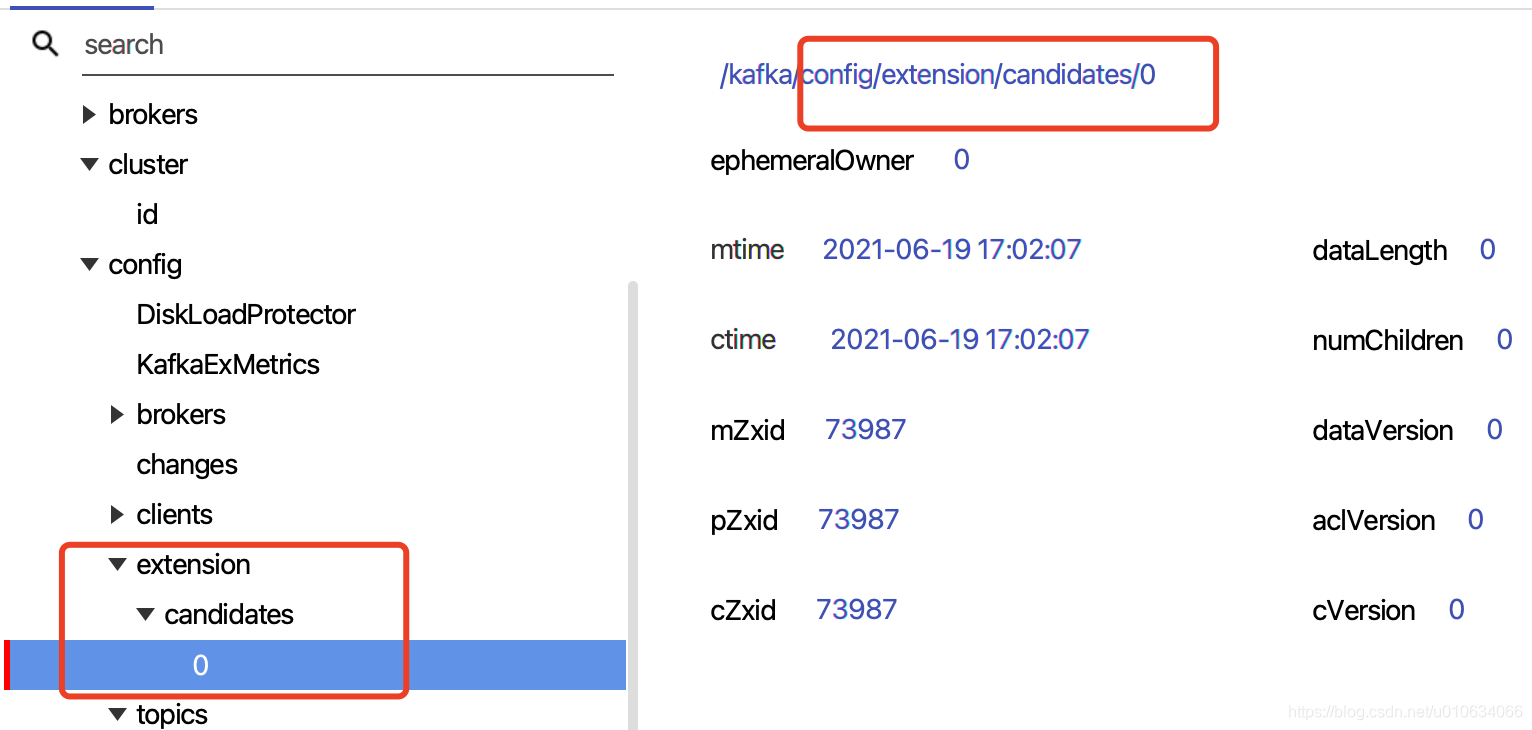

比如说: 在zk里面设置一个节点专门用来存放候选节点; 竞选Controller的时候优先从这里面选择;

|

||||

然后Broker们启动的时候,可以判断一下自己是不是候选节点, 如果不是的话,那就让它睡个两三秒; (让候选者99米再跑)

|

||||

那么大概率的情况下,候选者肯定就会当选了;

|

||||

26

docs/zh/Kafka分享/Kafka Controller /Controller滴滴特性解读.md

Normal file

@@ -0,0 +1,26 @@

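## Preferred Controller election

> In native Kafka, the Controller is elected by every Broker racing to write the `/controller` node in zk.
> Any Broker may end up being elected Controller.
> But besides being an ordinary Broker, the Controller also carries the tasks of the Controller role.
> For details see [【Kafka source code】 Controller startup and election flow]()
> When a Broker that is already heavily loaded is elected Controller, its load gets even heavier.
> So we would like the Controller role to land on lightly loaded Brokers, or to dedicate a machine to the Controller role.
> To meet this need, we modified the engine internally to support preferred Controller election.

## How the change works

> A new node `/config/extension/candidates/` was added under `/config`.
> The ids of all Brokers that should be preferred in the election are stored under this node.
> For example:
> `/config/extension/candidates/0`

When a Controller re-election happens, every Broker still races to write the `/controller` node, but it first reads all children of `/config/extension/candidates/`. Suppose one child, BrokerID=0, is found. Each Broker checks whether that id equals its own BrokerID; if not, it sleeps for 3 seconds. That way the Broker with BrokerId=0 is elected Controller with high probability. If that Broker is down, one of the other Brokers may be elected instead.

<font color=red>PS: several candidate Controllers can be configured under `/config/extension/candidates/`.</font>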

||||
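The head-start rule described above can be sketched as a pure function. This is not the actual change made to the engine, only an illustration: `CandidateDelaySketch`, `electionDelayMs` and `penaltyMs` are hypothetical names, and the behaviour when no candidate is configured (no delay for anyone) is an assumption.

```scala
object CandidateDelaySketch {
  // candidates: broker ids found under the candidates node
  // brokerId:   the id of the broker that is about to take part in the election
  // Returns how long this broker should wait before trying to create /controller.
  def electionDelayMs(candidates: Set[Int], brokerId: Int, penaltyMs: Long = 3000L): Long =
    if (candidates.isEmpty || candidates.contains(brokerId)) 0L // candidates start immediately
    else penaltyMs                                              // everyone else gives them a head start
}
```

A broker would call this before racing for the `/controller` node and sleep for the returned time. Because the delay only shifts probabilities, a non-candidate can still win when all candidates are down.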

## Operations on the KM management platform

@@ -0,0 +1,614 @@

|

||||

|

||||

|

||||

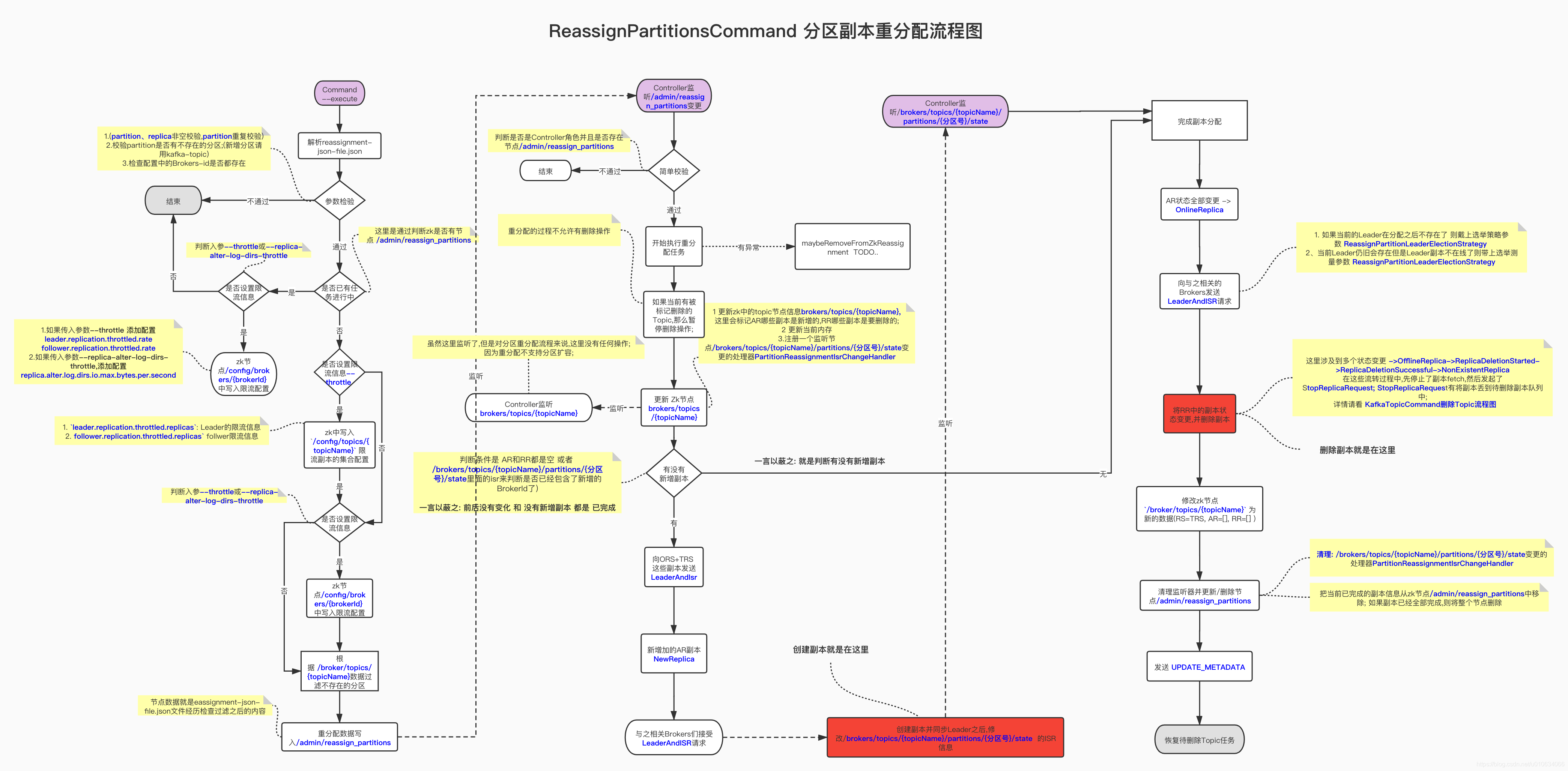

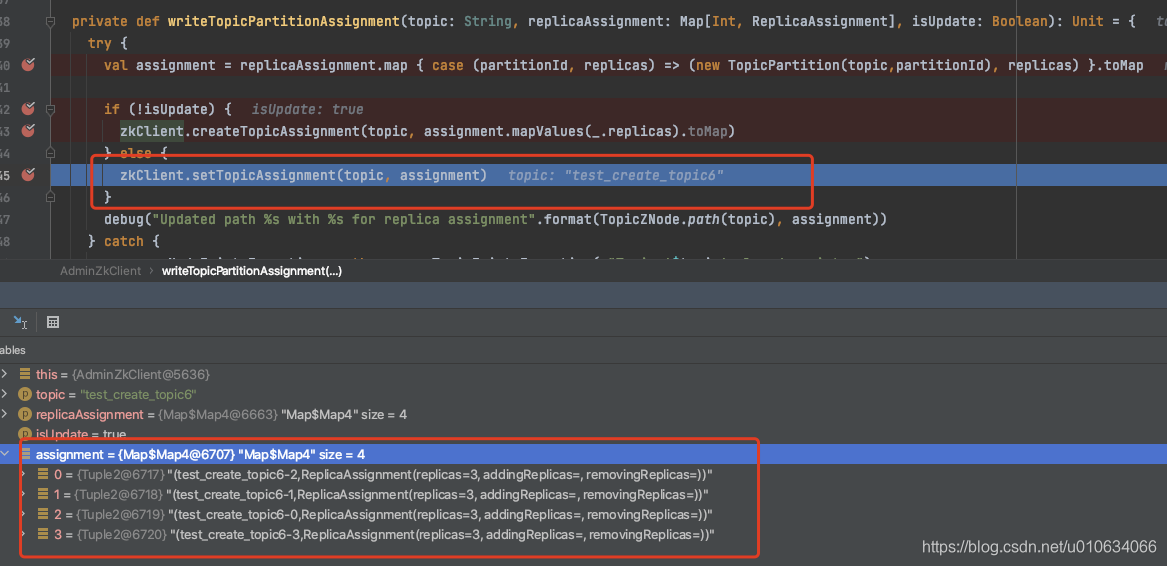

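## 1. Using the script

> See [【Kafka operations】 Replica scaling, data migration, and partition reassignment]()

## 2. Source code analysis

<font color=red>If reading the source code is too dry, jump straight to the source code summary and the Q&A section.</font>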

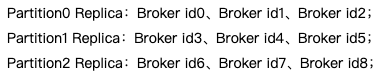

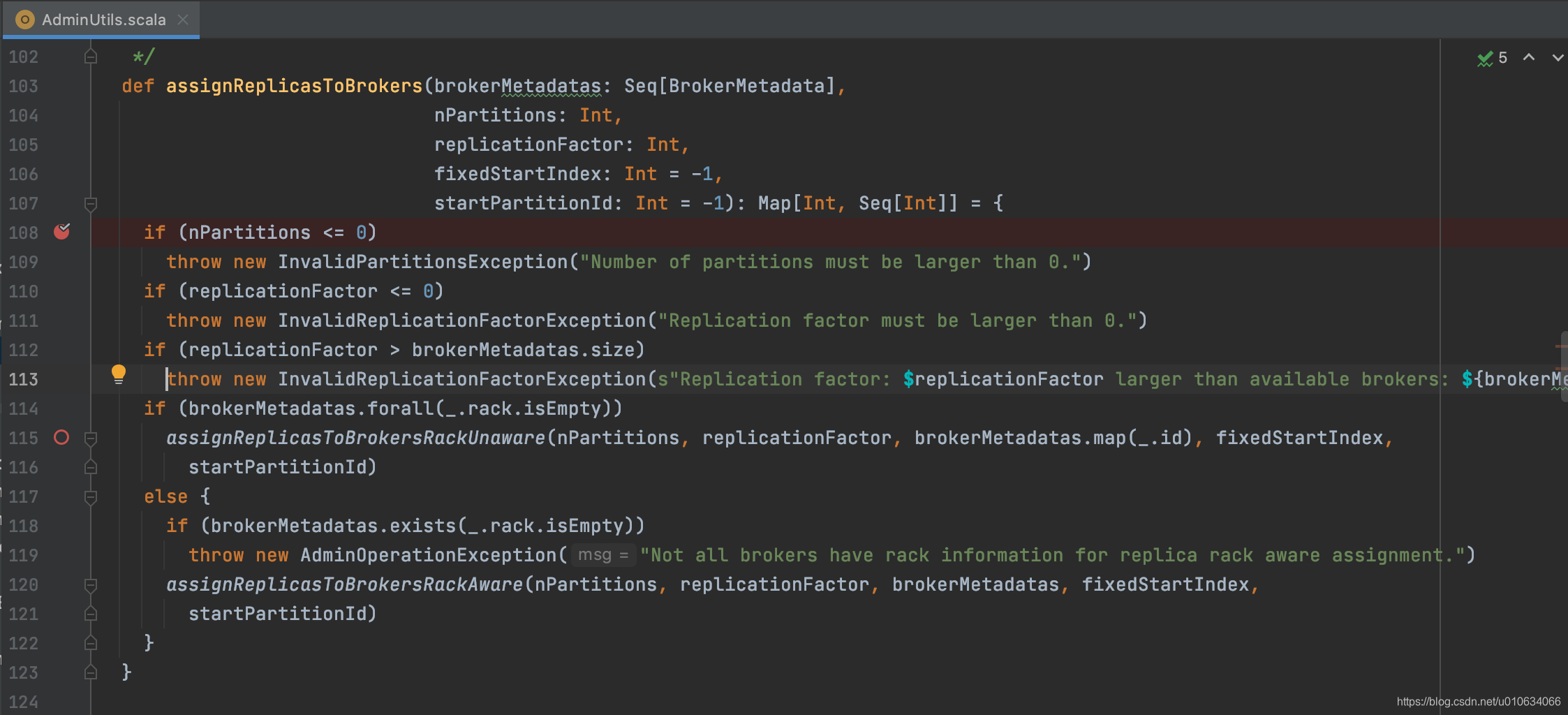

### 2.1`--generate ` 生成分配策略分析

|

||||

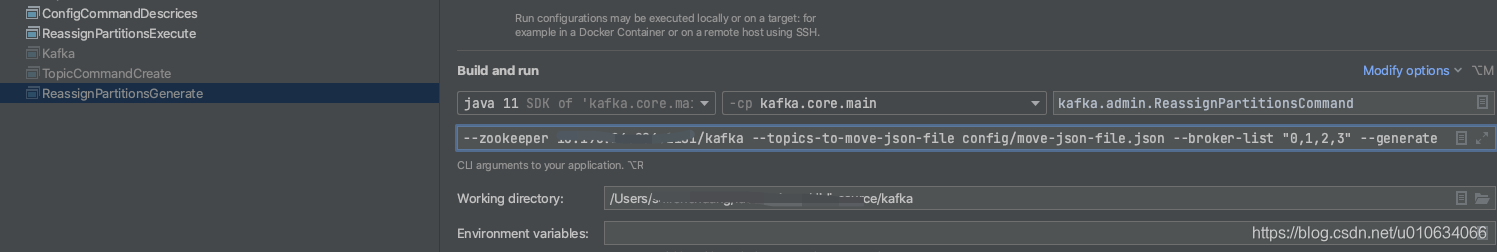

配置启动类`--zookeeper xxxx:2181 --topics-to-move-json-file config/move-json-file.json --broker-list "0,1,2,3" --generate`

|

||||

|

||||

配置`move-json-file.json`文件

|

||||

|

||||

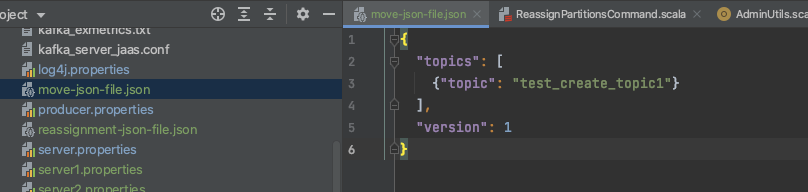

启动,调试:

|

||||

`ReassignPartitionsCommand.generateAssignment`

|

||||

|

||||

1. 获取入参的数据

|

||||

2. 校验`--broker-list`传入的BrokerId是否有重复的,重复就报错

|

||||

3. 开始进行分配

|

||||

|

||||

`ReassignPartitionsCommand.generateAssignment`

|

||||

```scala

|

||||

def generateAssignment(zkClient: KafkaZkClient, brokerListToReassign: Seq[Int], topicsToMoveJsonString: String, disableRackAware: Boolean): (Map[TopicPartition, Seq[Int]], Map[TopicPartition, Seq[Int]]) = {

|

||||

//解析出游哪些Topic

|

||||

val topicsToReassign = parseTopicsData(topicsToMoveJsonString)

|

||||

//检查是否有重复的topic

|

||||

val duplicateTopicsToReassign = CoreUtils.duplicates(topicsToReassign)

|

||||

if (duplicateTopicsToReassign.nonEmpty)

|

||||

throw new AdminCommandFailedException("List of topics to reassign contains duplicate entries: %s".format(duplicateTopicsToReassign.mkString(",")))

|

||||

//获取topic当前的副本分配情况 /brokers/topics/{topicName}

|

||||

val currentAssignment = zkClient.getReplicaAssignmentForTopics(topicsToReassign.toSet)

|

||||

|

||||

val groupedByTopic = currentAssignment.groupBy { case (tp, _) => tp.topic }

|

||||

//机架感知模式

|

||||

val rackAwareMode = if (disableRackAware) RackAwareMode.Disabled else RackAwareMode.Enforced

|

||||

val adminZkClient = new AdminZkClient(zkClient)

|

||||

val brokerMetadatas = adminZkClient.getBrokerMetadatas(rackAwareMode, Some(brokerListToReassign))

|

||||

|

||||

val partitionsToBeReassigned = mutable.Map[TopicPartition, Seq[Int]]()

|

||||

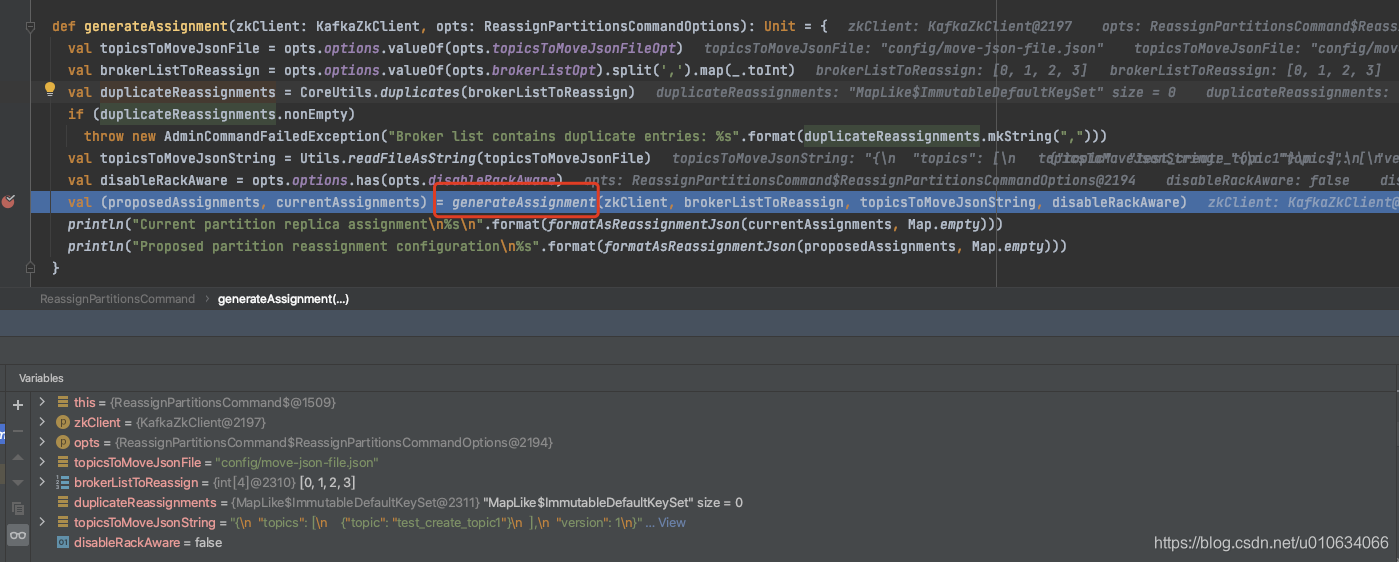

groupedByTopic.foreach { case (topic, assignment) =>

|

||||

val (_, replicas) = assignment.head

|

||||

val assignedReplicas = AdminUtils.assignReplicasToBrokers(brokerMetadatas, assignment.size, replicas.size)

|

||||

partitionsToBeReassigned ++= assignedReplicas.map { case (partition, replicas) =>

|

||||

new TopicPartition(topic, partition) -> replicas

|

||||

}

|

||||

}

|

||||

(partitionsToBeReassigned, currentAssignment)

|

||||

}

|

||||

```

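1. Check for duplicate topics; throw an exception if there are any.
2. Read the topics' current replica assignment from the zk node `/brokers/topics/{topicName}`.
3. Read all online brokers from the zk node `/brokers/ids` and intersect them with the list passed via `--broker-list`.
4. Fetch the Brokers' metadata. If the rack-aware mode is `RackAwareMode.Enforced` (the default) and the brokers in the intersection from step 3 neither all have rack information nor all lack it, an exception is thrown: assigning partitions by rack requires that either every broker has rack information or none has. What to do in that case? Set `RackAwareMode` to `RackAwareMode.Disabled` by adding the `--disable-rack-aware` option.
5. Call `AdminUtils.assignReplicasToBrokers` to compute the assignment.

This was analysed in [【Kafka source code】 How partitions and replicas are assigned when a Topic is created]() and is not repeated here: `AdminUtils.assignReplicasToBrokers(metadata of the brokers to assign to, partition count, replica count)`.

Note that the replica count is taken from `assignment.head.replicas.size`, i.e. the replica count of the first partition. Normally all partitions have the same number of replicas, but that is not guaranteed; they may have been set differently.

<font color=red>Given this, could we call this interface directly to implement other features, **such as increasing or decreasing the replica count**?</font>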

||||
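Steps 3 and 4 above (intersecting the live brokers with `--broker-list`, then the all-or-nothing rack check) can be sketched as follows. The names `RackCheckSketch`, `BrokerInfo`, `selectBrokers` and `validate` are hypothetical and the error text is made up for the example; Kafka's own implementation lives behind `getBrokerMetadatas` and is not reproduced here.

```scala
object RackCheckSketch {
  // Hypothetical, simplified broker metadata: id plus optional rack.
  final case class BrokerInfo(id: Int, rack: Option[String])

  // Step 3: keep only the live brokers that were also named in --broker-list.
  def selectBrokers(live: Seq[BrokerInfo], brokerList: Set[Int]): Seq[BrokerInfo] =
    live.filter(b => brokerList.contains(b.id))

  // Step 4: with enforced rack awareness, rack information must be all-or-nothing.
  def validate(brokers: Seq[BrokerInfo], enforceRackAware: Boolean): Either[String, Seq[BrokerInfo]] = {
    val withRack = brokers.count(_.rack.isDefined)
    if (enforceRackAware && withRack != 0 && withRack != brokers.size)
      Left("Not all brokers have rack information; consider --disable-rack-aware")
    else Right(brokers)
  }
}
```

With brokers 0 and 2 on racks and broker 1 without one, the enforced check fails for the full set but passes once the list is narrowed to brokers 0 and 2, or once rack awareness is disabled.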

### 2.2 `--execute`: the execution phase

> Run it with the script:
> `--zookeeper xxx --reassignment-json-file config/reassignment-json-file.json --execute --throttle 10000`

`ReassignPartitionsCommand.executeAssignment`

```scala
def executeAssignment(zkClient: KafkaZkClient, adminClientOpt: Option[Admin], reassignmentJsonString: String, throttle: Throttle, timeoutMs: Long = 10000L): Unit = {
  // Validate and parse the json file
  val (partitionAssignment, replicaAssignment) = parseAndValidate(zkClient, reassignmentJsonString)
  val adminZkClient = new AdminZkClient(zkClient)
  val reassignPartitionsCommand = new ReassignPartitionsCommand(zkClient, adminClientOpt, partitionAssignment.toMap, replicaAssignment, adminZkClient)

  // If a reassignment is already in progress, only try to apply the throttle
  if (zkClient.reassignPartitionsInProgress()) {
    reassignPartitionsCommand.maybeLimit(throttle)
  } else {
    // Print the current replica assignment so that it can be used for a rollback
    printCurrentAssignment(zkClient, partitionAssignment.map(_._1.topic))
    if (throttle.interBrokerLimit >= 0 || throttle.replicaAlterLogDirsLimit >= 0)
      println(String.format("Warning: You must run Verify periodically, until the reassignment completes, to ensure the throttle is removed. You can also alter the throttle by rerunning the Execute command passing a new value."))
    // Start the reassignment
    if (reassignPartitionsCommand.reassignPartitions(throttle, timeoutMs)) {
      println("Successfully started reassignment of partitions.")
    } else
      println("Failed to reassign partitions %s".format(partitionAssignment))
  }
}
```

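1. Parse the json file and validate it:
   1. non-empty checks for partitions and replicas, and a duplicate check for partitions;
   2. check that every `partition` refers to an existing partition (to add partitions, use `kafka-topic`);
   3. check that every Broker id in the configuration exists.
2. If a reassignment is already in progress (the node `/admin/reassign_partitions` exists), check whether the throttle needs to change: if `--throttle` or `--replica-alter-log-dirs-throttle` is given, set the throttle values, and do not continue to the next step.
3. If no reassignment is in progress (the node `/admin/reassign_partitions` does not exist), start the replica reassignment.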

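#### 2.2.1 A task already exists: try to throttle

If the node `/admin/reassign_partitions` exists in zk, a task is already running, so the current operation does not proceed. If either of the options

`--throttle:`

`--replica-alter-log-dirs-throttle:`

is given, the throttle is applied.

> This limits the throttle for the replicas currently being moved. Note that the command can be used to change the throttle, but if some brokers have already finished rebalancing it may not change all of the limits that were originally set. The limit therefore has to be removed afterwards with `--verify`.

`maybeLimit`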

```scala
def maybeLimit(throttle: Throttle): Unit = {
  if (throttle.interBrokerLimit >= 0 || throttle.replicaAlterLogDirsLimit >= 0) {
    // Brokers in the current assignment
    val existingBrokers = existingAssignment().values.flatten.toSeq
    // Brokers in the proposed assignment
    val proposedBrokers = proposedPartitionAssignment.values.flatten.toSeq ++ proposedReplicaAssignment.keys.toSeq.map(_.brokerId())
    // Union of the two, de-duplicated
    val brokers = (existingBrokers ++ proposedBrokers).distinct

    // For every broker involved, write the throttle config to the zk node /config/brokers/{brokerId}
    for (id <- brokers) {
      // Fetch the broker's config from /config/brokers/{brokerId}
      val configs = adminZkClient.fetchEntityConfig(ConfigType.Broker, id.toString)
      if (throttle.interBrokerLimit >= 0) {
        configs.put(DynamicConfig.Broker.LeaderReplicationThrottledRateProp, throttle.interBrokerLimit.toString)
        configs.put(DynamicConfig.Broker.FollowerReplicationThrottledRateProp, throttle.interBrokerLimit.toString)
      }
      if (throttle.replicaAlterLogDirsLimit >= 0)
        configs.put(DynamicConfig.Broker.ReplicaAlterLogDirsIoMaxBytesPerSecondProp, throttle.replicaAlterLogDirsLimit.toString)

      adminZkClient.changeBrokerConfig(Seq(id), configs)
    }
  }
}
```

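The `/config/brokers/{brokerId}` node holds the Broker's dynamic configuration, which takes effect immediately without restarting the Broker.

1. If `--throttle:` is given, read the Brokers' configuration from the zk nodes `/config/brokers/{BrokerId}`, add the following two settings, and write the result back to `/config/brokers/{BrokerId}`:
   - `leader.replication.throttled.rate`: limits the rate at which the leader replica serves FETCH requests
   - `follower.replication.throttled.rate`: limits the rate at which follower replicas send FETCH requests
2. If `--replica-alter-log-dirs-throttle:` is given, the following setting is written to the node as well:
   - `replica.alter.log.dirs.io.max.bytes.per.second`: limits the traffic of data migration between directories inside a broker, capping the bandwidth used to copy data from one directory to another

For example, the data after the write:

```json
{"version":1,"config":{"leader.replication.throttled.rate":"1","follower.replication.throttled.rate":"1"}}
```

**Note: the throttle configuration is written for every Broker involved in the reassignment.**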

||||
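The two ideas in `maybeLimit` (which brokers are touched, and how the rate settings are merged into the existing config) can be sketched without zk. `ThrottleConfigSketch`, `affectedBrokers` and `withThrottle` are hypothetical names; assignments are simplified to maps from partition number to replica ids, and the result is sorted only to make the output deterministic.

```scala
object ThrottleConfigSketch {
  // Brokers that take part in the move: current plus proposed replicas, de-duplicated.
  def affectedBrokers(existing: Map[Int, Seq[Int]], proposed: Map[Int, Seq[Int]]): Seq[Int] =
    (existing.values.flatten.toSeq ++ proposed.values.flatten.toSeq).distinct.sorted

  // Add the two rate properties on top of whatever the broker already has configured.
  // A negative limit means "no throttle requested" and leaves the config untouched.
  def withThrottle(current: Map[String, String], bytesPerSec: Long): Map[String, String] =
    if (bytesPerSec < 0) current
    else current ++ Map(
      "leader.replication.throttled.rate" -> bytesPerSec.toString,
      "follower.replication.throttled.rate" -> bytesPerSec.toString)
}
```

Because the new keys are merged into the existing map, unrelated dynamic settings of a broker survive the update.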

#### 2.2.2 No task is running: start the replica reassignment

`ReassignPartitionsCommand.reassignPartitions`

```scala
def reassignPartitions(throttle: Throttle = NoThrottle, timeoutMs: Long = 10000L): Boolean = {
  // Write the throttle settings
  maybeThrottle(throttle)
  try {
    // Verify that the partitions exist
    val validPartitions = proposedPartitionAssignment.groupBy(_._1.topic())
      .flatMap { case (topic, topicPartitionReplicas) =>
        validatePartition(zkClient, topic, topicPartitionReplicas)
      }
    if (validPartitions.isEmpty) false
    else {
      if (proposedReplicaAssignment.nonEmpty && adminClientOpt.isEmpty)
        throw new AdminCommandFailedException("bootstrap-server needs to be provided in order to reassign replica to the specified log directory")
      val startTimeMs = System.currentTimeMillis()

      // Send AlterReplicaLogDirsRequest to allow broker to create replica in the right log dir later if the replica has not been created yet.
      if (proposedReplicaAssignment.nonEmpty)
        alterReplicaLogDirsIgnoreReplicaNotAvailable(proposedReplicaAssignment, adminClientOpt.get, timeoutMs)

      // Create reassignment znode so that controller will send LeaderAndIsrRequest to create replica in the broker
      zkClient.createPartitionReassignment(validPartitions.map({case (key, value) => (new TopicPartition(key.topic, key.partition), value)}).toMap)

      // Send AlterReplicaLogDirsRequest again to make sure broker will start to move replica to the specified log directory.
      // It may take some time for controller to create replica in the broker. Retry if the replica has not been created.
      var remainingTimeMs = startTimeMs + timeoutMs - System.currentTimeMillis()
      val replicasAssignedToFutureDir = mutable.Set.empty[TopicPartitionReplica]
      while (remainingTimeMs > 0 && replicasAssignedToFutureDir.size < proposedReplicaAssignment.size) {
        replicasAssignedToFutureDir ++= alterReplicaLogDirsIgnoreReplicaNotAvailable(
          proposedReplicaAssignment.filter { case (replica, _) => !replicasAssignedToFutureDir.contains(replica) },
          adminClientOpt.get, remainingTimeMs)
        Thread.sleep(100)
        remainingTimeMs = startTimeMs + timeoutMs - System.currentTimeMillis()
      }
      replicasAssignedToFutureDir.size == proposedReplicaAssignment.size
    }
  } catch {
    case _: NodeExistsException =>
      val partitionsBeingReassigned = zkClient.getPartitionReassignment()
      throw new AdminCommandFailedException("Partition reassignment currently in " +
        "progress for %s. Aborting operation".format(partitionsBeingReassigned))
  }
}
```

1. `maybeThrottle(throttle)` sets the throttle configuration used while replicas are moved; this method is only used when the task is initialised.

```scala
private def maybeThrottle(throttle: Throttle): Unit = {
  if (throttle.interBrokerLimit >= 0)
    assignThrottledReplicas(existingAssignment(), proposedPartitionAssignment, adminZkClient)
  maybeLimit(throttle)
  if (throttle.interBrokerLimit >= 0 || throttle.replicaAlterLogDirsLimit >= 0)
    throttle.postUpdateAction()
  if (throttle.interBrokerLimit >= 0)
    println(s"The inter-broker throttle limit was set to ${throttle.interBrokerLimit} B/s")
  if (throttle.replicaAlterLogDirsLimit >= 0)
    println(s"The replica-alter-dir throttle limit was set to ${throttle.replicaAlterLogDirsLimit} B/s")
}
```

|

||||

|

||||

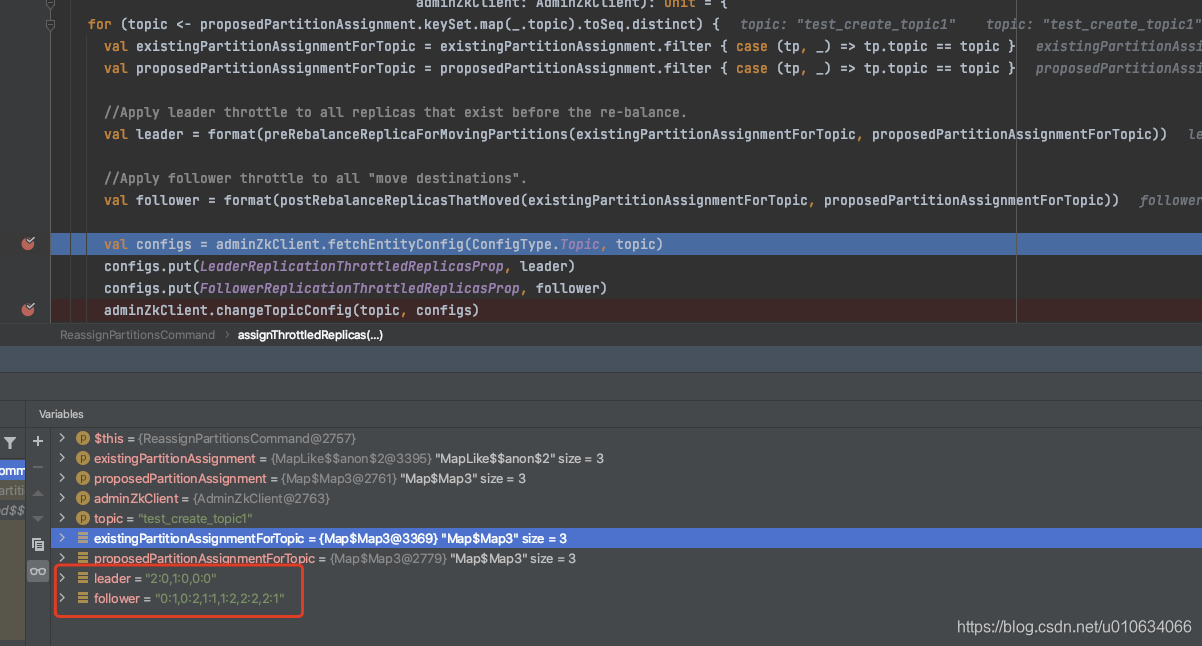

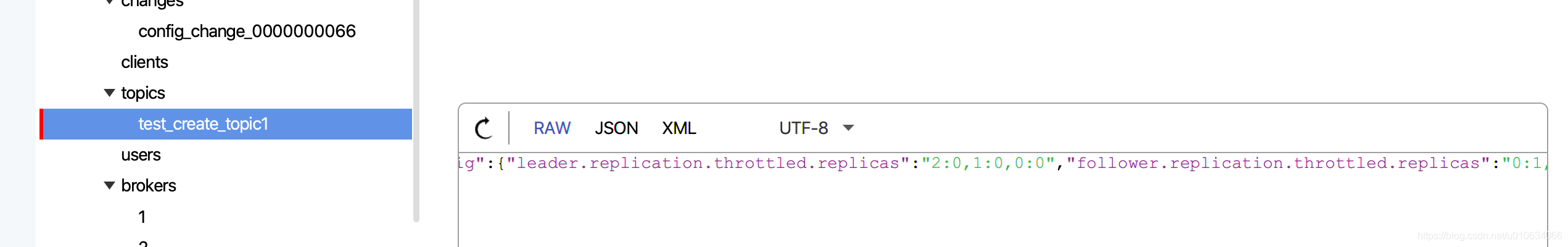

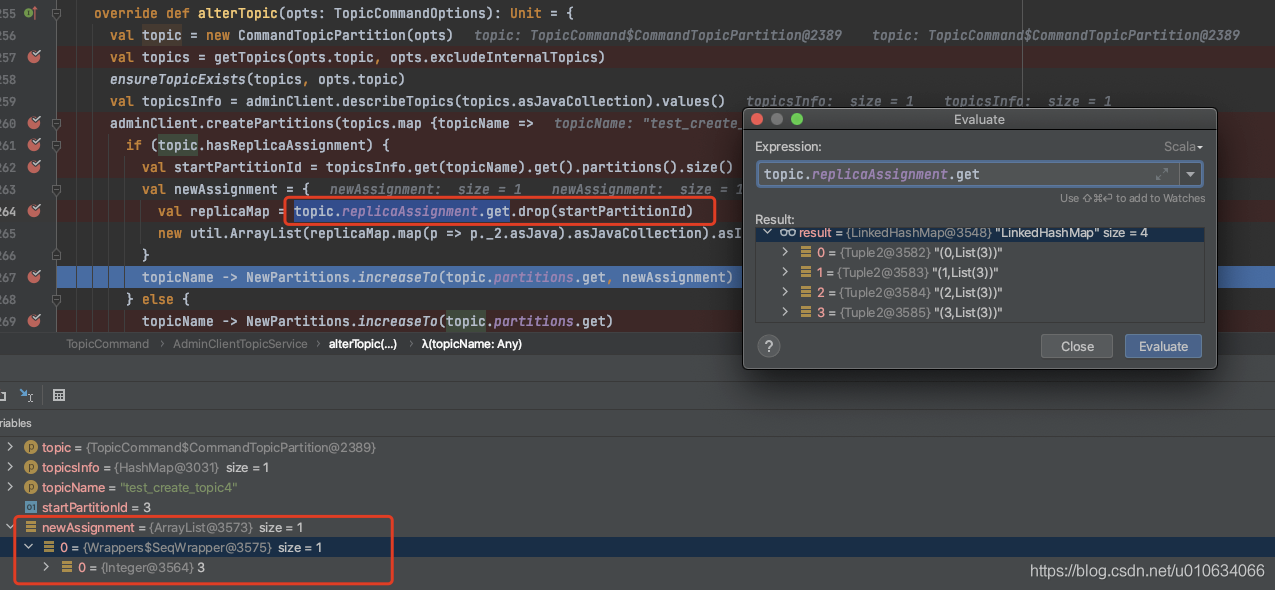

1.1 将一些topic的限流配置写入到节点`/config/topics/{topicName}`中

|

||||

|

||||

将计算得到的leader、follower 值写入到`/config/topics/{topicName}`中

|

||||

leader: 找到 TopicPartition中有新增的副本的 那个分区;数据= 分区号:副本号,分区号:副本号

|

||||

follower: 遍历 预期 TopicPartition,副本= 预期副本-现有副本;数据= 分区号:副本号,分区号:副本号

|

||||

`leader.replication.throttled.replicas`: leader

|

||||

`follower.replication.throttled.replicas`: follower

|

||||

|

||||

1.2. 执行 《**2.2.1 已有任务,尝试限流**》流程

|

||||

|

||||

2. 从zk中获取`/broker/topics/{topicName}`数据来验证给定的分区是否存在,如果分区不存在则忽略此分区的配置,继续流程

|

||||

3. 如果尚未创建副本,则发送 `AlterReplicaLogDirsRequest` 以允许代理稍后在正确的日志目录中创建副本。这个跟 `log_dirs` 有关 TODO....

|

||||

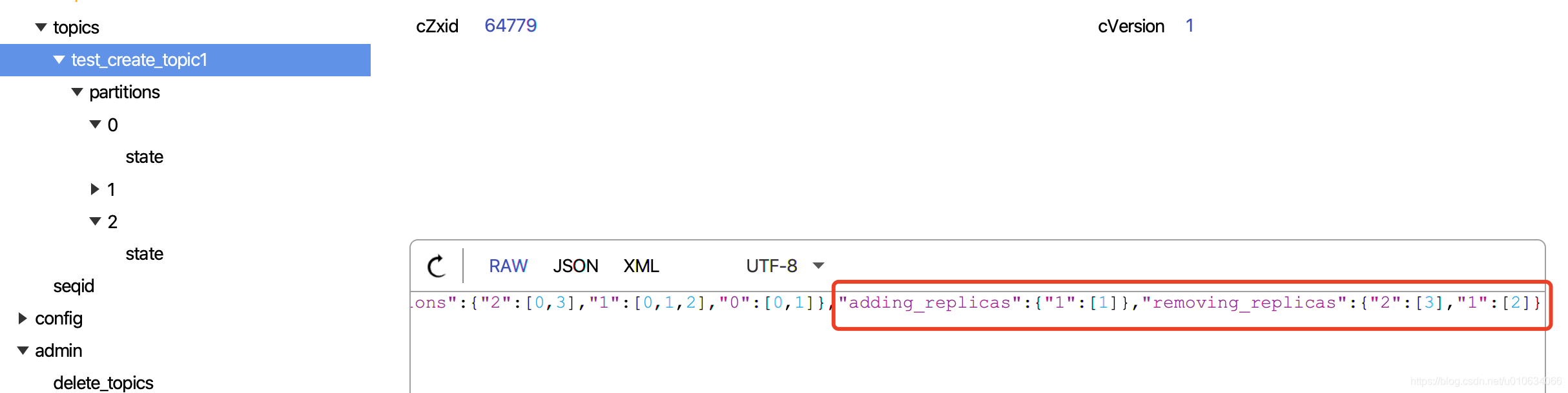

4. 将重分配的数据写入到zk的节点`/admin/reassign_partitions`中;数据内容如:

|

||||

```

|

||||

{"version":1,"partitions":[{"topic":"test_create_topic1","partition":0,"replicas":[0,1,2,3]},{"topic":"test_create_topic1","partition":1,"replicas":[1,2,0,3]},{"topic":"test_create_topic1","partition":2,"replicas":[2,1,0,3]}]}

|

||||

```

|

||||

5. 再次发送 `AlterReplicaLogDirsRequest `以确保代理将开始将副本移动到指定的日志目录。控制器在代理中创建副本可能需要一些时间。如果尚未创建副本,请重试。

|

||||

1. 像Broker发送`alterReplicaLogDirs`请求

|

||||

|

||||

|

||||

|

||||

|

||||
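The leader and follower values from step 1.1 can be computed as in the following sketch. It is an illustration for a single topic, not the tool's code: `ThrottledReplicasSketch`, `Assignment`, `leaderThrottled` and `followerThrottled` are hypothetical names, and the assumption is that the leader side lists the replicas that existed before the move while the follower side lists the move destinations.

```scala
object ThrottledReplicasSketch {
  // Keys are partition numbers of one topic; values are replica (broker) ids.
  type Assignment = Map[Int, Seq[Int]]

  // Render as "partition:replica,partition:replica", ordered by partition.
  private def format(a: Assignment): String =
    a.toSeq.sortBy(_._1).flatMap { case (p, rs) => rs.map(r => s"$p:$r") }.mkString(",")

  // Leader side: every existing replica of a partition that gains at least one new replica.
  def leaderThrottled(existing: Assignment, proposed: Assignment): String =
    format(existing.filter { case (p, rs) => proposed.get(p).exists(_.diff(rs).nonEmpty) })

  // Follower side: for each proposed partition, the replicas that are new (proposed minus existing).
  def followerThrottled(existing: Assignment, proposed: Assignment): String =
    format(proposed.map { case (p, rs) => p -> rs.diff(existing.getOrElse(p, Seq.empty)) })
}
```

For a topic whose partition 0 moves from replicas `[0,1]` to `[0,1,3]` while partition 1 stays at `[1,2]`, the leader value is `0:0,0:1` and the follower value is `0:3`; partition 1 is not throttled at all.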

#### 2.2.3 The Controller watches the `/admin/reassign_partitions` node for changes

`KafkaController.processZkPartitionReassignment`

```scala
private def processZkPartitionReassignment(): Set[TopicPartition] = {
  // We need to register the watcher if the path doesn't exist in order to detect future
  // reassignments and we get the `path exists` check for free
  if (isActive && zkClient.registerZNodeChangeHandlerAndCheckExistence(partitionReassignmentHandler)) {
    val reassignmentResults = mutable.Map.empty[TopicPartition, ApiError]
    val partitionsToReassign = mutable.Map.empty[TopicPartition, ReplicaAssignment]

    zkClient.getPartitionReassignment().foreach { case (tp, targetReplicas) =>
      maybeBuildReassignment(tp, Some(targetReplicas)) match {
        case Some(context) => partitionsToReassign.put(tp, context)
        case None => reassignmentResults.put(tp, new ApiError(Errors.NO_REASSIGNMENT_IN_PROGRESS))
      }
    }

    reassignmentResults ++= maybeTriggerPartitionReassignment(partitionsToReassign)
    val (partitionsReassigned, partitionsFailed) = reassignmentResults.partition(_._2.error == Errors.NONE)
    if (partitionsFailed.nonEmpty) {
      warn(s"Failed reassignment through zk with the following errors: $partitionsFailed")
      maybeRemoveFromZkReassignment((tp, _) => partitionsFailed.contains(tp))
    }
    partitionsReassigned.keySet
  } else {
    Set.empty
  }
}
```

1. Check that this broker is the Controller and that the node `/admin/reassign_partitions` exists.
2. `maybeTriggerPartitionReassignment` triggers the reassignment; if a topic has already been marked for deletion, the flow for that topic stops.
3. `maybeRemoveFromZkReassignment` removes the partitions that failed from zk (by overwriting the node's data).

##### onPartitionReassignment

`KafkaController.onPartitionReassignment`

```scala

|

||||

private def onPartitionReassignment(topicPartition: TopicPartition, reassignment: ReplicaAssignment): Unit = {

|

||||

// Pause any ongoing deletion of this Topic

|

||||

topicDeletionManager.markTopicIneligibleForDeletion(Set(topicPartition.topic), reason = "topic reassignment in progress")

|

||||

// Update the current assignment

|

||||

updateCurrentReassignment(topicPartition, reassignment)

|

||||

|

||||

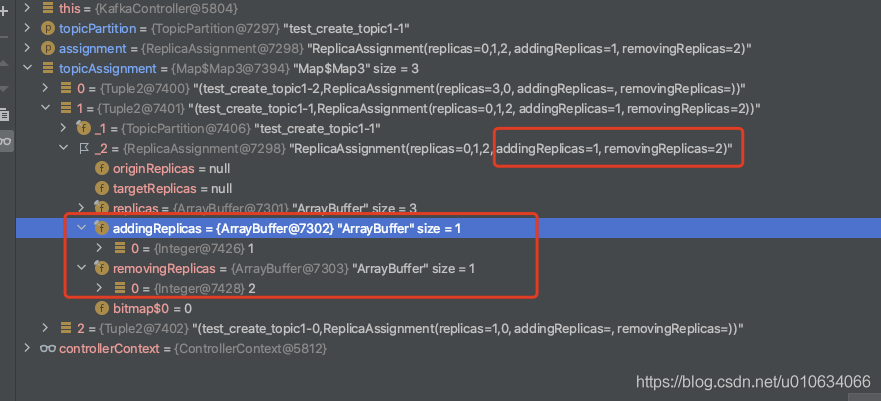

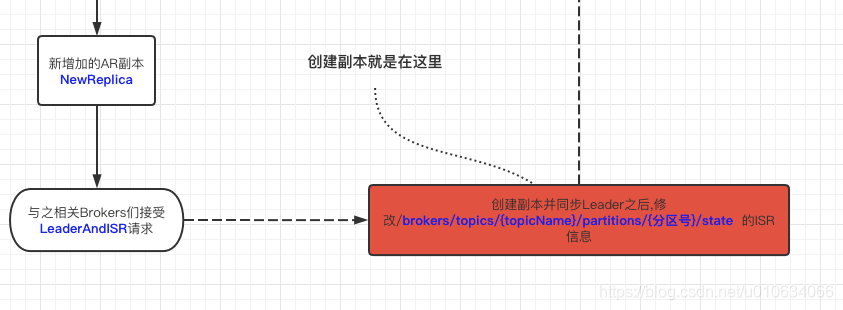

val addingReplicas = reassignment.addingReplicas

|

||||

val removingReplicas = reassignment.removingReplicas

|

||||

|

||||

if (!isReassignmentComplete(topicPartition, reassignment)) {

|

||||

// A1. Send LeaderAndIsr request to every replica in ORS + TRS (with the new RS, AR and RR).

|

||||

updateLeaderEpochAndSendRequest(topicPartition, reassignment)

|

||||